Oracle 23ai vector search for RAG applications

Boyd Timmerman

Boyd Timmerman

In the previous article (here) I mentioned the use of similarity search between vectors to compare user input with stored embeddings. But what are vectors, embeddings and similarity search? I hope to give you an answer in this article.

Vectors

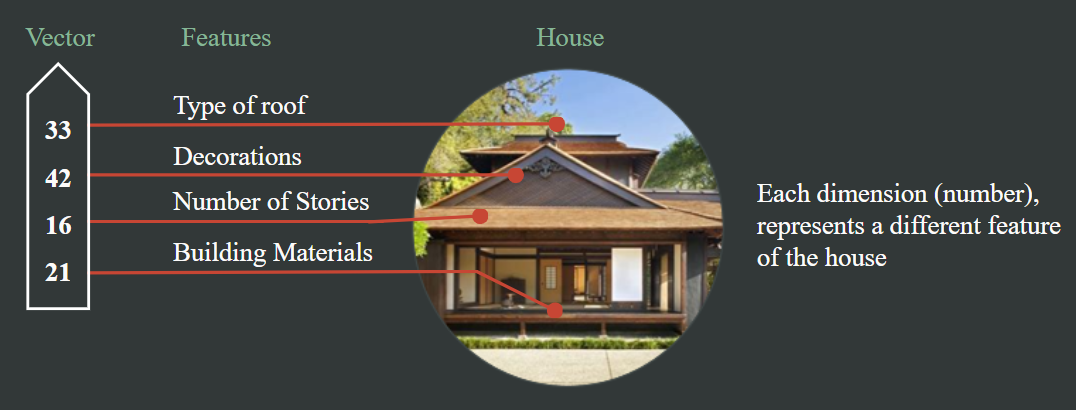

With the Oracle 23ai database a new datatype was introduced; the vector. A vector is a sequence of numbers, called dimensions, used to capture important “features” of the data. In the context of machine learning and natural language processing, data (such as text, images, or any other high-dimensional inputs) is often converted into vector representations. These vectors capture semantic meaning and relationships, allowing for more nuanced data operations than traditional text searches.

Vector embedding refers to the process of converting data, such as text or images, into a numerical vector format that captures the essential features and semantic meaning of the data. In Oracle 23ai, vector embeddings are used to represent high-dimensional data in a way that facilitates operations like similarity search and machine learning tasks.

These embeddings are created by transforming the original data into a fixed-size vector of numbers, where each number represents a specific dimension or feature of the data. This transformation allows for efficient comparison and analysis of data by leveraging mathematical operations on vectors.

Converting text to a vector can be easily done in the 23ai database. First we load a ONNX-model into the database. An ONNX model refers to a model that is compatible with the Open Neural Network Exchange (ONNX) format. ONNX is an open-source format designed to facilitate the interoperability of machine learning models across different frameworks. It allows developers to train a model in one framework, such as PyTorch, and then export it to the ONNX format for use in the Oracle DB. Loading such a model into the database can be done through the DBMS_VECTOR package. A example is shown below, here you can see that from the ‘staging‘ directory the model ALL_MINILM_L12_V2 is loaded.

begin

dbms_vector.load_onnx_model(

directory => 'staging'

, file_name => 'ALL_MINILM_L12_V2.onnx'

, model_name => 'ALL_MINILM_L12_V2'

, metadata => json(

'{

"function": "embedding",

"embeddingOutput": "embedding",

"input": {

"input":["DATA"]

}

}')

);

end;

This model is available in the OCI and can be used to convert text into vectors. The converted vector will consist out of 384 dimensions. So the vector-column were the vector is stored should capable of storing this kind of dimensions. When creating a vector-column you can either specify the dimensions or not. By specifying the dimensions the database will check if the correct amount of dimensions is stored, otherwise it will throw an error.

create table if not exists documents (

id number generated by default on null as identity primary key

, data varchar2(4000) not null

, title varchar2(100) not null

, vector vector(384, float32)

);

Embedding documents

To convert documents to vectors the DBMS_VECTOR_CHAIN package can be used. The process consists of three steps:

Convert document to text using dbms_vector_chain.utl_to_text(). Documents like PDF, DOC, HTML or JSON are converted to a text string and unwanted styling is removed.

Most embedding models have a maximum size for what they can convert into a vector. If you exceed this size, the text will be truncated. The model I used has a maximum size of 128 word tokens. Tokens can be tricky to understand because sometimes a token is a whole word, and other times it's just part of a word. For now we’ll stick to a maximum of 128 words. If a document is larger than 128 words we need to cut the document into smaller pieces called chunks. By using dbms_vector_chain.utl_to_chunks we can cut the text string into chunks with a max size of 128 words. The ‘split‘ parameter is used to define the method of cutting. Split by sentence or recursively are my preferred methods.

l_chunk := dbms_vector_chain.utl_to_chunks( l_text , json('{"by" : "words", "max" : "128", "overlap" : "20", "split" : "recursively", "normalize" : "all"]}' ));When the chunks are made we can loop over these and create embeddings, using the dbms_vector_chain.to_embedding. The params variable specifies the method for vectorizing the chunks. The example below shows a method by using the loaded model from earlier.

l_embedding := dbms_vector_chain.utl_to_embedding( data => l_chunk , params => json('{ "provider": "database", "model": "ALL_MINILM_L12_V2"}' ));Finally, we insert the vector into the vector column. Then we’re ready to perform similarity searches on the stored vectors.

Similarity search

Vector similarity search is a technique used to find and compare data points that are similar to each other based on their vector representations. This is typically done using mathematical measures such as cosine similarity or Euclidean distance. These measures quantify the degree of similarity or distance between vectors, allowing for efficient retrieval of data points that are most similar to a given query vector. For searching through documents the cosine similarity is the preferred search method. In applications with natural language processing, vector similarity search enables more nuanced and context-aware data retrieval compared to traditional keyword-based searches. It allows systems to understand and match the underlying semantics of the data, leading to more relevant and accurate results.

To perform similarity search in the Oracle database you can use different functions to achieve the same result. Below the three different notations of the vector distance function. All three use the cosine distance calculation, but differ in notation. I prefer the cosine_distance, because it explains what the function does without having to look at the parameters. The shorthand notation is convenient but not so explanatory and therefore less maintainable

with emb as(

select

to_vector(vector_embedding(ALL_MINILM_L12_V2 using '<QUESTION>' as data)) as query

)

select

doc.id

, vector_distance(doc.vector, emb.query, cosine) as default_distance

, cosine_distance(doc.vector, emb.query) as cosine_distance

, doc.vector <=> emb.query as shorthand_distance

from

documents doc

cross join

emb

order by default_distance

fetch first 3 rows only;

In this example we search for the 3 closest rows. The more similar vectors are the closer the distance between the vectors will be. By using the cosine calculation method, vector distance of 1 means the vectors are perfect similar and -1 is the complete opposite.

Conclusion

In conclusion, Oracle 23ai's vector search capabilities enhance the development of Retrieval-Augmented Generation (RAG) applications. By using vectors and embeddings, Oracle 23ai efficiently transforms complex data into numerical formats that capture meaning. This enables advanced operations like similarity search, improving data retrieval accuracy and relevance. These tools are valuable for machine learning and natural language processing, making Oracle 23ai a key asset for data-driven strategies.

Subscribe to my newsletter

Read articles from Boyd Timmerman directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by