Understanding the Magic of LLMs: How Transformers Revolutionize AI

Guraasees Singh Taneja

Table of contents

- Exploring the Magic Behind Large Language Models (LLMs): A Deep Dive for AI Enthusiasts

- What Are LLMs and How Do They Create Text?

- The Backbone of LLMs: The Transformer Architecture

- The Magic Behind Each Transformer Layer: Multi-Head Attention

- The Power of Multi-Head Attention

- How Transformers Stay on Track: Layer Normalization & Residual Connections

- Decoder-Only Architecture: Simplicity Meets Efficiency

- Boosting Efficiency with Mixture of Experts (MoE)

- The Evolution of LLMs: From GPT-1 to GPT-4

- LLMs and the Future of AI: What’s Next?

Exploring the Magic Behind Large Language Models (LLMs): A Deep Dive for AI Enthusiasts

Hey AI explorers! 👋

If you're like me, a full-stack web developer who's getting into the world of artificial intelligence, there's a good chance you've already heard a lot about Large Language Models (LLMs). They're everywhere these days, from generating code and answering questions to writing stories. LLMs are changing how we work, think, and interact with technology.

In this blog, I’m going to take you through how these models work, their evolution, and some of the cool tech that powers them. Whether you're a newbie or an experienced developer already knee-deep in AI, you'll walk away with a deeper understanding of LLMs and how they're reshaping our digital world.

I've been learning about LLMs since the beginning of this year, and I’ll be posting AI-related articles consistently over the next month. So, if you’re excited to learn about AI with me, stick around!

What Are LLMs and How Do They Create Text?

At their core, Transformer-based language models like GPT, BERT, and T5 are supercharged tools that can read and understand text and, in the case of generative models like GPT and T5, produce human-like text of their own.

The reason they’re so powerful? Transformers. ✔

Transformers are the backbone of most modern LLMs and have completely changed the game. Before them, models such as recurrent neural networks (RNNs) read text one word at a time and struggled to connect words that were far apart. Transformers, on the other hand, shine at handling these long-range dependencies, making them ideal for everything from language translation to content generation.

These models are trained on massive datasets (think millions of books, websites, and more) to learn the intricacies of human language. Once trained, they can write essays, answer questions, summarize content, and even code—all by recognizing patterns in language.

Let’s break it down further!

The Backbone of LLMs: The Transformer Architecture

The secret sauce behind LLMs is the Transformer architecture, introduced by researchers at Google in the 2017 paper "Attention Is All You Need." Originally designed to improve machine translation, the Transformer as first proposed has two main components:

Encoders: These take input text (like a sentence) and turn it into a mathematical representation (one vector per token), a kind of "summary" of its meaning.

Decoders: The decoder then attends to that summary to generate the output text (like a translation or a continuation), one token at a time.

The Magic Behind Each Transformer Layer: Multi-Head Attention

Okay, here’s where things get fun. The real magic behind Transformers is the multi-head attention mechanism.

Let’s take a simple sentence:

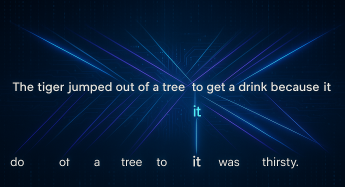

"The tiger jumped out of a tree to get a drink because it was thirsty."

The model needs to understand that “it” refers to the tiger—but how?

This is where self-attention comes in. Self-attention lets the model focus on different words in the sentence to understand their relationship. It does this using query, key, and value vectors:

Query: What is the current word looking for in the rest of the sentence?

Key: What does each word offer that a query can be matched against?

Value: What actual information does each word pass along once it's attended to?

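By comparing each query with all the keys (via dot products), the model assigns attention scores that tell it how much importance each word should have when interpreting the current one. A softmax turns those scores into weights that sum to 1, and the word's new representation is the weighted average of the values. In a trained model, the weight that "it" places on "tiger" would ideally be high.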
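To make the query/key/value idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The 2-D vectors for "tiger", "tree", and "it" are made up for illustration; in a real model they come from learned projections of word embeddings and have hundreds or thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (one vector per word).

    Returns (outputs, weights): one output vector and one weight list per query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this query with every key: dot product scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # New representation = weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-D vectors for the words "tiger", "tree", and "it".
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query_for_it = [[2.0, 0.0]]  # "it" is looking for something tiger-like

outputs, weights = attention(query_for_it, keys, values)
print("weights for 'it':", [round(w, 2) for w in weights[0]])
```

With these made-up vectors, the query for "it" scores highest against the "tiger" key, so most of the weight lands there. Dividing by √d_k keeps the scores from growing too large as vectors get longer, which would otherwise push the softmax toward all-or-nothing weights.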

The coolest part?

This happens in parallel across all words, making the process super efficient (kind of like kicking off several async tasks at once in JavaScript instead of waiting on each one in turn 😄, except that here the GPU really does crunch all the words simultaneously as matrix operations).

The Power of Multi-Head Attention

So, instead of just having one attention mechanism, multi-head attention uses multiple attention processes working at the same time. Each "head" of the attention mechanism focuses on different types of relationships in the text. One might focus on grammar (syntax), while another could focus on the meaning of words (semantics). Together, they create a richer understanding of the text.
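A rough sketch of the multi-head idea follows. As a simplification (an assumption, not how production models do it), each head here just attends over its own slice of the features; real models give every head its own learned query, key, and value projections and apply a learned output projection after concatenating the heads.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention; returns one output vector per query."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

def split_heads(vectors, num_heads):
    """Slice every vector into num_heads equal chunks: result[h][i] is word i's chunk for head h."""
    d = len(vectors[0])
    if d % num_heads != 0:
        raise ValueError("vector width must be divisible by num_heads")
    size = d // num_heads
    return [[vec[h * size:(h + 1) * size] for vec in vectors] for h in range(num_heads)]

def multi_head_attention(x, num_heads):
    """Self-attention with several heads, each attending over its own feature slice."""
    # Simplification: real models project x with learned W_Q, W_K, W_V per head.
    heads = split_heads(x, num_heads)
    head_outputs = [attention(h, h, h) for h in heads]  # heads are independent
    # Concatenate the heads' outputs for each word (real models then apply W_O).
    return [sum((head_outputs[h][i] for h in range(num_heads)), [])
            for i in range(len(x))]

x = [[1.0, 0.0, 0.5, 0.5],
     [0.0, 1.0, 0.2, 0.8],
     [1.0, 1.0, 0.0, 0.0]]  # hypothetical 4-D vectors for three words
out = multi_head_attention(x, num_heads=2)
print(len(out), "words x", len(out[0]), "features")
```

Because each head only sees its own slice, the heads can end up weighting the words differently; with `num_heads=1` this reduces to plain single-head attention.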

How Transformers Stay on Track: Layer Normalization & Residual Connections

Transformers are deep—really deep. They often have many layers, making it hard to maintain smooth training. To keep things on track, we use two essential techniques:

Layer Normalization: This rescales the activations (the outputs of each layer) so that, for every token, they have a mean of roughly zero and a variance of roughly one, followed by a learned scale and shift. Keeping activations in a stable, manageable range helps the model train faster and more reliably, avoiding issues where values blow up or shrink layer after layer and the model gets "stuck" or confused.

Residual Connections: These act like shortcuts. Each sub-layer's input skips around that sub-layer and is added back to its output (output = input + sublayer(input)), so information from earlier layers can flow straight through the whole stack. This helps the model retain important information that might otherwise get lost in the deeper layers, and it makes gradients easier to propagate during training. Think of it as giving the model a little "memory" of what it already learned, so it doesn’t forget vital details as it goes deeper.
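Both techniques fit in a few lines. In this minimal sketch, `layer_norm` normalizes one token's activation vector (`gamma` and `beta` stand in for the learned scale and shift, and `eps` avoids dividing by zero), and `residual` adds a sub-layer's input back to its output. The sub-layers are hypothetical stand-ins: `dead_sublayer` outputs only zeros, yet the input still survives a stack of ten residual blocks, and `scaled_sublayer` normalizes before transforming, the "pre-norm" ordering used in GPT-2 and many later models.

```python
import math

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize one token's activations to mean ~0 and variance ~1,
    then apply a learned scale (gamma) and shift (beta)."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    gamma = gamma if gamma is not None else [1.0] * n  # default: no rescaling
    beta = beta if beta is not None else [0.0] * n     # default: no shift
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(x, gamma, beta)]

def residual(x, sublayer):
    """Residual (skip) connection: add the sub-layer's input back to its output."""
    y = sublayer(x)
    return [a + b for a, b in zip(x, y)]

def dead_sublayer(x):
    """Hypothetical sub-layer that has 'lost' all information: it outputs zeros."""
    return [0.0 for _ in x]

def scaled_sublayer(x):
    """Hypothetical stand-in for attention or feed-forward: normalize, then scale."""
    return [0.1 * v for v in layer_norm(x)]

activations = [120.0, -40.0, 3.0, 500.0]  # wildly different magnitudes
normed = layer_norm(activations)
print("normalized:", [round(v, 3) for v in normed])

# A stack of ten residual blocks whose sub-layers contribute nothing:
x = [1.0, 2.0, 3.0]
y = x
for _ in range(10):
    y = residual(y, dead_sublayer)
print("after 10 residual blocks:", y)  # the original input still gets through

print("one pre-norm residual block:", residual(x, scaled_sublayer))
```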

Decoder-Only Architecture: Simplicity Meets Efficiency

Interestingly, many modern LLMs, including the GPT family (GPT-3 and, most likely, GPT-4, whose architecture OpenAI hasn't fully disclosed), use a decoder-only architecture. But why ditch the encoder? Simple. When generating text, the job boils down to predicting the next word based on the previous ones, so a single stack that reads the prompt and everything generated so far is enough.

For example, when you're typing an email or writing a text message, you're not thinking about the entire content of the message all at once. You’re focusing on the words that have already been written, and each word you type influences the next one.
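The same "each word feeds the next" loop drives text generation. The sketch below swaps in a toy bigram counter for the model (an illustrative assumption: a real LLM conditions on the whole context through attention, not just the last word) and greedily appends the most likely next word, one step at a time.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which: a toy stand-in for a trained LLM."""
    words = text.split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def generate(model, prompt, max_new_words=5):
    """Autoregressive loop: predict one word, append it, repeat."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = model.get(words[-1])  # .get avoids creating empty entries
        if not candidates:                 # no known continuation: stop early
            break
        next_word = candidates.most_common(1)[0][0]  # greedy: most frequent follower
        words.append(next_word)            # the new word becomes part of the context
    return " ".join(words)

corpus = "the tiger jumped out of the tree the tiger was thirsty"
model = train_bigram(corpus)
print(generate(model, "the", max_new_words=4))
```

Greedy picking is the simplest choice; real systems often sample from the predicted probabilities instead, which makes the output less repetitive.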

These decoder-only models use masked self-attention to make sure they only look at the words before them, mimicking how we generate text: word-by-word, sentence-by-sentence.

In technical terms, masked (causal) self-attention blocks every position from attending to any position after it, so the model predicts the next word using only the words generated so far. It’s like a guessing game: the model sees all the context before the blank, but never what comes after it.
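Masking can be sketched by setting the score for every future position to negative infinity before the softmax, so those positions receive exactly zero weight. This minimal version computes only the attention weights and uses made-up vectors.

```python
import math

def causal_attention_weights(queries, keys):
    """Attention weights where position i may only attend to positions 0..i."""
    d_k = len(keys[0])
    all_weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if j > i:
                scores.append(float("-inf"))  # mask out future words
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        m = max(scores)  # always finite, since position j == i is never masked
        exps = [math.exp(s - m) for s in scores]  # exp(-inf) is exactly 0.0
        total = sum(exps)
        all_weights.append([e / total for e in exps])
    return all_weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical vectors for 3 words
weights = causal_attention_weights(x, x)
for row in weights:
    print([round(w, 2) for w in row])
```

The printed matrix is lower-triangular: the first word can only attend to itself, and the last word can attend to everything before it.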

The decoder-only approach is simpler and can be more computationally efficient, since there's no separate encoder stack and no cross-attention between the two. A single decoder stack handles both reading the prompt and generating the continuation, word-by-word.

Boosting Efficiency with Mixture of Experts (MoE)

Modern LLMs can have billions of parameters—and all that processing power can be a bit much. To make things more efficient, some models use a technique called Mixture of Experts (MoE).

MoE works by activating only a small subset of specialized "experts" (usually feed-forward sub-networks) for each input token, chosen by a small learned router. This keeps the computation per token low while the model's total parameter count, and therefore its capacity, stays large. Imagine having a massive team of specialists but only calling on the ones you need for the job.
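A minimal sketch of one common routing scheme, top-k gating, is below. The expert functions, gate vectors, and `top_k=2` are hypothetical; in real MoE models the experts are typically feed-forward networks and the router is learned during training. The `calls` list records which experts actually run, showing that the unchosen ones do no work at all.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(token, experts, gate_weights, top_k=2):
    """Route one token to its top_k experts and mix their outputs."""
    # Router: one score per expert (dot product with that expert's gate vector).
    scores = [sum(t * g for t, g in zip(token, gw)) for gw in gate_weights]
    # Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda e: scores[e], reverse=True)[:top_k]
    # Softmax over the chosen experts' scores gives the mixing weights.
    mix = softmax([scores[e] for e in chosen])
    out = [0.0] * len(token)
    for weight, e in zip(mix, chosen):
        expert_out = experts[e](token)  # only the chosen experts ever run
        out = [o + weight * y for o, y in zip(out, expert_out)]
    return out, chosen

calls = []  # records which experts actually did work

def make_expert(idx, scale):
    """Hypothetical expert: just scales its input by a fixed factor."""
    def expert(x):
        calls.append(idx)
        return [scale * v for v in x]
    return expert

experts = [make_expert(i, s) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]  # hypothetical router

out, chosen = moe_layer([2.0, 1.0], experts, gate_weights, top_k=2)
print("chosen experts:", chosen, "| experts that ran:", calls)
```

Only two of the four experts did any computation for this token, which is where the savings come from; a different token could be routed to a different pair.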

The Evolution of LLMs: From GPT-1 to GPT-4

LLMs have come a long way, and it's been an exciting ride. Here's a quick tour of how they've evolved over the years:

GPT-1 (2018): OpenAI's first shot at a generative pre-trained transformer. It was trained on a massive text dataset and could generate text, but it often stumbled over long sentences and sometimes repeated itself.

BERT (2018): Unlike GPT, which focuses on generating text, BERT is designed to understand it. BERT uses the encoder part of the Transformer and excels at tasks like question answering and text classification.

GPT-2 (2019) & GPT-3 (2020): These versions got much better at generating coherent, creative, and diverse text. GPT-3, with its 175 billion parameters, really took things to the next level in terms of fluency and context awareness.

GPT-4 (2023): The latest and greatest at the time of writing! GPT-4 is more powerful and more contextually aware, and it generates even more accurate and human-like responses.

LLMs and the Future of AI: What’s Next?

As LLMs continue to evolve, they’re becoming more efficient, versatile, and creative. The future looks bright: we’ll soon see LLMs combined with other types of AI, like image and audio recognition, to create truly multimodal systems that can understand and generate across different formats.

For us developers, this opens up endless possibilities. From smarter search engines and advanced chatbots to AI-powered code generation tools, the potential applications are limitless.

As I continue my journey from full-stack developer to AI enthusiast, I’m beyond excited about where LLMs will take us. These aren’t just tools for natural language processing—they’re shaping the future of intelligent applications.

How about you? Are you working with LLMs in your projects, or just getting started? Drop a comment below—let's chat about your experiences and what excites you about the world of AI!

Happy coding and learning! 🚀

PS: This is day one of my 7-day GenAI series for developers. Don't miss out: I’ll be posting new content over the coming days to help you level up your AI knowledge!

Written by

Guraasees Singh Taneja

Hi there! I'm Guraasees Singh (you can call me Aasees), a passionate developer focused on building applications that solve problems. Currently exploring Web3 and AI, I love sharing my journey and insights on technology, web development, and the latest trends in Web3 & AI.