The Future of AI Agents: Why Event-Driven Architecture Is Essential

Spheron Network

Table of contents

- Beyond Better Models: An Infrastructure Challenge

- The Evolution of Artificial Intelligence

- Design Patterns That Empower Agents

- The Challenge of Scaling Intelligent Agents

- Event-Driven Architecture: A Foundation for Modern Systems

- Learning from History: The Rise and Fall of Early Social Platforms

- Why AI's Future Depends on Event-Driven Agents

- Scaling Agents with Event-Driven Architecture

- Conclusion: Event-Driven Agents Will Define AI's Future

AI agents are poised to revolutionize enterprise operations through autonomous problem-solving capabilities, adaptive workflows, and unprecedented scalability. However, the central challenge in realizing this potential isn't simply developing more sophisticated AI models. Rather, it lies in establishing robust infrastructure that enables agents to access data, utilize tools, and communicate across systems seamlessly.

Beyond Better Models: An Infrastructure Challenge

The true hurdle in advancing AI agents isn't an AI problem per se; it's fundamentally an infrastructure and data interoperability challenge. For AI agents to function effectively, they need more than the sequential execution of commands; they require a dynamic architecture that facilitates continuous data flow and interoperability. As HubSpot CTO Dharmesh Shah aptly put it, "Agents are the new apps," highlighting the paradigm shift in how we conceptualize AI systems.

To understand why event-driven architecture (EDA) is the best foundation for scaling agent systems, we must examine how artificial intelligence has evolved through distinct phases, each with its own capabilities and limitations.

The Evolution of Artificial Intelligence

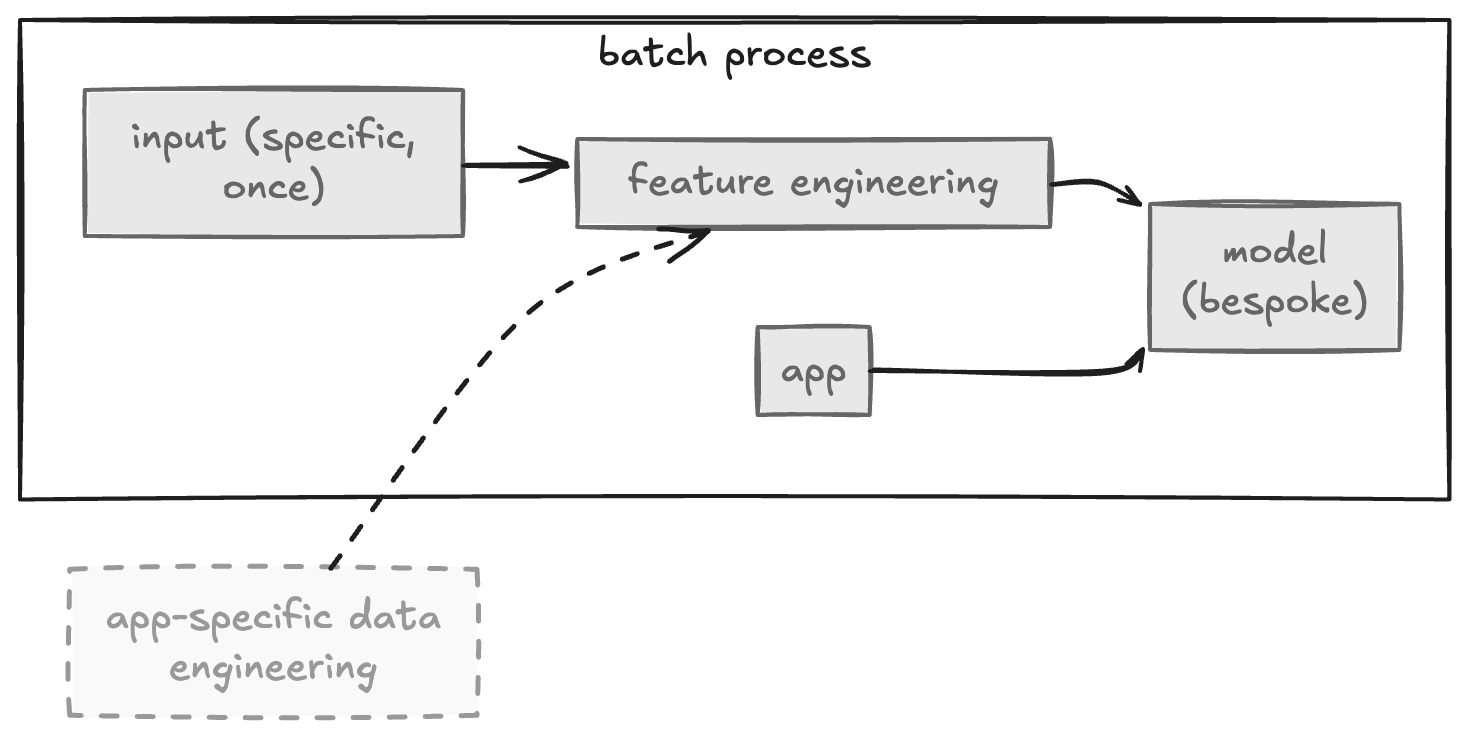

First Wave: Predictive Models

The initial wave of AI centered on traditional machine learning approaches that produced predictive capabilities for narrowly defined tasks. These models required considerable expertise to develop, as they were custom-crafted for specific use cases with their domain specificity embedded in the training data.

This approach created inherently rigid systems that proved difficult to repurpose. Adapting such models to new domains typically meant building from scratch—a resource-intensive process that inhibited scalability and slowed adoption across enterprises.

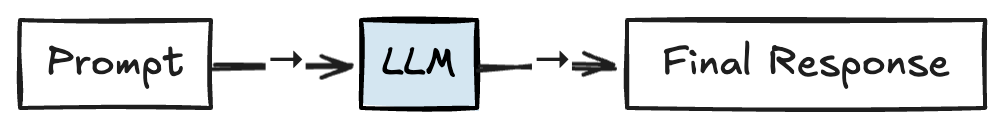

Second Wave: Generative Models

The second wave brought generative AI to the forefront, powered by advances in deep learning. Unlike their predecessors, these models were trained on vast, diverse datasets, enabling them to generalize across various contexts. The ability to generate text, images, and even videos opened exciting new application possibilities.

However, generative models brought their own set of challenges:

- They are frozen at training time and cannot incorporate new or changing information on their own

- Adapting them to specific domains requires expensive, error-prone fine-tuning

- Fine-tuning demands extensive data, significant computational resources, and specialized ML expertise

- Because large language models (LLMs) are trained largely on publicly available data, they lack access to private, domain-specific information, which limits their accuracy on context-dependent queries

For example, asking a generative model to recommend an insurance policy based on personal health history, location, and financial goals reveals these limitations. The model can only provide generic or potentially inaccurate responses without access to relevant user data.

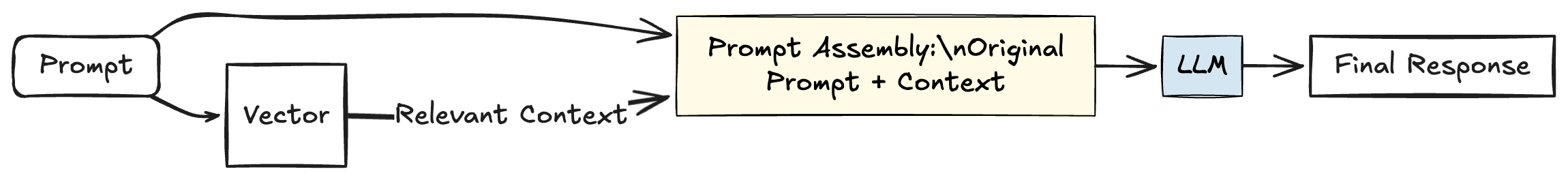

The Compound AI Bridge

Compound AI systems emerged to overcome these limitations, integrating generative models with additional components such as programmatic logic, data retrieval mechanisms, and validation layers. This modular approach enables AI to combine tools, fetch relevant data, and customize outputs in ways static models cannot.

Retrieval-Augmented Generation (RAG) exemplifies this approach by dynamically incorporating relevant data into the model's workflow. While RAG effectively handles many tasks, it relies on predefined workflows—every interaction and execution path must be established in advance. This rigidity makes RAG impractical for complex or dynamic tasks where workflows cannot be exhaustively encoded.
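To make that rigidity concrete, here is a minimal Python sketch of a classic RAG pipeline. The documents, the keyword-overlap retriever (a stand-in for vector search), and the function names are all hypothetical; the point is only that the retrieve-then-generate path is fixed in code before any query arrives.

```python
# Hypothetical document store; a real system would use embeddings and a vector index.
DOCUMENTS = {
    "policy-basic": "Basic plan covers outpatient visits and generic prescriptions.",
    "policy-plus": "Plus plan adds specialist care and chronic condition management.",
    "policy-travel": "Travel plan covers emergency care outside your home region.",
}


def retrieve(query: str, k: int = 2) -> list[str]:
    """Rank documents by naive keyword overlap (a stand-in for vector search)."""
    terms = set(query.lower().split())
    scored = sorted(
        DOCUMENTS.items(),
        key=lambda item: len(terms & set(item[1].lower().split())),
        reverse=True,
    )
    return [doc_id for doc_id, _ in scored[:k]]


def build_prompt(query: str, doc_ids: list[str]) -> str:
    """Inject the retrieved passages into the prompt sent to the model."""
    context = "\n".join(f"[{d}] {DOCUMENTS[d]}" for d in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def rag_answer(query: str) -> str:
    # The path never changes: always retrieve, then always build the prompt.
    prompt = build_prompt(query, retrieve(query))
    return prompt  # a real pipeline would send this prompt to an LLM here
```

Every query follows the same two steps, which is why classic RAG is predictable but struggles when a task needs a different sequence of lookups.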

The Third Wave: Agentic AI Systems

We're now witnessing the emergence of the third wave of AI: agentic systems. This evolution comes as we reach the limitations of fixed systems and even advanced LLMs.

Google's Gemini reportedly failed to meet internal expectations despite being trained on larger datasets. Similar challenges have been reported with OpenAI's next-generation Orion model. Salesforce CEO Marc Benioff recently stated on The Wall Street Journal's "Future of Everything" podcast that we've reached the upper limits of what LLMs can achieve, suggesting that autonomous agents (systems capable of independent thinking, adaptation, and action) represent the future of AI.

Agents introduce a critical innovation: dynamic, context-driven workflows. Unlike fixed pathways, agentic systems determine next steps adaptively, making them ideal for addressing the unpredictable, interconnected challenges that businesses face today.

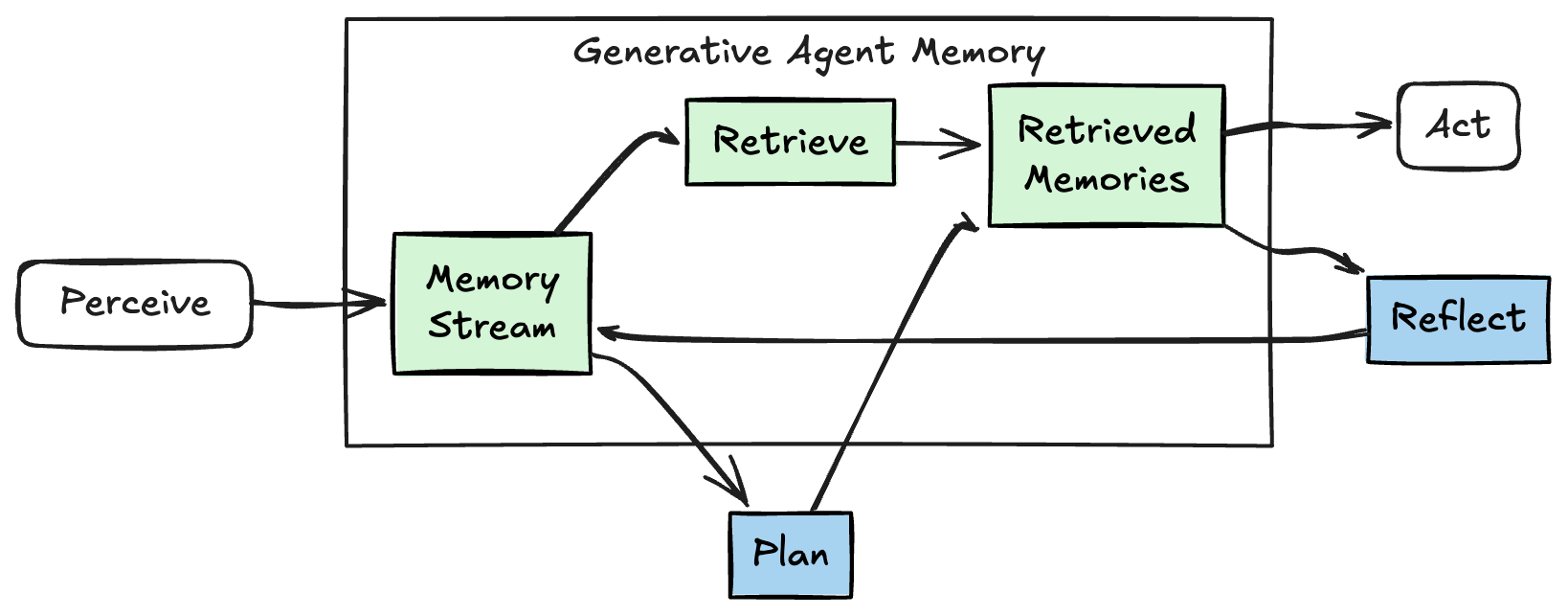

This approach fundamentally inverts traditional control logic. Rather than rigid programs dictating every action, agents leverage LLMs to drive decisions. They can reason, utilize tools, and access memory—all dynamically. This flexibility allows for workflows that evolve in real-time, making agents substantially more powerful than systems built on fixed logic.
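The inverted control flow can be sketched as a small loop in which the model, not the program, chooses each next step. In this illustrative Python sketch the LLM call is replaced by a hard-coded stub (`fake_llm`), and the tool names and return strings are hypothetical.

```python
from typing import Callable

# Hypothetical tools; in practice these would call real systems (CRM, ticketing, ...).
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_customer": lambda arg: f"customer {arg}: premium tier, 2 open tickets",
    "open_tickets": lambda arg: f"tickets for {arg}: #101 billing, #102 login",
}


def fake_llm(goal: str, memory: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM call that returns (action, argument).

    A real agent would prompt a model with the goal, the tool list, and its
    memory, then parse the chosen action. Here the policy is hard-coded.
    """
    if not any(m.startswith("customer") for m in memory):
        return "lookup_customer", "acme"
    if not any(m.startswith("tickets") for m in memory):
        return "open_tickets", "acme"
    return "finish", "Summarized account status for acme."


def run_agent(goal: str, max_steps: int = 5) -> tuple[str, list[str]]:
    memory: list[str] = []
    for _ in range(max_steps):
        action, arg = fake_llm(goal, memory)  # the model picks the next step
        if action == "finish":
            return arg, memory
        memory.append(TOOLS[action](arg))  # run the tool and remember the observation
    return "Stopped: step budget exhausted.", memory
```

The `max_steps` budget is a common safeguard: because the path is decided at run time, the loop needs an explicit bound.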

Design Patterns That Empower Agents

AI agents derive their effectiveness not only from core capabilities but also from the design patterns that structure their workflows and interactions. These patterns enable agents to address complex problems, adapt to changing environments, and collaborate effectively.

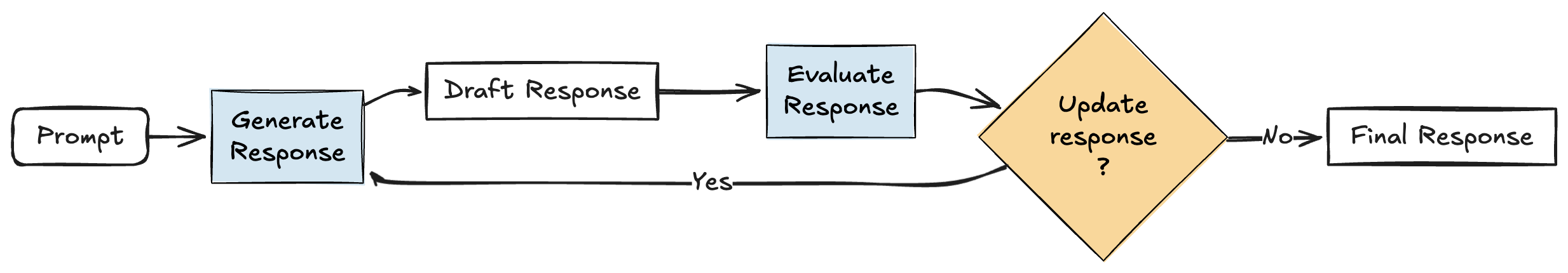

Reflection: Self-Improvement Through Evaluation

Reflection allows agents to evaluate their own decisions and improve outputs before taking action or providing final responses. This capability enables agents to identify and correct mistakes, refine reasoning processes, and ensure higher-quality outcomes.
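A minimal generate, critique, revise loop illustrates the pattern. In this hypothetical Python sketch, `draft` and `revise` stand in for model calls, and the critic simply re-checks an arithmetic claim; real agents often use a second model prompt or a deterministic validator as the critic.

```python
import re

# Matches claims like "3 * 19.99 = 59.99" in the model's output.
CLAIM = re.compile(r"(\d+) \* (\d+\.\d+) = (\d+\.\d+)")


def draft(task: str) -> str:
    # Stand-in for a first model response; it contains an arithmetic slip.
    return "Total cost: 3 * 19.99 = 59.99"


def critique(answer: str) -> list[str]:
    """Return the problems found; an empty list means the answer passes."""
    problems = []
    for qty, price, total in CLAIM.findall(answer):
        expected = round(int(qty) * float(price), 2)
        if abs(expected - float(total)) > 1e-9:
            problems.append(f"{qty} * {price} should be {expected:.2f}, not {total}")
    return problems


def revise(answer: str) -> str:
    # Stand-in for asking the model to fix its output given the critique.
    def fix(m: re.Match) -> str:
        qty, price, _ = m.groups()
        return f"{qty} * {price} = {int(qty) * float(price):.2f}"

    return CLAIM.sub(fix, answer)


def reflect(task: str, max_rounds: int = 3) -> str:
    answer = draft(task)
    for _ in range(max_rounds):
        if not critique(answer):  # stop as soon as the self-check passes
            break
        answer = revise(answer)
    return answer
```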

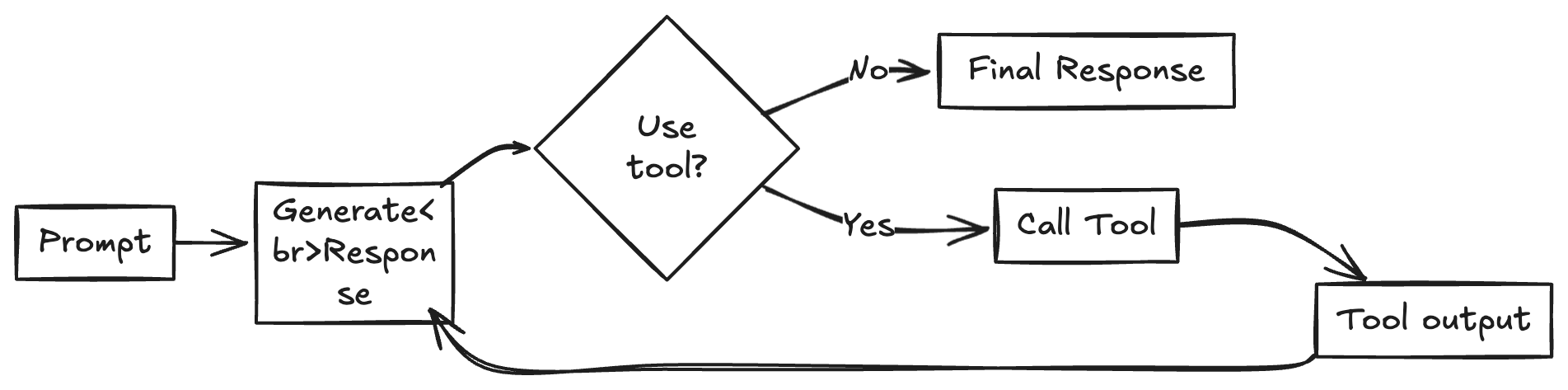

Tool Use: Expanding Capabilities

Integrating with external tools extends an agent's functionality, allowing it to perform tasks like data retrieval, process automation, or execution of deterministic workflows. This capability is particularly valuable for operations requiring strict accuracy, such as mathematical calculations or database queries. Tool use effectively bridges the gap between flexible decision-making and predictable, reliable execution.
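Here is one way an agent runtime might hand precise work to a deterministic tool. In this Python sketch, the model is assumed to emit a JSON tool call of the form `{"tool": ..., "args": {...}}`. That format, the `calculator` tool, and `dispatch` are hypothetical, and the calculator accepts only basic arithmetic on numeric literals.

```python
import ast
import json
import operator

# Arithmetic the model should not "guess": evaluate it exactly instead.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    """Safely evaluate + - * / over numeric literals (no names, no calls)."""

    def ev(node: ast.AST) -> float:
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError(f"unsupported expression: {expression!r}")

    return ev(ast.parse(expression, mode="eval").body)


TOOLS = {"calculator": calculator}


def dispatch(tool_call_json: str) -> str:
    """Execute a model-emitted tool call and return the result as JSON."""
    call = json.loads(tool_call_json)
    result = TOOLS[call["tool"]](**call["args"])
    return json.dumps({"tool": call["tool"], "result": result})
```

The model decides *whether* to call the tool; the tool guarantees the arithmetic is exact and rejects anything outside its narrow grammar.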

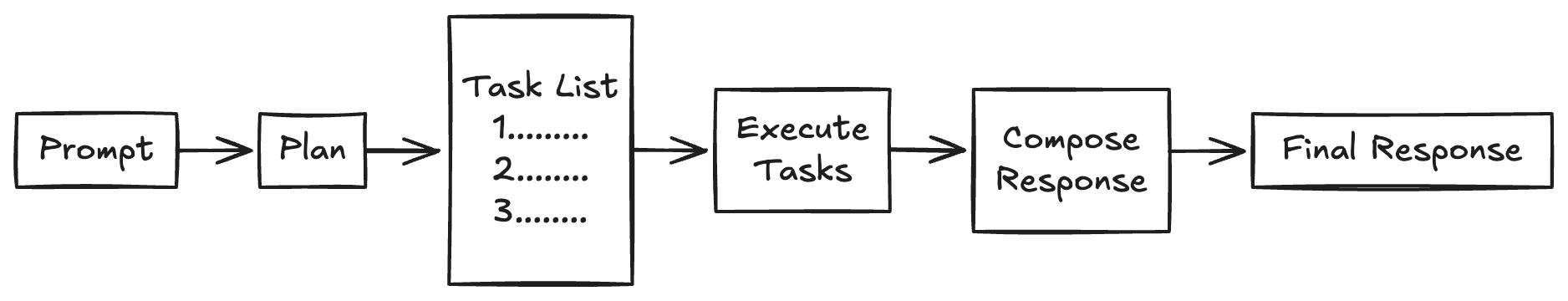

Planning: Transforming Goals Into Action

Agents with planning capabilities can decompose high-level objectives into actionable steps, organizing tasks in logical sequences. This design pattern proves crucial for solving multi-step problems or managing workflows with dependencies.
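A plan with dependencies is naturally a directed acyclic graph. This Python sketch assumes an agent has already decomposed a hypothetical goal into the steps below; the standard library's `graphlib.TopologicalSorter` (Python 3.9+) then orders them and groups steps that could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for "launch a product email campaign":
# each step maps to the set of steps it depends on.
plan = {
    "pull_crm_data": set(),
    "research_trends": set(),
    "segment_customers": {"pull_crm_data"},
    "draft_email": {"segment_customers", "research_trends"},
    "review_copy": {"draft_email"},
    "schedule_send": {"review_copy", "segment_customers"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into waves; steps in one wave have all dependencies met
    and could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

Here `execution_waves(plan)` starts with `["pull_crm_data", "research_trends"]` and ends with `["schedule_send"]`. If the agent produced a circular plan, `prepare()` would surface that before any step runs.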

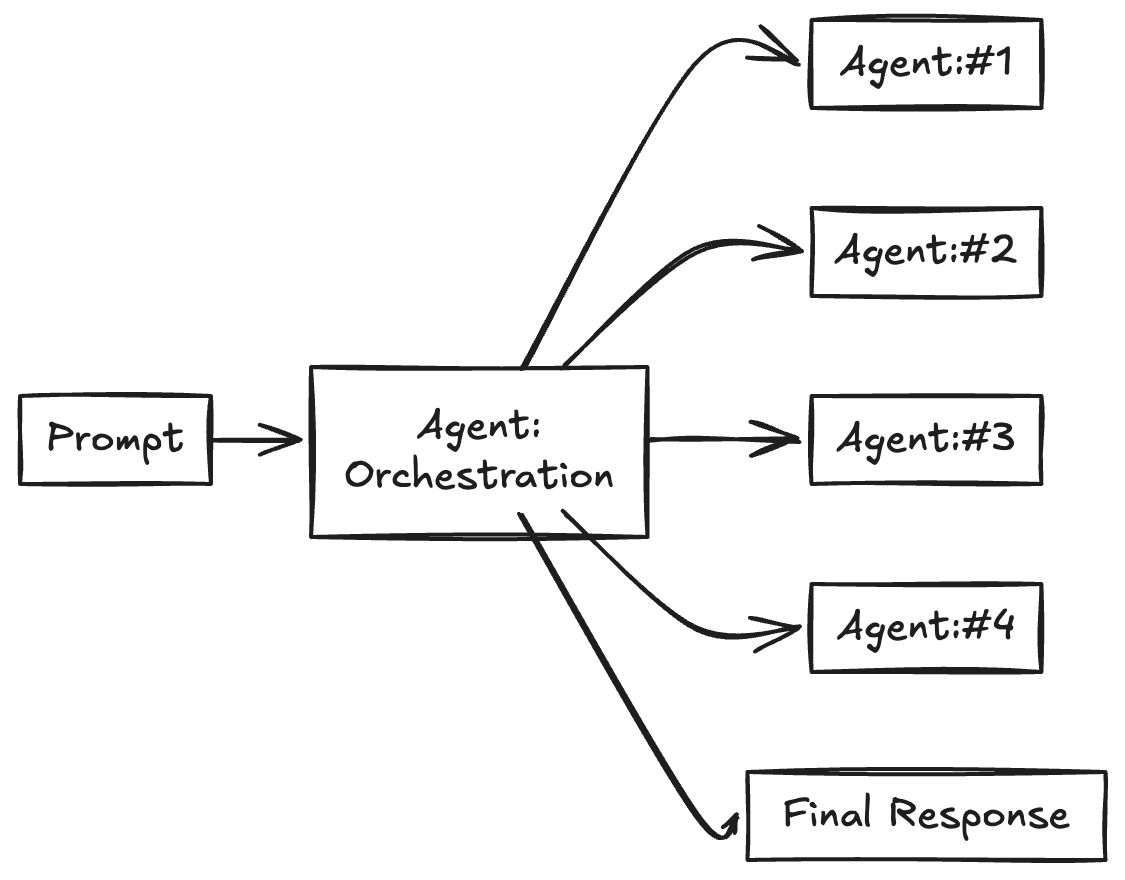

Multi-Agent Collaboration: Modular Problem-Solving

Multi-agent systems take a modular approach by assigning specific tasks to specialized agents. This approach offers flexibility—smaller language models (SLMs) can be deployed for task-specific agents to improve efficiency and simplify memory management. The modular design reduces complexity for individual agents by focusing their context on specific tasks.

A related technique, Mixture-of-Experts (MoE), applies the same idea inside a single model: a learned gating network routes each input (typically each token) to a small subset of specialized submodels, or "experts," so only part of the model runs for any given input. Like multi-agent collaboration, this optimizes computational resources while enhancing performance. Both approaches emphasize modularity and specialization, whether through multiple agents working independently or through learned routing within a unified model.
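To make the routing idea concrete, here is a minimal Python sketch of top-k gating, the selection mechanism commonly used in MoE layers. For readability the input is a scalar, the experts are toy functions, and the gate logits are passed in by hand; in a real MoE layer the experts are feed-forward sub-networks and the logits come from a learned linear gate over each token's hidden state.

```python
import math
from typing import Callable

# Toy "experts"; in a real MoE layer each would be a feed-forward sub-network.
EXPERTS: list[Callable[[float], float]] = [
    lambda x: x + 1,
    lambda x: 2 * x,
    lambda x: x ** 2,
    lambda x: -x,
]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_logits: list[float],
                experts: list[Callable[[float], float]] = EXPERTS, k: int = 2) -> float:
    """Route one input to its top-k experts and mix their outputs."""
    # Pick the k highest-scoring experts; the rest are never evaluated.
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over only the selected logits equals the full softmax
    # renormalized over the top-k set.
    weights = softmax([gate_logits[i] for i in top])
    return sum(w * experts[i](x) for w, i in zip(weights, top))
```

With gate logits `[0.1, 2.0, 2.0, -1.0]` and `k=2`, experts 1 and 2 each receive weight 0.5, so an input of 3.0 produces 0.5 × 6 + 0.5 × 9 = 7.5 while experts 0 and 3 never run.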

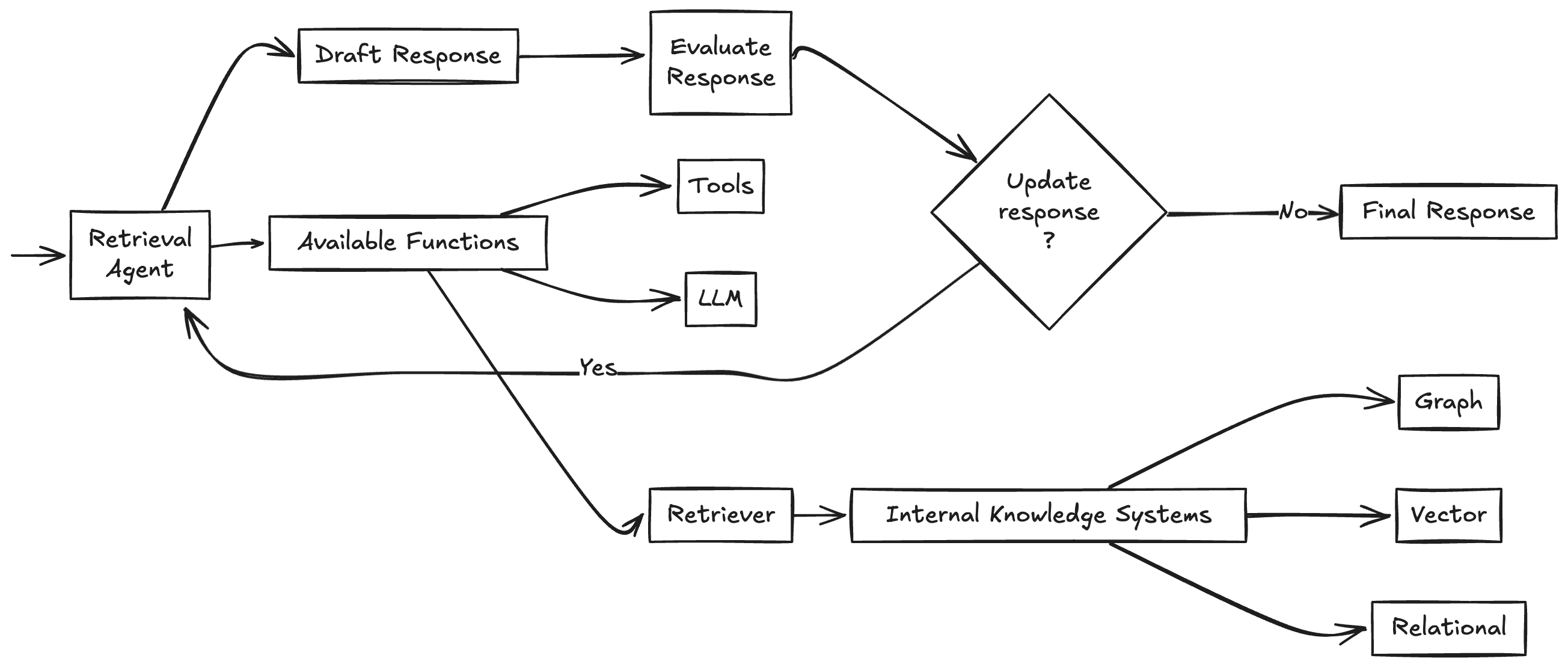

Agentic RAG: Adaptive and Context-Aware Retrieval

Agentic RAG evolves traditional RAG by making it more dynamic and context-driven. Instead of relying on fixed workflows, agents determine in real-time what data they need, where to find it, and how to refine queries based on the task. This flexibility makes agentic RAG well-suited for handling complex, multi-step workflows requiring responsiveness and adaptability.

For instance, an agent creating a marketing strategy might begin by extracting customer data from a CRM, use APIs to gather market trends, and refine its approach as new information emerges. By maintaining context through memory and iterating on queries, the agent produces more accurate and relevant outputs. Agentic RAG effectively combines retrieval, reasoning, and action capabilities.
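The marketing example can be sketched as a retrieval loop in which the agent decides which source to query next and refines its query using what it has already learned. In this hypothetical Python sketch, the source names, their contents, and the `choose_next` policy (a stand-in for an LLM decision) are all illustrative.

```python
from typing import Optional

# Hypothetical data sources; each maps a query string to a result.
SOURCES = {
    "crm": {"acme segment": "Acme: mid-market, renewal-driven revenue"},
    "market_api": {"mid-market renewals trend": "Mid-market renewal budgets trending upward"},
}


def choose_next(memory: dict[str, str]) -> Optional[tuple[str, str]]:
    """Stand-in for the LLM deciding what it still needs and where to get it."""
    if "crm" not in memory:
        return "crm", "acme segment"
    if "market_api" not in memory:
        # Refine the next query with what the CRM returned (the customer segment).
        segment = memory["crm"].split(":")[1].split(",")[0].strip()
        return "market_api", f"{segment} renewals trend"
    return None  # enough context gathered


def agentic_retrieve(max_hops: int = 4) -> dict[str, str]:
    memory: dict[str, str] = {}  # context carried across retrieval hops
    for _ in range(max_hops):
        step = choose_next(memory)
        if step is None:
            break
        source, query = step
        memory[source] = SOURCES[source].get(query, "no result")
    return memory
```

Unlike the fixed pipeline of classic RAG, the second query here does not exist until the first result comes back.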

The Challenge of Scaling Intelligent Agents

Scaling agents—whether individual or collaborative systems—fundamentally depends on their ability to access and share data effortlessly. Agents must gather information from multiple sources, including other agents, tools, and external systems, to make informed decisions and take appropriate actions.

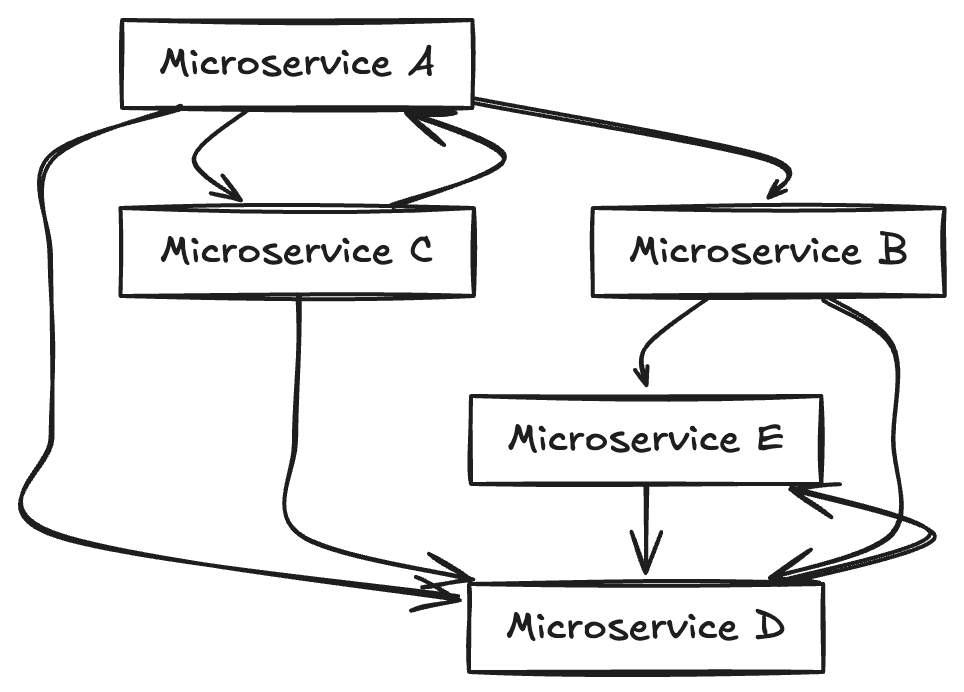

Connecting agents to necessary tools and data represents a distributed systems challenge, similar to those faced when designing microservices architectures. Components must communicate efficiently without creating bottlenecks or rigid dependencies.

Like microservices, agents must communicate effectively and ensure their outputs provide value across broader systems. Their outputs shouldn't merely loop back into AI applications—they should flow into critical enterprise systems like data warehouses, CRMs, customer data platforms, and customer success platforms.

While agents and tools could connect through RPC calls and APIs, this approach creates tightly coupled systems. Tight coupling inhibits scalability, adaptability, and support for multiple consumers of the same data. Agents require flexibility, with outputs seamlessly feeding into other agents, services, and platforms without establishing rigid dependencies.

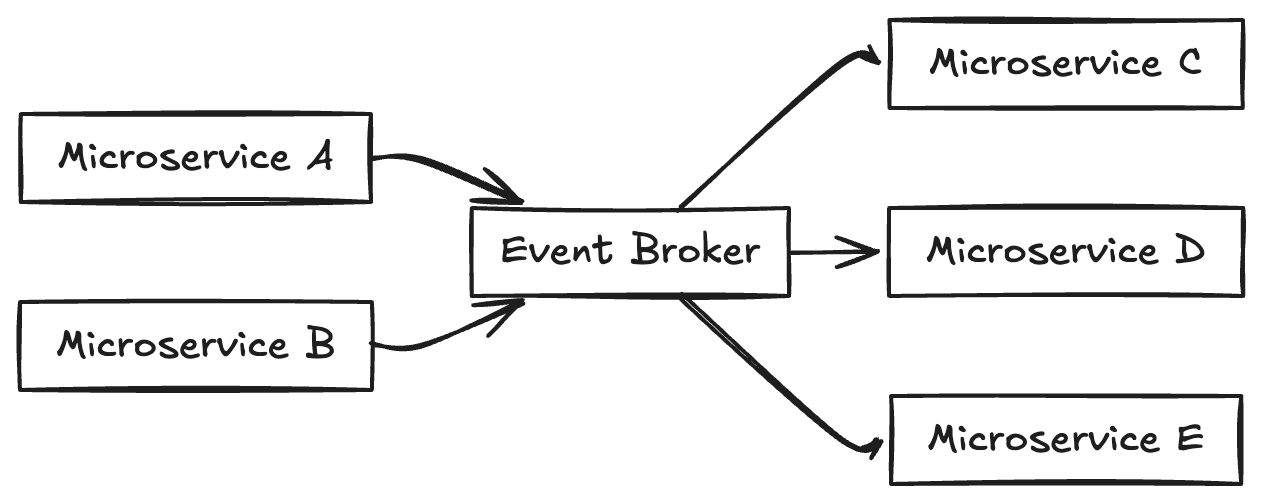

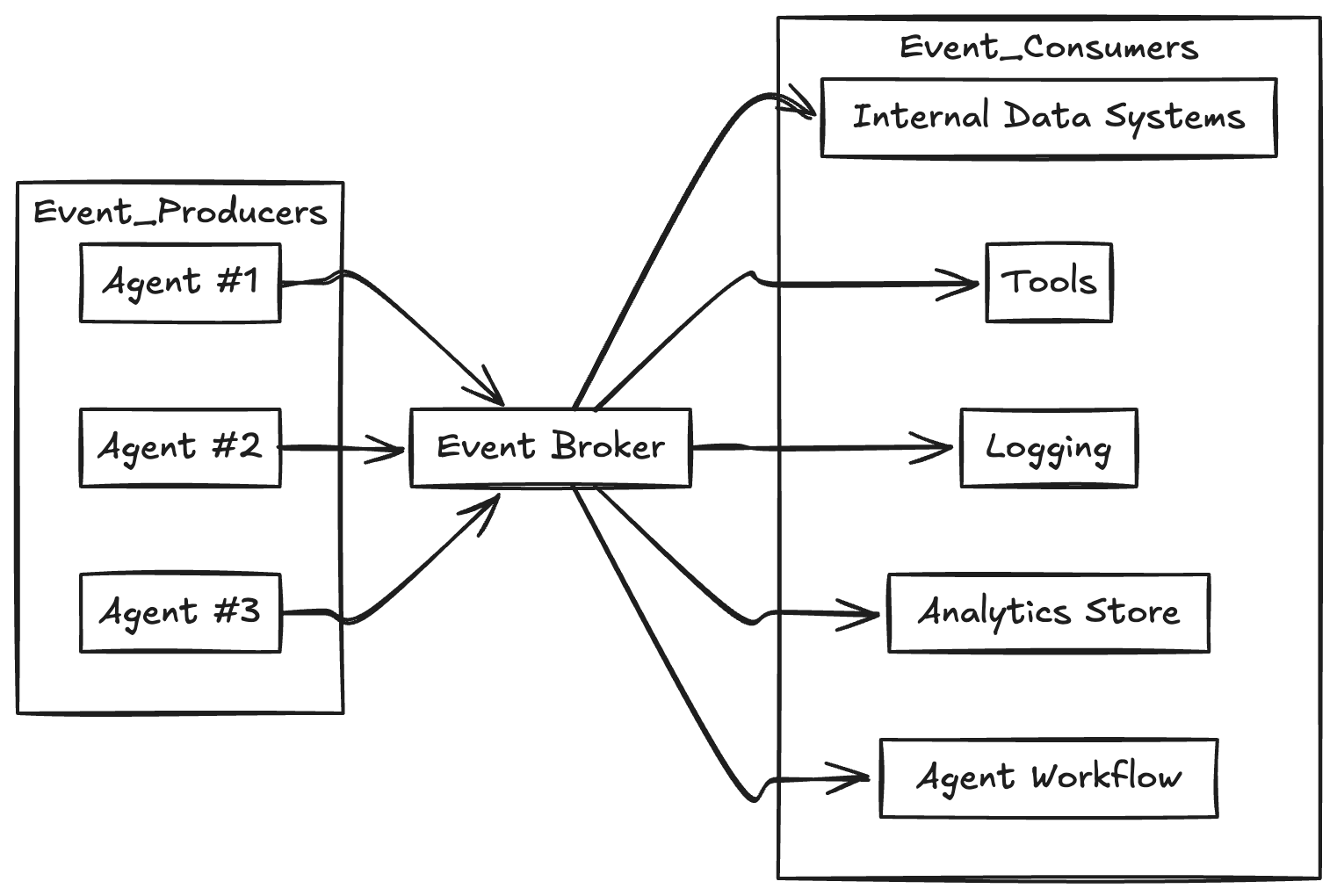

The solution? Loose coupling through event-driven architecture—the essential foundation that enables agents to share information, respond in real-time, and integrate with broader ecosystems without the complications of tight coupling.

Event-Driven Architecture: A Foundation for Modern Systems

In computing's early days, software systems existed as monoliths—everything contained within a single, tightly integrated codebase. While initially simple to build, monoliths became problematic as they grew.

Scaling was a blunt instrument: the entire application had to be scaled, even when only specific components needed additional resources. This inefficiency led to bloated systems and brittle architectures that could not accommodate growth.

Microservices transformed this paradigm by decomposing applications into smaller, independently deployable components. Teams could scale and update specific elements without affecting entire systems. However, this created a new challenge: establishing effective communication between distributed services.

Connecting services through direct RPC or API calls creates complex interdependencies. When one service fails, every service that calls it, directly or indirectly, can fail as well, producing cascading failures.

Event-driven architecture (EDA) addresses this problem by enabling asynchronous communication through events. Services don't wait for each other; they react to events as they occur. This makes systems more resilient and adaptable, allowing them to manage the complexity of modern workflows. EDA is not just a technical improvement but a strategic necessity for systems under pressure.
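The decoupling and fault-isolation benefits can be illustrated with a tiny in-process publish/subscribe bus. This Python sketch is synchronous and in-memory to stay short, so it is not a broker; the `EventBus` class, topic name, and handlers are hypothetical. It shows that a producer publishes without knowing its consumers, and that one failing consumer does not take down the others.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (an illustration, not a broker)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        # Failed deliveries are parked here instead of propagating to the producer.
        self.dead_letters: list[tuple[str, dict, str]] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            try:
                handler(event)
            except Exception as exc:  # isolate the failure; keep delivering
                self.dead_letters.append((topic, event, repr(exc)))


# Usage: three consumers of the same topic, one of which is broken.
bus = EventBus()
received: list[str] = []
bus.subscribe("order.created", lambda e: received.append(f"billing:{e['id']}"))


def sync_to_crm(event: dict) -> None:
    raise ConnectionError("CRM unavailable")  # simulated downstream outage


bus.subscribe("order.created", sync_to_crm)
bus.subscribe("order.created", lambda e: received.append(f"analytics:{e['id']}"))
bus.publish("order.created", {"id": 42})
# received == ["billing:42", "analytics:42"]; the CRM failure sits in bus.dead_letters
```

With direct calls, the CRM outage would have surfaced as an error in the order service. Here it is recorded for retry, and the other consumers are unaffected. A production system would add a durable broker so that producers also never block on slow consumers.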

Learning from History: The Rise and Fall of Early Social Platforms

The trajectories of early social networks like Friendster offer instructive lessons about scalable architecture. Friendster initially attracted a massive user base but ultimately failed because its systems couldn't handle the growing demand. Performance problems drove users away, leading to the platform's demise.

Conversely, Facebook thrived not merely because of its features but because it invested in scalable infrastructure. Rather than collapsing under success, it expanded to market dominance.

Today, we face similar potential outcomes with AI agents. Like early social networks, agents will experience rapid adoption. Building capable agents isn't sufficient—the critical question is whether your underlying architecture can manage the complexity of distributed data, tool integrations, and multi-agent collaboration. Without proper foundations, agent systems risk failure similar to early social media casualties.

Why AI's Future Depends on Event-Driven Agents

The future of AI transcends building smarter agents—it requires creating systems that evolve and scale as technology advances. With AI stacks and underlying models changing rapidly, rigid designs quickly become innovation barriers. Meeting these challenges demands architectures prioritizing flexibility, adaptability, and seamless integration. EDA provides this foundation, enabling agents to thrive in dynamic environments while maintaining resilience and scalability.

Agents as Microservices with Informational Dependencies

Agents resemble microservices: autonomous, decoupled, and capable of independent task execution. However, agents extend beyond typical microservices.

While microservices typically process discrete operations, agents depend on shared, context-rich information for reasoning, decision-making, and collaboration. This creates unique requirements for managing dependencies and ensuring real-time data flows.

An agent might simultaneously access customer data from a CRM, analyze live analytics, and utilize external tools—all while sharing updates with other agents. These interactions require systems where agents operate independently while exchanging critical information seamlessly.

EDA addresses this challenge by functioning as a "central nervous system" for data. It allows agents to broadcast events asynchronously, ensuring dynamic information flow without creating rigid dependencies. This decoupling enables agents to operate autonomously while integrating effectively into broader workflows and systems.
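The following Python `asyncio` sketch shows agents exchanging an event asynchronously. The producing agent fans an event out to each subscriber's queue and moves on without waiting, and each consuming agent waits only for its own input. The agent names and event fields are hypothetical, and in-memory queues stand in for a real event broker.

```python
import asyncio


def crm_agent(outbox: list[asyncio.Queue]) -> None:
    """Publish a customer update to every subscriber queue, then move on.

    put_nowait never blocks on an unbounded queue, so the producer does not
    wait for consumers to process the event.
    """
    event = {"type": "customer.updated", "customer": "acme", "tier": "premium"}
    for q in outbox:
        q.put_nowait(event)


async def consumer_agent(name: str, inbox: asyncio.Queue, log: list[str]) -> None:
    event = await inbox.get()  # wait only for this agent's own input
    await asyncio.sleep(0)  # placeholder for real work (LLM call, tool use)
    log.append(f"{name} handled {event['type']} for {event['customer']}")
    inbox.task_done()


async def main() -> list[str]:
    log: list[str] = []
    inboxes = [asyncio.Queue(), asyncio.Queue()]
    consumers = [
        asyncio.create_task(consumer_agent("pricing-agent", inboxes[0], log)),
        asyncio.create_task(consumer_agent("support-agent", inboxes[1], log)),
    ]
    crm_agent(inboxes)
    await asyncio.gather(*consumers)
    return sorted(log)  # sorted because completion order is not guaranteed


if __name__ == "__main__":
    print(asyncio.run(main()))
```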

Maintaining Context While Decoupling Components

Building flexible systems doesn't sacrifice contextual awareness. Traditional, tightly coupled designs often bind workflows to specific pipelines or technologies, forcing teams to navigate bottlenecks and dependencies. Changes in one system area affect the entire ecosystem, impeding innovation and scaling efforts.

EDA eliminates these constraints through workflow decoupling and asynchronous communication, allowing different stack components—agents, data sources, tools, and application layers—to function independently.

In today's AI stack, MLOps teams manage pipelines like RAG, data scientists select models, and application developers build interfaces and backends. Tightly coupled designs force unnecessary interdependencies between these teams, slowing delivery and complicating adaptation as new tools emerge.

Event-driven systems ensure workflows remain loosely coupled, allowing independent innovation across teams. Application layers don't need to understand AI internals—they simply consume results when needed. This decoupling also ensures AI insights extend beyond silos, enabling agent outputs to integrate seamlessly with CRMs, CDPs, analytics tools, and other systems.
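In practice, what lets teams innovate independently is an agreed event contract. This Python sketch defines a hypothetical versioned result event. Application teams depend only on its fields, not on the model, prompt, or retrieval pipeline that produced it, and consumers ignore fields they don't recognize, so producers can add data without breaking anyone. The class and field names are illustrative, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class AgentResultEvent:
    """Hypothetical contract between the AI team (producer) and app teams (consumers)."""

    schema_version: int
    agent: str
    customer_id: str
    recommendation: str
    confidence: float

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentResultEvent":
        data = json.loads(raw)
        names = {f.name for f in fields(cls)}
        # Drop fields this consumer doesn't know about, so producers can add
        # new fields without breaking existing consumers.
        return cls(**{k: v for k, v in data.items() if k in names})


# Usage: a newer producer adds an extra field; an older consumer still parses the event.
raw = json.dumps({
    "schema_version": 1, "agent": "retention-agent", "customer_id": "c-123",
    "recommendation": "offer annual plan", "confidence": 0.82,
    "internal_trace_id": "xyz",  # unknown to this consumer, safely ignored
})
event = AgentResultEvent.from_json(raw)
```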

Scaling Agents with Event-Driven Architecture

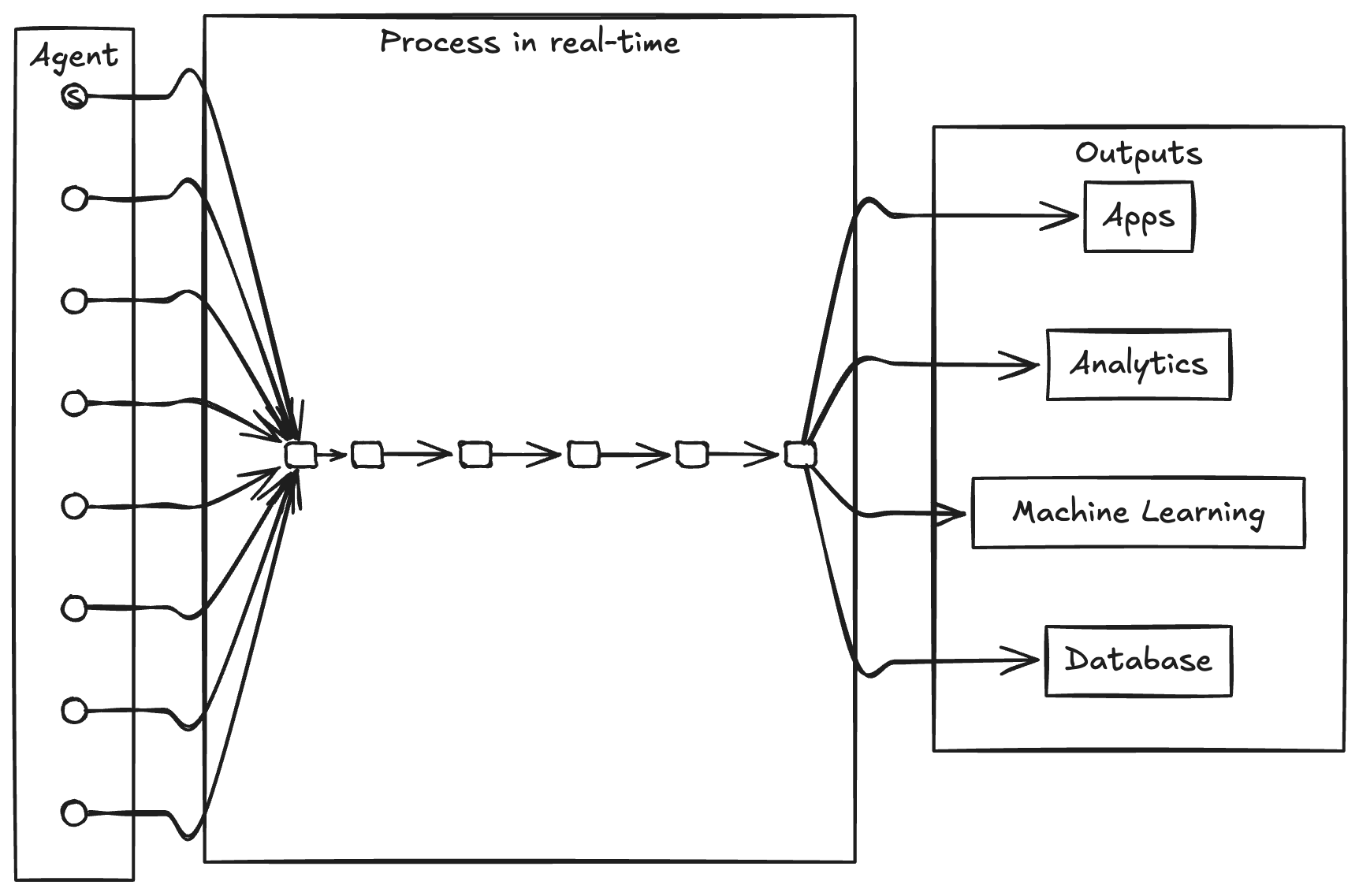

EDA forms the backbone for the transition to agentic systems: by decoupling workflows while enabling real-time communication, it lets agents operate efficiently at scale. Platforms like Apache Kafka illustrate the advantages of EDA in agent-driven systems:

- Horizontal Scalability: Kafka splits topics into partitions and balances them across the consumers in a consumer group, so new agents or consumers can be added as load grows without a central bottleneck

- Low Latency: Events reach consumers in near real time, so agents can react to changes quickly

- Loose Coupling: Agents communicate through Kafka topics rather than direct calls, so producers and consumers remain independent and can scale separately

- Event Persistence: Kafka retains events in replicated, durable logs for a configurable period, so data survives consumer failures and can be replayed, which is critical for high-reliability workflows
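The retention, partitioning, and consumer-group ideas in the list above can be modeled in a few lines. This Python sketch is a toy in-memory model, not Kafka's API. `ToyTopic` and its methods are hypothetical, and `zlib.crc32` stands in for Kafka's default murmur2-based partitioner. Events are kept after being read, each consumer group tracks its own offsets, and events with the same key land in the same partition in order.

```python
import zlib
from collections import defaultdict


class ToyTopic:
    """In-memory model of a partitioned, append-only topic log."""

    def __init__(self, partitions: int = 3) -> None:
        self.logs: list[list[tuple[str, str]]] = [[] for _ in range(partitions)]
        # offsets[group][partition] = index of the next event that group will read
        self.offsets: dict[str, dict[int, int]] = defaultdict(lambda: defaultdict(int))

    def produce(self, key: str, value: str) -> int:
        # Same key -> same partition, which preserves per-key ordering.
        partition = zlib.crc32(key.encode()) % len(self.logs)
        self.logs[partition].append((key, value))
        return partition

    def poll(self, group: str) -> list[tuple[str, str]]:
        """Return every unread event for this group and advance its offsets."""
        batch = []
        for p, log in enumerate(self.logs):
            start = self.offsets[group][p]
            batch.extend(log[start:])
            self.offsets[group][p] = len(log)
        return batch

    def rewind(self, group: str) -> None:
        """Reset a group's offsets to replay retained history."""
        self.offsets[group].clear()
```

Because reading does not delete events, a second consumer group (for example, a CRM sync agent) sees the full history independently, and a group that shipped a bug can rewind and reprocess.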

Data streaming enables continuous information flow throughout an organization. Acting as a central nervous system, a streaming platform provides a unified backbone for real-time data, connecting disparate systems, applications, and data sources so agents can communicate and make decisions efficiently.

This architecture aligns naturally with frameworks like Anthropic's Model Context Protocol (MCP), which provides a universal standard for integrating AI systems with external tools, data sources, and applications. By simplifying these connections, MCP reduces development effort while enabling context-aware decision-making.

EDA addresses many challenges that MCP aims to solve, including seamless access to diverse data sources, real-time responsiveness, and scalability for complex multi-agent workflows. By decoupling systems and enabling asynchronous communication, EDA simplifies integration and ensures agents can consume and produce events without rigid dependencies.
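To show how the two can complement each other, here is a hedged Python sketch of a bridge that turns an event into an MCP tool call and turns the tool's result back into an event. The JSON-RPC 2.0 envelope, the `tools/call` method with `name`/`arguments` params, and the text `content` items in the result follow our reading of the MCP specification. The tool name `lookup_order`, the event fields, and the function names are hypothetical, and the transport (stdio or HTTP) is omitted.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids


def event_to_mcp_request(event: dict) -> str:
    """Translate a domain event into an MCP `tools/call` JSON-RPC 2.0 request.

    A real bridge would discover available tools via `tools/list` first.
    """
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {
            "name": "lookup_order",  # hypothetical tool exposed by an MCP server
            "arguments": {"order_id": event["order_id"]},
        },
    }
    return json.dumps(request)


def mcp_response_to_event(raw_response: str, source_event: dict) -> dict:
    """Wrap the tool's text content as a new event other agents can consume."""
    result = json.loads(raw_response)["result"]
    texts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
    return {
        "type": "order.enriched",  # hypothetical event type
        "order_id": source_event["order_id"],
        "details": "\n".join(texts),
        "tool_error": result.get("isError", False),
    }
```

In this arrangement, MCP standardizes *how* an agent calls a tool, while the event stream decides *who* hears about the result.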

Conclusion: Event-Driven Agents Will Define AI's Future

The AI landscape continues to evolve rapidly, and architectures must adapt accordingly. Businesses appear ready for this transition: a Forum Ventures survey found 48% of senior IT leaders prepared to integrate AI agents into operations, with 33% indicating they're very prepared. This suggests strong demand for systems capable of scaling and managing complexity.

EDA represents the key to building agent systems that combine flexibility, resilience, and scalability. It decouples components, enables real-time workflows, and ensures agents integrate seamlessly into broader ecosystems.

Organizations adopting EDA won't merely survive—they'll gain competitive advantages in this new wave of AI innovation. Those who fail to embrace this approach risk becoming casualties of their inability to scale, much like the early social platforms that collapsed under their own success. As AI agents become increasingly central to enterprise operations, the foundations we build today will determine which systems thrive tomorrow.

Written by

Spheron Network

On-demand DePIN for GPU Compute