"LLMs Can't Reason"

Arshan Dabirsiaghi

The top post on HN right now (well, yesterday) is about speaking more directly in the age of LLM "fluff". I have nothing to say about the piece’s main points. But a sentence caught my eye, one that never seems to receive any pushback:

While it's true that LLMs are unable to reason...

Where does the resistance to acknowledging the reasoning capability of LLMs come from? I assume some people actually feel threatened, but I’m sure they are the minority. Others may have a reflexive "anti-hype" bias. Maybe others are trying to find a way to “close the door behind us” on human vs. machine intelligence by subtly redefining the word to mean human reasoning capability. If you had shown anyone, 5 years ago, the incredible things that LLMs can do today, I don’t think they would have denied that LLMs can reason. By the definition, it’s open-and-shut:

the power of comprehending, inferring, or thinking especially in orderly rational ways

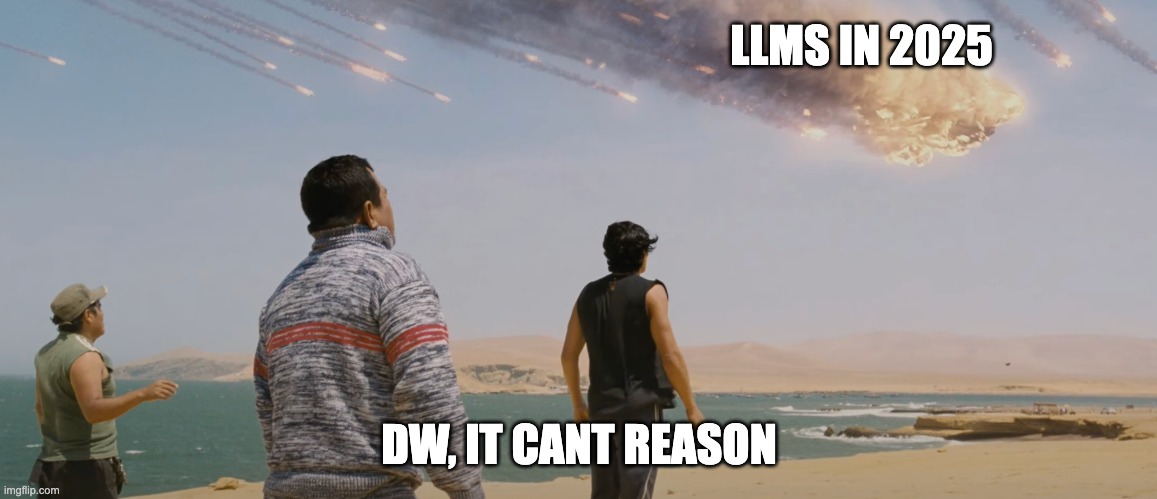

It’s very Don’t Look Up to deny their reasoning capabilities outright, and way more productive to debate their limits. If you watch Cursor debug its own unit tests using a creative mix of system commands, custom scripts, and inserted print statements, are we really debating whether some level of reasoning can be encoded into weights?

Only when you reframe the statement as being about their weaknesses today, versus where we can all now imagine they can go, does it stop feeling starkly ignorant.

How reasoning ability is encoded seems like an implementation detail (weights vs. neurons, say). And, I think, many people would be uncomfortable knowing how much of our human stimulus response is based on the same types of “weights” encoded into our genes and memory. We tend to “skip” reasoning steps, too. Much of our “intelligence” is pattern recognition. We also happen to hallucinate all the time, and our memory is incredibly unreliable.

I think people think about the future of LLMs wrong. It’s very possible that LeCun’s arguments that LLMs are the wrong model to get to AGI are correct. But imagine we built a system where today’s SOTA LLMs are the primitives, at a scale where the LLMs are as small as transistors, each complete with its own multi-modal tools and memory. It seems to me that a very powerful intelligence, capable of reasoning beyond human capacity, is not only possible but inevitable.

Such a system would be orders of magnitude too slow and expensive in today’s world, but until LeCun gives us a better architecture, the industry will keep happily plodding along that path, with each organization contributing their own agents^M^M^M transistors adding more, more layered, and more effective reasoning.

Written by

Arshan Dabirsiaghi

CTO @ Pixee.ai (@pixeebot on GitHub), ex Chief Scientist @ Contrast Security. Security researcher pretending to be a software executive.