Integrate Gemini, Claude, DeepSeek into Agents SDK by OpenAI

Mrunmay Shelar


OpenAI recently introduced the Agents SDK, a lightweight, Python-first toolkit for building agentic AI apps. It’s built around three primitives:

Agents: LLMs paired with tools and instructions to complete tasks autonomously.

Handoffs: Let agents delegate tasks to other agents.

Guardrails: Validate inputs/outputs to keep workflows safe and reliable.

TL;DR

OpenAI’s Agents SDK is great for building tool-using agents with handoffs. But what if you want to use Gemini's latest reasoning model or take advantage of DeepSeek’s 90 percent lower cost?

With LangDB, you can run the same agent logic across more than 350 models, including Claude, Gemini, DeepSeek, and Grok, without changing your code. It works out of the box with frameworks like CrewAI and LangChain, and provides full trace visibility into every agent, model, and tool call.

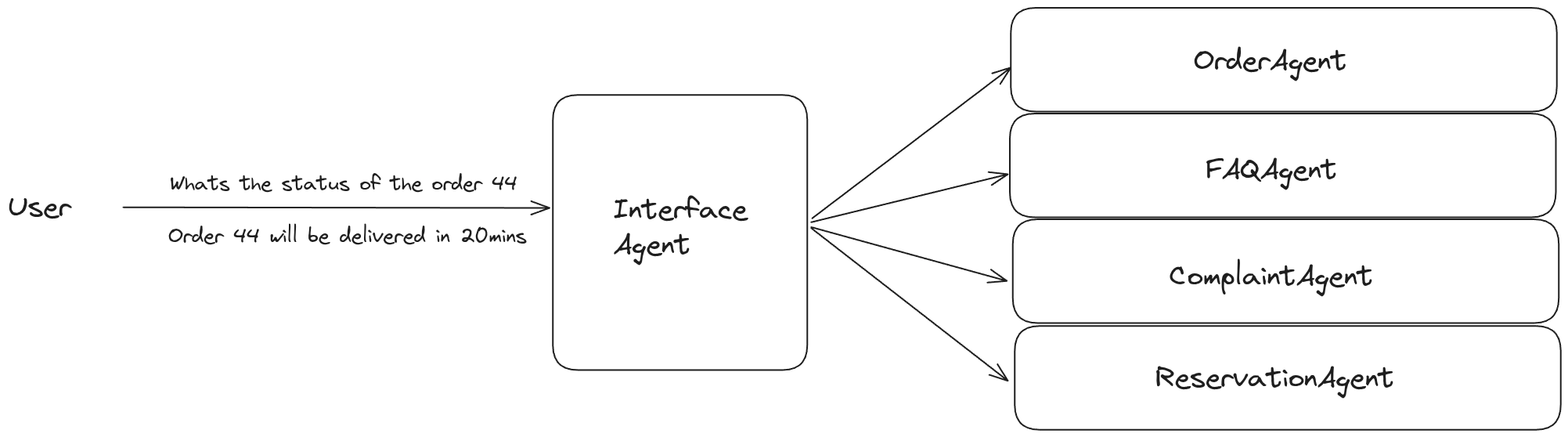

Building a Multi-Agent Customer Support System

Let’s create a production-grade AI agent system using the OpenAI Agents SDK, with LangDB providing multi-model flexibility and runtime control.

We’ll build four specialized agents, each powered by a different model to demonstrate LangDB's multi-model capabilities:

OrderAgent (claude-3.7-sonnet): checks order status

FAQAgent (gemini-2.5-pro): answers common customer questions

ComplaintAgent (grok-2): handles complaints with empathy

ReservationAgent (gpt-4o): manages table bookings

Then, we use a Classifier Agent to route user queries to the appropriate sub-agent using the SDK's built-in handoffs.

Overview

Each leaf agent is powered by a tool: a simple Python function decorated with @function_tool.

Example: Tool for Order Status

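```python
from agents import function_tool

@function_tool
def check_order_status(order_id: str) -> str:
    """Look up the current status of an order by its ID."""
    # Demo data; a real system would query an order database.
    order_statuses = {
        "12345": "Being prepared.",
        "67890": "Dispatched.",
        "11121": "Still processing.",
    }
    return order_statuses.get(order_id, "Order ID not found.")
```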

Defining the agent:

```python
from agents import Agent

order_agent = Agent(
    name="OrderAgent",
    model="anthropic/claude-3.7-sonnet",  # model ID as exposed by LangDB
    instructions="Help customers with their order status.",
    tools=[check_order_status],
)
```

Each of the other agents follows the same structure, varying only in tools, instructions, and model selection.

Routing User Queries with Handoffs

```python
classifier_agent = Agent(
    name="User Interface Agent",
    model="openai/gpt-4o-mini",
    instructions="You are a restaurant customer support agent. Handoff to the appropriate agent based on the user query.",
    handoffs=[order_agent, faq_agent, complaint_agent, reservation_agent],
)
```

This agent functions as a controller, deciding which specialized agent should handle the user's request.

Running Multi-Model Agents with LangDB

To run the agents through LangDB and switch between different providers, first configure the OpenAI-compatible client like this:

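```python
from uuid import uuid4

from agents import set_default_openai_client
from openai import AsyncOpenAI

# Placeholders: replace with your own LangDB API key and project ID.
LANGDB_API_KEY = "your-langdb-api-key"
LANGDB_PROJECT_ID = "your-langdb-project-id"

client = AsyncOpenAI(
    api_key=LANGDB_API_KEY,
    base_url=f"https://api.us-east-1.langdb.ai/{LANGDB_PROJECT_ID}/v1",
    # Thread and run IDs let LangDB group related calls in its trace view.
    default_headers={"x-thread-id": str(uuid4()), "x-run-id": str(uuid4())},
)

# Send all agent model calls through LangDB; don't use this client for OpenAI tracing.
set_default_openai_client(client, use_for_tracing=False)
```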

LangDB allows you to test your agents using models such as GPT, Claude, Gemini, or Grok, while keeping the agent logic unchanged.

To switch models, change the model string on the agent:

```python
faq_agent = Agent(
    name="FAQAgent",
    model="gemini/gemini-2.5-pro-exp-03-25",  # or "anthropic/claude-3.7-sonnet", "openai/gpt-4o"
    instructions="Answer common customer questions about hours, menu, and location.",
    # ...remaining arguments (e.g. tools) unchanged
)
```

This approach enables you to evaluate multiple providers and optimize for quality, cost, or latency.

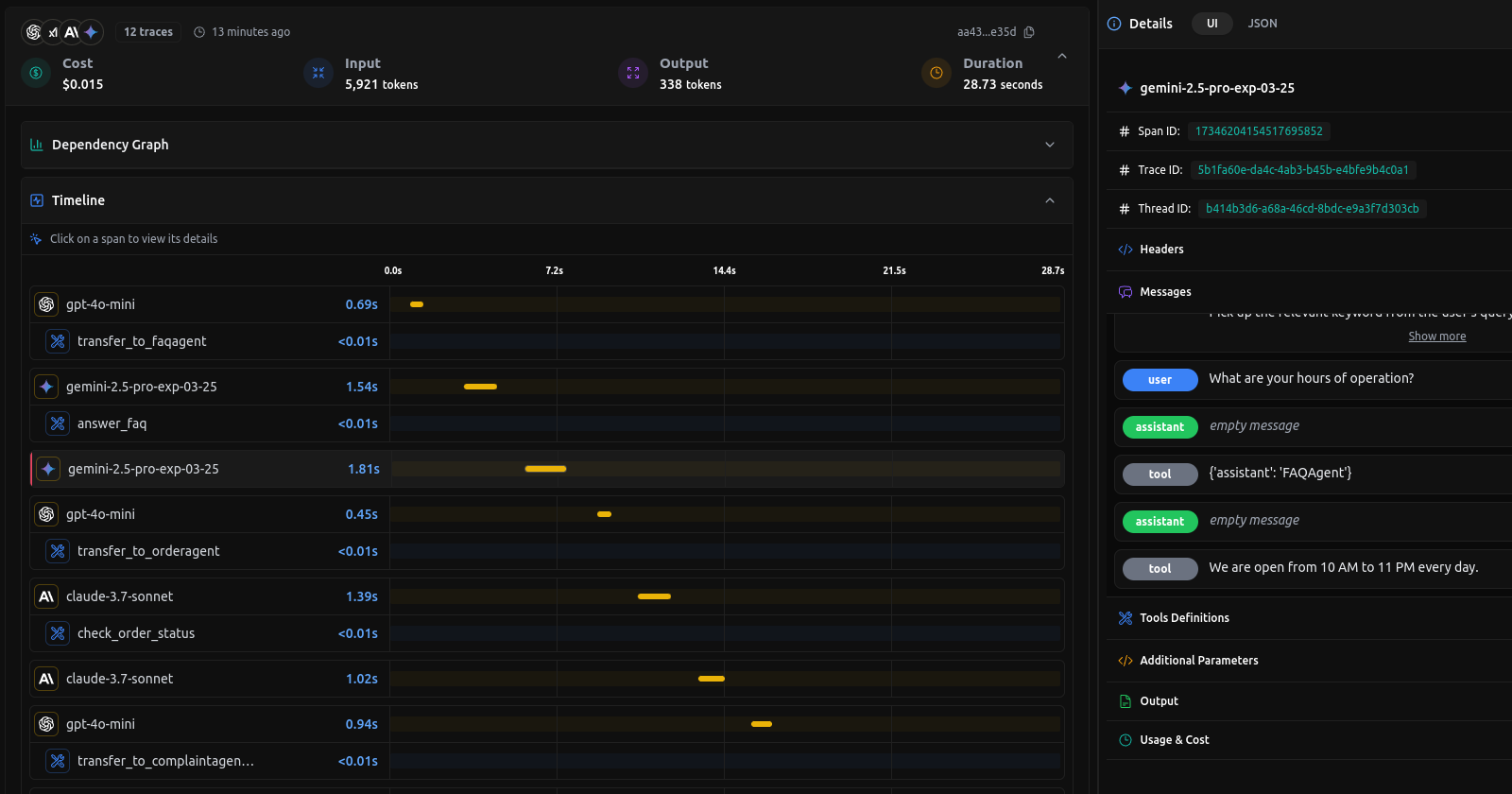

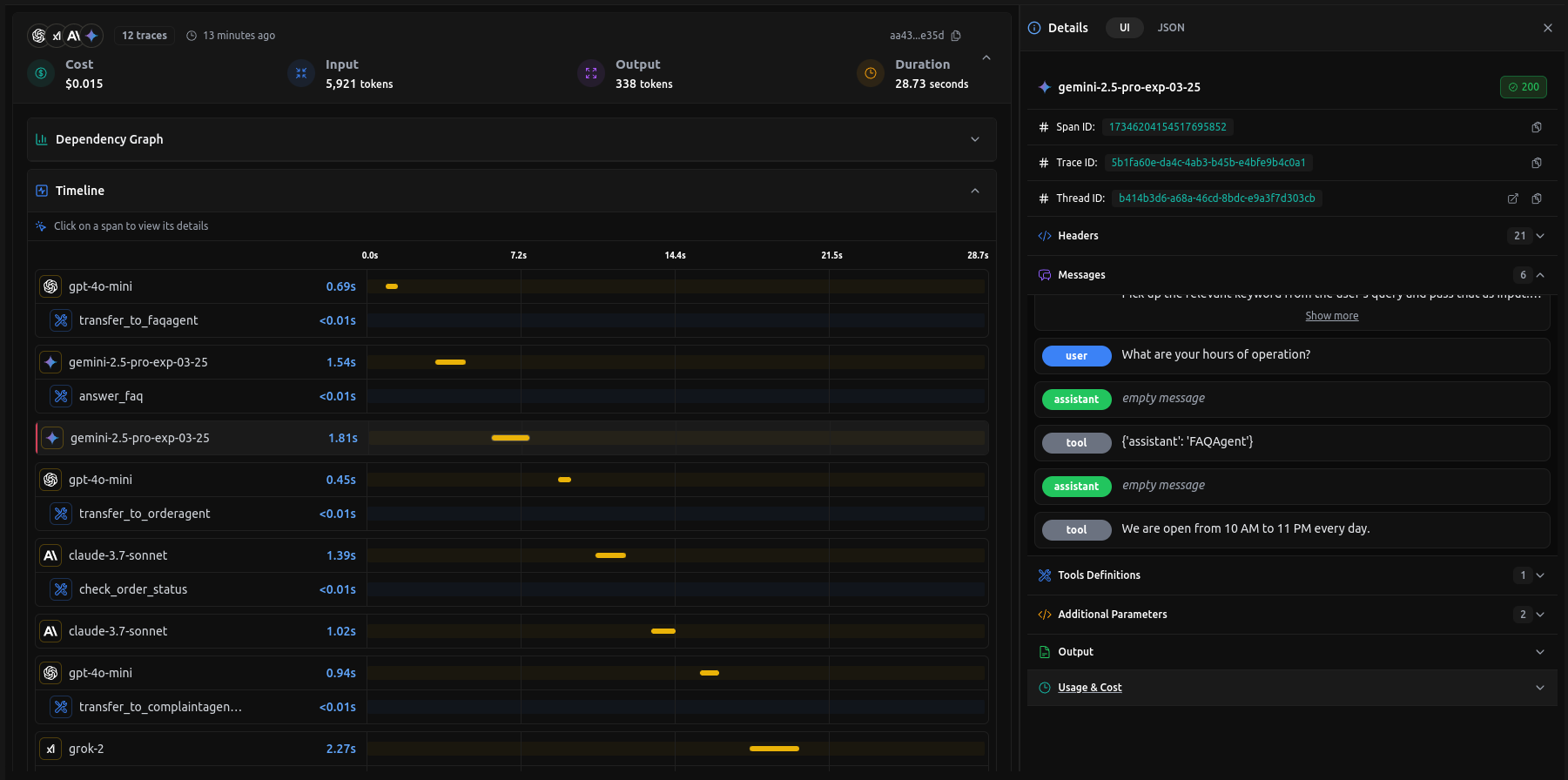

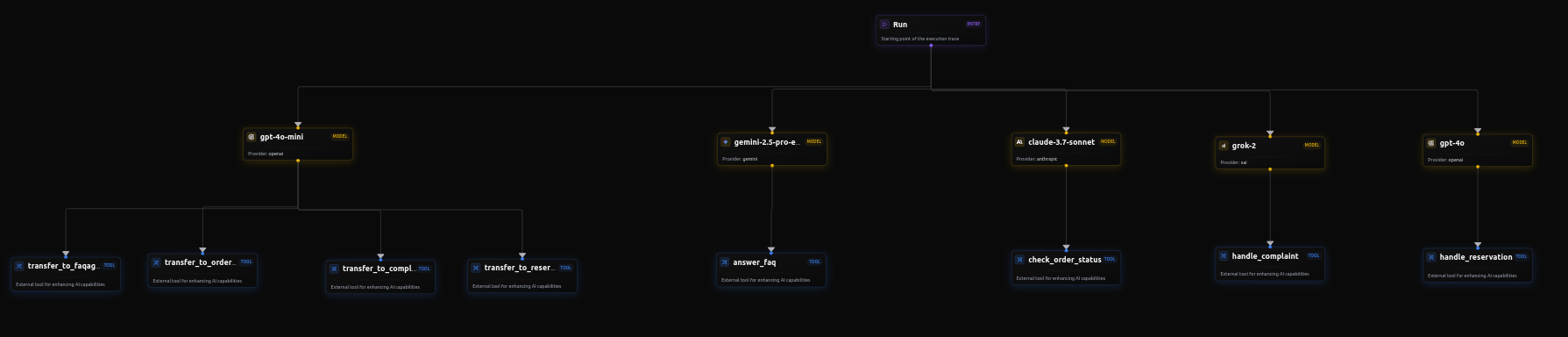

Observing Agent Execution

LangDB provides a unified trace view that helps developers inspect agent flows across different models. Each span in the trace shows the model used, any tools called, and the time taken at each step.

In the example above, you can see:

Multiple agents running on different models in a single request

Visual breakdown of agent handoffs and tool calls

Timeline and cost details for each model involved

This trace graph shows a single user query routed through multiple agents, each using a different model. It captures the classifier decision, tool calls, and model usage in one place, making it easy to inspect and debug the full interaction flow.

This visibility helps with debugging behavior, verifying tool usage, and understanding model performance across complex workflows.

Why This Matters

In real-world applications, different models can excel depending on the type of task or interaction style required:

Some are better suited for fast, low-latency tasks

Others handle nuanced, empathetic, or creative responses well

Certain models are optimized for summarization or structured formatting

Others provide strong performance for general-purpose conversations

LangDB lets you assign the most suitable model to each agent, giving you task-specific control while maintaining a unified development experience.

Conclusion

The OpenAI Agents SDK provides a clean way to define agent workflows. Paired with LangDB, it becomes possible to run the same agent setup across multiple model providers without changing your application code.

LangDB gives you visibility into agent execution through trace views and lets you switch between over 350 supported models using a consistent interface. This makes it easier to compare performance, debug behavior, and adapt to evolving requirements.

Try It Yourself

To explore this setup, check out the sample project in the repository. It contains the necessary code to run agents locally, modify model configurations, and observe how requests are routed across different models using LangDB.

Visit LangDB and sign up to get $10 in credit.

View the sample code: GitHub Repository.
