5. Understanding Loss Functions in Neural Networks

Muhammad Fahad Bashir

Welcome! In this post, we will explore loss functions in neural networks. Previously in our series, Deep Learning Essential, we covered an example of logistic regression to help understand the concepts.

What is a Loss Function?

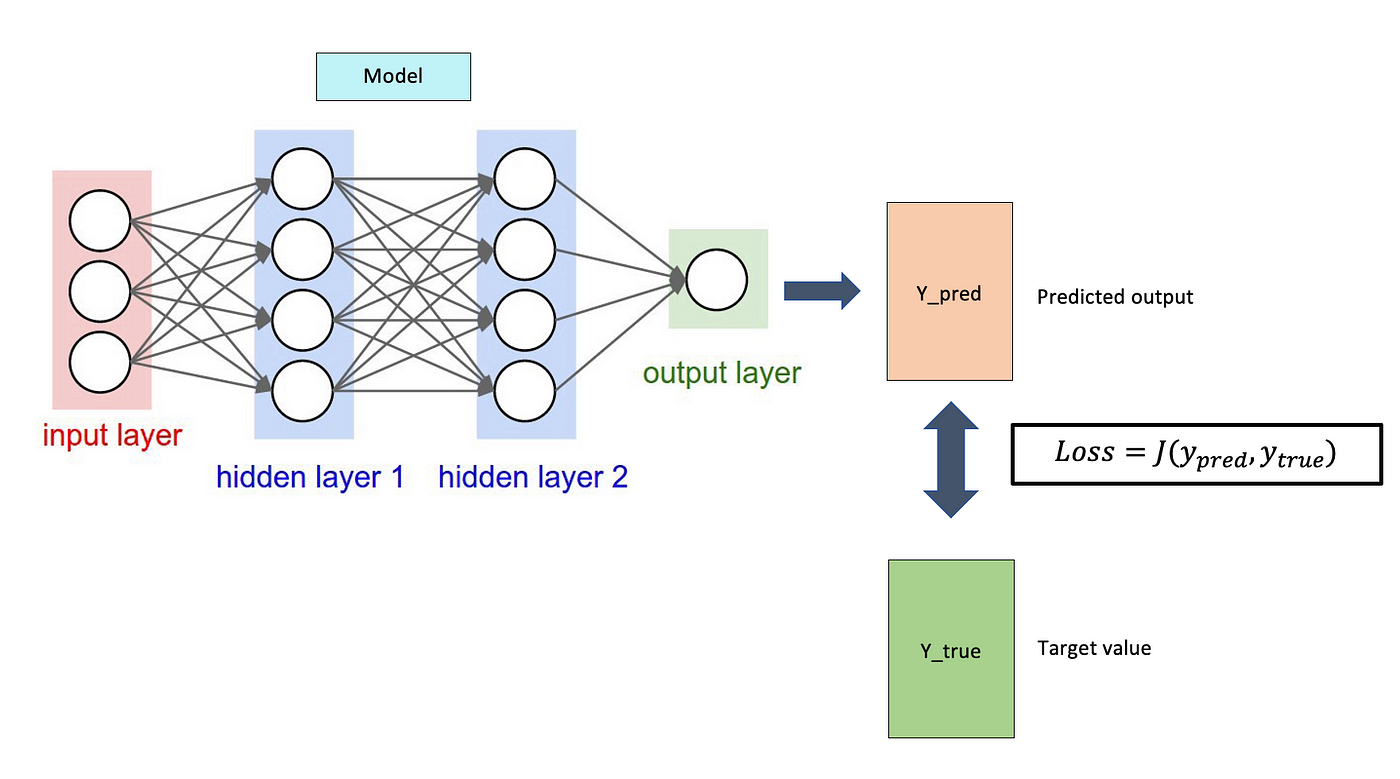

A loss function measures the difference between the actual value (y) and the predicted value (ŷ) of a neural network. The goal of training a neural network is to minimize this difference so that the model can make accurate predictions.

Example

If ŷ is close to y, the loss is close to 0.

If ŷ is far from y, the loss is large.

🔹 Key Takeaway: The loss function tells us how far our predictions are from the actual values.

What is Training?

Training a neural network means teaching it to make correct predictions. Imagine you have many pictures of cats and dogs. You want the network to tell you if a new picture is a cat or a dog.

To do this, the network uses weights and biases. These are numbers that help the network make its predictions. Think of weights as how important each pixel in the picture is. Bias is like a starting point.

The goal of training is to find the right weights and biases. When the network sees a cat picture, it should say "cat" with high confidence. If it sees a dog picture, it should say "dog."

Parameters: Weights and Biases

In neural networks, weights and biases are called parameters. When we train the network, we adjust these parameters. We want the network's prediction (y-hat) to be as close as possible to the real answer (y).

y-hat: The network's guess.

y: The correct answer.

If y-hat is close to y, the network is doing a good job.
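To make the role of the parameters concrete, here is a minimal sketch of how one prediction could be computed from weights and a bias. It assumes a single logistic-regression-style unit with a sigmoid output, which this post does not spell out; the names `sigmoid` and `predict` and all numbers are made up for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    """Return y-hat for one sample: sigmoid of the weighted sum plus the bias."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

# Hypothetical sample with three features and made-up parameters.
x = [0.5, -1.2, 3.0]
weights = [0.8, 0.1, -0.4]
bias = 0.2

y_hat = predict(x, weights, bias)
print(f"y-hat = {y_hat:.3f}")
```

Changing any entry of `weights` or `bias` changes `y_hat`, which is exactly what training adjusts.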

How Loss Functions Work

The network makes a prediction (y-hat).

The loss function compares y-hat to the real answer (y).

The loss function gives a number that shows how big the error is.

The network uses this number to change its weights and biases.

The network makes a new prediction, and the process repeats.

We want the loss function to be as small as possible. This means the network's predictions are close to the real answers.

Types of Loss Functions

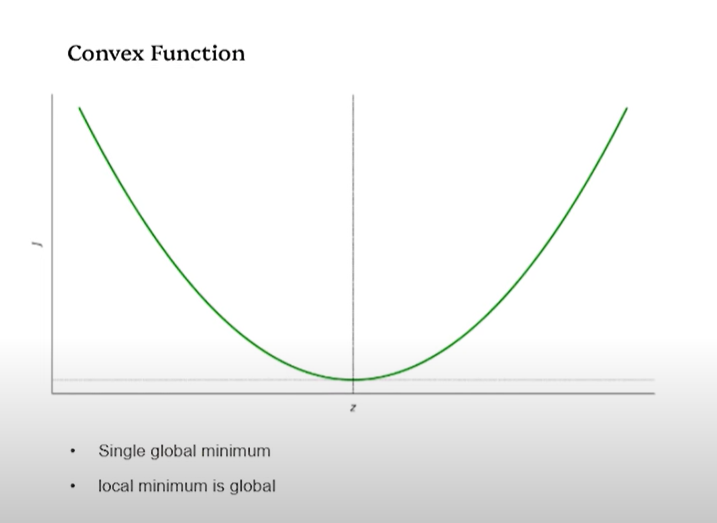

Convex Functions: A Special Type of Loss Function

When choosing a loss function, we often use convex functions. A convex function is shaped like a bowl: it has no separate local dips, so any lowest point it reaches is the global optimum.

Why do we like convex functions? Because they make it easier to train the network. With a convex function, the network can find the best weights and biases without getting stuck.
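As a rough illustration of what "convex" means in practice, the sketch below numerically spot-checks the midpoint property (the value at the midpoint of two points never exceeds the average of the values at those points). It assumes a one-weight, no-bias logistic model and a made-up data set; `cost_for_weight` is a hypothetical helper, and a grid check like this is a sanity test, not a proof.

```python
import math

def cost_for_weight(w):
    """Average negative log loss of a one-weight, no-bias logistic model."""
    # Made-up 1-D data set of (feature, label) pairs.
    data = [(-2.0, 0), (-1.0, 0), (0.5, 1), (1.5, 1), (-0.5, 1)]
    total = 0.0
    for x, y in data:
        y_hat = 1.0 / (1.0 + math.exp(-w * x))
        total += -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))
    return total / len(data)

# Candidate weights from -5.0 to 5.0 in steps of 0.5.
grid = [i * 0.5 for i in range(-10, 11)]

# Midpoint check for every pair of grid points (small tolerance for rounding).
is_convex_on_grid = all(
    cost_for_weight((a + b) / 2)
    <= (cost_for_weight(a) + cost_for_weight(b)) / 2 + 1e-12
    for a in grid
    for b in grid
)
print(is_convex_on_grid)
```

Note that this holds for the logistic-regression setting used here; the cost of a network with hidden layers is generally not convex in its weights.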

Negative Log Loss: A Common Choice

A common loss function in logistic regression is negative log loss. It is also called binary cross-entropy loss.

Negative log loss tells us the distance between y-hat and y. If y-hat is close to y, the loss is small. If y-hat is far from y, the loss is big.

The formula for negative log loss looks like this:

- [y * log(y-hat) + (1 - y) * log(1 - y-hat)]

This formula might look scary, but it's not too bad. Let's break it down with examples.

Example 1: y = 0, y-hat = 0

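If the real answer is 0, and the network predicts 0, the loss is 0: the first term vanishes because y = 0 (treating 0 * log(0) as 0), and the second term is log(1 - 0) = log(1) = 0. This is good! The network is correct.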

Example 2: y = 1, y-hat = 0.1

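If the real answer is 1, but the network predicts 0.1, the loss is -log(0.1) ≈ 2.30 (using the natural logarithm), which is much higher. This tells us the network needs to improve. The negative log loss penalizes confident wrong predictions especially heavily: as y-hat moves toward 0 while y = 1, the loss grows without bound.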
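The two examples above can be reproduced with a short sketch of the formula. The function name `binary_cross_entropy` and the small `eps` clipping value are assumptions added here so that `log()` never receives exactly 0; they are not part of the formula itself.

```python
import math

def binary_cross_entropy(y, y_hat, eps=1e-12):
    """Negative log loss for one sample; y is 0 or 1, y_hat is in [0, 1]."""
    # Clip y_hat away from exactly 0 and 1 so log() never receives 0.
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

loss_correct = binary_cross_entropy(0, 0.0)  # Example 1: y = 0, y-hat = 0
loss_wrong = binary_cross_entropy(1, 0.1)    # Example 2: y = 1, y-hat = 0.1
loss_close = binary_cross_entropy(1, 0.9)    # extra case for comparison

print(f"{loss_correct:.4f} {loss_wrong:.4f} {loss_close:.4f}")
# prints: 0.0000 2.3026 0.1054
```

The extra case shows the other direction: when y = 1 and y-hat is 0.9, the loss is only about 0.11.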

Cost Function: Average Loss

We use the loss function to measure the error for one sample. What if we have many samples? We need to find the average loss over all the samples. This average loss is called the cost function.

The cost function tells us how well our network is doing on average. We want the cost function to be as small as possible.

To calculate the cost function:

Calculate the loss for each sample.

Add up all the losses.

Divide by the number of samples.
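The three steps above can be sketched as follows. The labels and predictions are made up, and `binary_cross_entropy` and `cost` are illustrative names, with the negative log loss standing in as the per-sample loss.

```python
import math

def binary_cross_entropy(y, y_hat, eps=1e-12):
    """Negative log loss for one sample, clipped to avoid log(0)."""
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

def cost(y_true, y_pred):
    """Average the per-sample losses over all samples."""
    # Step 1: calculate the loss for each sample.
    losses = [binary_cross_entropy(y, p) for y, p in zip(y_true, y_pred)]
    # Steps 2 and 3: add up the losses and divide by the number of samples.
    return sum(losses) / len(losses)

# Made-up labels and predictions for four samples.
y_true = [1, 0, 1, 0]
y_pred = [0.9, 0.2, 0.6, 0.4]

average_loss = cost(y_true, y_pred)
print(f"cost = {average_loss:.4f}")
```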

The cost function depends on the weights and biases. By changing the weights and biases, we can change the value of the cost function. Our goal is to find the weights and biases that make the cost function as small as possible.

Improving Weights and Biases

So far, we've talked about how to measure the error of our network. But how do we actually improve the weights and biases? In logistic regression and neural networks, the weights (W) and bias (B) are the parameters that the model learns during training.

The goal is to adjust W and B so that ŷ is as close as possible to y for all training examples.

Process

Start with random values for W and B.

Calculate the loss and cost for the current parameters.

Use an optimization algorithm (e.g., gradient descent) to update W and B.

Gradient descent is one such algorithm. It helps us find the best weights and biases step by step: each step nudges W and B in the direction that lowers the cost.
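The process above can be sketched for a tiny logistic regression with one weight and one bias. Everything specific here is an assumption for illustration: the made-up data, the learning rate of 0.1, the 500 steps, and the gradient formulas, which are the standard ones for a sigmoid output combined with negative log loss.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost(w, b, xs, ys):
    """Average negative log loss over the training set."""
    total = 0.0
    for x, y in zip(xs, ys):
        y_hat = min(max(sigmoid(w * x + b), 1e-12), 1.0 - 1e-12)
        total += -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))
    return total / len(xs)

# Made-up 1-D training set: larger x tends to mean label 1.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]

# Start with random values for W and B.
random.seed(0)
w, b = random.uniform(-1, 1), random.uniform(-1, 1)
learning_rate = 0.1
initial_cost = cost(w, b, xs, ys)

for step in range(500):
    # Gradients of the cost for a sigmoid output:
    # dJ/dw = mean((y_hat - y) * x), dJ/db = mean(y_hat - y)
    errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
    grad_w = sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = sum(errors) / len(xs)
    # Move each parameter a small step against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_cost = cost(w, b, xs, ys)
print(f"cost: {initial_cost:.4f} -> {final_cost:.4f}")
```

The cost after training should be lower than at the random starting point. It does not reach 0 on this data, because two of the samples overlap and no single weight and bias can classify all six correctly.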

Conclusion

Loss functions are very important for training neural networks. They tell us how wrong our network is. We use this information to improve the network's predictions. By choosing the right loss function and using algorithms like gradient descent, we can train networks to solve complex problems.

Ready to dive deeper? Continue exploring the world of neural networks in the Deep Learning Essential series.
