RAG Chatbot Creation: Simple Guide for Beginners

Abdullah Farhan


Hello everyone, as I explore the world of AI, I've noticed that beginners, myself included, often struggle with taking the first steps. So, I decided to put together a quick guide on how to set up a chatbot and input the data you need.

Tech Stack

Docker

Python

Next.js

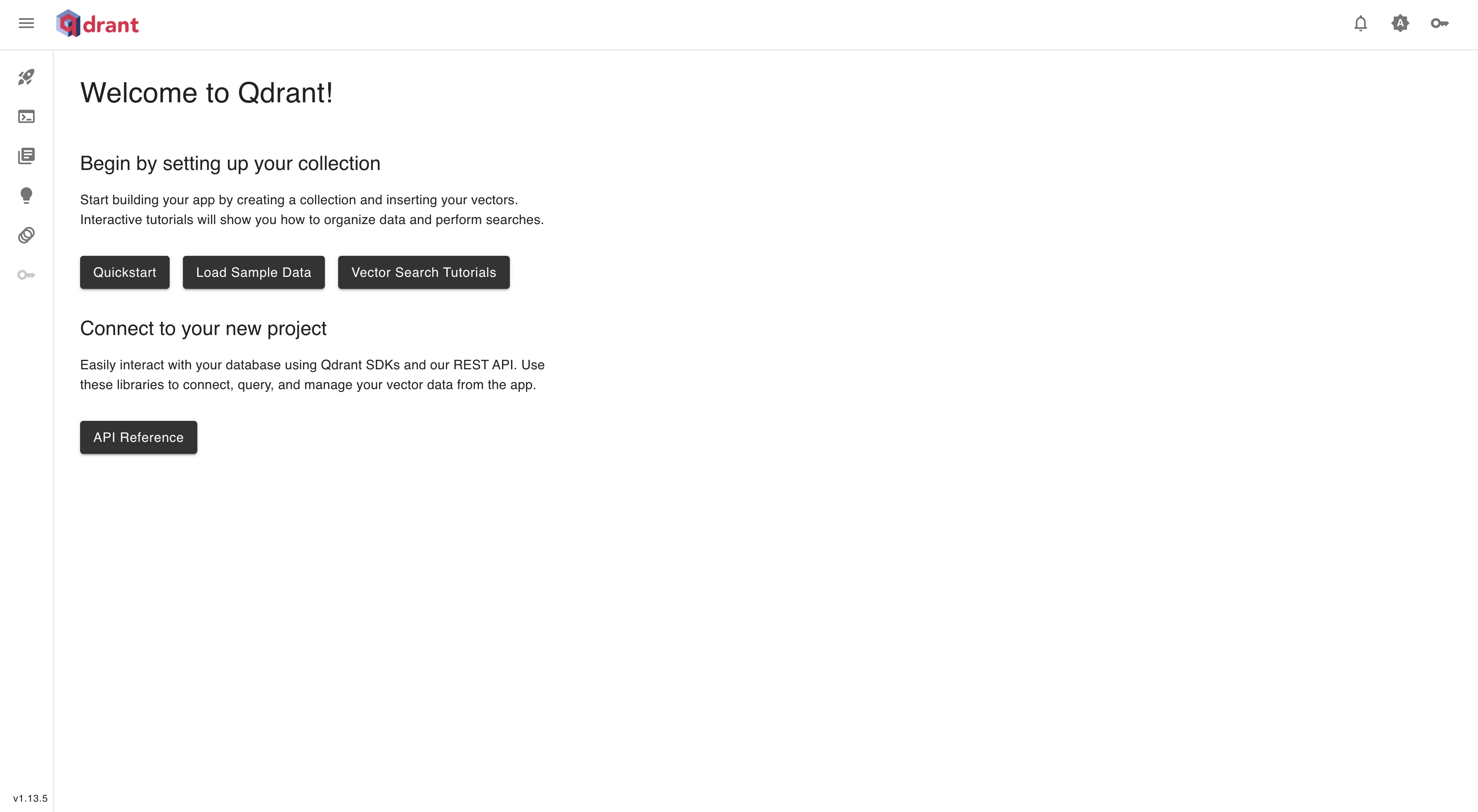

Step 1 → Initializing Qdrant

First, let's understand what Qdrant is. Qdrant is an open-source vector database mainly used for storing and searching high-dimensional vectors. It's perfect for AI tasks like recommendation systems, semantic search, and LLM-based apps. Plus, it has a Docker image, so you can set it up very easily.

Why Use Qdrant?

Fast Vector Search – Finds similar items in massive datasets.

Hybrid Search – Mixes text-based and vector-based search.

Scalable & Distributed – Handles large collections by sharding them across nodes.

Easy API Integration – Supports REST & gRPC.

Docker-Friendly – Just one command to get it running.

Spinning up Qdrant

To start a Qdrant container, run the official Docker image:

docker run -p 6333:6333 qdrant/qdrant

The Qdrant web UI will then be available at → http://localhost:6333/dashboard#/welcome
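Before moving on, it can help to confirm from Python that the container is reachable. The sketch below uses only the standard library and simply checks whether something answers HTTP requests on the mapped port. The function name `qdrant_is_up` is made up for this guide and is not part of any Qdrant library.

```python
import urllib.error
import urllib.request


def qdrant_is_up(base_url: str = "http://localhost:6333", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure and similar problems
        return False


print("Qdrant reachable:", qdrant_is_up())
```

If this prints `False`, check that the `docker run` command is still running and that port 6333 is not blocked.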

Step 2 → Loading vector database

First, let's understand what a vector database is. A vector database is a special type of database that stores and searches high-dimensional vectors instead of traditional rows and columns like MySQL or PostgreSQL.

What is a Vector?

A vector is just a bunch of numbers representing something in a mathematical way. In AI, everything (text, images, audio, videos) can be converted into vectors using embeddings. These vectors capture the meaning or features of the data.

For example:

A word like "dog" might be stored as [0.23, 0.67, 0.89, 0.12].

An image of a cat could have a vector like [0.56, 0.78, 0.34, 0.91].

If you search for "pet", the database finds vectors close to dog and cat.
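To make "close" concrete, here is a minimal sketch of cosine similarity, one common way to compare vectors. The `dog` and `cat` vectors come from the example above. The `pet_query` and `car` vectors are invented purely for illustration and do not come from a real model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Vectors from the example above
dog = [0.23, 0.67, 0.89, 0.12]
cat = [0.56, 0.78, 0.34, 0.91]

# Hypothetical vectors, invented for illustration only
pet_query = [0.40, 0.70, 0.60, 0.50]
car = [0.90, -0.10, -0.50, 0.05]

for name, vector in [("dog", dog), ("cat", cat), ("car", car)]:
    print(name, round(cosine_similarity(pet_query, vector), 3))
```

With these made-up numbers, "dog" and "cat" score much higher against the query than "car", which is the behavior a vector database relies on when it ranks results.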

To load data, we will use a random dataset themed on songs (generated using ChatGPT). You can use your own dataset if you prefer.

[

{

"id": 1,

"title": "Blinding Lights",

"artist": "The Weeknd",

"album": "After Hours",

"genre": "Pop",

"language": "English",

"release_year": 2019,

"lyrics_snippet": "I said, ooh, I'm blinded by the lights",

"description": "A retro-style synthwave song about longing for love in a city at night.",

"mood": "Energetic",

"theme": "Love & Nightlife",

"instrumentation": ["Synthesizers", "Drum Machine", "Bass"],

"vibe": "Uplifting",

"tempo": 171,

"popularity": 95

},

{

"id": 2,

"title": "Bohemian Rhapsody",

"artist": "Queen",

"album": "A Night at the Opera",

"genre": "Rock",

"language": "English",

"release_year": 1975,

"lyrics_snippet": "Is this the real life? Is this just fantasy?",

"description": "An operatic rock masterpiece that tells a dramatic and mysterious story.",

"mood": "Dramatic",

"theme": "Life & Fate",

"instrumentation": ["Piano", "Electric Guitar", "Drums"],

"vibe": "Epic",

"tempo": 72,

"popularity": 98

},

{

"id": 3,

"title": "Shape of You",

"artist": "Ed Sheeran",

"album": "Divide",

"genre": "Pop",

"language": "English",

"release_year": 2017,

"lyrics_snippet": "I'm in love with the shape of you",

"description": "A catchy pop song about falling in love and attraction.",

"mood": "Romantic",

"theme": "Love & Attraction",

"instrumentation": ["Acoustic Guitar", "Percussion", "Synth"],

"vibe": "Feel-Good",

"tempo": 96,

"popularity": 94

},

{

"id": 4,

"title": "Lose Yourself",

"artist": "Eminem",

"album": "8 Mile Soundtrack",

"genre": "Hip-Hop",

"language": "English",

"release_year": 2002,

"lyrics_snippet": "You better lose yourself in the music, the moment",

"description": "A motivational rap song about seizing the moment and chasing dreams.",

"mood": "Motivational",

"theme": "Ambition & Struggle",

"instrumentation": ["Piano", "Drum Beat", "Synth Bass"],

"vibe": "Intense",

"tempo": 171,

"popularity": 97

},

.....100 more json objects

]

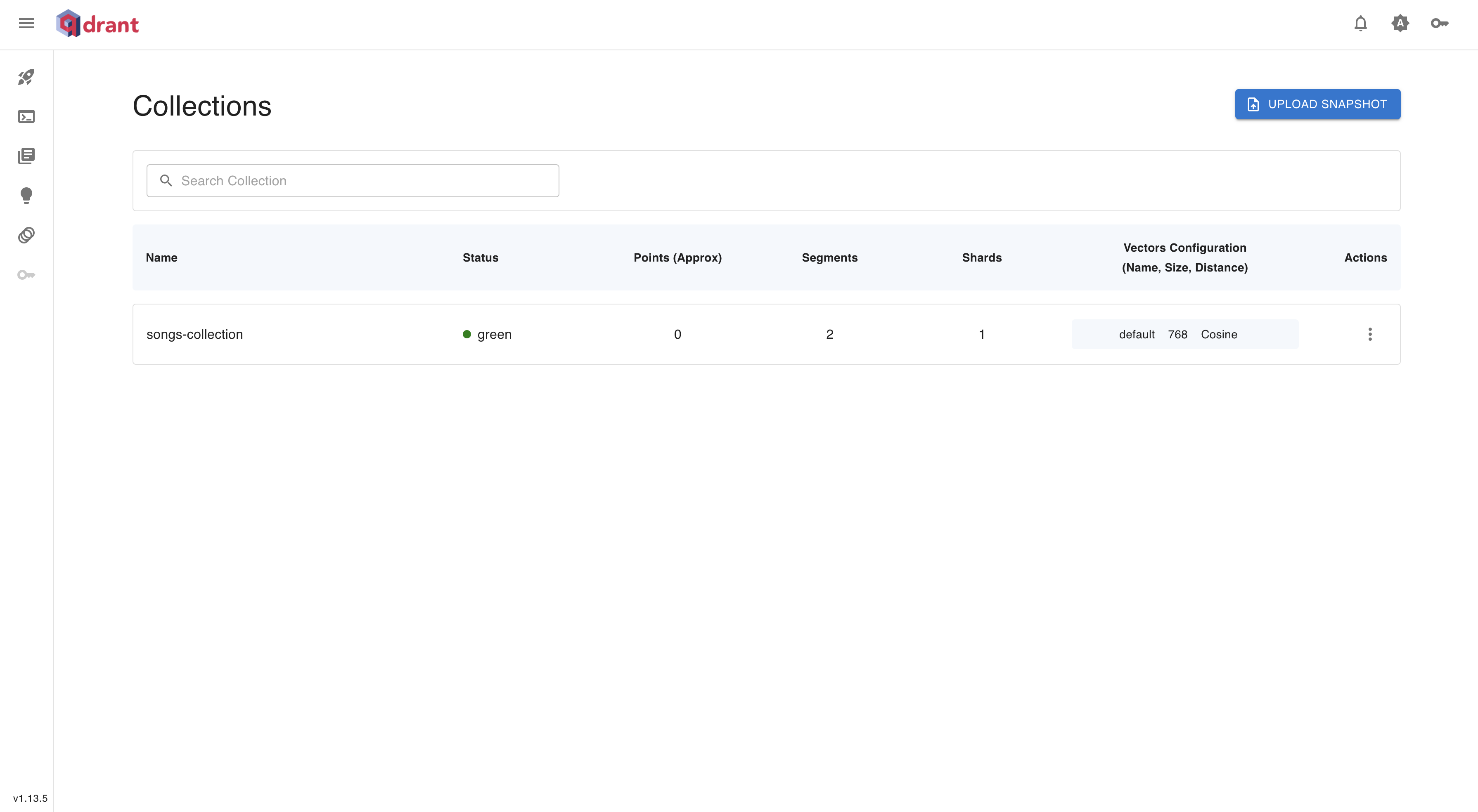

Step 2.1 → Creating the vector collection

Install the Python qdrant-client library

pip install qdrant-client

Create quadrant_client.py

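from qdrant_client import QdrantClient
from qdrant_client.models import VectorParams, Distance

# Shared client, imported by the other scripts in this guide
client = QdrantClient("localhost", port=6333)

# Create the collection only when this file is run directly,
# so importing `client` elsewhere does not try to create it again
if __name__ == "__main__":
    client.create_collection(
        collection_name="songs-collection",
        # 768 matches the output size of the embedding model used later
        vectors_config=VectorParams(size=768, distance=Distance.COSINE),
    )
    print("Collection 'songs-collection' created successfully!")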

Run the script

python quadrant_client.py

This will create the vector collection in your Qdrant database.
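The `size=768` in the collection has to match the length of every vector you insert later, because Qdrant rejects points whose vector length differs from the collection's configured size. A small guard like the one below can catch a mismatch before the upsert. This is only a sketch, and the helper name `check_dimension` is hypothetical.

```python
EXPECTED_SIZE = 768  # must equal the `size` passed to VectorParams


def check_dimension(vector: list[float], expected: int = EXPECTED_SIZE) -> list[float]:
    """Return the vector unchanged, or raise if its length is wrong."""
    if len(vector) != expected:
        raise ValueError(
            f"Vector has {len(vector)} dimensions, collection expects {expected}"
        )
    return vector


check_dimension([0.0] * 768)  # passes silently

try:
    check_dimension([0.0] * 384)  # e.g. the output of a smaller model
except ValueError as error:
    print(error)
```

If you switch to an embedding model with a different output size, the collection needs to be created with that size instead.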

Step 2.2 → loading data

Create embedding model

First of all, what are embedding models?

Embedding models are ML models that convert words, sentences, images, or any data into numerical vectors (embeddings). These vectors capture the meaning, context, or relationship between different pieces of data.

For example:

Word embeddings (Word2Vec, GloVe) → Convert words to vectors.

Image embeddings (ResNet, CLIP) → Convert images to vectors.

Sentence embeddings (Sentence Transformer) → Convert whole sentences to vectors.

Once data is converted into embeddings, we can use it for things like semantic search, recommendation systems, clustering, and classification.

We will use Sentence Transformers as the embedding model.

Sentence Transformers is a Python library of embedding models built specifically for sentences and paragraphs. Its models are based on BERT-style transformers but optimized to generate better sentence-level embeddings.

How It Works:

You input a sentence → Like "AI is transforming the world!"

The model processes it using a transformer (like all-MiniLM-L6-v2).

It outputs a fixed-length vector (embedding) → Something like [0.12, -0.45, 0.88, ...] (size 384 or 768).

Similar sentences get similar embeddings → This helps in finding relationships between different sentences.
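The two properties above (fixed-length output, similar sentences landing close together) can be seen even with a toy stand-in. The sketch below is not how Sentence Transformers works internally: it just counts words from a tiny vocabulary invented for this example, whereas a real model captures meaning rather than word overlap.

```python
import math
import re

# Tiny fixed vocabulary, invented for this illustration
VOCAB = ["ai", "is", "transforming", "changing", "the", "world", "i", "like", "pizza"]


def toy_embed(sentence: str) -> list[float]:
    """Count how often each vocabulary word occurs (a fixed-length vector)."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return [float(words.count(term)) for term in VOCAB]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


a = toy_embed("AI is transforming the world!")
b = toy_embed("AI is changing the world")
c = toy_embed("I like pizza")

print(len(a), len(b), len(c))  # prints: 9 9 9
print(cosine_similarity(a, b) > cosine_similarity(a, c))  # prints: True
```

Every sentence maps to a vector of the same length, and the two sentences about AI end up closer to each other than to the unrelated one.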

Since Sentence Transformer is an embedding model for sentences, you can use it for:

Semantic Search – Finding similar documents/sentences.

Chatbots – Understanding user queries.

Recommendation Systems – Suggesting content based on meaning.

Duplicate Question Detection – Like Quora/StackOverflow.

Text Clustering – Grouping similar content

Enough theory, let's code!

Install Sentence Transformers

pip install sentence-transformers

Create embedding_model.py and initialize the model

from sentence_transformers import SentenceTransformer

model = SentenceTransformer("sentence-transformers/all-mpnet-base-v2")

Create data_loader.py

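import json
import uuid

from qdrant_client.models import PointStruct

from embedding_model import model
from quadrant_client import client

# Assumption: the dataset shown above is saved as songs.json next to this script
with open("songs.json", encoding="utf-8") as f:
    songs = json.load(f)

qdrant_points = []
for song in songs:
    # Serialize the whole song to text and embed it
    song_text = json.dumps(song, default=str)
    embedding = model.encode(song_text).tolist()
    qdrant_points.append(
        PointStruct(
            id=str(uuid.uuid4()),  # random UUID as the point ID
            vector=embedding,
            payload=song,  # original song stored alongside its vector
        )
    )

client.upsert(
    collection_name="songs-collection",
    points=qdrant_points,
)
print("Data inserted into Qdrant successfully!")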
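The loader above embeds the raw JSON string of each song. One alternative worth trying is to embed a short natural-language description instead, since sentence embedding models are trained on ordinary text. The sketch below shows the idea; the helper name `song_to_text` and the choice of fields are assumptions, and whether this improves results on your dataset is something to test rather than take for granted.

```python
def song_to_text(song: dict) -> str:
    """Build a short natural-language description from selected fields."""
    instruments = ", ".join(song.get("instrumentation", []))
    return (
        f"{song['title']} by {song['artist']} "
        f"({song['genre']}, {song['release_year']}). "
        f"{song['description']} "
        f"Mood: {song['mood']}. Theme: {song['theme']}. "
        f"Instruments: {instruments}."
    )


# First song from the dataset above, trimmed to the fields used here
song = {
    "title": "Blinding Lights",
    "artist": "The Weeknd",
    "genre": "Pop",
    "release_year": 2019,
    "description": "A retro-style synthwave song about longing for love in a city at night.",
    "mood": "Energetic",
    "theme": "Love & Nightlife",
    "instrumentation": ["Synthesizers", "Drum Machine", "Bass"],
}

print(song_to_text(song))
```

To use it, you would pass `song_to_text(song)` to `model.encode` in place of the `json.dumps` string while still storing the full song as the payload.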

Step 3 → Searching in Vector Data based on Query

Now we will search the collection using the query text provided by the user. The query is converted into a vector with the same embedding model, and Qdrant returns the stored songs whose vectors are closest to it.

Create main.py

from embedding_model import model
from quadrant_client import client

query_text = input("Enter your query: ")

# Embed the query with the same model used for the songs
query_embedding = model.encode(query_text).tolist()

# Fetch the 5 nearest songs
search_results = client.search(
    collection_name="songs-collection",
    query_vector=query_embedding,
    limit=5,
    query_filter=None,
)

for result in search_results:
    print("\n----------\n")
    print("Score:", result.score)
    print("Song :", result.payload)

Output →

python main.py

Enter your query: songs where artists should be Ed Sheeran

----------

Score: 0.492522

Song : {'id': 73, 'title': 'Song 73', 'artist': 'Ed Sheeran', 'album': 'Certified Lover Boy', 'genre': 'R&B',

'language': 'English', 'release_year': 1996, 'lyrics_snippet': 'Sample lyrics from song 73...', 'description': 'A energetic song about overcoming challenges.',

'mood': 'Sad', 'theme': 'Life & Fate', 'instrumentation': ['Electric Guitar', 'Saxophone'], 'vibe': 'Raw', 'tempo': 126, 'popularity': 66}

----------

Score: 0.4881825

Song : {'id': 81, 'title': 'Song 81', 'artist': 'Ed Sheeran', 'album': 'Certified Lover Boy', 'genre': 'R&B', 'language': 'Hindi',

'release_year': 2016, 'lyrics_snippet': 'Sample lyrics from song 81...', 'description': 'A rebellious song about party & fun.', 'mood': 'Happy', 'theme': 'Party & Fun', 'instrumentation': ['Violin', 'Bass'], 'vibe': 'Raw', 'tempo': 79, 'popularity': 50}

----------

Score: 0.48138124

Song : {'id': 84, 'title': 'Song 84', 'artist': 'Ed Sheeran', 'album': 'Certified Lover Boy', 'genre': 'Alternative', 'language': 'Hindi',

'release_year': 1998, 'lyrics_snippet': 'Sample lyrics from song 84...', 'description': 'A sensual song about love & attraction.', 'mood': 'Uplifting', 'theme': 'Party & Fun', 'instrumentation': ['Piano', 'Guitar'], 'vibe': 'Chill',

'tempo': 149, 'popularity': 84}

----------

Score: 0.47935104

Song : {'id': 57, 'title': 'Song 57', 'artist': 'Ed Sheeran', 'album': 'Parachutes', 'genre': 'R&B', 'language': 'English', 'release_year': 2010, 'lyrics_snippet': 'Sample lyrics from song 57...',

'description': 'A sad song about overcoming challenges.', 'mood': 'Uplifting', 'theme': 'Life & Fate', 'instrumentation': ['Violin', 'Bass'], 'vibe': 'Tropical', 'tempo': 151, 'popularity': 63}

----------

Score: 0.4738466

Song : {'id': 41, 'title': 'Song 41', 'artist': 'Ed Sheeran', 'album': 'Unorthodox Jukebox', 'genre': 'Alternative', 'language': 'English', 'release_year': 1992, 'lyrics_snippet': 'Sample lyrics from song 41...',

'description': 'A happy song about ambition & struggle.', 'mood': 'Motivational', 'theme': 'Passion', 'instrumentation': ['Electric Guitar', 'Saxophone'], 'vibe': 'Deep', 'tempo': 110, 'popularity': 52}

Note → The score is the similarity between the query vector and the vector of each song in the collection. It indicates how closely the song matches the search query, with higher scores representing a closer match. With the cosine distance used in this collection, the score is a floating-point number between -1 and 1, where 1 means the two vectors point in exactly the same direction.
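To see where these scores and their ordering come from, here is a brute-force sketch of the same idea: score every stored vector against the query with cosine similarity and keep the best k. Qdrant uses an index instead of scanning every vector, but the ranking principle is the same. The 3-dimensional vectors and song names are made up and stand in for real 768-dimensional embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query: list[float], points: list[dict], k: int = 2) -> list[tuple]:
    """Score every stored vector against the query and keep the k best."""
    scored = [
        (cosine_similarity(query, point["vector"]), point["payload"])
        for point in points
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Made-up vectors and payloads, for illustration only
points = [
    {"vector": [1.0, 0.0, 0.0], "payload": {"title": "Song A"}},
    {"vector": [0.0, 1.0, 0.0], "payload": {"title": "Song B"}},
    {"vector": [-1.0, 0.0, 0.0], "payload": {"title": "Song C"}},
]

for score, payload in top_k([1.0, 0.0, 0.0], points, k=3):
    print(round(score, 2), payload["title"])
# prints:
# 1.0 Song A
# 0.0 Song B
# -1.0 Song C
```

A vector pointing the same way as the query scores 1, an unrelated (perpendicular) one scores 0, and one pointing the opposite way scores -1.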

Step 4 → Integrating LLM

PreReq → LLM Key

For a free LLM, you can use Google's Gemini models → https://aistudio.google.com/apikey

We will be using an Azure OpenAI model

from typing import Optional

from fastapi import FastAPI, HTTPException
from fastapi.middleware.cors import CORSMiddleware
from pydantic import BaseModel

from embedding_model import model
from quadrant_client import client
from azure_openai_client import ChatCompletion  # the author's own Azure OpenAI wrapper (not shown in this guide)

# Assumption: this code is served as a FastAPI endpoint, which the use of
# HTTPException and the frontend's POST to /search/ suggest
app = FastAPI()

# Assumption: the frontend runs on the default Next.js dev port (3000)
app.add_middleware(
    CORSMiddleware,
    allow_origins=["http://localhost:3000"],
    allow_methods=["*"],
    allow_headers=["*"],
)


class SearchRequest(BaseModel):
    query_text: str
    chat_id: Optional[str] = None


@app.post("/search/")
def search(request: SearchRequest):
    query_text = request.query_text
    chat_id = request.chat_id

    query_embedding = model.encode(query_text).tolist()

    # Retrieve the 2 most similar songs to use as context
    search_results = client.search(
        collection_name="songs-collection",
        query_vector=query_embedding,
        limit=2,
        query_filter=None,
    )

    documents = []
    for result in search_results:
        documents.append(f"Score: {result.score}\nContent: {result.payload}")

    # First call: answer the query from the retrieved context
    messages = [
        {"role": "system", "content": "You are an AI assistant. Answer based on the given context."},
        {"role": "user", "content": f"Context: {documents}\n\nUser Query: {query_text}"},
    ]
    try:
        llm_response = ChatCompletion(messages)
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))

    # Second call: ask the LLM to reformat its own answer as HTML
    messages = [
        {"role": "system", "content": "perfect!!..now extract the response from the llm response that I am sharing and send it back in proper html format"},
        {"role": "user", "content": f"llm_response: {llm_response.choices[0].message.content}"},
    ]
    try:
        llm_response = ChatCompletion(messages)
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))

    return {
        "query": query_text,
        "search_results": documents,
        "llm_response": {
            "llm_data": llm_response.choices[0].message.content,
            # Reuse the caller's chat_id if one was sent, otherwise use the LLM response ID
            "chat_id": chat_id if chat_id is not None else llm_response.id,
        },
    }
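In the code above, the retrieved documents are interpolated into the prompt as a Python list, so the LLM sees brackets and escaped newlines. A slightly tidier option is to format each hit as its own numbered block. The sketch below assumes each hit has already been converted to a plain dict with `score` and `payload` keys (Qdrant itself returns objects with `.score` and `.payload` attributes), and the function name `build_messages` and the extra instruction in the system prompt are hypothetical.

```python
import json


def build_messages(query_text: str, hits: list[dict]) -> list[dict]:
    """Turn retrieved payloads into a system + user message pair."""
    context_blocks = [
        f"[{index}] score={hit['score']:.3f}\n{json.dumps(hit['payload'], ensure_ascii=False)}"
        for index, hit in enumerate(hits, start=1)
    ]
    context = "\n\n".join(context_blocks)
    return [
        {
            "role": "system",
            "content": (
                "You are an AI assistant. Answer only from the given context. "
                "If the context does not contain the answer, say so."
            ),
        },
        {"role": "user", "content": f"Context:\n{context}\n\nUser Query: {query_text}"},
    ]


# Hits trimmed from the search output shown earlier
hits = [
    {"score": 0.4925, "payload": {"title": "Song 73", "artist": "Ed Sheeran"}},
    {"score": 0.4881, "payload": {"title": "Song 81", "artist": "Ed Sheeran"}},
]
messages = build_messages("Give me Ed Sheeran songs only", hits)
print(messages[1]["content"])
```

Telling the model to stay within the context is a common way to reduce answers that drift away from the retrieved data, though it does not guarantee it.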

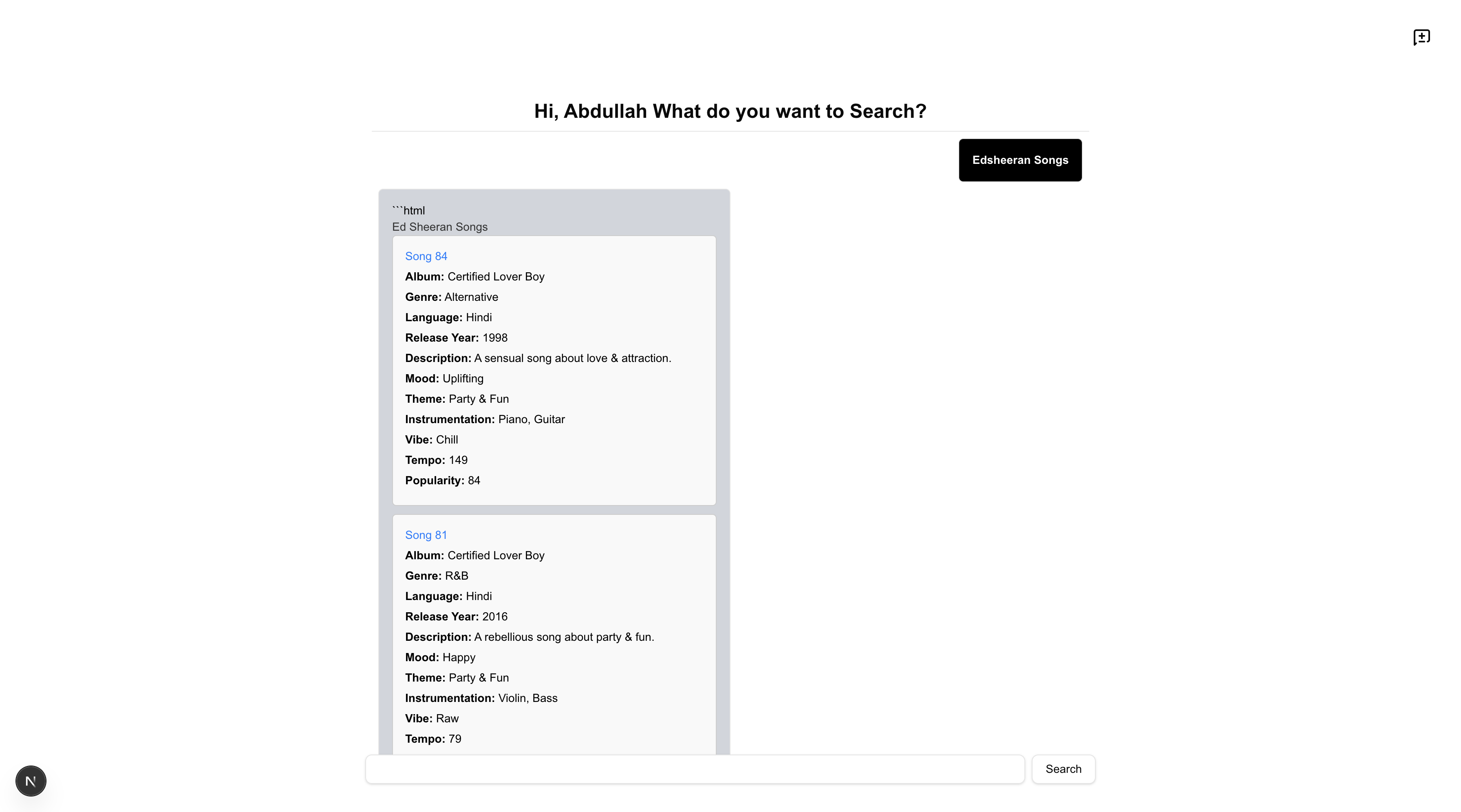

Output →

Enter your query: Give me Edshreeran songs only

🔥 AI Response 🔥

Here are the Ed Sheeran songs from the provided context:

1. **Song 84**

Artist: Ed Sheeran

Album: ÷ (Divide)

Genre: Pop

Language: English

Release Year: 2017

Description: A catchy song about attraction and romance.

Mood: Uplifting

Theme: Love & Relationships

Instrumentation: Acoustic Guitar, Percussion

Vibe: Danceable

Tempo: 96

Popularity: 100

2. **Song 81**

Artist: Ed Sheeran

Album: x (Multiply)

Genre: Pop/Soul

Language: English

Release Year: 2014

Description: A heartfelt ballad about eternal love.

Mood: Romantic

Theme: Love & Commitment

Instrumentation: Electric Guitar, Piano

Vibe: Intimate

Tempo: 79

Popularity: 98

Let me know if you need more information!

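As you can see, the response is now well structured and easy to read. Do compare it with the retrieved payloads, though: an LLM can fill in details from its own knowledge (such as a real album name) instead of the values stored in your dataset.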

Step 5 → Integrating Frontend

We will be using shadcn/ui (https://ui.shadcn.com/) to build the UI faster.

Step 5.1 → Create Next.js Application

npx create-next-app frontend

Home.tsx

"use client";

import { ChatsDrawer } from "@/components/chat-drawer";

import { Button } from "@/components/ui/button";

import { Input } from "@/components/ui/input";

import { MessageSquareDiff } from "lucide-react";

import { useEffect, useRef, useState } from "react";

export default function Home() {

const [searchQuery, setSearchQuery] = useState("");

const [chats, setChats] = useState<any[]>([]);

const [currentChatId, setCurrentChatId] = useState<string | null>(null);

const [chatOptions, setChatOptions] = useState<any[]>([]);

const divRef = useRef<HTMLDivElement>(null);

const [loading, setLoading] = useState({

status: false,

query: "",

});

const handleSearch = async () => {

setLoading(() => {

return {

status: true,

query: searchQuery,

};

});

setSearchQuery("");

let freshData = {

user_query: searchQuery,

llm_response: "",

}

setChats((prevChats: any[]) => [...prevChats, freshData]);

let response = await fetch("http://127.0.0.1:8000/search/", {

method: "POST",

headers: {

"Content-Type": "application/json",

},

body: JSON.stringify({

query_text: searchQuery,

}),

});

let data = await response.json();

let currentChats = [...chats];

if (!currentChatId) {

let dataToBeSaved = {

llm_response: data.llm_response.llm_data,

chat_id: data.llm_response.chat_id,

user_query: searchQuery,

};

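// Replace the placeholder entry added before the request instead of appending a duplicate

setChats((prevChats: any[]) => [...prevChats.slice(0, -1), dataToBeSaved]);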

localStorage.setItem(

`${data.llm_response.chat_id}`,

JSON.stringify([...chats, dataToBeSaved])

);

setCurrentChatId(data.llm_response.chat_id);

} else {

let dataToBeSaved = {

llm_response: data.llm_response.llm_data,

chat_id: currentChatId,

user_query: searchQuery,

};

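// Replace the placeholder entry added before the request instead of appending a duplicate

setChats((prevChats: any[]) => [...prevChats.slice(0, -1), dataToBeSaved]);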

localStorage.setItem(

`${currentChatId}`,

JSON.stringify([...chats, dataToBeSaved])

);

}

setChatOptions((prevChats: any[]) => [

...prevChats,

{ chat_id: currentChatId ?? data.llm_response.chat_id, user_query: searchQuery },

]);

setLoading(() => {

return {

status: false,

query: searchQuery,

};

});

};

useEffect(() => {

if (divRef.current) {

divRef.current.scrollTop = divRef.current.scrollHeight;

}

}, [chats]);

const loadNewChat = (chat_id: string) => {

setCurrentChatId(chat_id);

let data = JSON.parse(localStorage.getItem(chat_id) || "[]");

setChats(data);

};

useEffect(() => {

let keys = Object.keys(localStorage);

let chats = [];

keys.forEach((key) => {

if (key.includes("chat")) {

chats.push(...JSON.parse(localStorage.getItem(key) || "[]"));

}

});

setChatOptions(

chats.map((chat: any) => ({

chat_id: chat.chat_id,

user_query: chat.user_query,

}))

);

}, []);

const initializeNewChat = () => {

setCurrentChatId(null);

setChats([]);

setSearchQuery("");

};


return (

<div className="w-full h-[100vh] p-4">

<div className="flex justify-end cursor-pointer">

<MessageSquareDiff onClick={() => initializeNewChat()} />

</div>

<ChatsDrawer loadNewChat={loadNewChat} chatOptions={chatOptions} />

<div className="max-w-4xl mx-auto w-full h-full flex flex-col items-center justify-center">

<div className="text-2xl font-bold text-center">

Hi, What do you want to Search?

</div>

<div ref={divRef} className="h-[80%] w-full overflow-scroll p-2 pb-2">

{chats &&

chats.map((chat: any, index) => (

<div key={index} className="flex flex-col gap-2 p-2 border-b">

<div className="text-sm font-semibold bg-black text-white border rounded-sm w-max p-4 ml-auto">

{chat.user_query}

</div>

{

loading.status && chat.user_query === loading.query ? (

<div className="text-sm bg-gray-300 text-black w-1/2 p-4 mr-auto border rounded-sm">

loading...

</div>

) : (

<div

className="text-sm bg-gray-300 text-black w-1/2 p-4 mr-auto border rounded-sm"

dangerouslySetInnerHTML={{ __html: chat.llm_response }}

></div>

)

}

</div>

))}

</div>

<div className="flex gap-2 w-full">

<Input

value={searchQuery}

onChange={(e) => setSearchQuery(e.target.value)}

/>

<Button

onClick={handleSearch}

className="cursor-pointer"

variant="outline"

>

Search

</Button>

</div>

</div>

</div>

);

}

components/chat-drawer.tsx

"use client";

import { useEffect, useState } from "react";

import {

Drawer,

DrawerContent,

DrawerFooter,

DrawerHeader,

DrawerTitle,

} from "@/components/ui/drawer";

import { Button } from "./ui/button";

const WIDTH = 250;

export function ChatsDrawer({ loadNewChat, chatOptions }: { loadNewChat: (chat_id: string) => void; chatOptions: any[] }) {

const [isOpen, setIsOpen] = useState(false);

useEffect(() => {

const handleMouseMove = (e: MouseEvent) => {

if (e.clientX < 40) {

setIsOpen(true);

}

if (e.clientX > WIDTH) {

setIsOpen(false);

}

}

window.addEventListener('mousemove', handleMouseMove);

return () => {

window.removeEventListener('mousemove', handleMouseMove);

}

}, []);

return (

<Drawer open={isOpen} onOpenChange={setIsOpen} direction="left">

<DrawerContent style={{ maxWidth: WIDTH, height:"100vh" }} className="bg-background">

<DrawerHeader>

<DrawerTitle className="text-[12px]">Your projects</DrawerTitle>

{chatOptions &&

chatOptions.map((chat, index) => (

<div key={index} className="my-1 w-min">

<Button variant={"outline"} onClick={() => {

loadNewChat(chat.chat_id);

}} className="border pl-1 w-full rounded hover:bg-accent cursor-pointer hover:text-accent-foreground text-[12px]">

<div className="w-full flex">

<div className="pl-2 flex items-center"></div> <div className="pl-2">{chat.user_query}</div>

</div>

</Button >

</div>

))

}

</DrawerHeader>

<DrawerFooter>

</DrawerFooter>

</DrawerContent>

</Drawer>

)

}

Spin up the frontend

From the frontend directory, run:

npm run dev
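If the UI shows nothing, it can be useful to test the backend on its own. The sketch below builds the same POST request the frontend sends, using only the standard library. The URL and the `query_text` field are taken from the frontend code above; the function names are made up for this guide, and `send_search` only works while the backend from the LLM step is running on port 8000.

```python
import json
import urllib.request

SEARCH_URL = "http://127.0.0.1:8000/search/"  # same URL the frontend calls


def build_search_request(query_text: str, url: str = SEARCH_URL) -> urllib.request.Request:
    """Build the POST request without sending it."""
    body = json.dumps({"query_text": query_text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_search(query_text: str) -> dict:
    """Send the request and return the parsed JSON (needs the backend running)."""
    with urllib.request.urlopen(build_search_request(query_text), timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_search_request("Give me Ed Sheeran songs only")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

With the backend running, `send_search("Give me Ed Sheeran songs only")["llm_response"]["llm_data"]` should return the same HTML string the chat window renders.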
