What is Model Context Protocol? Key Information You Should Know

Meetkumar Chavda


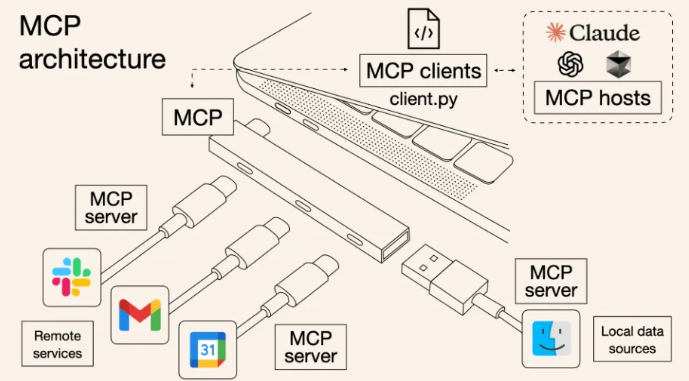

Think of MCP as a USB hub for your LLM: just as a single hub lets your laptop reach multiple devices (storage, keyboards, displays), MCP lets your AI application pull in data from various sources through one standard connection.

Instead of hype, let’s focus on what it actually does: MCP is an open protocol that gives LLM applications a standard way to reach your own data and tools (a codebase, a database, internal services), so the model can work with relevant context instead of guessing.

MCP Architecture:

Imagine you’re running a DevOps Cafe, where different tools (IDEs, AI apps, or CLI tools) come in to order data from multiple sources. But instead of fetching data manually, you have MCP acting as your super-efficient ordering system.

How the Cafe Runs (MCP Architecture in Action):

The Customer (MCP Client):

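This is your IDE, AI tool, or another program that wants to access data. Strictly speaking, the application itself is the MCP host, and it runs one MCP client for each server it connects to.

It doesn’t know (or care) exactly where the data comes from; it just places an order.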

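The Waiters (the MCP protocol):

The protocol is the lightweight layer that carries messages between customers and the different kitchens. Messages are JSON-RPC 2.0, sent over standard input/output for local servers or over HTTP for remote ones.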

They ensure orders are formatted correctly, so the right kitchen gets the right request.
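To make the waiter's job concrete, here is a minimal sketch of one correctly formatted order. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method a client uses to run a tool. The helper `build_tool_call`, the tool name `query_orders`, and its arguments are hypothetical and only here for illustration; they are not part of any SDK.

```python
import json
from itertools import count

# JSON-RPC request ids should be unique within a session.
_ids = count(1)

def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)

# Hypothetical tool name and arguments, for illustration only.
message = build_tool_call("query_orders", {"customer_id": 42})
print(message)
```

Because every order has the same envelope, the kitchen only needs to look at `method` and `params` to know what is being asked.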

The Kitchens (MCP Servers):

Each MCP Server specializes in handling a specific type of request:

Kitchen A (MCP Server: Local Data) → Fetches records from local files and databases.

Kitchen B (MCP Server: Remote Services) → Calls APIs for external services like Gmail, Slack, and Google Calendar.

Kitchen C (MCP Server: AI Models) → Wraps other hosted models or AI services as tools. This is a less common setup; in most deployments the LLM (Claude, for example) sits on the host side and consumes what the servers provide.

The Ingredients (Data Sources):

These are your local files, databases, and remote services where the real data exists.

MCP Servers don’t store the data; they just know how to fetch it when needed.
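The kitchen-and-ingredients idea can be sketched in a few lines of plain Python. This is a toy stand-in, not the real MCP SDK: `ToyServer`, its `tool` decorator, and the `list_users` tool are all made up for this example, and an in-memory SQLite database plays the role of the local data source.

```python
import sqlite3
from typing import Callable, Dict, List

class ToyServer:
    """Stands in for one 'kitchen': maps tool names to fetch functions."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: Dict[str, Callable[..., object]] = {}

    def tool(self, fn: Callable[..., object]) -> Callable[..., object]:
        # Register the function under its own name.
        self._tools[fn.__name__] = fn
        return fn

    def call(self, tool_name: str, **arguments: object) -> object:
        if tool_name not in self._tools:
            raise KeyError(f"{self.name} has no tool named {tool_name!r}")
        return self._tools[tool_name](**arguments)

# The 'ingredients': an in-memory database standing in for local data.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
db.executemany("INSERT INTO users (name) VALUES (?)", [("Ada",), ("Linus",)])

local_data = ToyServer("local-data")

@local_data.tool
def list_users(limit: int = 10) -> List[str]:
    # The server keeps no copy of the data; every call reads from the source.
    rows = db.execute("SELECT name FROM users ORDER BY id LIMIT ?", (limit,))
    return [name for (name,) in rows]

print(local_data.call("list_users", limit=1))
```

Since the server reads from the source on every call, a row added to the database later shows up in the next order without restarting anything.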

Model Context Protocol (MCP) vs. Traditional APIs:

| Feature | Model Context Protocol (MCP) | Traditional APIs |
| --- | --- | --- |
| Purpose | Built for connecting AI applications to tools and data, with a session that persists across requests. | General-purpose, used for web services and data exchange. |
| Context Handling | Stateful: client and server negotiate capabilities once and keep the session open. The model's own conversation context is still managed by the host application. | Stateless, requiring explicit context passing in every request. |
| Data Exchange | Structured JSON-RPC 2.0 messages for discovering and calling tools, reading resources, and fetching prompts. | Relies on a standard request-response model (e.g., REST). |
| Scalability | Grows by connecting additional MCP servers; the client side needs no new integration code. | Scales using API gateways, load balancers, and additional servers. |
| Statefulness | Supports long-lived sessions, including notifications sent from server to client. | Typically stateless, requiring every request to be independent. |
| Protocol Type | JSON-RPC 2.0 over stdio (local servers) or HTTP with optional Server-Sent Events streaming (remote servers). | REST, gRPC, GraphQL, or WebSockets; primarily request-response-based. |
| Complexity | More moving parts: session lifecycle and capability negotiation. | Simpler to implement but requires manual state handling. |
| Best For | AI-driven chatbots, coding assistants, and agents that need live tools and data. | Web services, traditional API-based applications, CRUD operations. |
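The stateful rows in the table are easier to see with a toy session. In MCP, a client first sends an `initialize` request, and only then issues calls such as `tools/list`. The class below is a greatly simplified sketch of that idea: `ToySession` is hypothetical, the tool it reports is invented, and the real handshake also involves capability details and a follow-up `notifications/initialized` message that are left out here.

```python
from typing import Optional

class ToySession:
    """A greatly simplified stateful session, loosely modeled on MCP's lifecycle."""

    def __init__(self) -> None:
        self.initialized = False
        self.protocol_version = None

    def handle(self, method: str, params: Optional[dict] = None) -> dict:
        params = params or {}
        if method == "initialize":
            # State set here is remembered for every later request.
            self.protocol_version = params.get("protocolVersion")
            self.initialized = True
            return {
                "protocolVersion": self.protocol_version,
                "capabilities": {"tools": {}},
            }
        if not self.initialized:
            raise RuntimeError("session not initialized")
        if method == "tools/list":
            # Hypothetical tool, for illustration only.
            return {"tools": [{"name": "list_users"}]}
        raise ValueError(f"unknown method: {method}")

session = ToySession()
session.handle("initialize", {"protocolVersion": "2024-11-05"})
# No need to repeat the version or capabilities on later requests.
print(session.handle("tools/list"))
```

A stateless REST endpoint would instead expect each request to carry everything it needs, which is the contrast the table is drawing.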

Benefits of Implementing MCP

Simplified Development: Write once, integrate multiple times without rewriting custom code for every integration.

Flexibility: Switch AI models or tools without complex reconfiguration.

Real-time Responsiveness: MCP connections remain active, enabling real-time context updates and interactions.

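Security & Compliance: The specification defines an authorization framework for HTTP-based transports and expects hosts to get user consent before tools run. Actual security still depends on how each server and host is implemented.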

Scalability: Easily add new capabilities as your AI ecosystem grows—simply connect another MCP server.
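Here is a rough sketch of what "simply connect another MCP server" looks like from the host's side. `ToyHost` is a hypothetical class, the `server.tool` naming scheme is an assumption made for this example, and the two servers are plain dictionaries of functions rather than real MCP servers.

```python
from typing import Callable, Dict, List

class ToyHost:
    """Keeps track of connected servers and the tools each one offers."""

    def __init__(self) -> None:
        self._servers: Dict[str, Dict[str, Callable[..., object]]] = {}

    def connect(self, server_name: str, tools: Dict[str, Callable[..., object]]) -> None:
        # Connecting a server is the only step needed to gain its tools.
        self._servers[server_name] = dict(tools)

    def list_tools(self) -> List[str]:
        return sorted(
            f"{server}.{tool}"
            for server, tools in self._servers.items()
            for tool in tools
        )

    def call(self, qualified_name: str, **arguments: object) -> object:
        server, tool = qualified_name.split(".", 1)
        return self._servers[server][tool](**arguments)

host = ToyHost()
host.connect("files", {"read_text": lambda path: f"(contents of {path})"})
print(host.list_tools())

# Growing the ecosystem: one more connect call, no changes to ToyHost.
host.connect("calendar", {"next_event": lambda: "standup at 10:00"})
print(host.list_tools())
print(host.call("calendar.next_event"))
```

The calling code never changes when a server is added; the new tools simply appear in the list.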

When Are Traditional APIs Better?

If your use case demands precise, predictable interactions with strict limits, traditional APIs might be preferable. MCP is great for flexibility and context-awareness, but granular APIs are ideal when:

Fine-grained control and highly specific, restricted functionality are needed.

You prefer tight coupling for performance optimization.

You want maximum predictability and do not want the model deciding on its own which tools to call.

Conclusion

MCP: Unified interface for AI agents to dynamically interact with external data/tools

APIs: Traditional methods, requiring individualized integrations and more manual oversight

MCP provides a unified and standardized way to integrate AI agents and models with external data and tools. It's not just another API; it's a powerful connectivity framework enabling intelligent, dynamic, and context-rich AI applications.
