Your First AI Agent with LangGraph: A Beginner-Friendly Guide

Spheron Network

The promise of artificial intelligence has long been tethered to the idea of autonomous systems capable of tackling complex tasks with minimal human intervention. While chatbots have provided a glimpse into the potential of conversational AI, the true revolution lies in the realm of AI agents – systems that can think, act, and learn. Building effective agents demands a deep understanding of their architecture and limitations.

This article aims to demystify building AI agents, providing a comprehensive guide using LangGraph, a powerful framework within the LangChain ecosystem. We'll explore the core principles of agent design, construct a practical example, and delve into the nuances of their capabilities and limitations.

From Isolated Models to Collaborative Agents

Traditional AI systems often function as isolated modules dedicated to a specific task. A text summarization model operates independently of an image recognition model, requiring manual orchestration and context management. This fragmented approach leads to inefficiencies and limits the potential for complex, multi-faceted problem-solving.

AI agents, in contrast, orchestrate a suite of capabilities under a unified cognitive framework. They maintain a persistent understanding of the task, which enables seamless transitions between processing stages. This holistic approach lets agents make informed decisions and adapt their strategies based on intermediate results, much as a person does when working through a problem.

The Pillars of Agent Intelligence

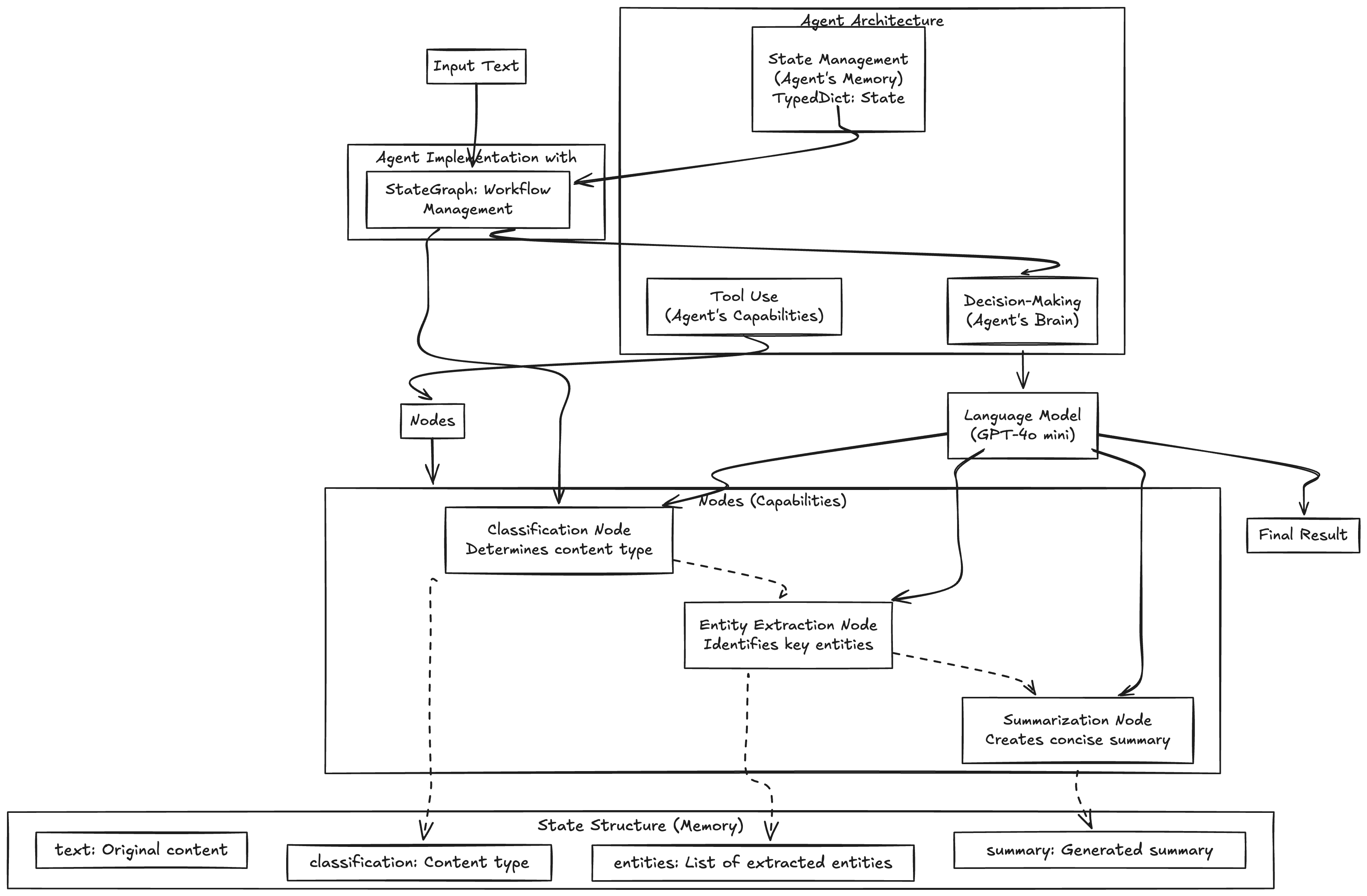

The foundation of AI agent intelligence rests on three key principles:

State Management: This refers to the agent's ability to maintain a dynamic memory, track its progress, store relevant information, and adapt its strategy based on the evolving context.

Decision-Making: Agents must be able to analyze the current state, evaluate available tools, and determine the optimal course of action to achieve their objectives.

Tool Utilization: Agents need to seamlessly integrate with external tools and APIs, leveraging specialized capabilities to address specific aspects of the task.
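The three pillars can be seen working together in a few lines of plain Python. The sketch below is a toy, not LangGraph code: the `State` dictionary is the agent's memory, `decide` is its decision-making step, and the two functions in `TOOLS` are hypothetical stand-ins for external tools or APIs. Note that the choice to call `truncate` depends on an intermediate result (`word_count`), not on a fixed script.

```python
from typing import Callable, Dict, List, Optional, TypedDict

MAX_WORDS = 10  # hypothetical length limit used by the decision step


class State(TypedDict):
    """State management: what the agent knows and has done so far."""
    text: str
    word_count: Optional[int]
    truncated: bool
    history: List[str]


# Tool utilization: hypothetical stand-ins for external tools or APIs.
def count_words(state: State) -> None:
    state["word_count"] = len(state["text"].split())


def truncate(state: State) -> None:
    words = state["text"].split()[:MAX_WORDS]
    state["text"] = " ".join(words)
    state["word_count"] = len(words)
    state["truncated"] = True


TOOLS: Dict[str, Callable[[State], None]] = {
    "count_words": count_words,
    "truncate": truncate,
}


def decide(state: State) -> str:
    """Decision-making: choose the next tool from the current state, or 'done'."""
    if state["word_count"] is None:
        return "count_words"
    if state["word_count"] > MAX_WORDS and not state["truncated"]:
        return "truncate"
    return "done"


def run_agent(text: str) -> State:
    state: State = {"text": text, "word_count": None, "truncated": False, "history": []}
    while True:
        action = decide(state)
        if action == "done":
            return state
        TOOLS[action](state)             # act with the chosen tool
        state["history"].append(action)  # record progress in the state


short_run = run_agent("Agents keep state between steps.")
print(short_run["history"])  # ['count_words']
long_run = run_agent(" ".join(["word"] * 15))
print(long_run["history"], long_run["word_count"])  # ['count_words', 'truncate'] 10
```

A real agent would replace `decide` with a language model choosing among tools, but the loop of reading state, picking an action, and recording the result stays the same.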

Building Your First Agent with LangGraph: A Structured Approach

LangGraph provides a robust framework for constructing AI agents by representing their workflow as a directed graph. Each node in the graph is a function that represents a distinct capability: it reads the shared agent state and returns updates to it. The edges define the flow of information and control between nodes. This explicit graph structure makes complex agent architectures easier to design and debug.
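To make the node/edge model concrete, here is a toy graph runner in plain Python. It is a simplified mental model, not how LangGraph is implemented: every node is a function that reads the shared state and returns only the keys it changes, those keys are merged back into the state, and each node has exactly one outgoing edge. The `ToyGraph` class and its `END` constant are hypothetical names for this illustration. LangGraph itself adds much more (conditional edges, reducers for combining updates, checkpointing), but the basic flow is similar.

```python
from typing import Any, Callable, Dict

END = "__end__"  # this toy's own "stop here" sentinel, not imported from LangGraph

State = Dict[str, Any]
Node = Callable[[State], State]


class ToyGraph:
    """A toy directed graph: nodes return partial state updates, edges pick the next node."""

    def __init__(self, entry: str) -> None:
        self.entry = entry
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, str] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def run(self, state: State) -> State:
        state = dict(state)  # leave the caller's input untouched
        current = self.entry
        while current != END:
            update = self.nodes[current](state)  # the node reads the state...
            state.update(update)                 # ...and its returned keys are merged in
            current = self.edges[current]        # follow the single outgoing edge
        return state


graph = ToyGraph(entry="shout")
graph.add_node("shout", lambda s: {"loud": s["text"].upper()})
graph.add_node("measure", lambda s: {"length": len(s["loud"])})
graph.add_edge("shout", "measure")
graph.add_edge("measure", END)
print(graph.run({"text": "hello"}))  # {'text': 'hello', 'loud': 'HELLO', 'length': 5}
```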

Let's embark on a practical example: building an agent that analyzes textual content, extracts key information, and generates concise summaries.

Setting Up Your Development Environment

Before we dive into the code, ensure your development environment is properly configured.

Project Directory: Create a dedicated directory for your project.

```bash
mkdir agent_project
cd agent_project
```

Virtual Environment: Create and activate a virtual environment to isolate your project dependencies.

```bash
python3 -m venv agent_env

# macOS/Linux
source agent_env/bin/activate

# Windows
agent_env\Scripts\activate
```

Install Dependencies: Install the necessary Python packages.

```bash
pip install langgraph langchain langchain-openai python-dotenv
```

OpenAI API Key: Obtain an API key from OpenAI and store it securely.

.env File: Create a `.env` file to store your API key.

```bash
echo "OPENAI_API_KEY=your_api_key" > .env
```

Replace `your_api_key` with your actual API key.

Test Setup: Create a `verify_setup.py` file to verify your environment.

```python
from dotenv import load_dotenv
from langchain_openai import ChatOpenAI

# Load OPENAI_API_KEY from .env into the process environment
load_dotenv()

model = ChatOpenAI(model="gpt-4o")
response = model.invoke("Is the system ready?")
print(response.content)
```

Run Test: Execute the test script.

```bash
python verify_setup.py
```
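If the test script fails with an authentication error, it can help to check the key without making an API call. The sketch below is a hypothetical helper, not part of python-dotenv: it looks for `OPENAI_API_KEY` in the environment first, then falls back to a deliberately simplified `.env` parser (python-dotenv itself handles more of the `.env` syntax), and reports whether the key is missing or still set to the `your_api_key` placeholder.

```python
import os
from pathlib import Path
from typing import Dict

PLACEHOLDER = "your_api_key"  # the placeholder value from the echo command above


def read_env_file(path: str = ".env") -> Dict[str, str]:
    """Parse simple KEY=VALUE lines; a simplified stand-in for python-dotenv."""
    values: Dict[str, str] = {}
    env_path = Path(path)
    if not env_path.exists():
        return values
    for line in env_path.read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("\"'")  # drop surrounding quotes
    return values


def check_api_key(path: str = ".env") -> str:
    """Return 'ok', 'placeholder', or 'missing' for OPENAI_API_KEY."""
    key = os.environ.get("OPENAI_API_KEY") or read_env_file(path).get("OPENAI_API_KEY", "")
    if not key:
        return "missing"
    if key == PLACEHOLDER:
        return "placeholder"
    return "ok"


if __name__ == "__main__":
    print("OPENAI_API_KEY:", check_api_key())
```

Run it from the project directory; anything other than `ok` means the key needs attention before `verify_setup.py` can succeed.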

Constructing the Agent's Architecture

Now, let's define the agent's capabilities and connect them using LangGraph.

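Import Libraries: Import the required LangGraph and LangChain components.

```python
from typing import List, TypedDict

from dotenv import load_dotenv
from langchain_core.messages import HumanMessage
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI
from langgraph.graph import END, StateGraph

# Make OPENAI_API_KEY from .env available to ChatOpenAI
load_dotenv()
```

Define Agent State: Create a `TypedDict` to represent the agent's state.

```python
class AgentState(TypedDict):
    input_text: str      # the text to analyze
    category: str        # filled in by the categorize node
    keywords: List[str]  # filled in by the keyword-extraction node
    summary: str         # filled in by the summarize node
```

Initialize Language Model: Instantiate the OpenAI language model.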

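```python
# temperature=0 reduces randomness in the model's outputs
model = ChatOpenAI(model="gpt-4o", temperature=0)
```

Define Agent Nodes: Create functions that encapsulate each agent capability. Each node receives the current state and returns only the keys it updates.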

```python
def categorize_content(state: AgentState):
    prompt = PromptTemplate(
        input_variables=["input_text"],
        template="Determine the category of the following text (e.g., technology, science, literature). Text: {input_text}\nCategory:",
    )
    message = HumanMessage(content=prompt.format(input_text=state["input_text"]))
    category = model.invoke([message]).content.strip()
    return {"category": category}


def extract_keywords(state: AgentState):
    prompt = PromptTemplate(
        input_variables=["input_text"],
        template="Extract the main keywords from the following text as a comma-separated list. Text: {input_text}\nKeywords:",
    )
    message = HumanMessage(content=prompt.format(input_text=state["input_text"]))
    raw = model.invoke([message]).content
    # Split on commas and strip whitespace, so "a,b" and "a, b" both parse correctly
    keywords = [kw.strip() for kw in raw.split(",") if kw.strip()]
    return {"keywords": keywords}


def summarize_text(state: AgentState):
    prompt = PromptTemplate(
        input_variables=["input_text"],
        template="Summarize the following text in a concise paragraph. Text: {input_text}\nSummary:",
    )
    message = HumanMessage(content=prompt.format(input_text=state["input_text"]))
    summary = model.invoke([message]).content.strip()
    return {"summary": summary}
```

Construct the Workflow Graph: Create a `StateGraph` and connect the nodes. Node names must differ from the state keys, so the keyword node is registered as "extract_keywords" rather than "keywords".

```python
workflow = StateGraph(AgentState)

# Register each capability as a node
workflow.add_node("categorize", categorize_content)
workflow.add_node("extract_keywords", extract_keywords)
workflow.add_node("summarize", summarize_text)

# Run the nodes in a fixed sequence: categorize -> extract_keywords -> summarize
workflow.set_entry_point("categorize")
workflow.add_edge("categorize", "extract_keywords")
workflow.add_edge("extract_keywords", "summarize")
workflow.add_edge("summarize", END)

app = workflow.compile()
```

Run the Agent: Invoke the agent with a sample text.

```python
sample_text = "The latest advancements in quantum computing promise to revolutionize data processing and encryption."

result = app.invoke({"input_text": sample_text})

print("Category:", result["category"])
print("Keywords:", result["keywords"])
print("Summary:", result["summary"])
```
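Every run of this agent calls the OpenAI API, which makes the node logic slow and costly to test. One common pattern, sketched here as an assumption rather than anything LangGraph requires, is to build the node functions around an injectable `llm` callable that maps a prompt string to a reply string. The names `make_nodes`, `fake_llm`, and `run_pipeline` are hypothetical; `fake_llm` is a deterministic stand-in so the parsing logic (for example, the comma-splitting of keywords) can be checked without network access. The nodes still follow the LangGraph convention of returning only the keys they update.

```python
from typing import Callable, Dict

LLM = Callable[[str], str]  # hypothetical interface: prompt string in, reply string out
NodeFn = Callable[[dict], dict]


def make_nodes(llm: LLM) -> Dict[str, NodeFn]:
    """Build the three agent nodes around an injectable model."""

    def categorize(state: dict) -> dict:
        prompt = f"Determine the category of the following text. Text: {state['input_text']}\nCategory:"
        return {"category": llm(prompt).strip()}

    def extract_keywords(state: dict) -> dict:
        prompt = f"Extract the main keywords (comma-separated). Text: {state['input_text']}\nKeywords:"
        reply = llm(prompt)
        return {"keywords": [kw.strip() for kw in reply.split(",") if kw.strip()]}

    def summarize(state: dict) -> dict:
        prompt = f"Summarize the following text in a concise paragraph. Text: {state['input_text']}\nSummary:"
        return {"summary": llm(prompt).strip()}

    return {"categorize": categorize, "extract_keywords": extract_keywords, "summarize": summarize}


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model: replies based on the prompt's final cue."""
    if prompt.endswith("Category:"):
        return " technology\n"
    if prompt.endswith("Keywords:"):
        return "quantum computing,encryption,  data processing"
    return "Quantum computing could change data processing and encryption."


def run_pipeline(nodes: Dict[str, NodeFn], text: str) -> dict:
    """Apply the nodes in the same order as the graph's edges, merging each update."""
    state = {"input_text": text}
    for name in ("categorize", "extract_keywords", "summarize"):
        state.update(nodes[name](state))
    return state


result = run_pipeline(make_nodes(fake_llm), "Quantum computing promises faster encryption.")
print(result["category"], result["keywords"])  # technology ['quantum computing', 'encryption', 'data processing']
```

To use a real model with the same nodes, one option is an adapter such as `make_nodes(lambda p: model.invoke(p).content)`, since `ChatOpenAI.invoke` also accepts a plain string; the returned functions can then be registered with `workflow.add_node` like the original nodes.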

Agent Capabilities and Limitations

This example demonstrates the power of LangGraph in building structured AI agents. However, it's crucial to acknowledge the limitations of these systems.

Rigid Frameworks: Agents operate within predefined workflows, such as the fixed three-step sequence built above, which limits their ability to adapt to unexpected situations.

Contextual Understanding: Agents may struggle with nuanced language and cultural contexts.

Black Box Problem: The internal decision-making processes of agents can be opaque, hindering interpretability.

Human Oversight: Agents require human supervision to ensure accuracy and validate outputs.
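One lightweight way to put human oversight into practice is to run automatic checks on each result and route anything suspicious to a person. The sketch below assumes a hypothetical allow-list of categories and a hypothetical summary-length limit; both are illustrative choices, not values from this article. In a LangGraph workflow, a check like this could live in its own node, with `add_conditional_edges` sending flagged results down a review path, which also makes the workflow less rigid than a fixed sequence.

```python
from typing import List

ALLOWED_CATEGORIES = {"technology", "science", "literature"}  # hypothetical allow-list
MAX_SUMMARY_WORDS = 80  # hypothetical limit for a "concise" summary


def review_issues(result: dict) -> List[str]:
    """Return human-readable problems found in an agent result (empty list = looks fine)."""
    issues: List[str] = []
    category = str(result.get("category", "")).strip().lower()
    if category not in ALLOWED_CATEGORIES:
        issues.append(f"unexpected category: {result.get('category')!r}")
    if not result.get("keywords"):
        issues.append("no keywords extracted")
    summary = str(result.get("summary", "")).strip()
    if not summary:
        issues.append("empty summary")
    elif len(summary.split()) > MAX_SUMMARY_WORDS:
        issues.append(f"summary longer than {MAX_SUMMARY_WORDS} words")
    return issues


def needs_human_review(result: dict) -> bool:
    """Flag a result for a person to check whenever any automatic check fails."""
    return bool(review_issues(result))


good = {"category": "Technology", "keywords": ["quantum computing"], "summary": "Quantum computing may reshape encryption."}
bad = {"category": "Sports?", "keywords": [], "summary": ""}
print(needs_human_review(good), review_issues(bad))
```

Checks like these catch only obvious failures; a plausible but wrong summary still needs a human reader.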

Conclusion

Building AI agents is an iterative process that demands a blend of technical expertise and a deep understanding of the underlying principles. By leveraging frameworks like LangGraph, developers can create powerful systems that automate complex tasks and enhance human capabilities. However, it's essential to recognize these systems' limitations and embrace a collaborative approach that combines AI intelligence with human oversight.

