Harnessing the Edge: Deep Dive into Cloudflare Workers & Building a Serverless S3 File Uploader

Hrushi Borhade

"Why make your code travel around the world when it can just meet your users at the door?" That question sums up Cloudflare Workers, a serverless platform at the forefront of cloud computing that executes code at the edge of Cloudflare's global network. It's like having tiny minions stationed worldwide, ready to run your code without the hassle of server management. (And unlike real minions, these ones actually follow instructions!)

Today, we'll explore the architecture behind Cloudflare Workers and demonstrate their practical application by building a sophisticated file uploader that leverages Cloudflare Workers, Amazon S3 and React.

Understanding Cloudflare Workers Architecture

The Foundation: V8 Isolates

At the core of Cloudflare Workers lies Google's V8 JavaScript engine, the same technology that powers Chrome. Rather than starting a separate process or container for each script, Cloudflare runs many scripts inside a single runtime process, each in its own V8 "isolate": a lightweight, sandboxed execution environment that starts almost instantly and uses far less memory than a container. Think of these isolates as studio apartments for your code - compact, self-contained, and much cheaper than the traditional server mansion.

Unlike traditional serverless platforms that must spin up a container on a cold start (which can feel like waiting for your old PC to boot up in 2005), Cloudflare Workers use isolates to start executing code within milliseconds. This architectural choice makes true edge computing practical, allowing your code to run across Cloudflare's network spanning 275+ cities worldwide. Your code is now more well-traveled than most people's Instagram feeds!

```
┌─────────────────────────────────┐
│         Cloudflare Edge         │
│  ┌─────────┐  ┌─────────┐       │
│  │ Isolate │  │ Isolate │  ...  │
│  └─────────┘  └─────────┘       │
└─────────────────────────────────┘
                 ▲
                 │
         Request from user
```

The Runtime Environment

Cloudflare Workers operate in a customized JavaScript runtime environment. Unlike Node.js, which provides access to the operating system, Workers execute in a sandboxed environment optimized for security and performance. It's like Node.js went on a security-focused diet and emerged leaner, meaner, and much less likely to expose your server's underbelly to the dark corners of the internet.

This environment includes:

Web Standards Support: Workers implement standard Web APIs such as `fetch`, `Request` and `Response`, making them familiar to web developers. No need to learn a new language - your JavaScript skills are transferable (finally, something in tech that doesn't require learning yet another framework!).

Service Workers API: The original programming model was inspired by browser Service Workers (registering a "fetch" event listener), providing a consistent development experience; the ES modules syntax (`export default { fetch }`) used by the Worker later in this article is now the recommended format. If you're a frontend developer, this is like finding out the foreign country you're visiting actually speaks your language.

Edge Key-Value Storage: KV namespaces offer distributed, eventually consistent storage directly integrated with Workers. It's like having a global refrigerator where you can store snacks... I mean data... that can be accessed from anywhere.

Durable Objects: Provide strongly consistent storage and coordination for state that must be shared across requests. Finally, a way to keep your application's state under control without it developing a mind of its own.
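To make that list a little more concrete, here is a minimal sketch of a module-syntax Worker built only from Web-standard pieces (`Request`, `Response`, `URL`) plus a KV-style read. The `GREETINGS` binding, the `greeting:<lang>` key scheme and the small `KVLike` interface are assumptions for illustration; in a real project you would declare the binding in your Wrangler configuration and use the `KVNamespace` type from `@cloudflare/workers-types`.

```typescript
// Minimal stand-in for the part of the KV API this sketch uses; with the real
// runtime types you would use KVNamespace from @cloudflare/workers-types.
interface KVLike {
  get(key: string): Promise<string | null>;
}

// Hypothetical bindings for this example (declared in your Wrangler config).
interface Env {
  GREETINGS: KVLike;
}

// JSON helper built only from the Web-standard Response constructor.
function json(data: unknown, status = 200): Response {
  return new Response(JSON.stringify(data), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

const worker = {
  // Module-syntax entry point: a standard Request in, a standard Response out.
  async fetch(request: Request, env: Env): Promise<Response> {
    const url = new URL(request.url);
    const lang = url.searchParams.get("lang") ?? "en";

    // Eventually consistent read from the KV-style store.
    const greeting = await env.GREETINGS.get(`greeting:${lang}`);
    if (greeting === null) {
      return json({ error: `No greeting for "${lang}"` }, 404);
    }
    return json({ greeting });
  },
};

export default worker;
```

Because the handler only touches standard APIs, you can call `worker.fetch` directly in any runtime that provides `Request` and `Response` (Node 18+, for instance) by passing a fake `env` object.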

The Request Lifecycle

When a request hits a Worker, it triggers the following sequence:

Routing: The request enters Cloudflare's edge network at the closest point to the user. "Welcome to your local neighborhood edge server!"

Worker Activation: An isolate is assigned to handle the request (or reused if already active). "Hey, wake up, you've got work to do!"

Script Execution: Your code executes, potentially interacting with other services or Cloudflare's built-in features. "Time to shine, little code snippet!"

Response Generation: The Worker generates a response that's sent back to the user. "Here's what you asked for, have a nice day!"

All this happens in milliseconds, making Workers ideal for latency-sensitive applications, API gateways, and edge computation. It's like ordering fast food, except the food is actually fast, good for you, and doesn't leave you with regrets.
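Step 2 has a practical consequence worth seeing in code: anything created at module scope is initialized once per isolate and then shared by every request that isolate handles. The sketch below (all names illustrative) keeps a request counter in a module-level variable. The same mechanism is what lets the uploader later in this article cache its `s3Client`; just remember the state is per isolate, not global, and can disappear whenever the runtime evicts the isolate, so durable or shared data belongs in KV or Durable Objects.

```typescript
// Module scope: evaluated once when the isolate loads this script,
// then reused by every request that isolate handles.
let requestsSeenByThisIsolate = 0;
const isolateStartedAt = Date.now();

const worker = {
  async fetch(_request: Request): Promise<Response> {
    // Runs per request, but inside the same isolate, so the counter keeps
    // growing until the runtime evicts the isolate.
    requestsSeenByThisIsolate += 1;
    return new Response(
      JSON.stringify({
        requestsSeenByThisIsolate,
        isolateAgeMs: Date.now() - isolateStartedAt,
      }),
      { headers: { "Content-Type": "application/json" } },
    );
  },
};

export default worker;
```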

Why Use Cloudflare Workers?

Performance Benefits

Reduced Latency: Code executes at the edge, closest to users. Because nobody likes waiting, especially not your impatient users refreshing the page every 2 seconds.

Near-Zero Cold Starts: Isolates spin up in milliseconds rather than the seconds a container can take. Cold starts are so 2020.

Global Distribution: Automatic deployment to 275+ locations worldwide. Your code now has more stamps in its passport than most travel influencers.

Cost Effectiveness

Cloudflare's pricing model is refreshingly straightforward:

Free tier with generous allowances

Pay-as-you-go pricing based on requests and CPU time, not wall-clock duration

No charges for idle time (If only gym memberships worked this way!)

Development Experience

Simple Deployment: Deploy with a single command using Wrangler CLI. No 27-step deployment checklist required.

Local Development: Test locally before deploying to production. Because nobody likes discovering bugs in production.

TypeScript Support: First-class TypeScript integration. Your compiler errors, now with global distribution!

Modules: Support for ES modules and npm packages. Bring your favorite dependencies to the edge party.

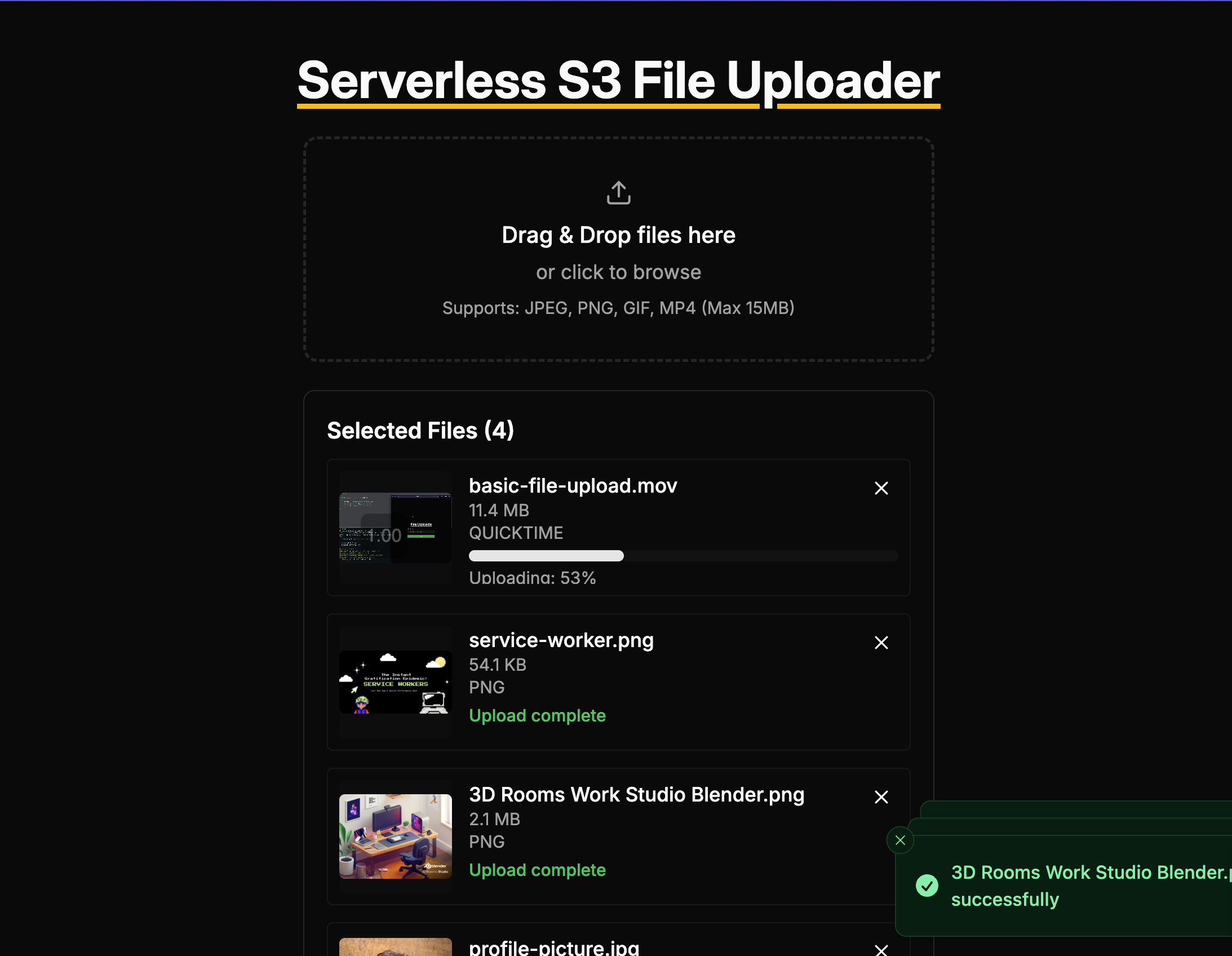

Building a Serverless S3 File Uploader

```
┌─────────────┐     ┌───────────────────┐     ┌──────────┐
│  React App  │────►│ Cloudflare Worker │────►│  AWS S3  │
└─────────────┘     └───────────────────┘     └──────────┘
       │                                           ▲
       └───────────────────────────────────────────┘
             Direct upload with presigned URL
```

Now, let's apply this knowledge by building a practical application: a serverless file uploader that uses Cloudflare Workers as a secure intermediary between your frontend and Amazon S3. Let's turn this theoretical knowledge into something useful that will actually impress your manager (or at least justify all that time you spent "researching" new tech).

Explore the complete code example on GitHub

System Architecture

Our file upload system consists of three main components:

React Frontend: A polished UI with drag-and-drop functionality, automatic uploads, progress tracking, and preview capabilities. Because nobody likes an ugly upload form from 2005.

Cloudflare Worker: A secure intermediary that generates presigned URLs for direct S3 uploads. Think of it as a bouncer who checks IDs before letting files into the S3 club.

Amazon S3: The storage backend where files are ultimately stored. The reliable filing cabinet of the internet.

This architecture offers several advantages:

Eliminates the need for a traditional backend server (goodbye, server maintenance nightmares!)

Leverages Cloudflare's global network for low-latency API responses

Keeps AWS credentials secure by never exposing them to the client (because we all know how well browsers keep secrets)

Utilizes S3 presigned URLs for secure, direct uploads

The Cloudflare Worker Implementation

Let's examine the Cloudflare Worker code that facilitates secure S3 uploads:

```javascript
import { getSignedUrl } from '@aws-sdk/s3-request-presigner';
import { S3Client, PutObjectCommand } from '@aws-sdk/client-s3';

// Cached at module scope so requests served by the same isolate reuse one client.
let s3Client = null;

export default {
  async fetch(request, env, ctx) {
    // Answer CORS preflight requests before doing any other work.
    if (request.method === "OPTIONS") {
      return handleCORS(request, env);
    }

    // Lazily create the S3 client from the Worker's environment bindings.
    if (!s3Client && env.AWS_ACCESS_KEY_ID && env.AWS_SECRET_ACCESS_KEY && env.AWS_REGION) {
      s3Client = new S3Client({
        region: env.AWS_REGION,
        credentials: {
          accessKeyId: env.AWS_ACCESS_KEY_ID,
          secretAccessKey: env.AWS_SECRET_ACCESS_KEY,
        },
      });
    }

    // Route by path and method. handleCORS, handleUploadUrl, handleConfirmUpload
    // and corsResponse are defined elsewhere in the same Worker module.
    const url = new URL(request.url);
    if (url.pathname === "/get-upload-url" && request.method === "POST") {
      return handleUploadUrl(request, env);
    } else if (url.pathname === "/confirm-upload" && request.method === "POST") {
      return handleConfirmUpload(request, env);
    }

    return new Response("Not found", { status: 404 });
  },
};
```

Our Worker exposes two key endpoints:

/get-upload-url: Generates a presigned URL for direct-to-S3 uploads

/confirm-upload: Validates successful uploads and returns the public URL
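On the frontend, `response.json()` hands back untyped data, so it can help to write the `/get-upload-url` contract down. The sketch below assumes only the fields the Worker's `handleUploadUrl` returns on success (`url`, `key`, `bucket`); the `UploadUrlResponse` type and the `isUploadUrlResponse` guard are hypothetical helpers, not part of the original project.

```typescript
// Success payload of POST /get-upload-url, as returned by handleUploadUrl.
interface UploadUrlResponse {
  url: string;    // presigned S3 PUT URL
  key: string;    // object key, e.g. "uploads/<timestamp>-<uuid>-<name>"
  bucket: string; // target bucket name
}

// Runtime check for untrusted JSON before it is used to upload anything.
function isUploadUrlResponse(value: unknown): value is UploadUrlResponse {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.url === "string" &&
    typeof v.key === "string" &&
    typeof v.bucket === "string"
  );
}
```

In `uploadFile`, you could run the parsed body through `isUploadUrlResponse` and throw before calling `axios.put` if the Worker returned an error payload such as `{ error: "..." }` instead.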

Let's break down the key components:

Initialization and Environment Variables

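The Worker reads its AWS credentials from Cloudflare's environment bindings. Store the access key ID and secret access key as encrypted secrets (for example with `wrangler secret put AWS_SECRET_ACCESS_KEY`) rather than as plain-text variables in your Wrangler config. Because the values exist only inside the Worker, they never reach the client. The client is created lazily and cached in the module-scope `s3Client` variable, so later requests handled by the same isolate reuse it:

```javascript
// Only build the client once per isolate, and only if every credential is present.
if (!s3Client && env.AWS_ACCESS_KEY_ID && env.AWS_SECRET_ACCESS_KEY && env.AWS_REGION) {
  s3Client = new S3Client({
    region: env.AWS_REGION,
    credentials: {
      accessKeyId: env.AWS_ACCESS_KEY_ID,
      secretAccessKey: env.AWS_SECRET_ACCESS_KEY,
    },
  });
}
```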
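One weakness of this initialization is that misconfiguration fails quietly: if any variable is missing, `s3Client` just stays `null`, and `AWS_S3_BUCKET`, which is later passed as `Bucket`, is never checked at all. A small helper such as the hypothetical `missingBindings` below could run at the top of `fetch` so the Worker logs exactly which binding is absent. The binding names are the ones this Worker reads; the helper itself is an illustrative assumption.

```typescript
// Every binding the uploader Worker reads from `env`.
const REQUIRED_BINDINGS = [
  "AWS_ACCESS_KEY_ID",
  "AWS_SECRET_ACCESS_KEY",
  "AWS_REGION",
  "AWS_S3_BUCKET",
] as const;

// Returns the names of required bindings that are absent or blank,
// in the order they are listed above.
function missingBindings(env: Record<string, unknown>): string[] {
  return REQUIRED_BINDINGS.filter((name) => {
    const value = env[name];
    return typeof value !== "string" || value.trim() === "";
  });
}
```

A caller would typically `console.error` the returned names and answer with a generic 500, keeping the configuration details out of the response body.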

Generating Presigned URLs

The handleUploadUrl function creates secure, time-limited URLs that allow direct uploads to S3:

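```javascript
async function handleUploadUrl(request, env) {
  try {
    if (!s3Client) {
      throw new Error("S3 client could not be initialized. Check AWS credentials.");
    }

    // Malformed JSON is a client error (400), not a server error.
    let body;
    try {
      body = await request.json();
    } catch {
      return corsResponse(JSON.stringify({ error: "Request body must be valid JSON" }), env, 400);
    }

    const { filename, filetype } = body;
    if (typeof filename !== "string" || !filename || typeof filetype !== "string" || !filetype) {
      return corsResponse(JSON.stringify({
        error: "Missing required parameters: filename and filetype"
      }), env, 400);
    }

    // Replace anything except word characters, whitespace, dots and hyphens,
    // so "/" and "\" cannot introduce extra path segments.
    const sanitizedFilename = filename.replace(/[^\w\s.-]/g, '_');
    // Timestamp + UUID prefix keeps keys unique even for identical filenames.
    const key = `uploads/${Date.now()}-${crypto.randomUUID()}-${sanitizedFilename}`;

    const command = new PutObjectCommand({
      Bucket: env.AWS_S3_BUCKET,
      Key: key,
      ContentType: filetype, // MIME type declared by the client
    });

    // The presigned URL stays valid for one hour (3600 seconds).
    const presignedUrl = await getSignedUrl(s3Client, command, {
      expiresIn: 3600,
    });

    return corsResponse(JSON.stringify({
      url: presignedUrl,
      key,
      bucket: env.AWS_S3_BUCKET
    }), env);
  } catch (error) {
    // Log the details server-side; send only a generic message to the client.
    console.error(`Error generating presigned URL: ${error}`);
    return corsResponse(JSON.stringify({
      error: "Failed to generate upload URL"
    }), env, 500);
  }
}
```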

This function:

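Sanitizes the filename, replacing every character that is not an ASCII letter, digit, underscore, whitespace, dot or hyphen (including / and \) with an underscore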

Creates a unique path with timestamp and UUID to avoid collisions

Uses AWS SDK to generate a presigned PUT URL

Returns the URL to the client for direct upload
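To see exactly what the sanitization and key scheme produce, the sketch below pulls the same regex and key format into standalone functions. `sanitizeFilename` and `buildUploadKey` are hypothetical names, and the timestamp and UUID are passed in (instead of calling `Date.now()` and `crypto.randomUUID()` as the Worker does) so the output is deterministic.

```typescript
// Same character allowlist as handleUploadUrl: word characters, whitespace,
// dots and hyphens survive; everything else (including "/" and "\") becomes "_".
function sanitizeFilename(filename: string): string {
  return filename.replace(/[^\w\s.-]/g, "_");
}

// Mirrors the Worker's key format: uploads/<timestamp>-<uuid>-<sanitized name>.
function buildUploadKey(filename: string, timestamp: number, uuid: string): string {
  return `uploads/${timestamp}-${uuid}-${sanitizeFilename(filename)}`;
}
```

Two consequences are worth knowing: a name like `../../etc/passwd` becomes `.._.._etc_passwd`, so it cannot escape the `uploads/` prefix, and because `\w` in JavaScript only matches `[A-Za-z0-9_]`, accented letters are replaced too (`résumé (1).pdf` becomes `r_sum_ _1_.pdf`).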

Security Considerations

The Worker implements several security best practices:

Input Validation: Checking for required parameters

Filename Sanitization: Preventing path traversal attacks

CORS Handling: Properly configured cross-origin resource sharing

Error Handling: Graceful JSON error responses, with the full error logged server-side via console.error
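The Worker code calls `handleCORS` and `corsResponse` without showing them. Below is one possible shape for those helpers, written to match the call sites (`handleCORS(request, env)` and `corsResponse(body, env, status)`); it is a sketch, not the repository's code. It assumes a hypothetical `ALLOWED_ORIGIN` variable holding your frontend's origin and fails closed when that variable is not set.

```typescript
// Hypothetical binding: the one origin allowed to call this Worker,
// e.g. "https://uploader.example.com".
interface CorsEnv {
  ALLOWED_ORIGIN?: string;
}

// Headers shared by preflight and regular responses.
function corsHeaders(env: CorsEnv): Record<string, string> {
  const headers: Record<string, string> = {
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
    // Lets browsers cache the preflight result (they may cap this value).
    "Access-Control-Max-Age": "86400",
  };
  // Fail closed: with no configured origin, browsers block cross-origin calls.
  if (env.ALLOWED_ORIGIN) {
    headers["Access-Control-Allow-Origin"] = env.ALLOWED_ORIGIN;
  }
  return headers;
}

// Answers the browser's OPTIONS preflight request.
function handleCORS(request: Request, env: CorsEnv): Response {
  const origin = request.headers.get("Origin");
  if (!env.ALLOWED_ORIGIN || origin !== env.ALLOWED_ORIGIN) {
    return new Response(null, { status: 403 });
  }
  return new Response(null, { status: 204, headers: corsHeaders(env) });
}

// Wraps a JSON string in a Response that carries the CORS headers.
function corsResponse(body: string, env: CorsEnv, status = 200): Response {
  return new Response(body, {
    status,
    headers: { ...corsHeaders(env), "Content-Type": "application/json" },
  });
}
```

Echoing one configured origin, rather than `*`, means pages on other origins cannot read presigned URLs from the Worker's responses. Non-browser clients ignore CORS entirely, so this is not a substitute for authentication.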

The React Frontend

Our React frontend provides a polished user experience with numerous advanced features:

```tsx
// useState comes from React and axios from the axios package; toast, API_URL
// and the FileInfo type are imported or defined elsewhere in the app.
import { useState } from "react";
import axios from "axios";

function App() {
  const [files, setFiles] = useState<FileInfo[]>([]);
  const [isDragging, setIsDragging] = useState<boolean>(false);
  const [selectedPreview, setSelectedPreview] = useState<FileInfo | null>(null);

  // ... additional code ...

  const uploadFile = async (fileInfo: FileInfo) => {
    try {
      // Update file status to uploading
      setFiles((prev) =>
        prev.map((f) =>
          f.id === fileInfo.id ? { ...f, status: "uploading", progress: 0 } : f
        )
      );

      // Step 1: Get a presigned URL from the Worker
      const response = await fetch(`${API_URL}/get-upload-url`, {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
        },
        body: JSON.stringify({
          filename: fileInfo.name,
          filetype: fileInfo.type,
        }),
      });

      if (!response.ok) {
        throw new Error(`Failed to get upload URL: ${response.statusText}`);
      }

      const presignedUrl = await response.json();

      // Step 2: Upload directly to S3 using axios with onUploadProgress.
      // The Content-Type matches the filetype sent to the Worker in step 1.
      await axios.put(presignedUrl.url, fileInfo.file, {
        headers: {
          "Content-Type": fileInfo.type,
        },
        onUploadProgress: (progressEvent) => {
          // Fall back to the File's size if the browser doesn't report a total
          const percentCompleted = Math.round(
            (progressEvent.loaded * 100) / (progressEvent.total || fileInfo.size)
          );
          setFiles((prev) =>
            prev.map((f) =>
              f.id === fileInfo.id ? { ...f, progress: percentCompleted } : f
            )
          );
        },
      });

      // Update status on successful upload. The URL below is only viewable if
      // the bucket allows public reads; otherwise S3 answers 403.
      setFiles((prev) =>
        prev.map((f) =>
          f.id === fileInfo.id
            ? {
                ...f,
                status: "success",
                progress: 100,
                url: `https://${presignedUrl.bucket}.s3.amazonaws.com/${presignedUrl.key}`,
              }
            : f
        )
      );

      // Show success toast
      toast.success(`${fileInfo.name} uploaded successfully`);
    } catch (error) {
      // Error handling...
    }
  };
}
```
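The success branch builds the object URL by hand as `https://${bucket}.s3.amazonaws.com/${key}`, without percent-encoding the key (sanitized names can still contain spaces) and using S3's legacy global endpoint. Since the presigned URL already points at the endpoint the SDK resolved, with the key encoded, one alternative is to strip its signature query string. `objectUrlFromPresignedUrl` is a hypothetical helper sketching that; like the original, it assumes objects in the bucket are publicly readable, otherwise S3 answers 403 and you would hand out a presigned GET URL instead.

```typescript
// Derives the plain object URL from a presigned PUT URL by dropping the
// signature query string (X-Amz-Algorithm, X-Amz-Signature, ...).
function objectUrlFromPresignedUrl(presignedUrl: string): string {
  const url = new URL(presignedUrl);
  url.search = "";
  url.hash = "";
  return url.toString();
}
```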

Key Frontend Features

What makes this file uploader special?

Instant Upload Initiation: Files begin uploading immediately upon selection - no additional clicks required. Because life's too short to wait for your users to click "upload."

Real-time Progress Visualization: Users see accurate upload progress with animated progress bars, fed by the axios onUploadProgress callback in uploadFile.

Animations: Framer Motion's spring transitions give the interface a premium feel.

Comprehensive Validation: Client-side checks before upload begins:

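```tsx
// Runs once per selected file; `return` skips to the next file.
// ALLOWED_TYPES, MAX_FILE_SIZE and invalidFiles come from the surrounding code.

// Validate file type
if (!ALLOWED_TYPES.includes(selectedFile.type)) {
  invalidFiles.push({
    name: selectedFile.name,
    reason: "Unsupported file type"
  });
  return;
}

// Validate file size
if (selectedFile.size > MAX_FILE_SIZE) {
  invalidFiles.push({
    name: selectedFile.name,
    reason: "Exceeds 15MB limit"
  });
  return;
}
```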

Intelligent Error Handling: Toast notifications that consolidate similar errors:

```tsx
// Group by reason
const byReason = invalidFiles.reduce((acc, curr) => {
  acc[curr.reason] = acc[curr.reason] || [];
  acc[curr.reason].push(curr.name);
  return acc;
}, {} as Record<string, string[]>);

// Show toast for each reason group
Object.entries(byReason).forEach(([reason, files]) => {
  toast.error(
    <div>
      <p className="font-medium mb-1">Failed to add {files.length} file(s)</p>
      <p className="text-sm">Reason: {reason}</p>
      {/* Additional rendering logic */}
    </div>,
    { duration: 5000 }
  );
});
```

Interactive Preview System: Modal-based preview with maximize functionality:

```tsx
<Dialog
  open={selectedPreview !== null}
  onOpenChange={(open) => !open && closePreviewModal()}
>
  <DialogContent className="sm:max-w-3xl md:max-w-4xl max-h-screen overflow-hidden p-1 sm:p-2">
    {/* Preview content */}
  </DialogContent>
</Dialog>
```
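The type and size checks above run only in the browser, so anyone calling `/get-upload-url` directly can skip them. Mirroring the type check in the Worker closes part of that gap; the sketch below does so with a hypothetical allowlist (the article does not list the contents of `ALLOWED_TYPES`, so substitute your own) and an illustrative function name. Note that it validates only the declared MIME type, not the file's bytes, and that a presigned PUT URL does not by default cap the object's size; for a hard limit, S3 presigned POST policies support a `content-length-range` condition.

```typescript
// Hypothetical allowlist: replace with the MIME types your frontend accepts.
const SERVER_ALLOWED_TYPES: ReadonlySet<string> = new Set([
  "image/png",
  "image/jpeg",
  "application/pdf",
]);

type TypeCheck = { ok: true } | { ok: false; status: 400; error: string };

// Re-checks the client-declared MIME type before anything is presigned.
// MIME types are case-insensitive, so compare in lower case.
function checkDeclaredType(filetype: unknown): TypeCheck {
  if (typeof filetype !== "string" || !SERVER_ALLOWED_TYPES.has(filetype.toLowerCase())) {
    return { ok: false, status: 400, error: "Unsupported file type" };
  }
  return { ok: true };
}
```

In `handleUploadUrl`, this check would sit right after the missing-parameter check, returning `corsResponse(JSON.stringify({ error }), env, 400)` when `ok` is false.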

Conclusion

Cloudflare Workers represent a paradigm shift in cloud computing, bringing computation closer to users and enabling new architectural patterns. In this article, we've explored the underpinnings of this technology and demonstrated its practical application by building a serverless file uploader.

The combination of React, Cloudflare Workers, and S3 presigned URLs offers a powerful, scalable, and secure approach to file uploads without the need for traditional backend servers. This architecture leverages the best aspects of each technology:

React's component model and rich ecosystem for UI development

Cloudflare Workers' edge computing capabilities for low-latency API interactions

S3's reliable object storage with secure direct upload capabilities

As edge computing continues to evolve, we can expect to see more applications migrating functionality to the edge, reducing latency and improving user experiences worldwide. Whether you're building a simple API or a complex application, consider how moving logic to the edge might benefit your users and your architecture. The file uploader we've built today is just the beginning of what's possible with edge computing.

Explore the complete code example on GitHub or reach out for a chat on implementing Cloudflare Workers in your architecture.


Written by

Hrushi Borhade


Software engineer who loves building things that matter. Fascinated by the art of storytelling, human psychology and business. Usually caffeinated, occasionally filming, perpetually curious