Part 2: Secure Your EKS Cluster with IAM Users, Roles & RBAC – The Right Way!

Neamul Kabir Emon

Add IAM User & IAM Role to AWS EKS

Welcome to Day 2 of the Amazon EKS Production-Ready Series! In this tutorial, we’ll configure Role-Based Access Control (RBAC) by adding IAM users and roles that allow granular access to your EKS cluster. Whether it’s for developers with limited visibility or cluster administrators with full control, this guide walks you through the entire process using Terraform and Kubernetes manifests.

👉 If you haven’t followed Part 1 yet, start here to set up your EKS cluster infrastructure first:

🔗 Part 1 Article: https://neamulkabiremon.hashnode.dev/from-zero-to-production-day-1-build-a-scalable-amazon-eks-cluster-with-terraform

🔗 GitHub Repository: terraform-eks-production-cluster

🎯 Goal of This Article

By the end of this tutorial, you’ll have:

Created an IAM user with limited access (viewer)

Created a Kubernetes ClusterRole and ClusterRoleBinding

Configured cluster access with Terraform's aws_eks_access_entry (EKS access entries, which take the place of the aws-auth ConfigMap)

Created an IAM user and role for full admin access

Validated RBAC access using multiple AWS CLI profiles

🧑💻 Step 1: Create Viewer Role (Read-Only Access)

First, create a directory for RBAC manifests:

mkdir 01-rbac-viewer-role && cd 01-rbac-viewer-role

0-viewer-cluster-role.yaml

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: viewer
rules:
  - apiGroups: ["*"]
    resources: ["deployments", "configmaps", "pods", "secrets", "services"]
    verbs: ["get", "list", "watch"]

1-viewer-cluster-role-binding.yaml

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: my-viewer-binding
roleRef:
  kind: ClusterRole
  name: viewer
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: Group
    name: my-viewer
    apiGroup: rbac.authorization.k8s.io

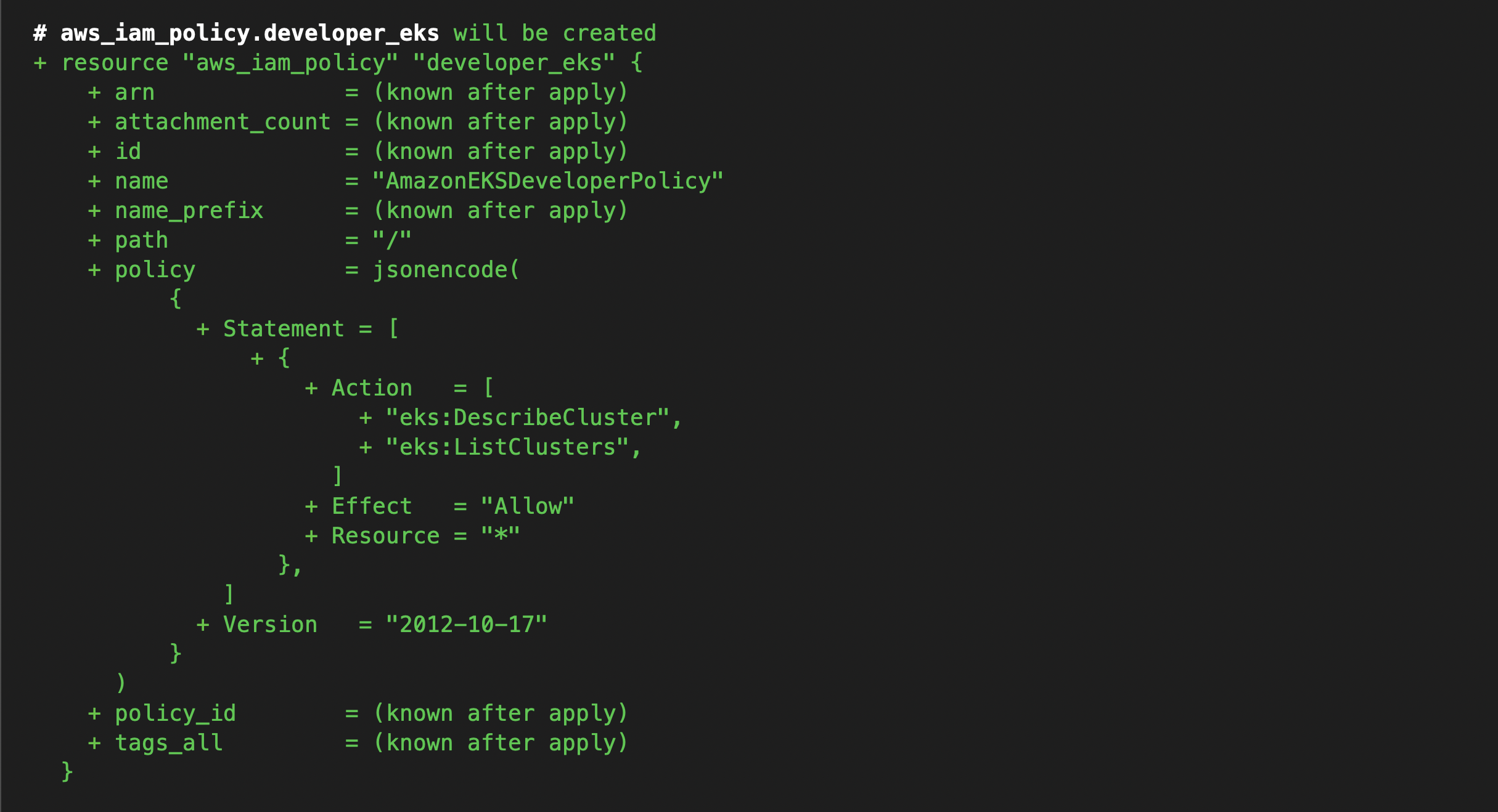

🛠️ Step 2: Add Developer IAM User in Terraform

Create 9-add-developer-user.tf in the Terraform root:

resource "aws_iam_user" "developer" {
  name = "developer"
}

resource "aws_iam_policy" "developer_eks" {
  name = "AmazonEKSDeveloperPolicy"

  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "eks:DescribeCluster",
        "eks:ListClusters"
      ],
      "Resource": "*"
    }
  ]
}
POLICY
}

resource "aws_iam_user_policy_attachment" "developer_eks" {
  user       = aws_iam_user.developer.name
  policy_arn = aws_iam_policy.developer_eks.arn
}

resource "aws_eks_access_entry" "developer" {
  cluster_name      = aws_eks_cluster.eks.name
  principal_arn     = aws_iam_user.developer.arn
  kubernetes_groups = ["my-viewer"]
}

Apply the Terraform config:

terraform apply -auto-approve

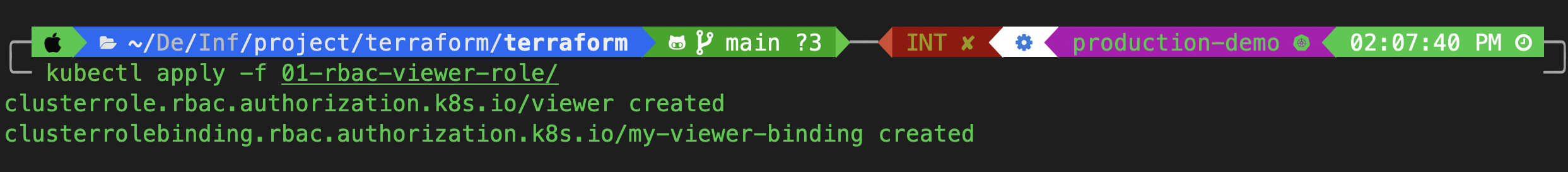
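To confirm that Terraform created the access entry, you can query it with the AWS CLI. The sketch below is only an illustration: the helper name access_entry_groups is made up for this example, and it assumes your current credentials are allowed to call eks:DescribeAccessEntry on the cluster.

```shell
# Hypothetical helper: print the Kubernetes groups that an EKS access entry
# maps an IAM principal to.
# Usage: access_entry_groups CLUSTER_NAME PRINCIPAL_ARN
access_entry_groups() {
  aws eks describe-access-entry \
    --cluster-name "$1" \
    --principal-arn "$2" \
    --query 'accessEntry.kubernetesGroups' \
    --output text
}

# Example call (replace the account ID placeholder with your own):
# access_entry_groups production-demo "arn:aws:iam::<your-account-id>:user/developer"
```

For the developer user, the result should include my-viewer, the group that the viewer ClusterRoleBinding targets.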

Apply the Kubernetes RBAC:

kubectl apply -f 01-rbac-viewer-role/

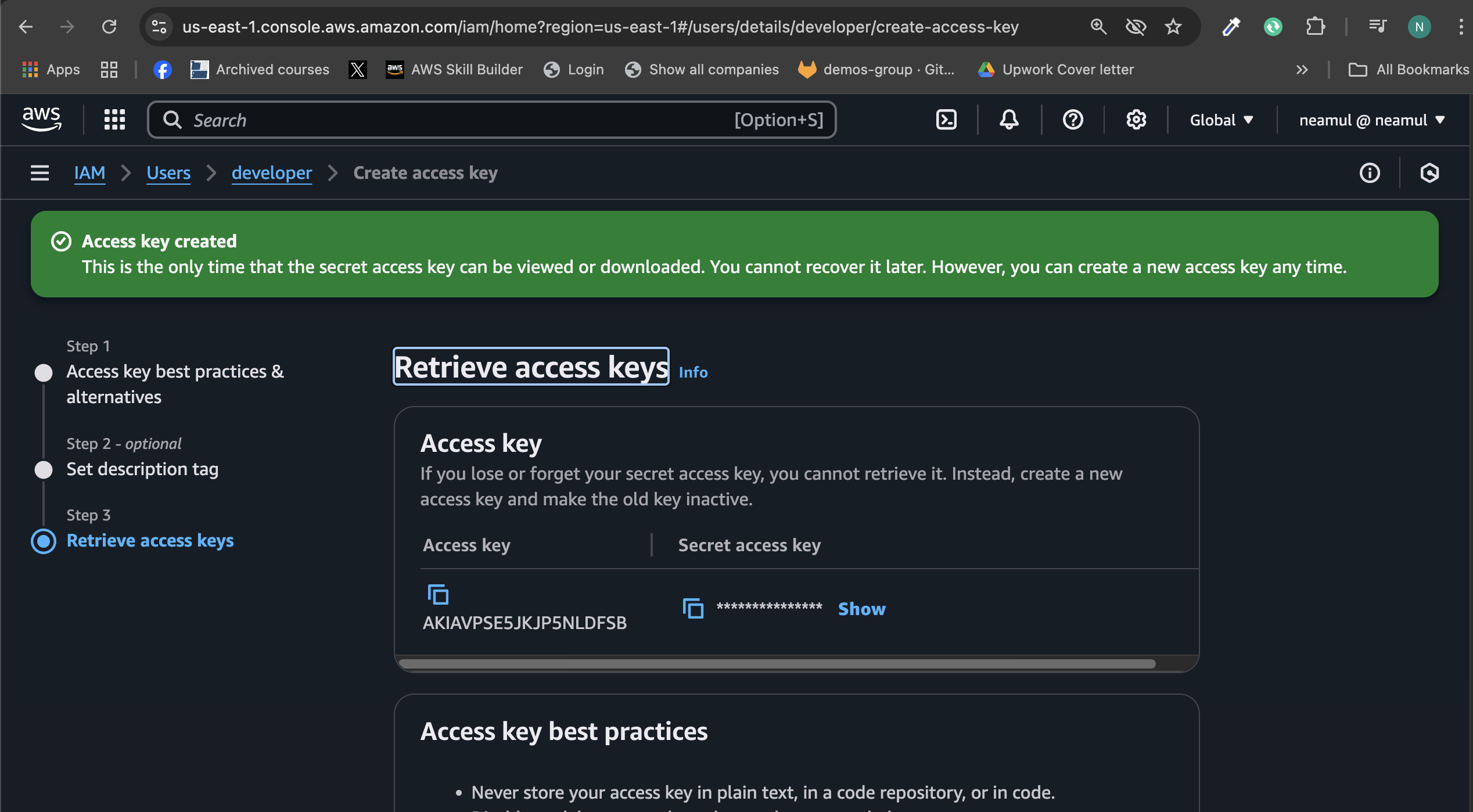

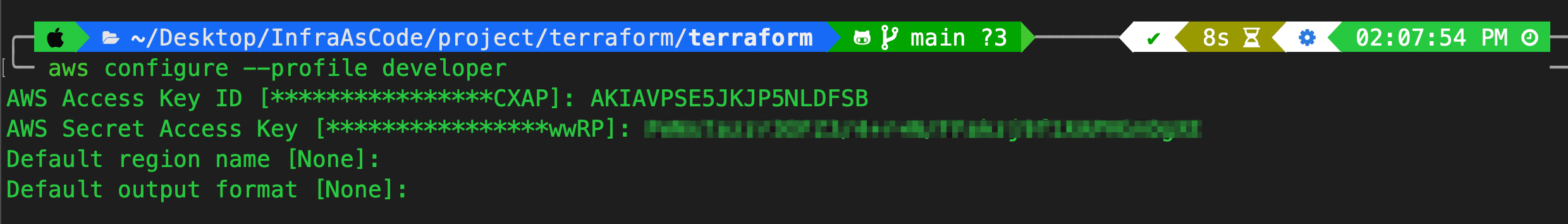
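The group binding can also be checked from your admin session, before any developer credentials exist, by using kubectl impersonation. This is a minimal sketch, assuming your current identity is permitted to impersonate users and groups (a cluster admin normally is). The function name can_group and the user name rbac-test-user are placeholders invented for this example; only the group name comes from the manifests.

```shell
# Hypothetical helper: ask the API server whether a member of a Kubernetes
# group may perform an action, by impersonating a throwaway user in that group.
# Usage: can_group GROUP VERB RESOURCE
can_group() {
  kubectl auth can-i "$2" "$3" --as=rbac-test-user --as-group="$1"
}

# Example calls:
# can_group my-viewer get pods
# can_group my-viewer delete pods
```

With the viewer role above, the first call should answer yes and the second no, because the role grants only get, list, and watch.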

Generate access keys for the developer user in the AWS console:

IAM > Users > developer > Create access key

Then configure a CLI profile with those keys:

aws configure --profile developer
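Before connecting to the cluster, it can help to check that the new profile resolves to the identity you expect. A small sketch, where the helper name profile_identity is an assumption of this example:

```shell
# Hypothetical helper: show which IAM identity a named AWS CLI profile resolves to.
# Usage: profile_identity PROFILE_NAME
profile_identity() {
  aws sts get-caller-identity --profile "$1" --query Arn --output text
}

# Example call:
# profile_identity developer
```

For the developer profile, the printed ARN should end in :user/developer. If it shows a different user, the access keys were saved under the wrong profile.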

Connect to EKS:

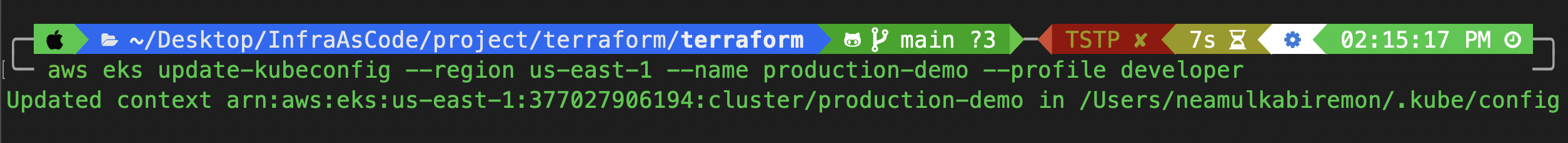

aws eks update-kubeconfig --region us-east-1 --name production-demo --profile developer

Test access:

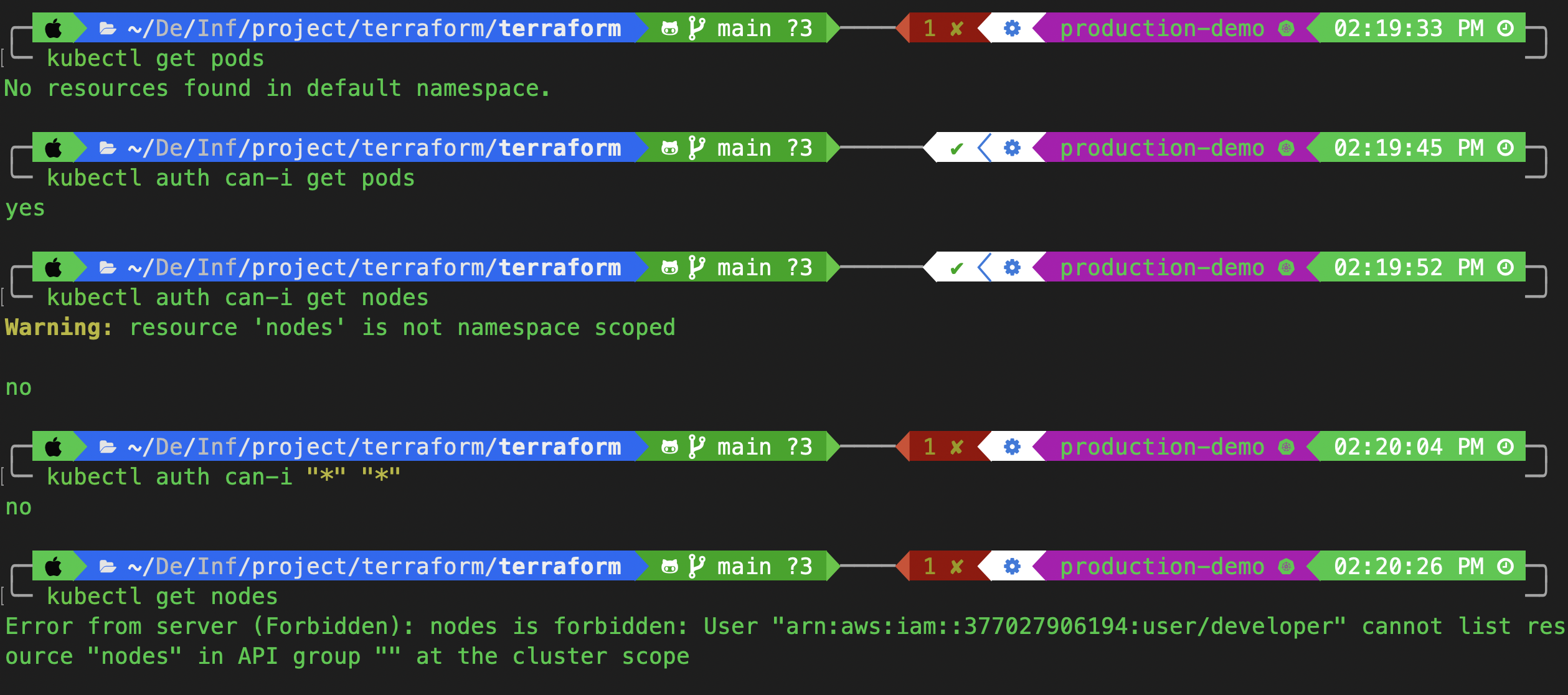

kubectl get pods

kubectl auth can-i get pods

kubectl auth can-i get nodes

kubectl auth can-i "*" "*"

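The output confirms that the viewer role works as intended: kubectl get pods succeeds and can-i get pods returns yes, while can-i get nodes and can-i "*" "*" return no. The developer can read the resources listed in the ClusterRole but cannot modify them or access anything outside that list.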
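These checks can be scripted so they are easy to repeat after every RBAC change. The following is a sketch rather than a finished test suite; expect_access is a hypothetical helper name, and the expected answers in the example calls are derived from the viewer ClusterRole defined in Step 1.

```shell
# Hypothetical helper: compare the answer from `kubectl auth can-i` with the
# answer we expect, and report PASS or FAIL.
# Usage: expect_access EXPECTED VERB RESOURCE   (EXPECTED is "yes" or "no")
expect_access() {
  # can-i exits non-zero when the answer is "no", so ignore the exit status
  # and compare the printed answer instead.
  actual=$(kubectl auth can-i "$2" "$3" 2>/dev/null || true)
  if [ "$actual" = "$1" ]; then
    echo "PASS: $2 $3 -> $actual"
  else
    echo "FAIL: $2 $3 -> $actual (expected $1)"
    return 1
  fi
}

# Example calls while kubeconfig points at the developer profile:
# expect_access yes get pods
# expect_access no get nodes
# expect_access no delete pods
# expect_access no '*' '*'
```

Comparing the printed answer rather than the exit code keeps the helper usable in scripts that run with set -e.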

🧑💼 Step 3: Add Admin IAM Role + Manager User

Next, we'll create a role for cluster admins. First, switch kubeconfig back to the original AWS user that has full access:

aws eks update-kubeconfig --region us-east-1 --name production-demo

Terraform Configuration 10-add-manager-role.tf

data "aws_caller_identity" "current" {}

resource "aws_iam_role" "eks_admin" {
  name = "${local.env}-${local.eks_name}-eks-admin"

  assume_role_policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Principal": {
        "AWS": "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
      }
    }
  ]
}
POLICY
}

resource "aws_iam_policy" "eks_admin" {
  name = "AmazonEKSAdminPolicy"

  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "eks:*"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": "*",
      "Condition": {
        "StringEquals": {
          "iam:PassedToService": "eks.amazonaws.com"
        }
      }
    }
  ]
}
POLICY
}

resource "aws_iam_role_policy_attachment" "eks_admin" {
  role       = aws_iam_role.eks_admin.name
  policy_arn = aws_iam_policy.eks_admin.arn
}

resource "aws_iam_user" "manager" {
  name = "manager"
}

resource "aws_iam_policy" "eks_assume_admin" {
  name = "AmazonEKSAssumeAdminPolicy"

  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "sts:AssumeRole"
      ],
      "Resource": "${aws_iam_role.eks_admin.arn}"
    }
  ]
}
POLICY
}

resource "aws_iam_user_policy_attachment" "manager" {
  user       = aws_iam_user.manager.name
  policy_arn = aws_iam_policy.eks_assume_admin.arn
}

resource "aws_eks_access_entry" "manager" {
  cluster_name      = aws_eks_cluster.eks.name
  principal_arn     = aws_iam_role.eks_admin.arn
  kubernetes_groups = ["my-admin"]
}

Create Cluster Role Binding

mkdir 02-clusterrolebinding-admin-access && cd 02-clusterrolebinding-admin-access

admin-cluster-role-binding.yaml

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: my-admin-binding
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: Group
    name: my-admin
    apiGroup: rbac.authorization.k8s.io

Now, go back to the root directory and apply this role binding.

cd ..

kubectl apply -f 02-clusterrolebinding-admin-access/

Apply the Terraform config:

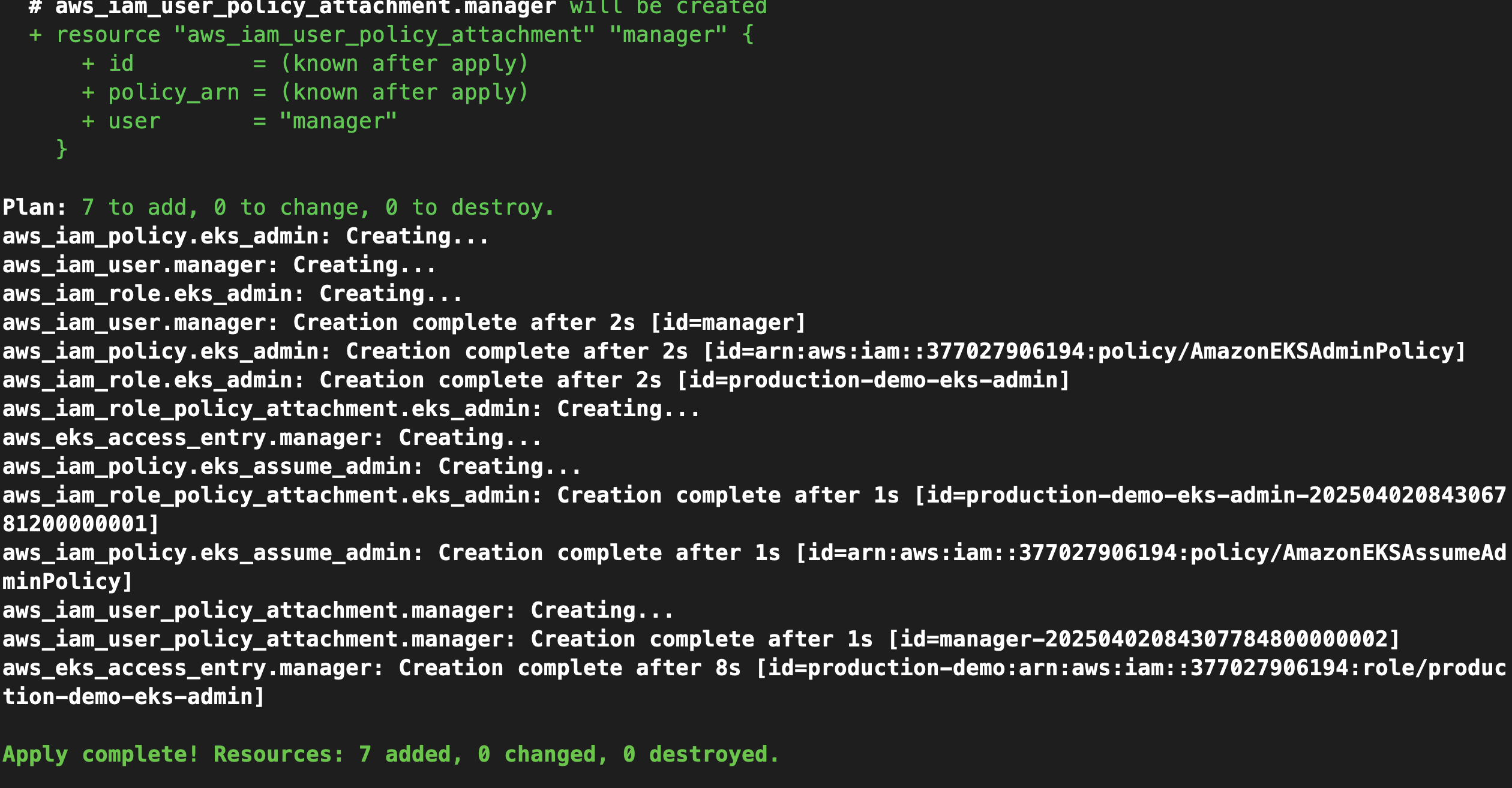

terraform apply -auto-approve

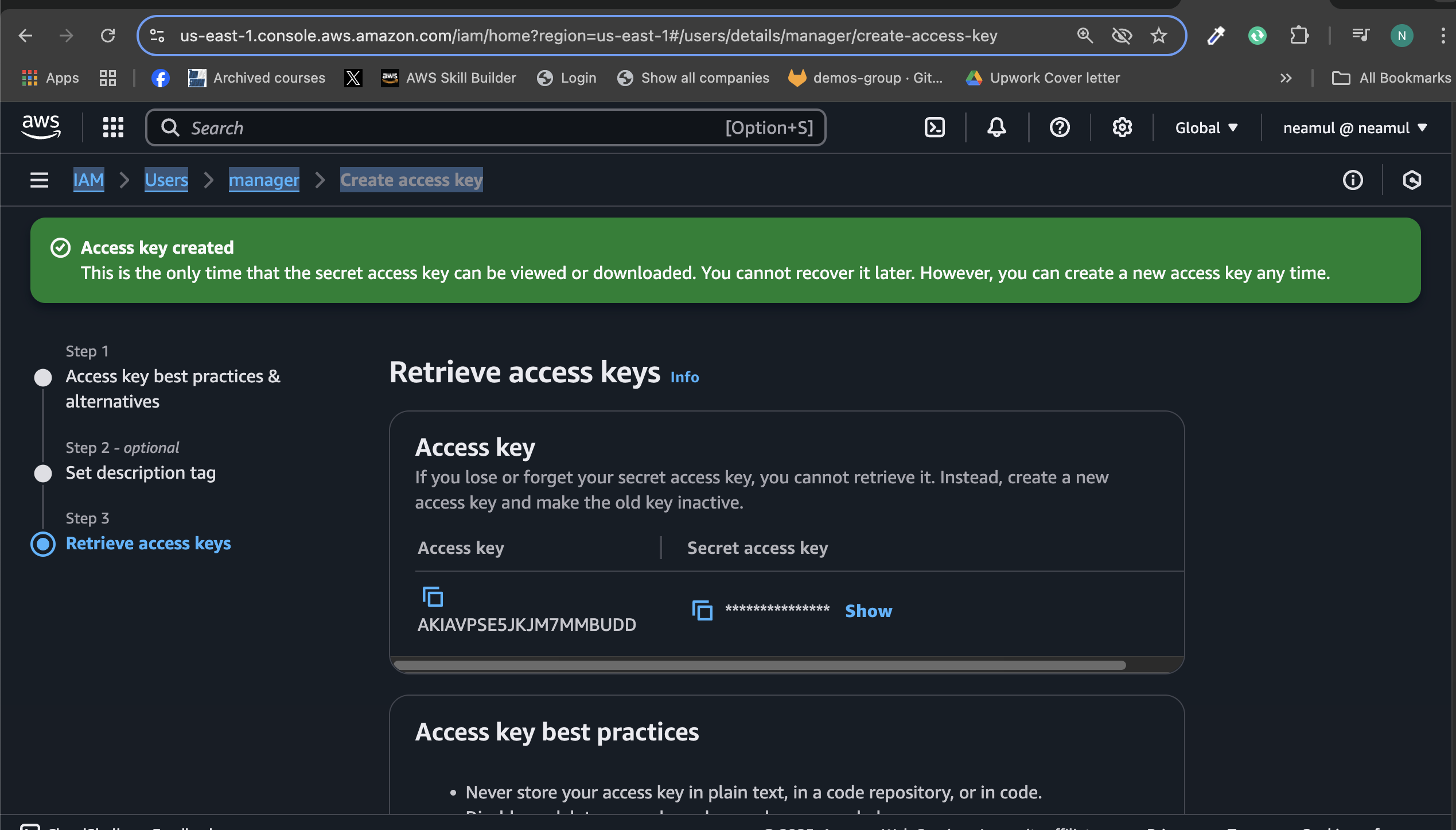

Once created, we need to generate the credentials to connect to the cluster and test the user. To do this, go to the AWS console.

IAM > Users > manager > Create access key

Copy the access key ID and the secret access key; aws configure will prompt for both.

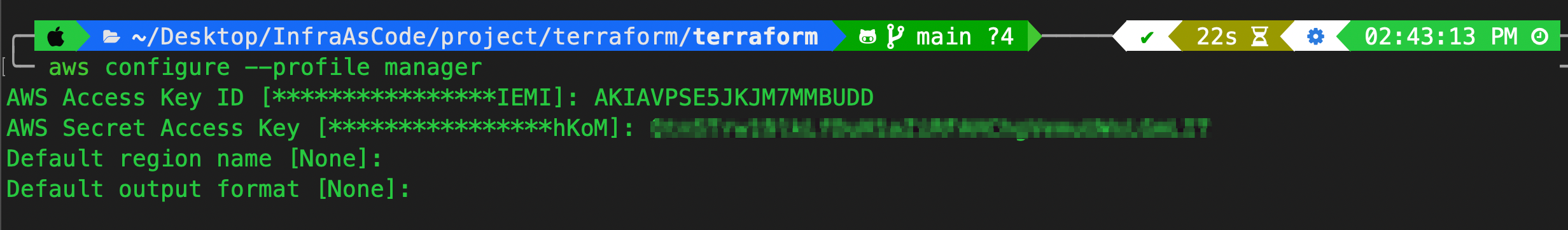

Now return to the CLI and configure a profile for the manager user with those credentials:

aws configure --profile manager

Next, we need to add one more AWS profile by hand.

Edit ~/.aws/config and add the eks-admin profile below the manager profile. Replace <your-account-id> in the role ARN with your own account ID, then save the file:

[default]
region = us-east-1
output = json

[profile root-mfa-delete-demo]

[profile developer]

[profile manager]

[profile eks-admin]
role_arn = arn:aws:iam::<your-account-id>:role/production-demo-eks-admin
source_profile = manager
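If you would rather not edit the file by hand, the same two settings can be written with aws configure set. This is a sketch of an alternative, not the method used in this series; the helper name add_assume_role_profile is invented for the example.

```shell
# Hypothetical helper: create or update a CLI profile that assumes an IAM role
# using the credentials of another profile.
# Usage: add_assume_role_profile PROFILE_NAME ROLE_ARN SOURCE_PROFILE
add_assume_role_profile() {
  aws configure set role_arn "$2" --profile "$1" &&
    aws configure set source_profile "$3" --profile "$1"
}

# Example call (replace the account ID placeholder with your own):
# add_assume_role_profile eks-admin \
#   "arn:aws:iam::<your-account-id>:role/production-demo-eks-admin" manager
```

Either way, running aws sts get-caller-identity --profile eks-admin afterwards should return an assumed-role ARN for the admin role rather than the manager user's ARN.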

Update kubeconfig:

aws eks update-kubeconfig --region us-east-1 --name production-demo --profile eks-admin

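This time, use the eks-admin profile, not the manager profile. The manager user has no access entry of its own; it reaches the cluster only by assuming the admin role.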

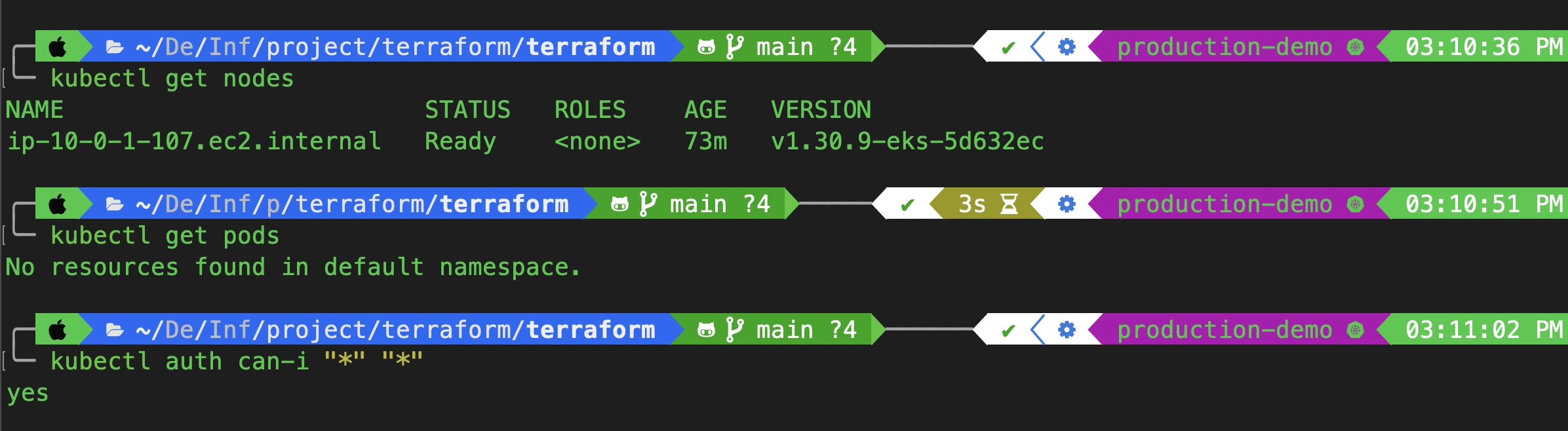

With admin access configured, test it to confirm that the role has full control of the cluster.

Test access:

kubectl get nodes

kubectl get pods

kubectl auth can-i "*" "*"

✅ Summary

In this tutorial, you:

Created a developer IAM user with limited access to EKS

Set up RBAC via ClusterRoles and ClusterRoleBindings

Provisioned a manager IAM user and admin role with elevated permissions

Validated RBAC functionality with multiple AWS profiles

⏭️ What’s Next? In the next article (Part 3), we’ll cover:

Installing Metrics Server using Helm

Enabling Horizontal Pod Autoscaler (HPA) in EKS

Deploying a test app to observe autoscaling in action

📌 Follow me to stay updated and get notified when Day 3 drops!

#EKS #Terraform #AWS #RBAC #DevOps #Kubernetes #IAM #InfrastructureAsCode #CloudSecurity #IaC

Written by

Neamul Kabir Emon

Hi! I'm a highly motivated Security and DevOps professional with 7+ years of combined experience. My expertise bridges penetration testing and DevOps engineering, allowing me to deliver a comprehensive security approach.