Master Docker from Beginner to Expert: A Comprehensive Guide

Akash Prasad

What is Docker? 🤔

Docker is a tool that packages your application into an isolated environment called a container. Why would you need that? Say your application is ready and you want to share it with your teammates for testing. Without Docker, each teammate has to set the application up manually on their own system, and because every system is different, each of them has to work through a different set of configuration steps. Even after all that hassle, they may still run into issues. Docker solves this by packaging your entire application together with all of its configuration. This package is called a Docker image. Anyone who wants to start your application just needs to get the image and run it with Docker. Docker then creates a container, an isolated environment in which the image runs, so they can start your application without any manual setup.

Docker Image

A Docker image is a pre-configured package that contains everything needed to run the application: its code, its dependencies, and its settings. Images can be stored in a registry, either private or public, such as Docker Hub.

So, what is a Container?

A container is an isolated environment that runs independently of the rest of your host machine. It is basically a running instance of a Docker image. And the best thing about it is that you can run as many containers as you want from the same image, and they won’t conflict with each other as long as each one gets its own name and host port.

Docker Vs Virtual Machine

Both Docker and virtual machines are used to create virtual environments, but they work in very different ways.

| Feature | Docker | Virtual Machine |
| --- | --- | --- |
| Architecture | Uses the host OS kernel, virtualizes only the application layer | Creates a full virtual OS with its own kernel and application layer |
| Performance | Lightweight and faster | Heavier and slower |
| Resource Usage | Uses fewer system resources | Requires more CPU, memory, and storage |
| Scalability | Easily spins up multiple containers | More difficult to scale |

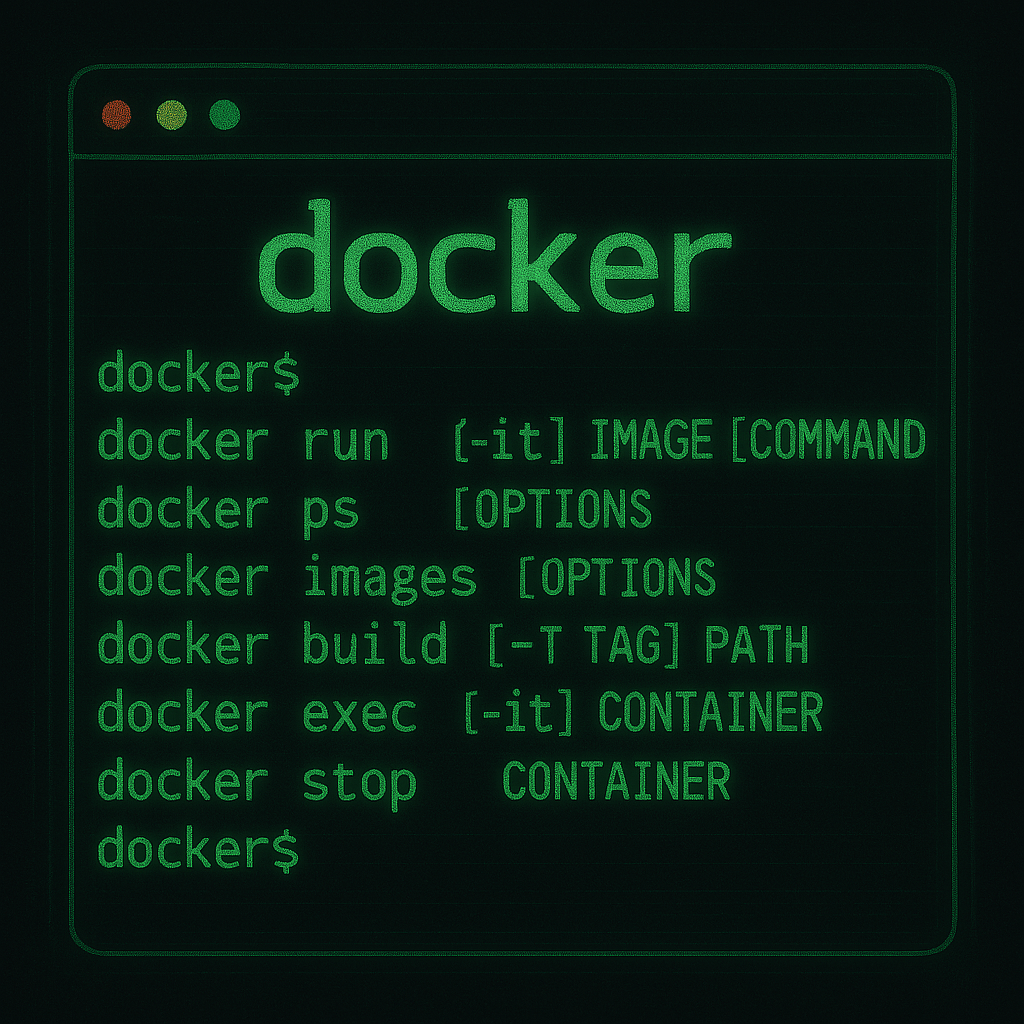

Docker Commands

To download an image from Docker Hub

docker pull <image-name>

So the command to download an image of Ubuntu would be

docker pull ubuntu

To view all downloaded images

docker images

To run an image or start a Container

docker run <image-name or image-id>

docker run -d <image-name or image-id> # detached mode (runs in the background)

docker run --name <custom-name> <image-name or image-id> # sets a custom name for your container

To stop a container

docker stop <container-id>

If the image is not available locally, docker run pulls it first and then starts the container. You can also specify a version (tag) of the image

docker run redis:7

To view all containers

docker ps #running ones

docker ps -a #all

To start a stopped container again (docker restart <container-id> restarts a running one)

docker start <container-id>

So, how do we access these containers from the host?

We can bind a host port to a container port, which makes the container accessible from the host

docker run -d -p 3000:6379 redis

# <host-port:container-port>
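As a quick check of the mapping above, the sketch below splits the `<host-port>:<container-port>` pair and, if a Docker daemon is reachable, asks Docker which ports it actually published. The container name `redis-demo` is a placeholder chosen for this example, not something from the commands above.

```shell
name=redis-demo                 # hypothetical container name for this demo
mapping="3000:6379"             # <host-port>:<container-port>
host_port=${mapping%%:*}        # everything before the colon
container_port=${mapping##*:}   # everything after the colon
echo "host port $host_port forwards to container port $container_port"

if docker info >/dev/null 2>&1; then
  docker run -d --name "$name" -p "$mapping" redis
  docker port "$name"           # lists the published port mappings
  docker rm -f "$name"          # stop and remove the demo container
else
  echo "Docker daemon not reachable; skipping the docker commands"
fi
```

With the container running, a client on the host would connect to localhost on the host port, and Docker forwards the traffic to the container port.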

To debug a container, you can view its logs

docker logs <container-id/name>

To interact with your Docker container

docker exec -it <container-id/name> /bin/bash #gives access to interactive terminal of container

# if the command above doesn't work (not every image ships bash at that path), try these

docker exec -it <container-id/name> bash

docker exec -it <container-id/name> sh

# use exit to get out of terminal

exit

How will containers communicate?

Let’s say you have two containers, a backend and a database. How will the backend access the database? This is where Docker networks come in: we create a network, attach both containers to it, and they can then reach each other over that network

docker network create <my-network>

docker run -p 8000:8000 --net <my-network> backend

docker run -p 6379:6379 --net <my-network> database
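To make the idea concrete, here is a minimal sketch that uses the public `redis` image for both sides. The names `demo-net` and `demo-db` are placeholders invented for this example. On a user-defined network, a container can be reached by its name, so the second container pings the first one using `demo-db` as the hostname.

```shell
net=demo-net   # hypothetical network name
db=demo-db     # hypothetical container name; also used as the hostname

if docker info >/dev/null 2>&1; then
  docker network create "$net"
  docker run -d --name "$db" --network "$net" redis

  # A second container on the same network reaches the first one by name.
  # Retry a few times because Redis may need a moment to start.
  for attempt in 1 2 3 4 5; do
    docker run --rm --network "$net" redis redis-cli -h "$db" ping && break
    sleep 1
  done

  # Clean up the demo resources.
  docker rm -f "$db"
  docker network rm "$net"
else
  echo "Docker daemon not reachable; skipping the docker commands"
fi
```

Note that no `-p` flag is needed here: publishing a port is only required for access from the host, not for traffic between containers on the same network.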

How to manage all containers at once

What if we want to start all the containers with one command? For that, we can use docker-compose, which manages multiple containers together. To use it, we create a YAML file that holds the configuration of every container. It even takes care of the network, so we don’t have to set one up manually as above.

Create a docker-compose.yaml file

version: '3.8' # Defines the Compose file version
services:
  database:
    image: postgres
    container_name: database
    restart: always # Automatically restarts the container if it stops for any reason.
    environment:
      POSTGRES_USER: myuser
      POSTGRES_PASSWORD: mypassword
      POSTGRES_DB: mydb
  backend:
    image: backend_image
    container_name: backend
    restart: always
    environment:
      DB_HOST: database # Service name as hostname
      DB_USER: myuser
      DB_PASSWORD: mypassword
      DB_NAME: mydb
    depends_on:
      - database

To start and stop

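docker-compose up # Creates and starts all containers defined in docker-compose.yaml.

docker-compose stop # Stops the running containers but does not remove them.

docker-compose down # Stops and removes the containers (and the default network) defined in docker-compose.yaml.

docker-compose start # Starts the stopped containers again.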
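Indentation is significant in YAML, so it can help to validate the file before starting anything. The sketch below writes a trimmed-down version of the file above into a temporary directory and runs `docker-compose config` on it, which parses the file without starting any containers. The temporary path and the reduced set of keys are choices made for this example.

```shell
workdir=$(mktemp -d)   # throwaway directory for the demo file

cat > "$workdir/docker-compose.yaml" <<'EOF'
version: '3.8'
services:
  database:
    image: postgres
    environment:
      POSTGRES_PASSWORD: mypassword
  backend:
    image: backend_image
    environment:
      DB_HOST: database   # service name doubles as the hostname
    depends_on:
      - database
EOF

if command -v docker-compose >/dev/null 2>&1; then
  # -q only validates; it exits non-zero and reports the problem if the file is invalid.
  docker-compose -f "$workdir/docker-compose.yaml" config -q && echo "compose file is valid"
else
  echo "docker-compose not installed; skipping validation"
fi
```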

How do you build an application’s Docker image?

For that, we create a file named Dockerfile, with no extension. It is the blueprint for building your application's image.

Syntax of Dockerfile

# 1️⃣ Specify the base image (pulls the Node.js 18 image from Docker Hub)
# Comments must sit on their own line; a trailing comment would be read as part of the instruction
FROM node:18

# 2️⃣ Set working directory inside the container
WORKDIR /app

# 3️⃣ Copy the dependency manifest and install dependencies (RUN executes inside the image at build time)
COPY package.json ./
RUN npm install

# 4️⃣ Copy the rest of the application from the build context on the host into the image
COPY . .

# 5️⃣ Expose a port (for networking)
EXPOSE 3000

# 6️⃣ Set an environment variable (optional)
ENV NODE_ENV=production

# 7️⃣ Define the startup command
CMD ["node", "server.js"]

RUN vs CMD

| RUN | CMD |
| --- | --- |
| Runs at build time of the image | Runs at runtime of the container |
| Used to install packages | Sets the default command for the container |

So, now let’s build a Docker Image from Dockerfile

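docker build -t myapp:v1.0 /path/to/build-context

# -t myapp:v1.0 → Names the image myapp with the tag v1.0. The last argument is the build context, the directory that contains the Dockerfile (use . for the current directory).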

docker build --no-cache -t myapp:v2 .

# Ignores cached layers and forces a fresh build.

docker rmi <image_id>

# to delete image
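To see the RUN versus CMD distinction and the build command working together, here is a small sketch that builds a throwaway image. The image name `run-vs-cmd-demo`, the `alpine:3.19` base image, and the temporary build context are all choices made for this example. RUN writes a file once while the image is built, and CMD reads it every time a container starts.

```shell
workdir=$(mktemp -d)   # throwaway build context

cat > "$workdir/Dockerfile" <<'EOF'
FROM alpine:3.19
# Executed once, while the image is being built
RUN echo "written at build time" > /built.txt
# Executed every time a container is started from the image
CMD ["sh", "-c", "cat /built.txt; echo 'printed at container start'"]
EOF

if docker info >/dev/null 2>&1; then
  docker build -t run-vs-cmd-demo "$workdir"
  docker run --rm run-vs-cmd-demo   # --rm removes the container when it exits
  docker rmi run-vs-cmd-demo        # delete the demo image again
else
  echo "Docker daemon not reachable; skipping build and run"
fi
```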

Docker Volume

When a container is deleted, the data written inside it is lost, meaning it is not persistent. To fix this, we can use Docker volumes, which store the data outside the container so that it persists.

Types of Docker Volumes 🗄️

Docker provides different types of storage options to manage data efficiently. Below are the main types of Docker volumes and their use cases.

1️⃣ Anonymous Volumes

📌 What it is:

Automatically created when a container needs persistent storage but no specific volume name is given.

Docker assigns a random name to the volume.

docker run -d -v /app/data my_container # the last argument (my_container here) is the image to run

2️⃣ Named Volumes

📌 What it is:

A user-defined volume with a specific name that can be reused across multiple containers.

Stored in /var/lib/docker/volumes/ on the host system.

📌 Use Case:

- When you want persistent storage that can be easily referenced by multiple containers.

docker volume create my_volume

docker run -d -v my_volume:/app/data my_container

- The volume my_volume will persist even if the container is deleted.
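The persistence claim is easy to check. In this sketch, one short-lived container writes a file into a named volume, and a second, brand-new container reads it back after the first one is gone. The volume name `demo-vol`, the `alpine:3.19` image, and the file name are placeholders picked for the example.

```shell
vol=demo-vol   # hypothetical volume name

if docker info >/dev/null 2>&1; then
  docker volume create "$vol"

  # First container writes into the volume; --rm removes it as soon as it exits.
  docker run --rm -v "$vol":/app/data alpine:3.19 sh -c 'echo hello > /app/data/note.txt'

  # A new container mounting the same volume still sees the file.
  docker run --rm -v "$vol":/app/data alpine:3.19 cat /app/data/note.txt

  docker volume rm "$vol"   # clean up the demo volume
else
  echo "Docker daemon not reachable; skipping the docker commands"
fi
```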

Basic Commands for Docker Volumes

1️⃣ Create a Volume

docker volume create my_volume

2️⃣ List All Volumes

docker volume ls

3️⃣ Inspect a Volume

docker volume inspect my_volume

4️⃣ Use a Volume in a Container

docker run -d -v my_volume:/app/data my_container

This mounts my_volume inside the container at /app/data.

5️⃣ Remove a Volume

docker volume rm my_volume

6️⃣ Remove All Unused Volumes

docker volume prune

Example: Using a Volume with a Database (MySQL)

docker run -d --name mydb -v my_db_data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root mysql:latest

- This ensures that even if the mydb container is removed, the database data remains in my_db_data.

Conclusion 🏁

Docker has revolutionized the way applications are developed, tested, and deployed by providing a lightweight, scalable, and consistent environment. Unlike traditional virtual machines, Docker containers share the host OS kernel, making them more efficient and faster to start. With Docker, teams can avoid the hassle of environment mismatches, streamline deployments, and improve collaboration. Whether you're working on a small project or a large-scale microservices architecture, Docker is a powerful tool that simplifies application management. 🚀
