[Kind] Kubernetes in Docker

Aman Mulani


Introduction

I am sure that if you use Kubernetes for container orchestration, you have used either minikube or kind to run Kubernetes locally. When I first needed to test a few load-balancing scenarios, I chose minikube, as I had used it while learning Kubernetes. This time, however, I ran into installation issues on my Apple silicon laptop. So I started looking for alternatives, and that's where I came across kind.

FYI, I haven't used either minikube or kind extensively. The following are just my observations based on my usage and research.

In essence, a Kubernetes cluster consists of a control plane (master node) and a set of worker nodes. The control plane runs components such as the scheduler, the controller manager, and the API server. Each worker node runs a CRE (container runtime engine), which manages and runs containers; kube-proxy, which manages service networking; and the kubelet, the agent that ensures the node is running its assigned workload. A Container Network Interface (CNI) plugin (such as Flannel or Calico) provides the pod network that enables communication across nodes.
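To make that split concrete, here is a small Go sketch. The `Role` type and `nodeComponents` function are hypothetical and only model the paragraph above. One detail worth knowing: in kubeadm-based clusters (kind included), the control-plane components themselves run as static pods managed by the kubelet, so the control-plane node also runs the node-level components.

```go
package main

import "fmt"

// Role is the kind of node in a cluster (illustrative type).
type Role string

const (
	ControlPlane Role = "control-plane"
	Worker       Role = "worker"
)

// nodeComponents is a simplified model, not an exhaustive list. Every node
// runs the node-level components; in kubeadm-based clusters the control-plane
// components run as static pods on the control-plane node as well.
func nodeComponents(r Role) []string {
	common := []string{"kubelet", "container runtime (containerd)", "kube-proxy", "CNI plugin"}
	if r == ControlPlane {
		return append([]string{"kube-apiserver", "etcd", "kube-scheduler", "kube-controller-manager"}, common...)
	}
	return common
}

func main() {
	for _, r := range []Role{ControlPlane, Worker} {
		fmt.Printf("%s: %v\n", r, nodeComponents(r))
	}
}
```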

As you can see, quite a few components need to work in sync for Kubernetes itself to function. While most cloud providers offer managed Kubernetes setups that are easy to use, testing Kubernetes locally can be challenging.

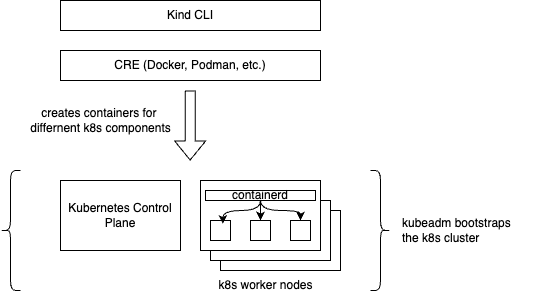

Here's where kind comes into the picture. kind lets you create a Kubernetes cluster in which each node is a separate container running inside Docker. While kind uses Docker to launch the cluster, each Kubernetes node inside the cluster runs its own container runtime (usually containerd). kind manages the nodes' networking and lifecycle to simulate a real Kubernetes cluster.

Kind

As you can find in the documentation, kind stands for Kubernetes in Docker. To put it simply, kind is a tool that lets you run Kubernetes inside containers. How ironic! Containers running a tool whose sole purpose is to orchestrate other containers! When a cluster is created via kind, the master node and worker nodes are provisioned as separate Docker containers.
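You can see this on the host: the node containers show up in `docker ps` with names derived from the cluster name and node role, for example `kind-control-plane` and `kind-worker` for the default cluster named `kind`. The Go sketch below re-implements that naming scheme for illustration (`makeNodeNamer` is my own helper, not a kind API): the first node of a role gets no suffix, and later ones get a counter starting at 2.

```go
package main

import "fmt"

// makeNodeNamer returns a function that names node containers as
// "<cluster>-<role>", appending a counter from the second node of a role on.
func makeNodeNamer(cluster string) func(role string) string {
	counts := map[string]int{}
	return func(role string) string {
		counts[role]++
		suffix := ""
		if counts[role] > 1 {
			suffix = fmt.Sprint(counts[role])
		}
		return fmt.Sprintf("%s-%s%s", cluster, role, suffix)
	}
}

func main() {
	name := makeNodeNamer("kind")
	for _, role := range []string{"control-plane", "worker", "worker"} {
		fmt.Println(name(role)) // kind-control-plane, kind-worker, kind-worker2
	}
}
```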

If you look through kind's codebase, you will find that at its core kind internally just executes docker commands (when the Docker provider is used):

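```go
package docker

import "os/exec" // stand-in for kind's own exec helper package

// createContainer starts a node container by shelling out to
// `docker run --name <name> <args...>` and waits for the docker CLI to finish.
func createContainer(name string, args []string) error {
	return exec.Command("docker", append([]string{"run", "--name", name}, args...)...).Run()
}
```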

This is what makes kind an interesting, lightweight tool: it simplifies cluster management by using Docker to create nodes, but still handles cluster configuration, networking, and lifecycle using Kubernetes tools like kubeadm. The image below is a rough illustration of what kind does; it does not show the true workings and is only for representational purposes.

Code Highlights

Since I don't want this article to be a tutorial, I am attaching references to the bits of kind's code that I found interesting.

Provider Interface (provider.go)

At the time of writing, kind supports three providers (container engines): Docker, nerdctl, and Podman. kind uses the provider pattern (or the strategy pattern, for the OO folks), a simple yet powerful design pattern that makes each provider pluggable.
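A minimal sketch of the idea in Go follows. The `Provider` interface here is deliberately tiny and hypothetical; kind's real interface has more methods (provisioning, listing clusters and nodes, deleting nodes, and so on). The point is that `createNode` depends only on the interface, so a new engine plugs in by adding one more implementation.

```go
package main

import "fmt"

// Provider is a simplified, hypothetical version of the provider idea:
// cluster logic talks to this interface, never to a specific engine.
type Provider interface {
	Name() string
	// CreateNodeArgs returns the CLI invocation that would start a node.
	CreateNodeArgs(node, image string) []string
}

type dockerProvider struct{}

func (dockerProvider) Name() string { return "docker" }

func (dockerProvider) CreateNodeArgs(node, image string) []string {
	return []string{"docker", "run", "--detach", "--name", node, image}
}

type podmanProvider struct{}

func (podmanProvider) Name() string { return "podman" }

func (podmanProvider) CreateNodeArgs(node, image string) []string {
	return []string{"podman", "run", "--detach", "--name", node, image}
}

// createNode depends only on the interface, so providers are swappable.
func createNode(p Provider, node, image string) []string {
	return p.CreateNodeArgs(node, image)
}

func main() {
	for _, p := range []Provider{dockerProvider{}, podmanProvider{}} {
		fmt.Println(p.Name(), createNode(p, "kind-control-plane", "kindest/node"))
	}
}
```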

Docker Provider (docker/provider.go)

This is the concrete implementation of the provider interface for Docker. In this file, you can see how nodes are created from a base image.

Kind CLI (cmd/kind/root.go)

The kind CLI internally figures out which provider to use (Docker, Podman, etc.) and goes on to run the commands against the concrete implementation for that provider. The CLI supports the following top-level subcommands:

```go
// add all top level subcommands: build, completion, create, delete, export, get,
// version and load
cmd.AddCommand(build.NewCommand(logger, streams))
cmd.AddCommand(completion.NewCommand(logger, streams))
cmd.AddCommand(create.NewCommand(logger, streams))
cmd.AddCommand(delete.NewCommand(logger, streams))
cmd.AddCommand(export.NewCommand(logger, streams))
cmd.AddCommand(get.NewCommand(logger, streams))
cmd.AddCommand(version.NewCommand(logger, streams))
cmd.AddCommand(load.NewCommand(logger, streams))
```

Another interesting thing to explore is how kubeadm initialisation happens, but I will leave that as homework for the readers!

Images in the kind cluster

kind cannot automatically use your locally built images, because each node runs its own containerd with a separate image store instead of sharing the image store of the Docker daemon on your host. The interesting thing, though, is that you can load a locally built image into the kind cluster with the following command:

```sh
kind load docker-image kind.local/image-name:image-tag --name kind-cluster-name
```

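Here, kind.local/ is just a naming convention for local images; `kind load docker-image` accepts any image name that exists in your host's Docker daemon, and `--name` selects the target cluster (the default cluster is named `kind`). The kind load command copies images from the host's Docker daemon into the kind cluster, making them available to the container runtime on each node.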
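Under the hood, loading boils down to exporting the image from the host and importing it into each node's containerd. The Go sketch below prints a rough manual equivalent for a list of nodes; it is an approximation for illustration (`loadImageSteps` is hypothetical), not the exact commands kind runs. The `k8s.io` containerd namespace is the one the kubelet's CRI integration reads images from.

```go
package main

import (
	"fmt"
	"strings"
)

// loadImageSteps returns a rough manual equivalent of `kind load docker-image`:
// export the image from the host Docker daemon, then import it into each
// node's containerd image store in the "k8s.io" namespace.
func loadImageSteps(image string, nodes []string) []string {
	steps := make([]string, 0, len(nodes))
	for _, node := range nodes {
		steps = append(steps, fmt.Sprintf(
			"docker save %s | docker exec -i %s ctr --namespace=k8s.io images import -",
			image, node))
	}
	return steps
}

func main() {
	nodes := []string{"kind-control-plane", "kind-worker"}
	fmt.Println(strings.Join(loadImageSteps("kind.local/my-app:v1", nodes), "\n"))
}
```

One practical gotcha: if the image tag is `latest` (or omitted), Kubernetes defaults `imagePullPolicy` to `Always` and will try to pull from a registry instead of using the loaded image, so use a specific tag or set `imagePullPolicy: IfNotPresent`. You can check what a node has with `docker exec kind-control-plane crictl images`.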

Conclusion

Overall, I feel that kind successfully caters to the following needs: fast cluster spin-up, lightweight resource usage, and easy CI/CD integration, and it can certainly be used for these use cases.

Regardless of your choice of tool, kind or minikube, understanding how Kubernetes works internally, especially concepts like kubeadm, container runtimes, and networking, is crucial for effectively managing clusters in production. This is where exploring such tools and reading their source code can help you build a better understanding. I also find kind's code clean, and it can be used to learn a couple of design patterns. So the next time you want to test Kubernetes locally, do have a look at kind, and not just at kind but at its source code as well!
