Docker Storage 101: The Role of Storage Drivers in Containers

Ritik Jain

My journey into Docker’s internals began when I explored how Docker pulls images. That curiosity led me to wonder how Docker manages storage on a local system. I found the answer in the Docker documentation on storage drivers, which offers a detailed explanation of the mechanisms behind image and container storage.

Docker Layers

Docker stores data by breaking an image into layers, each capturing a set of changes to the filesystem. This design lets multiple images share common layers: if different images use the same base layer, that layer is stored only once, which reduces overall disk usage.

Dividing an image into multiple layers also lowers the network bandwidth needed when pulling images. Instead of downloading an entire image every time, Docker only needs to fetch layers that aren’t already present on the system.
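To make the sharing concrete, here is a minimal sketch of a content-addressed layer store. The LayerStore class, its pull method and the sample layer contents are hypothetical; real Docker layer digests are computed over layer tarballs, not short strings like these. The point is only that identical content hashes to the same key, so a layer already present is neither stored twice nor downloaded again.

```python
import hashlib


def digest(data: bytes) -> str:
    """Content address of a layer blob, in the "sha256:<hex>" form used for layer IDs."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


class LayerStore:
    """Toy local layer store keyed by digest (a model, not Docker's on-disk format)."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def pull(self, image_layers: list[bytes]) -> list[str]:
        """Store an image's layers and return the digests that actually had to be fetched."""
        fetched = []
        for blob in image_layers:
            d = digest(blob)
            if d not in self.blobs:  # only "download" layers that are missing locally
                self.blobs[d] = blob
                fetched.append(d)
        return fetched


base = [b"ubuntu rootfs", b"python runtime"]     # hypothetical base image layers
app = base + [b"app source", b"build output"]     # hypothetical image built on the base

store = LayerStore()
print(len(store.pull(base)))  # nothing cached yet, so both layers are fetched
print(len(store.pull(app)))   # the two base layers are reused; only two are fetched
print(len(store.blobs))       # four blobs on disk rather than six
```

Pulling the second image fetches only its two new layers, and the store ends up holding four blobs instead of six.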

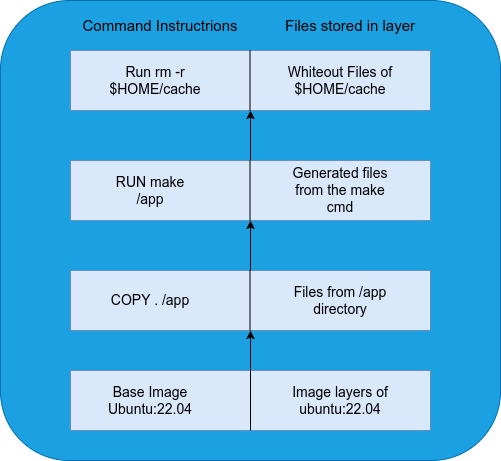

Layers are produced by the instructions in a Dockerfile. For example, consider this Dockerfile:

```dockerfile
FROM ubuntu:22.04
LABEL org.opencontainers.image.authors="org@example.com"
COPY . /app
RUN make /app
RUN rm -r $HOME/.cache
CMD python /app/app.py
```

In this file, FROM brings in the layers of the ubuntu:22.04 base image, while COPY and each RUN instruction add a new layer by applying changes to the filesystem. Instructions such as LABEL and CMD, on the other hand, only modify the image's metadata and do not create new layers.
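As a rough illustration, the following sketch classifies the instructions of the example Dockerfile. The rule it encodes (RUN, COPY and ADD add filesystem layers, FROM inherits the base image's layers, everything else is metadata) is a simplification, and the classify helper is hypothetical; it ignores line continuations, multi-stage builds and parser directives.

```python
# Simplified rule, assumed for illustration: RUN, COPY and ADD add a filesystem layer,
# FROM inherits the base image's layers, and every other instruction only edits metadata.
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}

DOCKERFILE = """\
FROM ubuntu:22.04
LABEL org.opencontainers.image.authors="org@example.com"
COPY . /app
RUN make /app
RUN rm -r $HOME/.cache
CMD python /app/app.py
"""


def classify(dockerfile: str) -> list[tuple[str, str]]:
    """Label each instruction as 'base layers', 'new layer' or 'metadata only'."""
    result = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        keyword = line.split(maxsplit=1)[0].upper()
        if keyword == "FROM":
            kind = "base layers"
        elif keyword in LAYER_INSTRUCTIONS:
            kind = "new layer"
        else:
            kind = "metadata only"
        result.append((keyword, kind))
    return result


for keyword, kind in classify(DOCKERFILE):
    print(f"{keyword:6} -> {kind}")
```

For the example Dockerfile this reports three new layers (the COPY and the two RUN instructions) on top of the ubuntu:22.04 base layers, with LABEL and CMD touching metadata only.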

Storage Drivers

Docker’s storage driver is responsible for managing how layers are stored and how they interact with each other. It uses a mechanism called Copy-on-Write (CoW) to optimise storage usage, though this comes at the cost of write performance.

Mechanism of Copy-on-Write

In Copy-on-Write (CoW), files are stored as changes across a stack of layers, which Docker arranges in the order of the Dockerfile instructions during the image build. File operations work as follows:

Reading Files:

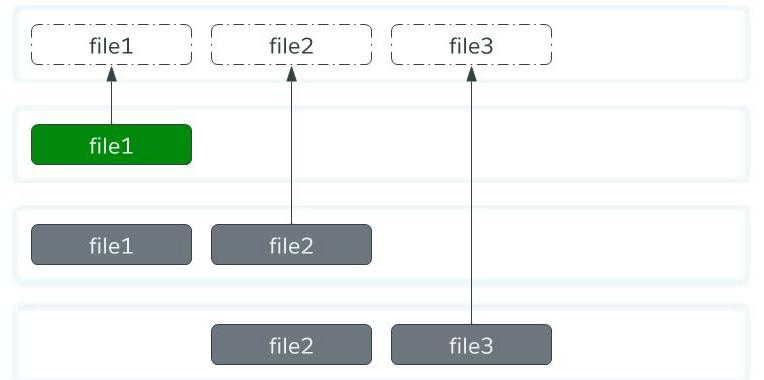

When a file is read, Docker goes through the layers from top to bottom and returns the first matching file.

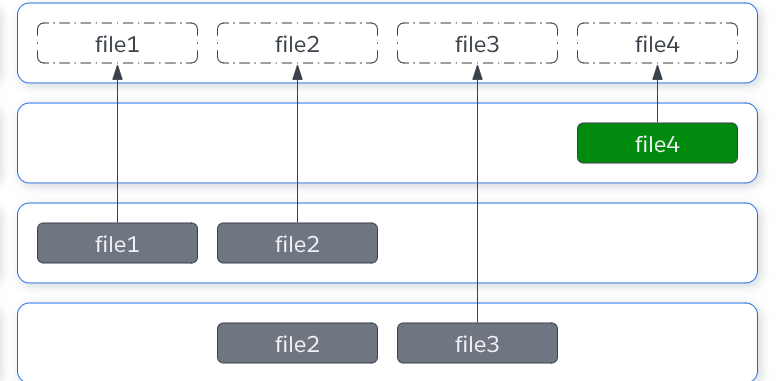
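The top-to-bottom lookup can be modelled in a few lines. In this hypothetical sketch each layer is a Python dict mapping paths to contents, and the stack is a list ordered from the topmost layer down; real drivers such as overlay2 resolve files inside the kernel's union filesystem rather than in a loop like this.

```python
# Toy model: each layer maps a path to file contents.
# The stack is ordered top (most recent) first, so iteration runs top to bottom.
Layer = dict[str, str]


def read(layers: list[Layer], path: str) -> str:
    """Return the contents from the topmost layer that contains `path`."""
    for layer in layers:
        if path in layer:
            return layer[path]  # first match wins; copies in lower layers are shadowed
    raise FileNotFoundError(path)


base = {"/etc/motd": "welcome", "/app/config": "debug=false"}
patch = {"/app/config": "debug=true"}
stack = [patch, base]  # patch sits above base

print(read(stack, "/app/config"))  # served from the upper layer
print(read(stack, "/etc/motd"))    # falls through to the base layer
```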

Adding Files:

Adding a new file creates a new layer that includes the file.

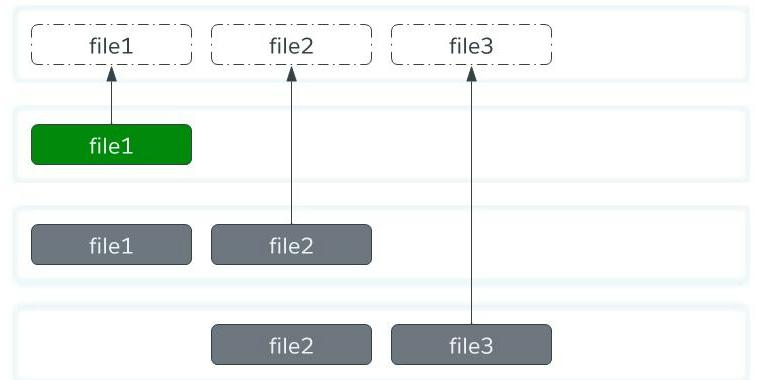

Modifying Files:

To modify a file, Docker first searches the stack of layers for it. The first copy found is copied up into a new layer, where the changes are applied, and the original in the lower layer is left untouched. Because the modified copy sits higher in the stack, future reads return it.
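A copy-on-write modification can be sketched with layers modelled as dicts listed top first. The modify helper is hypothetical, and pushing a brand-new layer per edit is a simplification: in a running container the copy lands in the single writable container layer.

```python
from collections.abc import Callable

# Toy model: each layer maps a path to file contents; stacks are ordered top layer first.
Layer = dict[str, str]


def read(layers: list[Layer], path: str) -> str:
    """Return the contents from the topmost layer that has `path`."""
    for layer in layers:
        if path in layer:
            return layer[path]
    raise FileNotFoundError(path)


def modify(layers: list[Layer], path: str, edit: Callable[[str], str]) -> list[Layer]:
    """Copy-on-write update: copy the visible file into a new top layer, then change it there."""
    current = read(layers, path)       # 1. find the first (topmost) copy
    new_layer = {path: edit(current)}  # 2. copy it up and apply the change to the copy
    return [new_layer, *layers]        # 3. lower layers are reused untouched


base = {"/app/config": "debug=false"}
stack = modify([base], "/app/config", lambda s: s.replace("false", "true"))
print(read(stack, "/app/config"))  # served from the modified copy
print(base["/app/config"])         # the original layer still holds the old contents
```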

Deleting Files:

Lower layers are read-only, so Docker cannot remove a file from them. Instead, it creates a special “whiteout” file in the upper layer that marks the file as deleted and hides the original from future reads.
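Deletion can be added to a toy layer model with a sentinel value standing in for the whiteout file. The WHITEOUT sentinel and the delete helper are illustrative assumptions; on disk, OCI image layers record whiteouts as files whose names carry a .wh. prefix. The sketch also shows why a step like RUN rm -r $HOME/.cache in a later layer does not shrink the layers beneath it: the deleted data is hidden, not removed.

```python
# Toy model: each layer maps a path to contents; stacks are ordered top layer first.
WHITEOUT = object()  # hypothetical sentinel standing in for a whiteout file


def read(layers: list[dict], path: str) -> str:
    """Return the topmost visible copy of `path`, honouring whiteouts."""
    for layer in layers:
        if path in layer:
            if layer[path] is WHITEOUT:
                raise FileNotFoundError(path)  # a whiteout hides every copy below it
            return layer[path]
    raise FileNotFoundError(path)


def delete(layers: list[dict], path: str) -> list[dict]:
    """Record a deletion as a whiteout in a new top layer; lower layers stay read-only."""
    read(layers, path)  # raises FileNotFoundError if the file is not visible
    return [{path: WHITEOUT}, *layers]


base = {"/root/.cache": "build cache", "/app/app.py": "print('hi')"}
stack = delete([base], "/root/.cache")
print(read(stack, "/app/app.py"))  # unaffected files are still visible
print("/root/.cache" in base)      # True: the data still occupies the lower layer
```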

Benefits of Copy-on-Write

Storage Optimisation

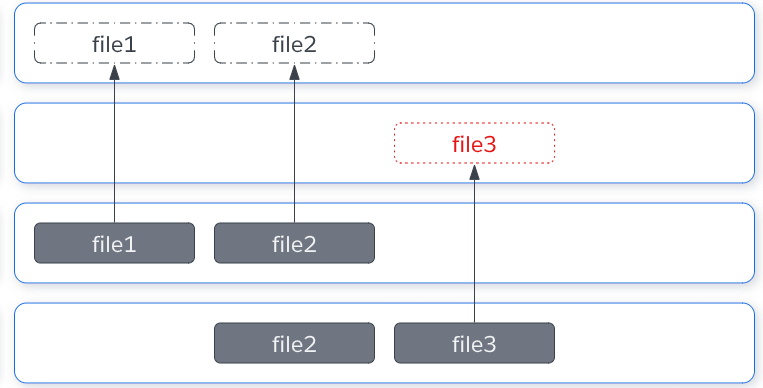

By sharing unmodified data across layers, Docker avoids duplicating data. For example, consider two images. acme/my-base-image:1.0 has these layers:

```json
[
  "sha256:72e830a4dff5f0d5225cdc0a320e85ab1ce06ea5673acfe8d83a7645cbd0e9cf",
  "sha256:07b4a9068b6af337e8b8f1f1dae3dd14185b2c0003a9a1f0a6fd2587495b204a"
]
```

acme/my-final-image:1.0, which is built on top of the base image, has these layers:

```json
[
  "sha256:72e830a4dff5f0d5225cdc0a320e85ab1ce06ea5673acfe8d83a7645cbd0e9cf",
  "sha256:07b4a9068b6af337e8b8f1f1dae3dd14185b2c0003a9a1f0a6fd2587495b204a",
  "sha256:cc644054967e516db4689b5282ee98e4bc4b11ea2255c9630309f559ab96562e",
  "sha256:e84fb818852626e89a09f5143dbc31fe7f0e0a6a24cd8d2eb68062b904337af4"
]
```

The first two layers are shared between both images, so they’re stored only once on disk. This saves both storage space and network bandwidth when pulling images.
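Using the four digests from the example, the saving can be checked with plain set arithmetic. The variable names are illustrative; the digests are the ones listed for the two acme images.

```python
base_layers = [
    "sha256:72e830a4dff5f0d5225cdc0a320e85ab1ce06ea5673acfe8d83a7645cbd0e9cf",
    "sha256:07b4a9068b6af337e8b8f1f1dae3dd14185b2c0003a9a1f0a6fd2587495b204a",
]
final_layers = base_layers + [
    "sha256:cc644054967e516db4689b5282ee98e4bc4b11ea2255c9630309f559ab96562e",
    "sha256:e84fb818852626e89a09f5143dbc31fe7f0e0a6a24cd8d2eb68062b904337af4",
]

shared = set(base_layers) & set(final_layers)  # layers both images reference
stored = set(base_layers) | set(final_layers)  # each digest is stored once on disk
naive = len(base_layers) + len(final_layers)    # layer copies if nothing were shared

print(len(shared), len(stored), naive)  # 2 4 6
```

Four layers are stored instead of six, and the two shared ones never need to be pulled a second time.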

Limitation of Copy-on-Write

- Write Overhead

The first time a container modifies a file that exists in a lower layer, Docker must copy that file up into the writable layer before applying the change. This extra copying increases write latency, and for write-intensive applications such as databases the overhead can become a significant performance bottleneck.
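The cost comes from copying the whole file, not just the changed bytes. The sketch below assumes a file-level driver such as overlay2, which copies an entire file up on the first write; block-level drivers behave differently. The ContainerLayer class is hypothetical and only counts the bytes a copy-up would move.

```python
class ContainerLayer:
    """Toy writable layer that counts bytes copied up from read-only image layers."""

    def __init__(self, image_files: dict[str, bytes]) -> None:
        self.image_files = image_files         # read-only lower layers (flattened)
        self.upper: dict[str, bytearray] = {}  # the writable container layer
        self.bytes_copied = 0

    def write(self, path: str, offset: int, data: bytes) -> None:
        if path not in self.upper:
            # First write: the WHOLE file is copied up, even if only a few bytes change.
            original = self.image_files[path]
            self.upper[path] = bytearray(original)
            self.bytes_copied += len(original)
        # Later writes go straight to the private copy.
        self.upper[path][offset:offset + len(data)] = data


db = ContainerLayer({"/var/lib/db/data.bin": bytes(10 * 1024 * 1024)})  # 10 MiB file
db.write("/var/lib/db/data.bin", 0, b"x")  # first write triggers the copy-up
db.write("/var/lib/db/data.bin", 1, b"y")  # no further copying
print(db.bytes_copied)
```

A one-byte change to a 10 MiB file costs a 10 MiB copy, while the second write costs nothing extra; for a workload that touches many large files, those first writes add up.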

Storage Location

All these layers—both from images and containers—are stored in a central directory on the host, typically found at /var/lib/docker/<storage-driver>. This is where Docker manages and organizes the data used by the storage driver.

Containers and Layers

When a container is launched, Docker adds a thin, writable layer on top of the immutable image layers. This layer, known as the container layer, holds only the changes made by the running application, making it very lightweight.
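A minimal sketch of that arrangement, assuming each layer is modelled as a dict of paths to contents and using a hypothetical Container class: two containers hold a reference to the same list of image layers, but each writes only into its own container layer.

```python
class Container:
    """Toy container: shared read-only image layers plus a private writable layer."""

    def __init__(self, image_layers: list[dict[str, str]]) -> None:
        self.image_layers = image_layers           # shared by reference, never mutated
        self.container_layer: dict[str, str] = {}  # private to this container

    def read(self, path: str) -> str:
        # The container layer sits on top, so its entries shadow the image layers.
        for layer in [self.container_layer, *self.image_layers]:
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path: str, contents: str) -> None:
        self.container_layer[path] = contents  # changes land only in this container


image = [{"/app/config": "mode=default"}]
a, b = Container(image), Container(image)
a.write("/app/config", "mode=a")
print(a.read("/app/config"))  # a sees its own change
print(b.read("/app/config"))  # b still sees the image's original file
print(a.image_layers is b.image_layers)  # True: the image data exists once
```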

Benefits

Prevents Race Conditions

Since each container gets its own writable layer while sharing the same underlying read-only image layers, multiple containers can run simultaneously without interfering with each other.

Space Optimisation

Common image layers are reused across containers, which means the same data isn’t duplicated multiple times. This efficiency helps reduce overall disk usage.

Limitation

Write-intensive applications, such as databases, can suffer a performance penalty because modifying a file that lives in an image layer first requires copying it into the container layer (the Copy-on-Write mechanism). For these applications, using volumes is recommended: writes to a volume bypass the storage driver and avoid this extra overhead.

Conclusion

Breaking data into layers is a smart way for Docker to reduce disk usage through reusability: by storing changes as layers, the same data can be shared across multiple images, saving valuable storage space. The Copy-on-Write mechanism keeps these layers in a stack where the topmost layer holds the latest changes, so an edited file is always read from that updated layer without altering the underlying data.

Additionally, the clear separation between immutable read-only image layers and the writable container layer not only optimises storage but also prevents race conditions. This architecture allows multiple containers to run concurrently from the same image without interfering with each other.

Reflecting on these insights, I’m inspired to apply similar principles in future projects. For example, in my next endeavour developing parallel shared memory systems, I plan to separate read and write data to minimise lock contention—a simple yet effective way to prevent race conditions. Learning about Docker’s storage drivers has deepened my understanding of container optimisation and offers valuable lessons that can be applied both within and beyond Docker environments.
