Terraform and Ansible: Towards a Collaborative and Automated Infrastructure

Ankoay Feno

Table of contents

- Do you already know or master Terraform and Ansible?

- Why use S3 for the Terraform state file?

- Why use DynamoDB for the Terraform lock ID?

- Goals of This Exercise

- Prerequisites

- Best Practice Setup

- File Structure and Architecture

- Getting Started

- Migrate the Terraform State to S3 and DynamoDB

- Congratulations! Your Infrastructure is Set Up

- Destruction of Infrastructure

Do you already know or master Terraform and Ansible?

Mastering Terraform and Ansible is a great start, but when it comes to collaborating with a team or integrating them into a CI/CD pipeline, it’s a whole new challenge.

In this hands-on exercise, we’ll use AWS as the cloud provider. We’ll store the Terraform state in S3 and use a DynamoDB table for state locking, which prevents conflicts when several people run Terraform at the same time.

Why use S3 for the Terraform state file?

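Terraform lets you describe your infrastructure as code, and it records everything it has created in a state file. Now imagine that file is lost or corrupted: Terraform no longer knows which resources it manages. You’d want to have a backup.

You might think of using Git, but committing the Terraform state file to Git isn’t recommended: the state can contain secrets in plain text, and Git offers no locking to stop two people from applying at once.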

As HashiCorp says in their documentation:

"By default, this state is stored in a local file named 'terraform.tfstate'. We recommend storing it in a secure and versioned location like HCP Terraform to encrypt and safely share it with your team."

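In this exercise, we chose S3 to store the state file because it supports object versioning, server-side encryption, and access control through IAM, and it scales without any maintenance on our side.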

Why use DynamoDB for the Terraform lock ID?

When working in a team, you’ll often encounter situations where multiple people try to run terraform apply simultaneously. Without a mechanism to prevent this, two runs could write to the state file at the same time and overwrite each other’s changes, leaving the state inconsistent with the real infrastructure.

Thanks to DynamoDB, Terraform can record a lock (identified by a LockID) so that only one operation modifies the state at a time. A second run started while the lock is held fails with a lock error instead of silently overwriting the state, and can simply be retried once the first run has finished. While there are other ways to manage this, DynamoDB is a popular and simple solution.
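When a run is blocked by a teammate's lock, Terraform's -lock-timeout option makes it keep retrying for a while instead of failing at once. The sketch below wraps that option; the function name apply_with_lock_wait and the 60s default are illustrative choices, not part of the article's repository:

```shell
# Hypothetical helper (not from the article's repository).
# Retries acquiring the state lock for up to the given duration
# (default 60s) before giving up. -lock-timeout is a standard option
# of `terraform plan` and `terraform apply`.
apply_with_lock_wait() {
  terraform apply -lock-timeout="${1:-60s}"
}
```

For example, apply_with_lock_wait 2m waits up to two minutes for the lock. If a crashed run leaves a stale lock behind, terraform force-unlock followed by the lock ID printed in the error message releases it.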

Goals of This Exercise

In this tutorial, we aim to:

- Create an S3 bucket and a DynamoDB table for storing the state and managing the lock.

- Create an EC2 instance for demonstration purposes.

- Use Ansible to configure the EC2 instance (install server tools, Docker, and run an NGINX container for the demo).

Prerequisites

Before starting, ensure you have:

- An AWS account.

- AWS CLI installed.

- Terraform installed.

- Ansible installed.
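A quick way to confirm the three command-line tools are on your PATH is sketched below. The helper name missing_tools is an illustrative choice, not something from the article's repository:

```shell
# Hypothetical helper: print the name of every tool from the argument
# list that cannot be found on PATH. Prints nothing when all are present.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

Running missing_tools aws terraform ansible should print nothing if everything is installed; any name it prints still needs to be installed.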

Best Practice Setup

For better security and manageability, I recommend creating a dedicated IAM user (e.g., user_terraform) and adding it to a group with the following AWS managed policies attached:

- IAMFullAccess

- AmazonS3FullAccess (allows Terraform to create an S3 bucket)

- AmazonEC2FullAccess (allows Terraform to create EC2 instances)

- AmazonDynamoDBFullAccess (allows Terraform to manage DynamoDB tables)
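The same setup can be scripted with the AWS CLI instead of the console. This is a sketch under assumptions: it must be run with credentials that are allowed to manage IAM, and the group name terraform-demo is made up for illustration (the article only names the user):

```shell
# Assumption: the group name is hypothetical; only user_terraform
# comes from the article.
GROUP_NAME="terraform-demo"
USER_NAME="user_terraform"

# Build the ARN of an AWS managed policy from its name.
policy_arn() {
  printf 'arn:aws:iam::aws:policy/%s\n' "$1"
}

# Create the group, attach the four managed policies, create the user,
# add it to the group, and issue an access key for `aws configure`.
create_terraform_user() {
  aws iam create-group --group-name "$GROUP_NAME"
  for policy in IAMFullAccess AmazonS3FullAccess AmazonEC2FullAccess AmazonDynamoDBFullAccess; do
    aws iam attach-group-policy --group-name "$GROUP_NAME" --policy-arn "$(policy_arn "$policy")"
  done
  aws iam create-user --user-name "$USER_NAME"
  aws iam add-user-to-group --user-name "$USER_NAME" --group-name "$GROUP_NAME"
  aws iam create-access-key --user-name "$USER_NAME"
}
```

Calling create_terraform_user ends by printing the new access key ID and secret access key; the secret is shown only once, so store it before moving on to the configuration step.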

File Structure and Architecture

Here’s how I’ve structured the project:

ankoayfeno@cloudshell:~/terraform-ansible-as-team$ tree
.
├── backend.tf
├── Environments
│   └── Hand-on
│       ├── ansible
│       │   ├── ansible_playbook.yaml
│       │   └── hosts.ini
│       ├── main.tf
│       └── outputs.tf
├── Key
│   ├── key
│   └── key.pub
├── LICENSE
├── main.tf
├── Modules
│   ├── dynamo
│   │   └── main.tf
│   ├── ec2
│   │   ├── main.tf
│   │   ├── outputs.tf
│   │   └── variables.tf
│   ├── s3
│   │   └── main.tf
│   └── vpc
│       └── main.tf
├── outputs.tf
├── providers.tf

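The layout separates concerns: reusable modules (dynamo, ec2, s3, vpc) live under Modules, the environment-specific configuration and the Ansible files live under Environments/Hand-on, and the root-level files (main.tf, backend.tf, providers.tf, outputs.tf) hold the top-level configuration. The structure is readable enough that it hardly needs a detailed README.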

Getting Started

Step 1: Configure AWS CLI

Before we proceed, configure the AWS CLI with the access key ID and secret access key of user_terraform.

aws configure
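If other AWS credentials already exist on your machine, a named profile keeps the user_terraform keys separate. The sketch below assumes a profile called user_terraform, created beforehand with aws configure --profile user_terraform; the function name is illustrative:

```shell
# Hypothetical helper: select the profile for this shell session and
# print the ARN of the identity behind it, to confirm that the right
# user is active. Assumes the profile already exists.
use_terraform_profile() {
  export AWS_PROFILE="${1:-user_terraform}"
  aws sts get-caller-identity --query Arn --output text
}
```

After use_terraform_profile, both the AWS CLI and Terraform's AWS provider pick up the profile from the AWS_PROFILE environment variable.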

Step 2: Clone the Repository

Clone the template repository to simplify the process:

git clone https://github.com/Ankoay-Feno/terraform-ansible-as-team

cd terraform-ansible-as-team

Step 3: Create an SSH Key Pair

Create the Key directory if it does not exist yet and generate an SSH key pair in it:

mkdir -p Key

ssh-keygen -t rsa -b 4096 -C "user_email@example.com" -f Key/key

Step 4: Initialize Terraform and Deploy

Now, initialize Terraform and deploy the infrastructure:

terraform init

terraform plan # Optional: Shows the infrastructure overview

terraform apply --auto-approve

After this, you’ll have an S3 bucket, a DynamoDB table, an EC2 instance, and VPC rules set up. But we still need to move the state file to S3 for team access.
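The article does not show how the Ansible playbook is triggered. If the Terraform configuration in the repository does not run it for you, it can be run by hand. The sketch below uses the paths from the project tree and assumes that hosts.ini already contains the instance's address and remote user; the function name and the ANSIBLE_PLAYBOOK override are illustrative:

```shell
# Hypothetical helper: run the playbook from the project tree against
# the inventory next to it, authenticating with the key from Step 3.
# Set ANSIBLE_PLAYBOOK=echo to print the arguments instead of running.
run_playbook() {
  env_dir="${1:-Environments/Hand-on}"
  "${ANSIBLE_PLAYBOOK:-ansible-playbook}" \
    -i "$env_dir/ansible/hosts.ini" \
    --private-key Key/key \
    "$env_dir/ansible/ansible_playbook.yaml"
}
```

Call run_playbook from the repository root, since the paths are relative to it.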

Migrate the Terraform State to S3 and DynamoDB

Uncomment the contents of backend.tf to configure the S3 backend:

terraform {
  backend "s3" {
    bucket         = "ankoay-s3"
    key            = "state/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-locks"
    encrypt        = true
  }
}

Reinitialize Terraform to migrate the state to the S3 bucket:

terraform init --migrate-state

terraform plan # Optional

terraform apply --auto-approve # Optional: Test the remote state
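To confirm the migration, you can check that the state object now exists in the bucket. A minimal sketch, using the bucket and key from backend.tf as defaults; the function name is illustrative:

```shell
# Hypothetical helper: ask S3 for the metadata of the state object.
# The command fails if the object does not exist.
remote_state_exists() {
  aws s3api head-object \
    --bucket "${1:-ankoay-s3}" \
    --key "${2:-state/terraform.tfstate}"
}
```

If remote_state_exists prints the object's metadata, the state is in S3. Running terraform state list afterwards reads the resource list through the remote backend.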

Congratulations! Your Infrastructure is Set Up

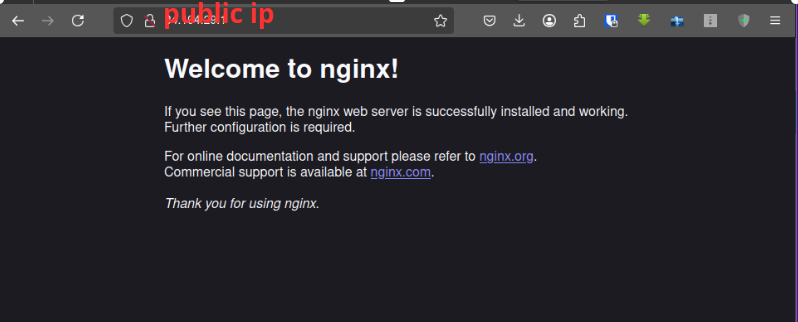

Well done! You've successfully set up your infrastructure with Terraform and Ansible. You now have a fully functional EC2 instance, S3 bucket, and DynamoDB table. The EC2 instance is running an NGINX container, which will display a simple webpage, confirming everything is working.
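The web page can also be checked from the command line. The sketch below takes the instance's public IP address as its argument; terraform output should list that address if the repository's outputs.tf exposes it. The function name is illustrative:

```shell
# Hypothetical helper: succeeds only if the host answers over HTTP
# with a non-error status within 10 seconds. The page body is discarded.
site_is_up() {
  curl --fail --silent --show-error --max-time 10 --output /dev/null "http://$1/"
}
```

For example, site_is_up 203.0.113.10 && echo "NGINX is answering", with the documentation address replaced by your instance's IP.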

Destruction of Infrastructure

Now, let's clean up everything we built.

Step 1: Migrate the State Back to Local

To do this, comment out the backend.tf configuration:

# terraform {
#   backend "s3" {
#     bucket         = "ankoay-s3"
#     key            = "state/terraform.tfstate"
#     region         = "us-east-1"
#     dynamodb_table = "terraform-locks"
#     encrypt        = true
#   }
# }

Reinitialize Terraform to copy the state back to a local file. The -force-copy flag answers "yes" to the migration prompt:

terraform init -migrate-state -force-copy

Step 2: Allow Terraform to Destroy the S3 Bucket and DynamoDB Table

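Edit the S3 and DynamoDB modules so that Terraform is allowed to destroy the resources: set force_destroy = true on the bucket (a bucket that still contains objects cannot be deleted otherwise) and comment out the prevent_destroy lifecycle blocks.

S3 Module (Modules/s3/main.tf):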

resource "aws_s3_bucket" "terraform_state" {
  bucket        = "ankoay-s3"
  force_destroy = true

  # lifecycle {
  #   prevent_destroy = true
  # }

  tags = {
    Name        = "Terraform State Bucket"
    Environment = "Terraform Backend"
  }
}

resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

DynamoDB Module (Modules/dynamo/main.tf):

resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }

  # lifecycle {
  #   prevent_destroy = true
  # }
}

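Step 3: Apply the Changes

Apply first so that the new force_destroy setting is recorded in the state before the destroy runs: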

terraform apply --auto-approve

Step 4: Destroy the Infrastructure

Once everything is ready, destroy the demo environment:

terraform destroy --auto-approve

Conclusion

Congratulations! You’ve successfully automated the creation and management of your infrastructure with Terraform and Ansible, storing the state in S3 and using DynamoDB for locking. This approach ensures better collaboration and avoids conflicts when multiple people work on the same infrastructure.
