Word Embeddings Part - 1

Phanee Chowdary


Tokenization and Indexing

You might have seen machine learning models that take some text and predict whether it is spam or not. Similarly, a model can analyze a movie review and determine its sentiment (positive or negative).

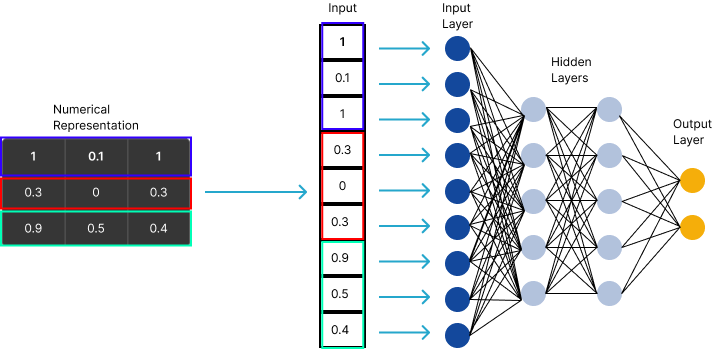

The first rule of training any machine learning model is to convert input into numbers.

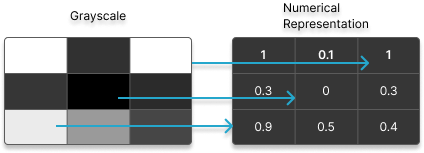

Let us consider an example of a grayscale image, as shown below. The brightest pixel has a value of 1 and the darkest one has a value of 0.

By converting the input into numerical form based on its pixel values, we can use it to train our model.
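Here is a minimal sketch of this idea. It assumes the image is stored as 8-bit intensities from 0 to 255, which is a common format but not a universal one. Dividing by 255 maps the darkest pixel to 0 and the brightest to 1. Flattening the grid then gives a single numeric feature vector. The tiny `image` below is made up for illustration.

```python
# Hypothetical 3x3 grayscale image with 8-bit intensities (0 = black, 255 = white)
image = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 128],
]

# Scale each pixel to the [0, 1] range: darkest -> 0.0, brightest -> 1.0
normalized = [[pixel / 255 for pixel in row] for row in image]

# Flatten the 2D grid into one feature vector a model can consume
features = [value for row in normalized for value in row]

print(len(features))  # 9
print(features[2])    # 1.0
```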

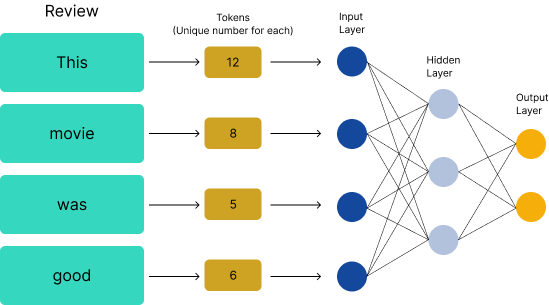

But in this post we are dealing with text input, so let's see how text is prepared for machine learning models. Let's consider the sentence "This movie was good".

To convert this review sentence into numbers, we first assign a unique token value to each unique word. This is called tokenization. We now have numerical values, and since our machine learning model expects numbers, we could train it using the token values as its input. However, this is only suitable for ordinal features, where the numbers imply a ranking and the order carries meaning (for example low = 1, medium = 2, high = 3).
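Tokenization can be sketched in a few lines of Python. This is a minimal illustration that makes two assumptions: the text is split on whitespace, and IDs are handed out in order of first appearance, starting from 0. Real tokenizers also deal with punctuation, casing and unknown words, and the exact numbers assigned are arbitrary.

```python
sentence = "This movie was good"

# Split on whitespace to get word tokens (assumption: no punctuation handling)
words = sentence.split()

# Give each unique word the next unused integer, in order of first appearance
vocab = {}
for word in words:
    if word not in vocab:
        vocab[word] = len(vocab)

# Replace every word with its token value
token_ids = [vocab[word] for word in words]

print(vocab)      # {'This': 0, 'movie': 1, 'was': 2, 'good': 3}
print(token_ids)  # [0, 1, 2, 3]
```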

For example

Let's assume the words good, bad and great are assigned the token values 6, 22 and 21. Here bad and great are numerically close, which might lead the model to assume that great and bad are similar, whereas they are opposites. In reality, good and great have similar meanings, but because their token values are far apart, the model may misinterpret them, which can lead to incorrect predictions. This makes simple token numbers unsuitable as direct inputs for machine learning models.
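The problem is easy to see numerically. The sketch below uses the hypothetical token values from the example. A model that treats token IDs as ordinary numbers sees bad and great as neighbours, while good and great look far apart.

```python
# Hypothetical token values taken from the example above
token = {"good": 6, "bad": 22, "great": 21}

def distance(a, b):
    # Absolute difference between two words' token values
    return abs(token[a] - token[b])

print(distance("bad", "great"))   # 1  -> opposite meanings, yet numerically close
print(distance("good", "great"))  # 15 -> similar meanings, yet numerically far apart
```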

To address this issue, we can use a technique called One-Hot Encoding.

One-Hot Encoding

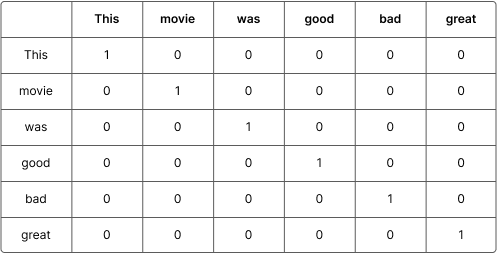

In this technique, each word is represented as a unique vector whose size equals the total vocabulary size. Each vector has a 1 in exactly one position, indicating the word's presence, and 0 in all other positions. This eliminates the ranking issue that token numbers have and ensures that all words are treated equally, without any implicit numerical relationships.

For example

Sentence 1: "This movie was good"

Sentence 2: "This movie was bad"

Sentence 3: "This movie was great"

The vocabulary from these three sentences is: ["This", "movie", "was", "good", "bad", "great"]

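One-hot encoding table:

| Word  | One-hot vector     |
|-------|--------------------|
| This  | [1, 0, 0, 0, 0, 0] |
| movie | [0, 1, 0, 0, 0, 0] |
| was   | [0, 0, 1, 0, 0, 0] |
| good  | [0, 0, 0, 1, 0, 0] |
| bad   | [0, 0, 0, 0, 1, 0] |
| great | [0, 0, 0, 0, 0, 1] |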

Now, using these one-hot encoded vectors, we can convert our training data into a numerical format suitable for model training:

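Sentence 1: [[1, 0, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0], [0, 0, 1, 0, 0, 0], [0, 0, 0, 1, 0, 0]]

Sentence 2: [[1, 0, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0], [0, 0, 1, 0, 0, 0], [0, 0, 0, 0, 1, 0]]

Sentence 3: [[1, 0, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0], [0, 0, 1, 0, 0, 0], [0, 0, 0, 0, 0, 1]]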
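The encoding above can be reproduced with a short sketch. The helper names `one_hot` and `encode` are illustrative only. Splitting on whitespace is an assumption that happens to work for these three sentences.

```python
vocabulary = ["This", "movie", "was", "good", "bad", "great"]

# Map each word to its position in the vocabulary
index = {word: i for i, word in enumerate(vocabulary)}

def one_hot(word):
    # All zeros except a single 1 at the word's vocabulary position
    vector = [0] * len(vocabulary)
    vector[index[word]] = 1
    return vector

def encode(sentence):
    # One one-hot vector per word, in sentence order
    return [one_hot(word) for word in sentence.split()]

print(encode("This movie was good"))
# [[1, 0, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0], [0, 0, 1, 0, 0, 0], [0, 0, 0, 1, 0, 0]]
```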

One-hot encoding helps eliminate unintended numerical relationships and is simple to implement. However, it has major drawbacks:

- It is sparse: with a vocabulary of 10,000 words, each word is represented by a 10,000-dimensional vector containing a single 1, which is memory-intensive and computationally expensive.
- It cannot capture semantic and contextual meaning, because one-hot encoding treats words as completely independent entities.
- It does not capture relationships between words, such as how good and great are similar in meaning.
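The missing relationships can be checked directly. In this sketch (helper names are illustrative), the dot product of two different one-hot vectors is always 0. As a result, good is exactly as far from great as it is from bad. The last line shows the sparsity: only 1 of 10,000 entries is non-zero.

```python
vocabulary = ["This", "movie", "was", "good", "bad", "great"]

def one_hot(word):
    # All zeros except a single 1 at the word's vocabulary position
    vector = [0] * len(vocabulary)
    vector[vocabulary.index(word)] = 1
    return vector

def dot(u, v):
    # Sum of element-wise products; non-zero only where both vectors have a 1
    return sum(a * b for a, b in zip(u, v))

print(dot(one_hot("good"), one_hot("great")))  # 0
print(dot(one_hot("good"), one_hot("bad")))    # 0

# Fraction of non-zero entries in a one-hot vector for a 10,000-word vocabulary
vocab_size = 10_000
print(1 / vocab_size)  # 0.0001
```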

These limitations make one-hot encoding inefficient for large-scale natural language processing tasks.

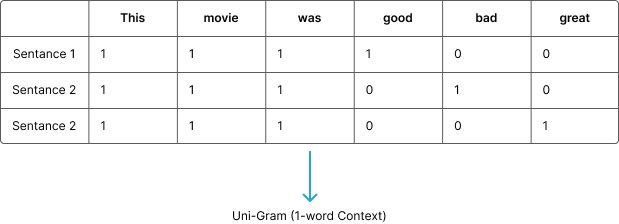

Another approach is to use Bag of Words.

Bag of Words

In this method, we simply count the number of times each word appears in a sentence and place each count at that word's position in the vocabulary. Since we consider only single-word counts, this technique is also called Uni-Gram (1-word context): each word is treated independently when creating the feature vector.
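Here is a minimal uni-gram bag-of-words sketch that reuses the six-word vocabulary from the one-hot example. Unlike one-hot encoding, the whole sentence becomes a single fixed-length vector of counts. The second input is a made-up sentence, included only to show a count above 1.

```python
from collections import Counter

vocabulary = ["This", "movie", "was", "good", "bad", "great"]

def bag_of_words(sentence):
    # Count how often each word occurs in the sentence
    counts = Counter(sentence.split())
    # Place each count at its word's vocabulary position (Counter gives 0 if absent)
    return [counts[word] for word in vocabulary]

print(bag_of_words("This movie was good"))       # [1, 1, 1, 1, 0, 0]
print(bag_of_words("This movie was good good"))  # [1, 1, 1, 2, 0, 0]
```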

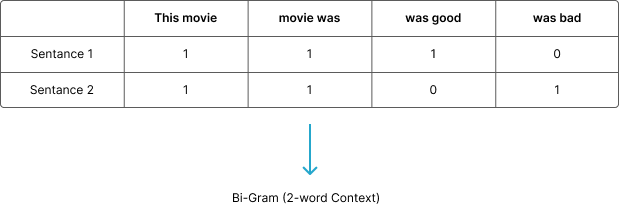

Instead of uni-grams, we can consider a two-word context, called Bi-Gram, and count the occurrences of each consecutive 2-word sequence to create a feature vector.

For simplicity, let us consider only 2 sentences in this case.
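Here is a bi-gram version of the same idea. For illustration, it assumes the two sentences are Sentence 1 and Sentence 2 from the one-hot example. Only bi-grams that actually occur in these sentences become features.

```python
from collections import Counter

sentences = ["This movie was good", "This movie was bad"]

def bigrams(sentence):
    # Consecutive word pairs: (w0, w1), (w1, w2), ...
    words = sentence.split()
    return list(zip(words, words[1:]))

# Bi-gram vocabulary: every distinct pair seen in the sentences, in first-seen order
bigram_vocab = []
for s in sentences:
    for pair in bigrams(s):
        if pair not in bigram_vocab:
            bigram_vocab.append(pair)

def bigram_vector(sentence):
    # Count each bi-gram and place the count at its vocabulary position
    counts = Counter(bigrams(sentence))
    return [counts[pair] for pair in bigram_vocab]

print(bigram_vocab)
# [('This', 'movie'), ('movie', 'was'), ('was', 'good'), ('was', 'bad')]
print(bigram_vector("This movie was good"))  # [1, 1, 1, 0]
print(bigram_vector("This movie was bad"))   # [1, 1, 0, 1]
```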

This adds more context than one-hot encoding or the uni-gram bag-of-words approach. Similarly, we can extend this to an N-word context, called N-Gram features, where the model captures context information from up to N consecutive words.
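Extracting N-grams for any N amounts to sliding a window of N words across the sentence. In this minimal sketch, `ngrams` is an illustrative helper name. N = 1 gives the uni-gram tokens, N = 2 the bi-grams, and N = 3 the tri-grams.

```python
def ngrams(sentence, n):
    # Slide a window of n words across the sentence, one word at a time
    words = sentence.split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

print(ngrams("This movie was good", 1))
# [('This',), ('movie',), ('was',), ('good',)]
print(ngrams("This movie was good", 3))
# [('This', 'movie', 'was'), ('movie', 'was', 'good')]
```

A sentence shorter than N words simply yields no N-grams.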

However, there are some challenges when using N-Grams as features:

- If the dataset is large, there will be many possible N-Gram combinations, leading to a very large and sparse feature vector.
- The model can only consider a limited number of words at a time. For example, with a Tri-Gram (3-word context) the model captures context only from a window of 3 consecutive words.
- N-Grams do not capture deeper meanings or relationships between words, which makes them less suitable for advanced NLP tasks like Language Translation and Text Generation that rely heavily on understanding word meaning and context.
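To get a feel for the first challenge, here is a rough upper bound. It assumes a vocabulary of V words and reuses the 10,000-word figure from the one-hot section. Each of the N positions can hold any of the V words, so there are up to V^N distinct N-grams:

```latex
\text{possible N-grams} = V^{N}, \qquad
V = 10{,}000:\quad V^{1} = 10^{4},\quad V^{2} = 10^{8},\quad V^{3} = 10^{12}
```

Only a fraction of these appear in any real corpus. Even so, the observed ones vastly outnumber the words in a single sentence, so most entries of each feature vector are 0.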

Semantic and Contextual Understanding of Text


Written by

Phanee Chowdary


Deep Learning Researcher