DevSecOps Pipeline Project: Deploy Netflix Clone on Kubernetes

Amit singh deora


Deploy a Netflix-like application on AWS using DevSecOps practices. The project involves Continuous Integration (CI) and Continuous Deployment (CD) with Jenkins, monitoring with Prometheus and Grafana, and security checks with SonarQube, Trivy, and OWASP Dependency-Check. We will also use Kubernetes and GitOps principles, managing deployments with ArgoCD.

Prerequisites

AWS Account – You need an active AWS account to create EC2 instances and other resources.

Basic knowledge of AWS – Familiarity with EC2, IAM roles, Security Groups, and Elastic IP.

Tools Setup – You should have tools like Git, Docker, AWS CLI, and VS Code (or any code editor) installed.

Phase 1: Initial Setup and Deployment

Step 1: Setting Up EC2 Instance for Jenkins and Security Tools

Log in to AWS Console:

- Go to the AWS Console, and log in to your account.

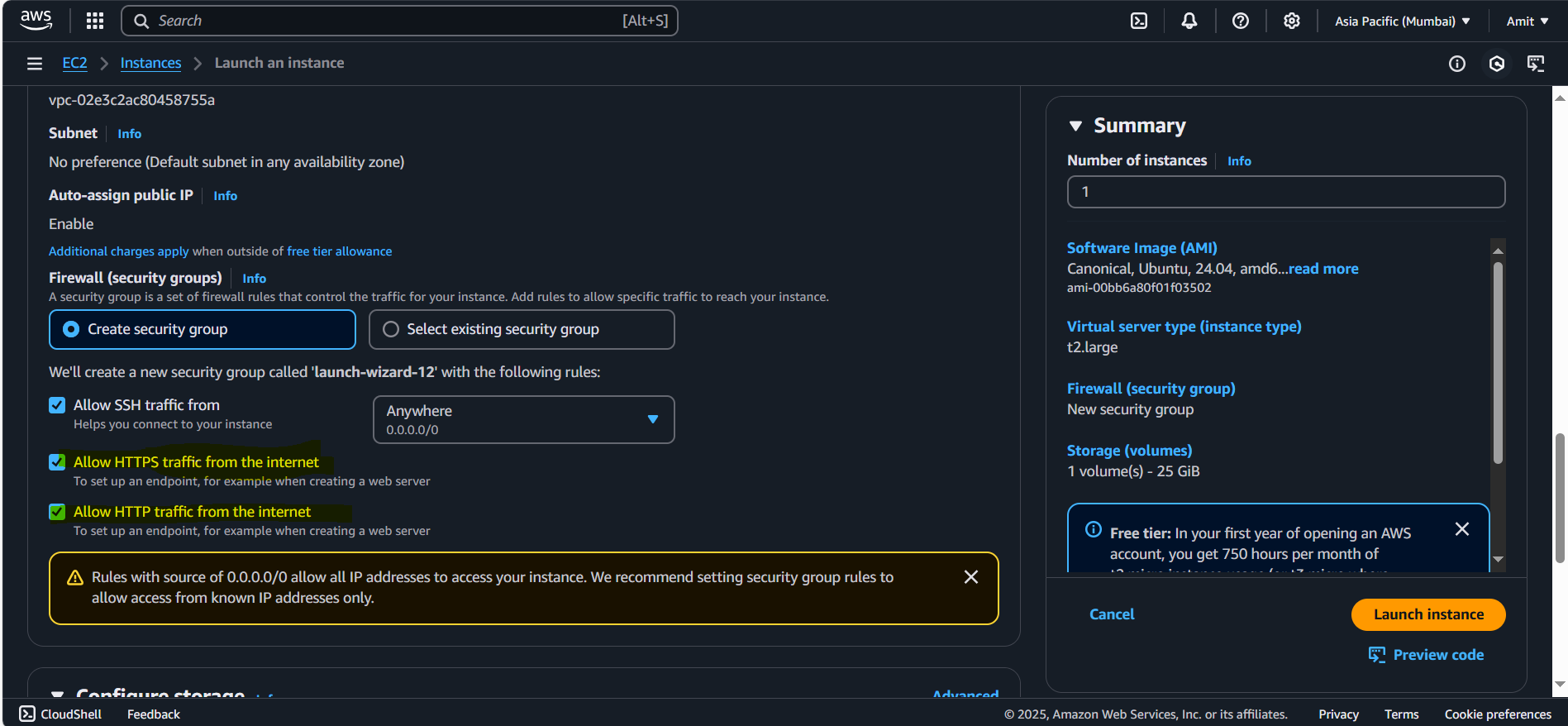

Create EC2 Instance:

Go to EC2 > Instances > Launch Instance.

Choose Ubuntu 22.04 as the AMI (Amazon Machine Image).

Choose the instance type t2.large, since Jenkins, SonarQube, Trivy, and the other tools will all run on this instance and need the resources.

Configure Instance: You can leave most options as default. Choose Default VPC and Default Subnet.

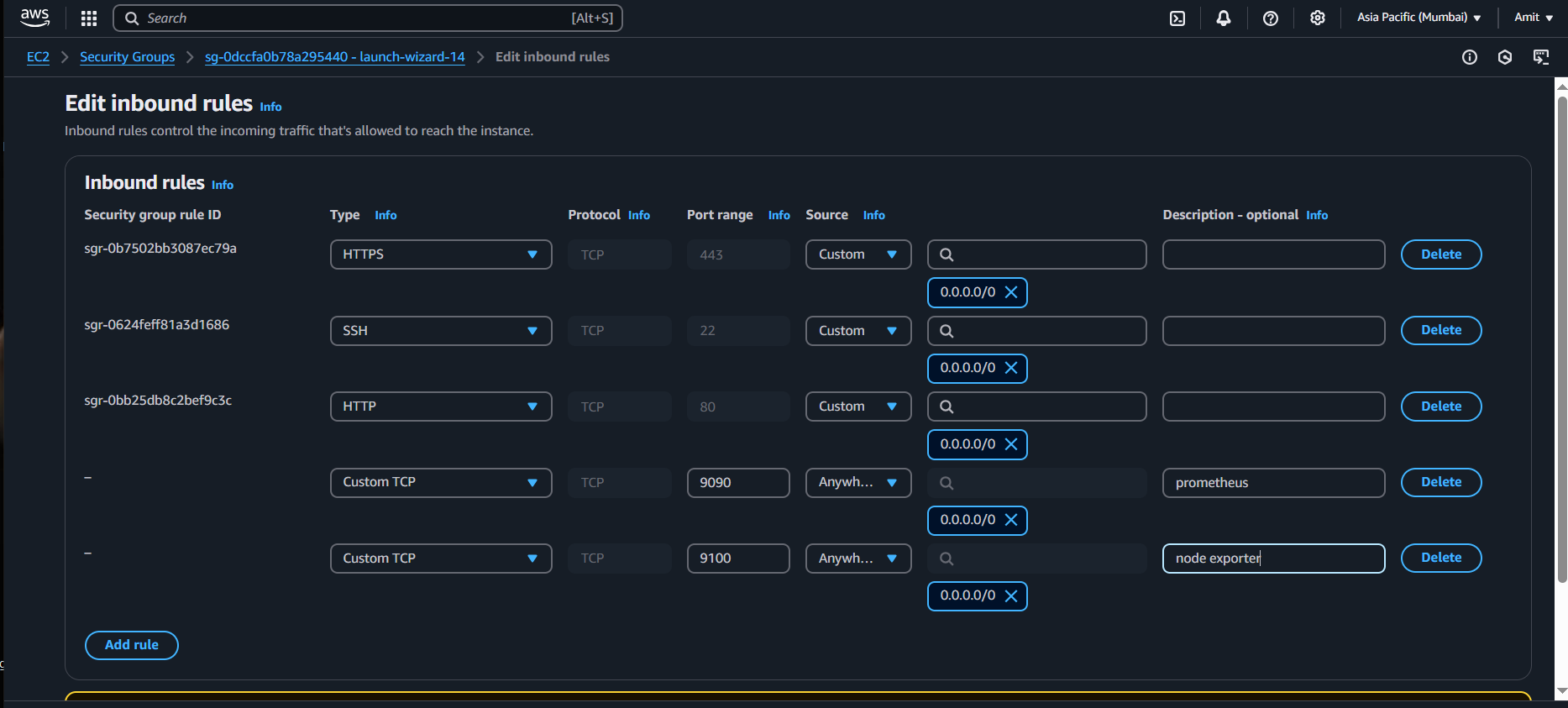

Security Group: Create a new security group. Allow the following ports:

SSH (Port 22) for connecting to the instance.

HTTP (Port 80) for web access.

HTTPS (Port 443) for secure access.

Add custom ports later (8080 for Jenkins, 9000 for SonarQube, and 8081 for the app).

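We allow HTTPS with the later Jenkins to Docker Hub connection in mind. Note that a new security group allows all outbound traffic by default, so pushing images to Docker Hub does not depend on this inbound rule.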

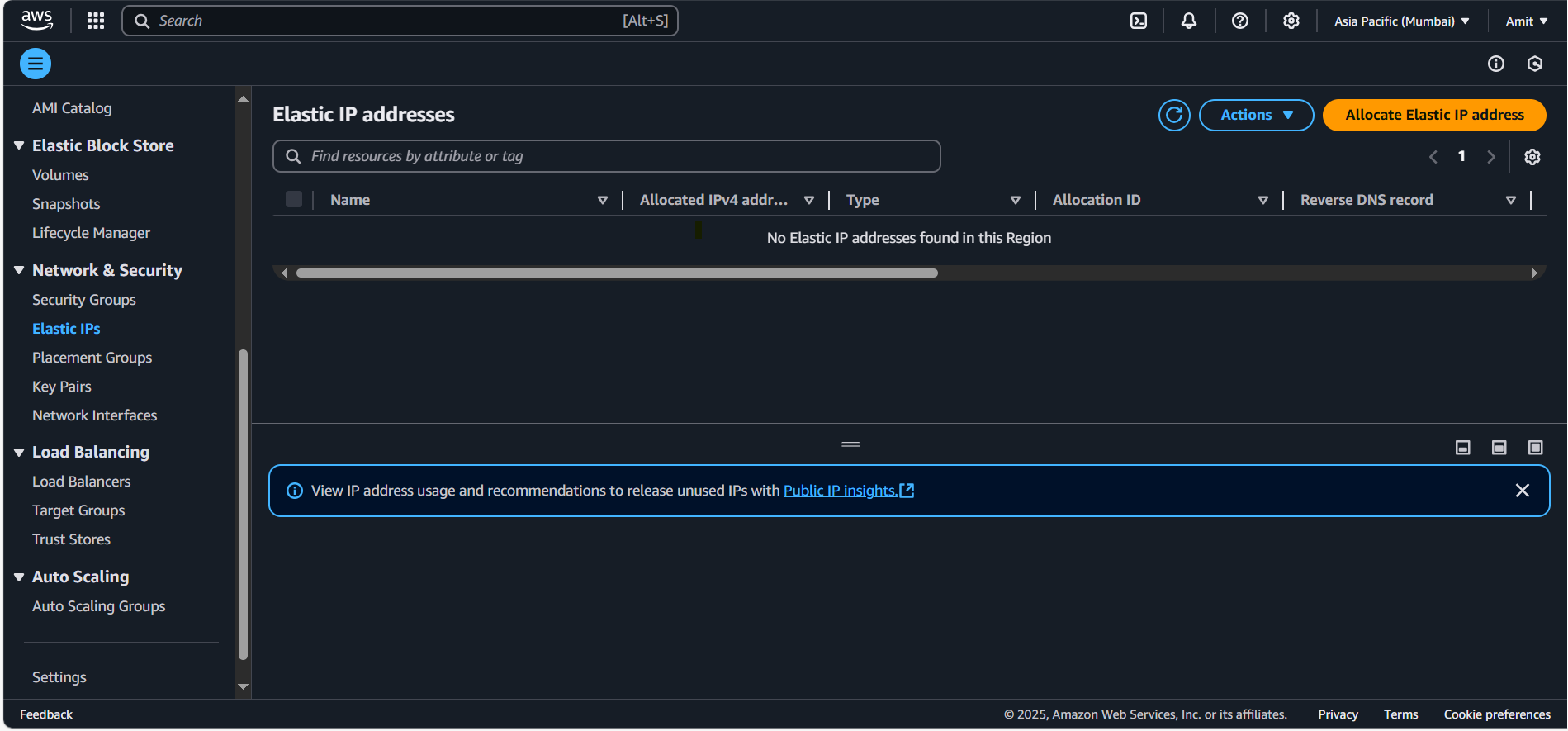

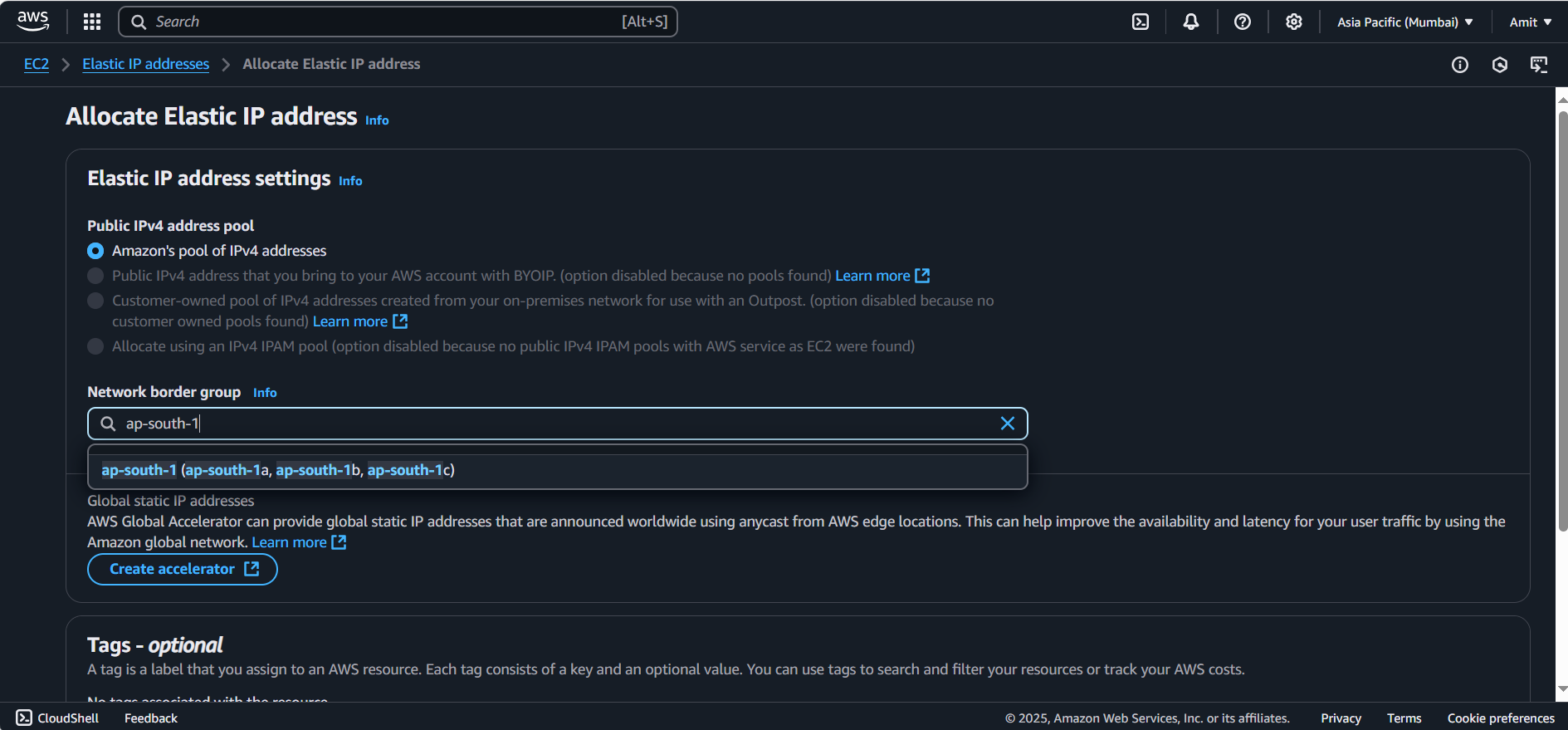
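If you prefer the command line, the inbound rules above can also be added with the AWS CLI. The sketch below is a minimal example, assuming the AWS CLI is configured and the security group already exists; the group ID is a placeholder and the helper name is hypothetical. It also opens the custom ports mentioned above, and it allows 0.0.0.0/0 by default, which is convenient for a lab; pass your own IP range as the second argument to restrict access.

```shell
# Sketch: add the inbound rules used in this project to an existing security group.
# Assumes the AWS CLI is installed and configured (aws configure).
open_project_ports() {
  local sg_id="$1"              # placeholder, e.g. sg-0123456789abcdef0
  local cidr="${2:-0.0.0.0/0}"  # e.g. 203.0.113.10/32 to allow only your own IP
  local port
  # 22 SSH, 80 HTTP, 443 HTTPS, 8080 Jenkins, 9000 SonarQube, 8081 Netflix app
  for port in 22 80 443 8080 9000 8081; do
    aws ec2 authorize-security-group-ingress \
      --group-id "$sg_id" \
      --protocol tcp \
      --port "$port" \
      --cidr "$cidr" || return 1
  done
}

# Usage: open_project_ports sg-0123456789abcdef0
```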

Elastic IP:

Go to Elastic IP > Allocate Elastic IP > Allocate.

Name the Elastic IP, e.g., Netflix-ElasticIP.

Associate this IP with your EC2 instance. The instance then keeps the same public IP across stops and starts, which matters because you will likely complete the project over several sessions.
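The same Elastic IP steps can be sketched with the AWS CLI. This assumes a recent AWS CLI v2 (for `--tag-specifications` on `allocate-address`) and a placeholder instance ID; the helper name is hypothetical.

```shell
# Sketch: allocate an Elastic IP tagged Netflix-ElasticIP and attach it to an instance.
# Prints the allocation ID on success.
allocate_and_associate_eip() {
  local instance_id="$1"   # placeholder, e.g. i-0123456789abcdef0
  local alloc_id
  # --query/--output extract just the new allocation ID as plain text
  alloc_id=$(aws ec2 allocate-address \
    --domain vpc \
    --tag-specifications 'ResourceType=elastic-ip,Tags=[{Key=Name,Value=Netflix-ElasticIP}]' \
    --query AllocationId --output text) || return 1
  # Attach the address; the JSON response is discarded so only the ID is printed
  aws ec2 associate-address --instance-id "$instance_id" --allocation-id "$alloc_id" >/dev/null || return 1
  echo "$alloc_id"
}
```

Usage: `allocate_and_associate_eip i-0123456789abcdef0`.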

Step 2: SSH Into Your EC2 Instance

Update the package list, install Docker, and let your user run Docker without sudo:

sudo apt-get update

sudo apt-get install docker.io -y

sudo usermod -aG docker $USER # $USER expands to the current user, e.g., 'ubuntu'

newgrp docker

sudo chmod 777 /var/run/docker.sock

Verify the Docker installation:

docker --version

Step 3: Clone the GitHub Repository

git clone https://github.com/amitsinghs98/DevSecOps-Project-Netflix.git

To run the project locally without the Dockerfile, you would need npm installed.

Dev Part: Run the Application Locally with the Dockerfile

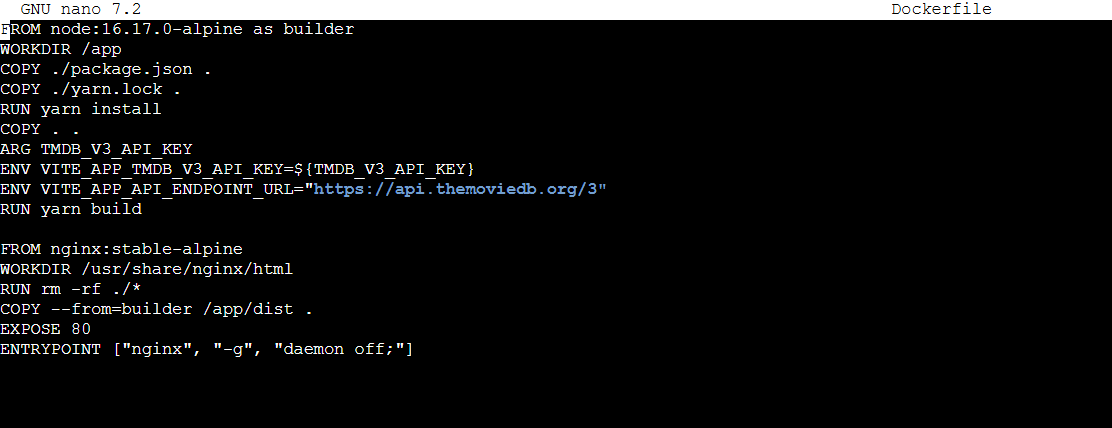

The Dockerfile contains various instructions such as:

Base Image: The application is built using node:16.

Working Directory: Defined using WORKDIR.

Copying Files: Required files are copied to the container.

Defining an Argument: The application requires a TMDB V3 API Key for fetching movie data.

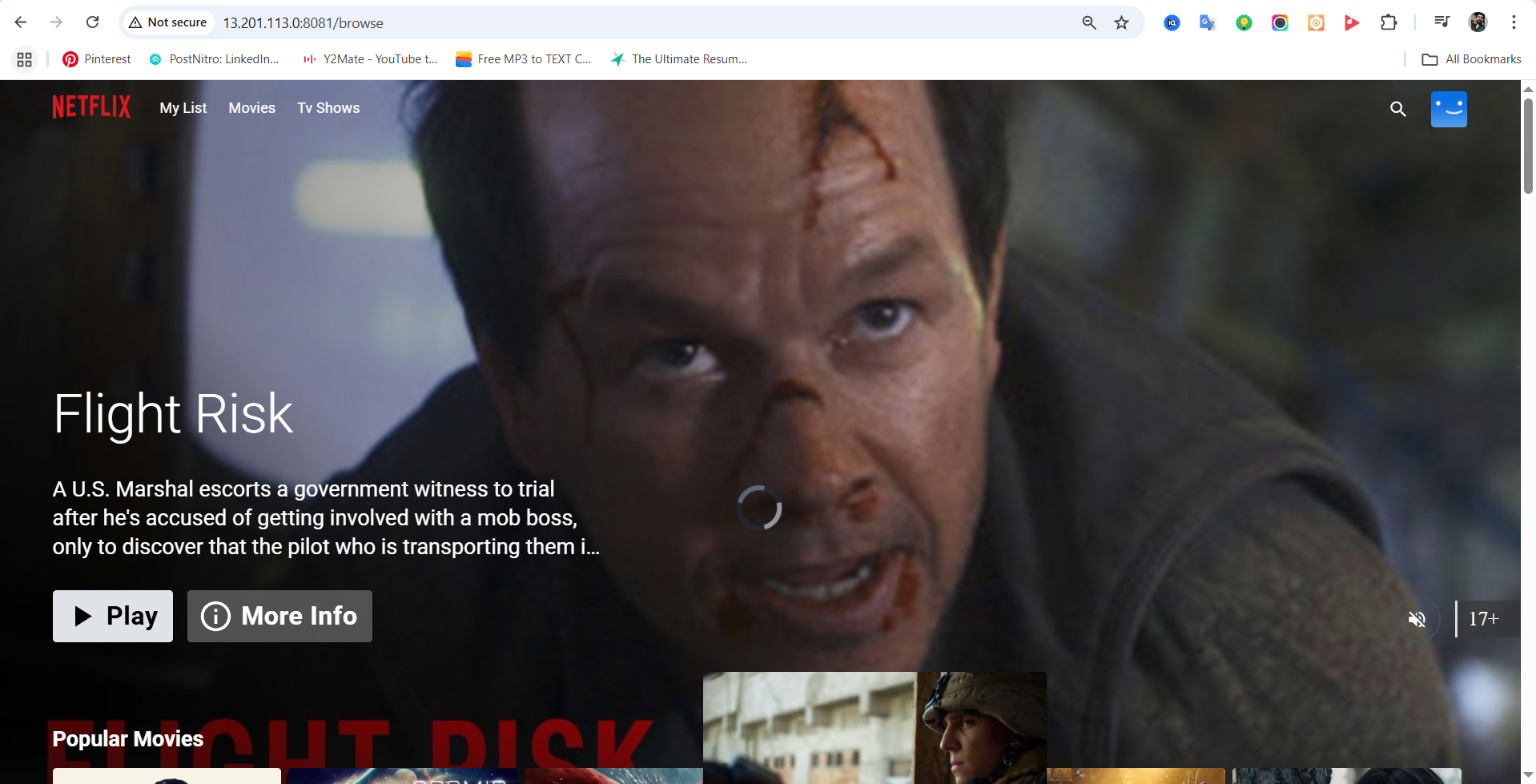

Try running the project with the Dockerfile:

docker build -t netflix .

docker run -d -p 8081:80 netflix:latest

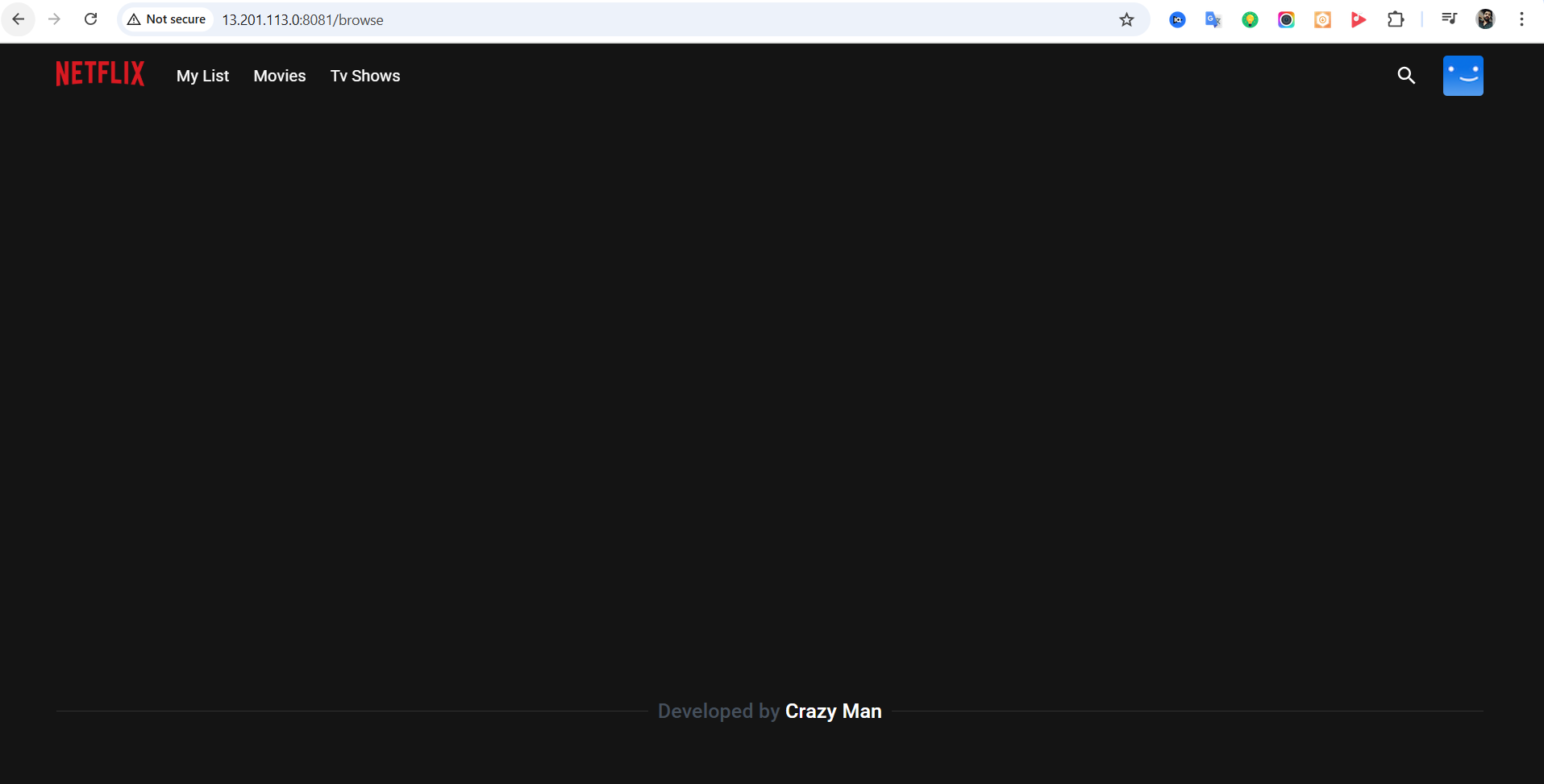

Make sure port 8081 is open in the instance's security group, then open publicIP:8081 in your browser.

You will see a blank screen, because the API key is not configured yet and the app cannot fetch any data.

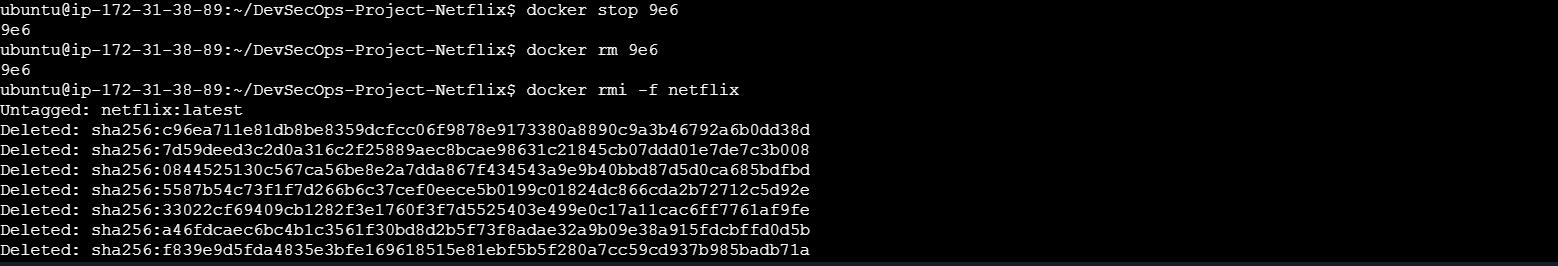

To clean up, stop the container and remove the image:

docker stop <containerid>

docker rmi -f netflix

Set Up a TMDB API Key to Fetch Application Data

Note: The app needs an API key to fetch movie data, and TMDB provides these keys.

Understanding API and TMDB

API (Application Programming Interface) allows communication between different services.

TMDB (The Movie Database) provides an API to fetch details of movies and series.

Use Case: We will fetch movie and series data from TMDB into our Netflix clone.

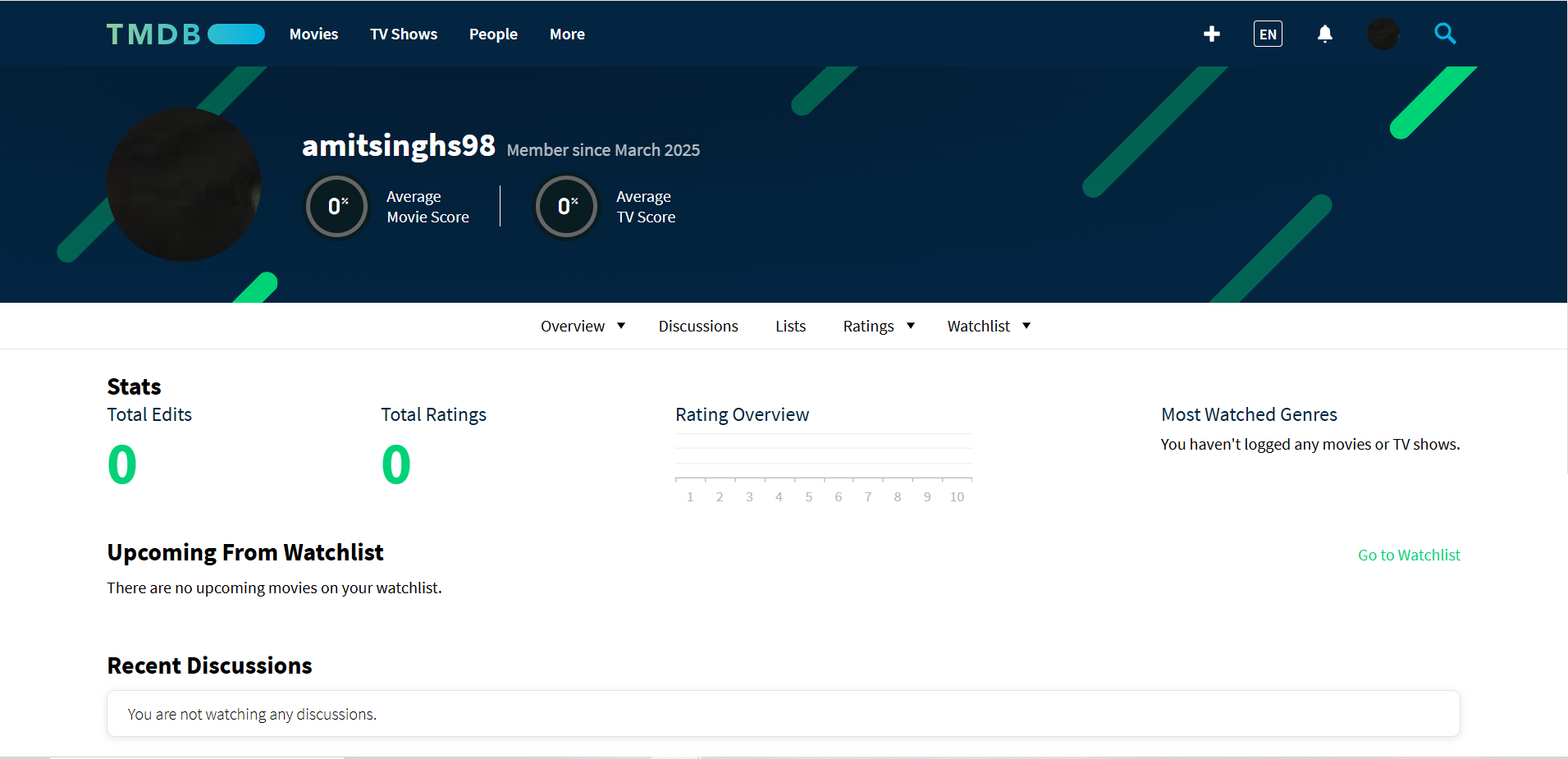

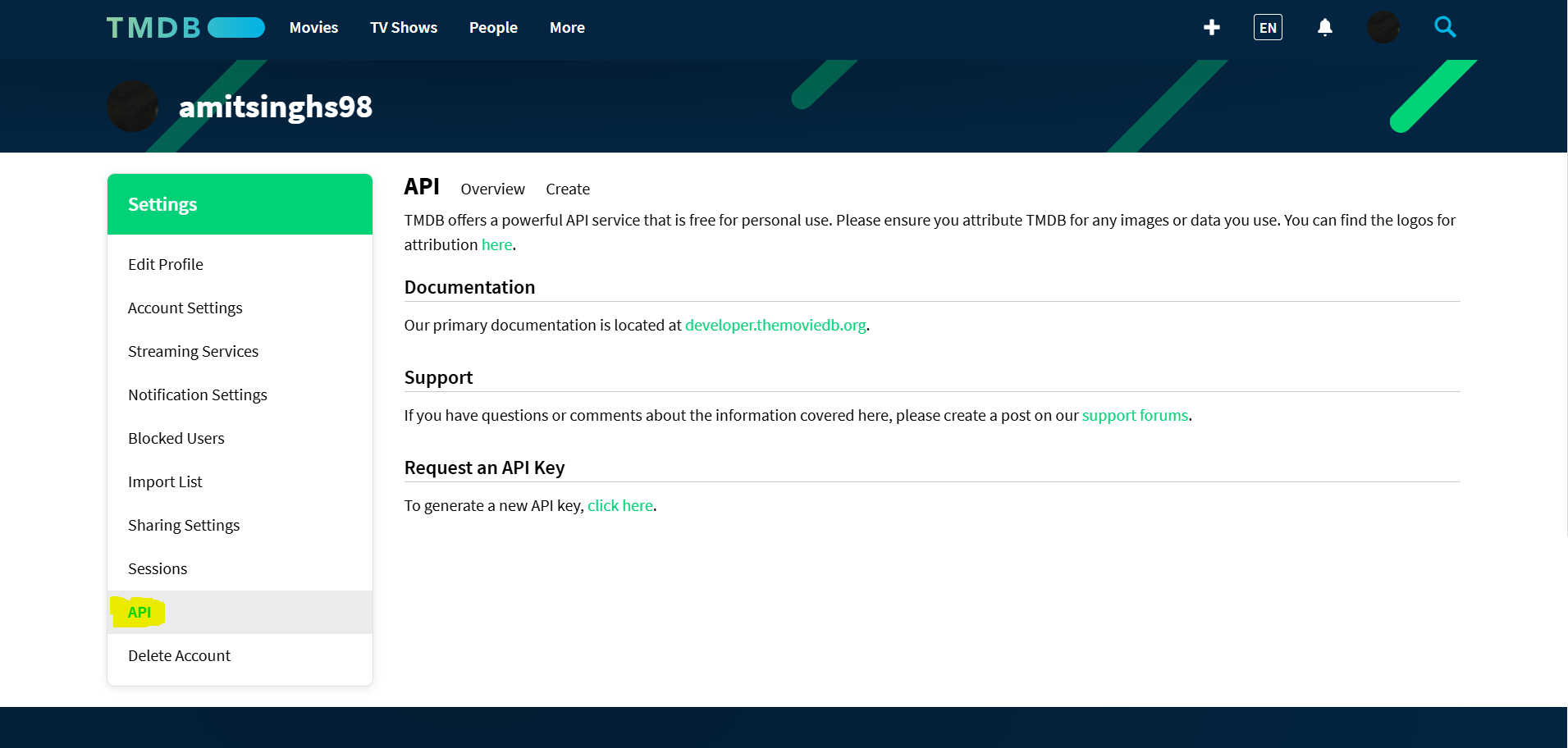

Log in to TMDB and go to Settings > API.

Create a new API key by clicking "Create" and accepting the terms and conditions.

Provide the required basic details and click "Submit."

You will receive your TMDB API key.

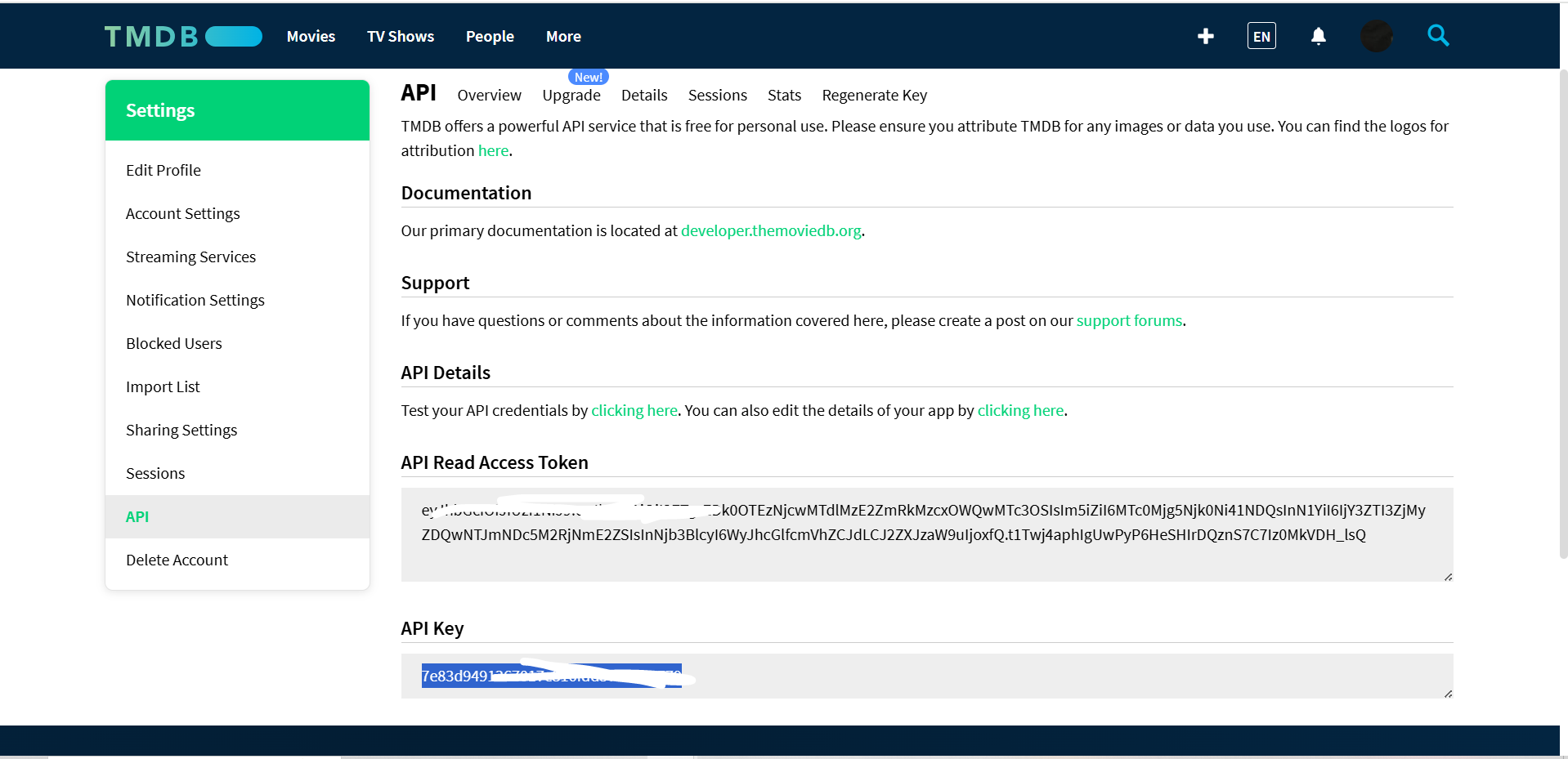

Rebuilding Docker Image with API Key

Since the API key is required, we need to rebuild the Docker image with the argument:

docker build --build-arg TMDB_V3_API_KEY=<your-api-key> -t netflix .

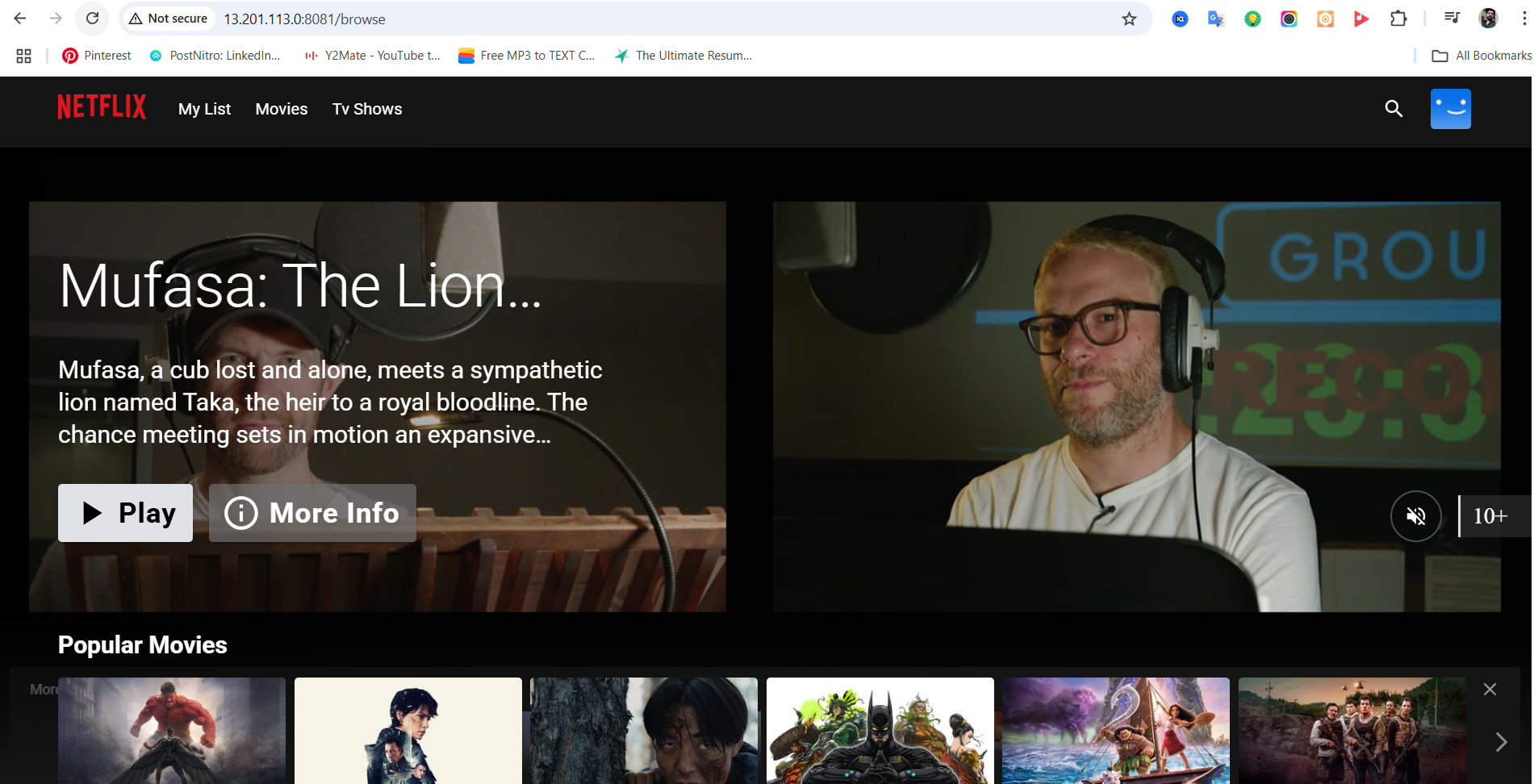
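To avoid pasting the key into every command (and into your shell history), you can keep it in an environment variable. A minimal sketch, assuming you export `TMDB_V3_API_KEY` yourself; the helper name is hypothetical. Keep in mind that build-arg values can still be visible in the image metadata (for example via `docker history`), so treat the resulting image as containing the key.

```shell
# Sketch: build the image with the TMDB key read from the environment.
# Optional argument: image name (defaults to netflix).
build_netflix_image() {
  if [ -z "${TMDB_V3_API_KEY:-}" ]; then
    echo "TMDB_V3_API_KEY is not set; run: export TMDB_V3_API_KEY=<your-api-key>" >&2
    return 1
  fi
  docker build --build-arg TMDB_V3_API_KEY="$TMDB_V3_API_KEY" -t "${1:-netflix}" .
}
```

Usage: `export TMDB_V3_API_KEY=<your-api-key>` once per session, then `build_netflix_image`.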

Then, run the new container:

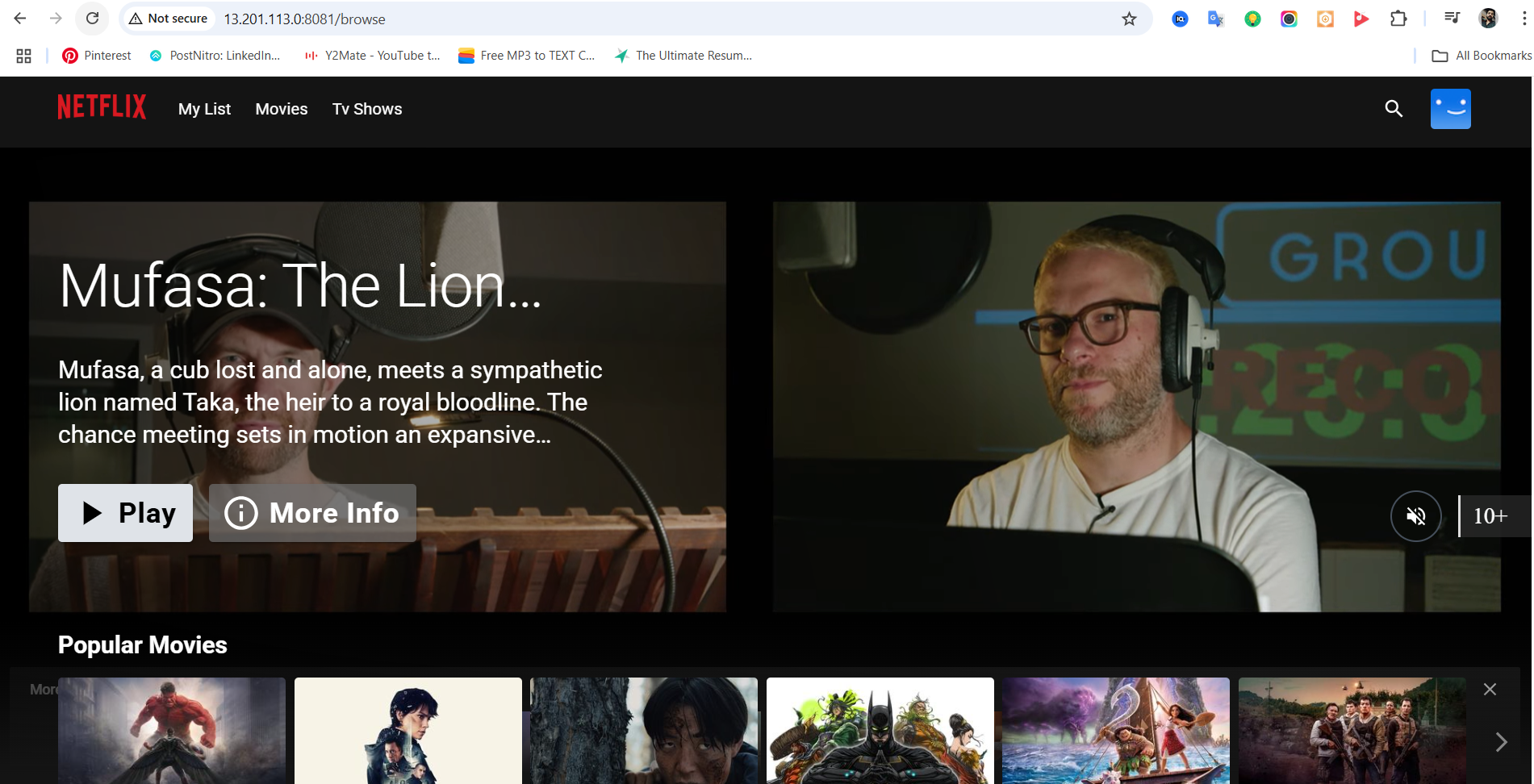

docker run -d -p 8081:80 netflix

Now, refresh the application. You should see all the movies and series listed.

The Dev part is done; next comes the Sec part.

Phase 2: Security

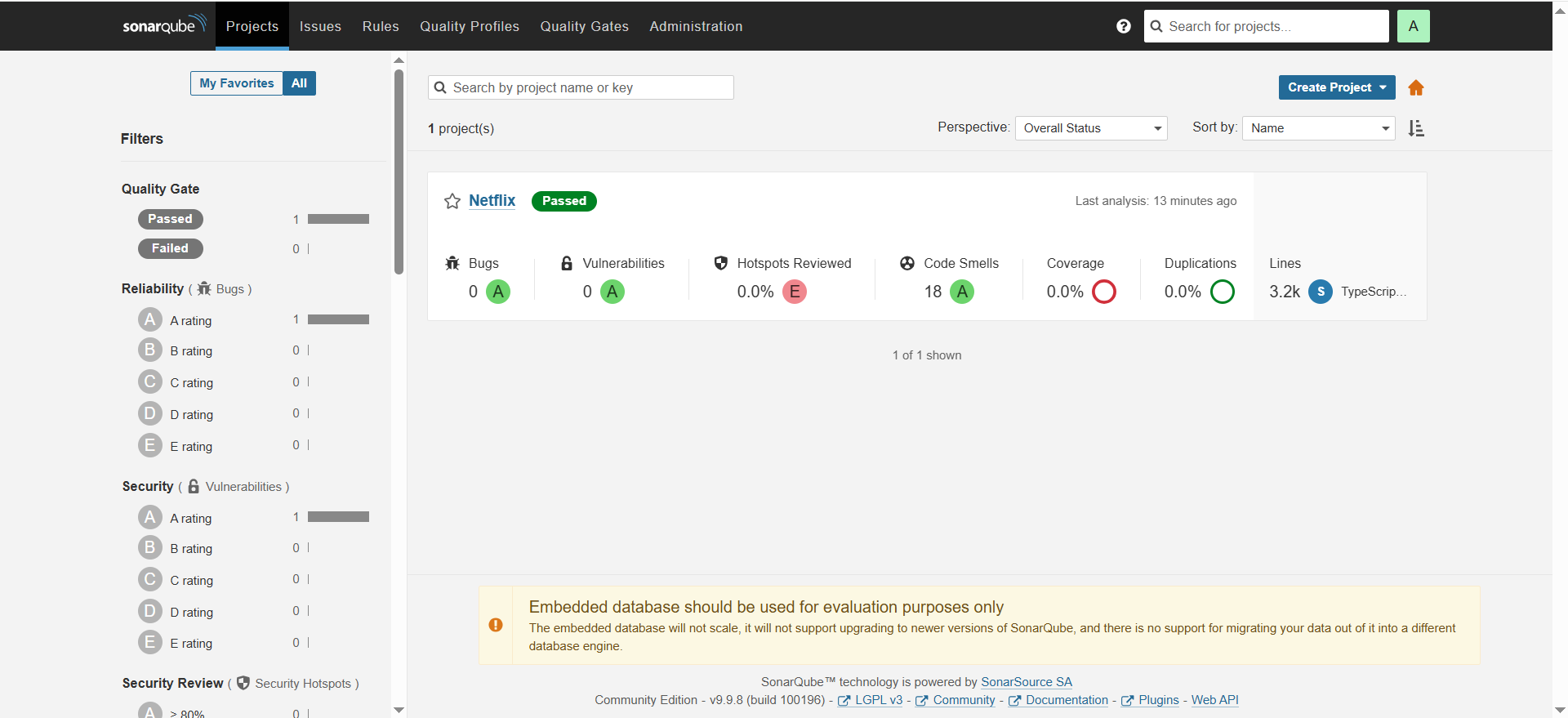

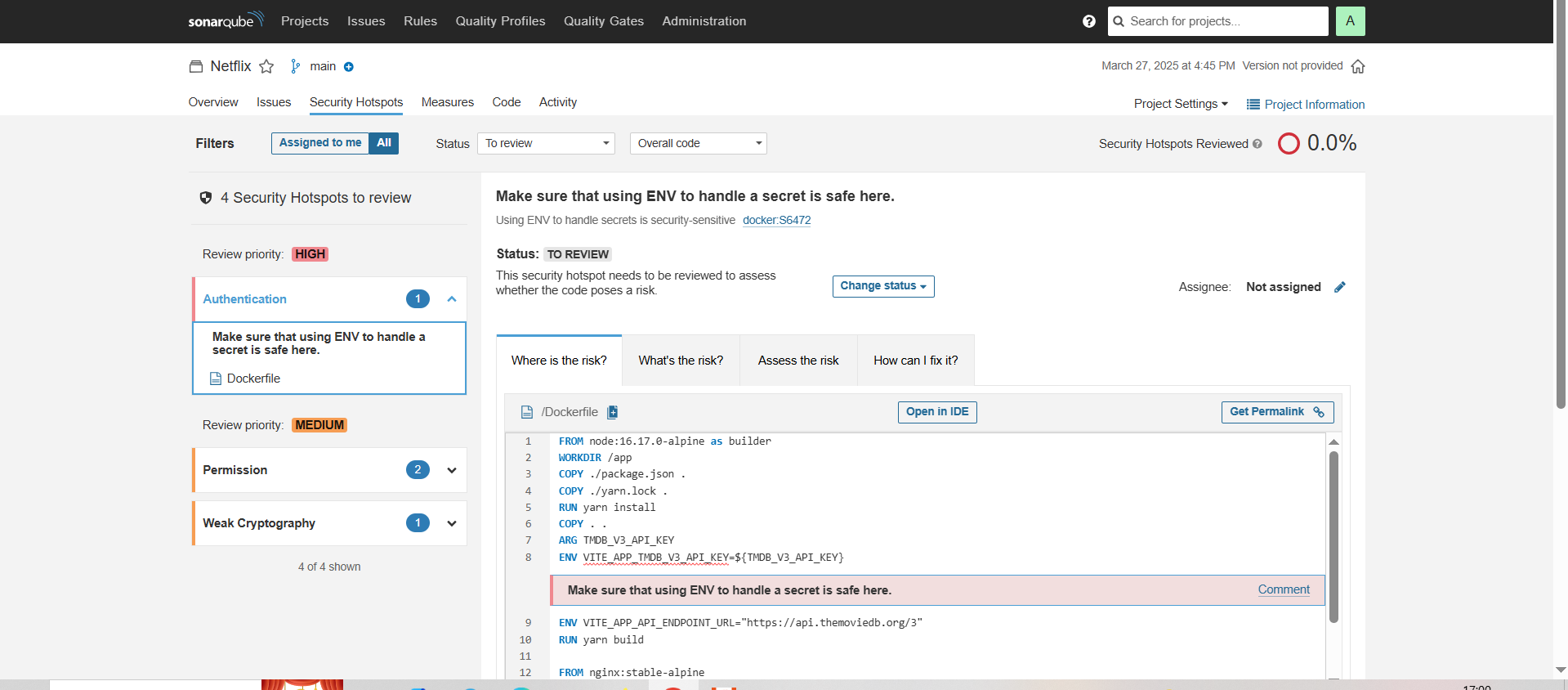

Adding Security with SonarQube

Understanding SonarQube

SonarQube is used for code analysis and security scanning.

It helps identify bugs, code smells, and security vulnerabilities in your code.

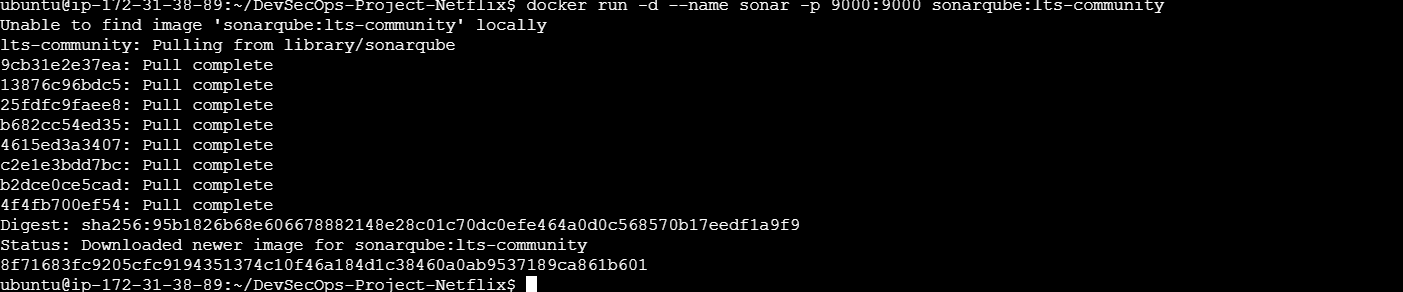

Running SonarQube as a Docker Container

Use the following command to deploy SonarQube:

docker run -d --name sonar -p 9000:9000 sonarqube

Verify the running containers:

docker ps
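SonarQube takes a while to start inside the container, and the UI may not load during that time. The sketch below polls SonarQube's `/api/system/status` endpoint until it reports `UP`; the helper name, retry count, and delay are assumptions, and it assumes the compact JSON the endpoint normally returns.

```shell
# Sketch: wait until SonarQube's web API reports status UP.
# Arguments (all optional): base URL, number of attempts, seconds between attempts.
wait_for_sonarqube() {
  local url="${1:-http://localhost:9000}"
  local tries="${2:-30}"
  local delay="${3:-10}"
  local i=1
  while [ "$i" -le "$tries" ]; do
    # The endpoint returns JSON such as {"id":"...","version":"...","status":"UP"};
    # while starting, the status differs (e.g. STARTING) or curl gets no answer at all
    if curl -s "$url/api/system/status" | grep -q '"status":"UP"'; then
      echo "SonarQube is up at $url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "SonarQube did not report UP after $tries attempts" >&2
  return 1
}

# Usage on the EC2 instance: wait_for_sonarqube http://localhost:9000
```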

Accessing SonarQube

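Open http://localhost:9000 in a browser on the instance, or publicIP:9000 from your own machine (make sure port 9000 is open in the security group). By default, both the username and the password are admin.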

Once logged in, you can start scanning your code for vulnerabilities.

Installing and Using Trivy for Security Scanning

Trivy is an open-source security scanner for detecting vulnerabilities in containers, file systems, and repositories. It reports the problems it finds in your file system or Docker image.

To install Trivy:

sudo apt-get install wget apt-transport-https gnupg lsb-release

wget -qO - https://aquasecurity.github.io/trivy-repo/deb/public.key | sudo apt-key add -

echo deb https://aquasecurity.github.io/trivy-repo/deb $(lsb_release -sc) main | sudo tee -a /etc/apt/sources.list.d/trivy.list

sudo apt-get update

sudo apt-get install trivy

trivy version

We install Trivy directly on the Jenkins instance and call it from the command line, since we are not using a Jenkins plugin for Trivy.

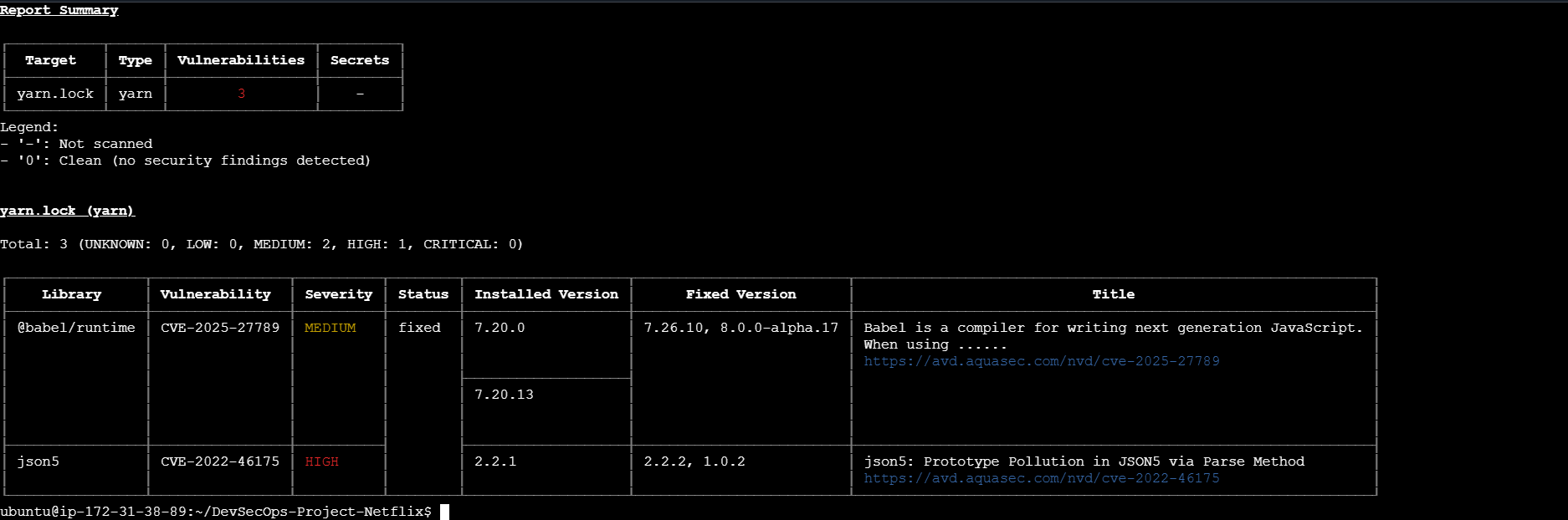

Scanning the File System

To scan the current file system for vulnerabilities:

trivy fs .

Trivy will generate a report listing vulnerabilities categorized as low, medium, high, and critical.


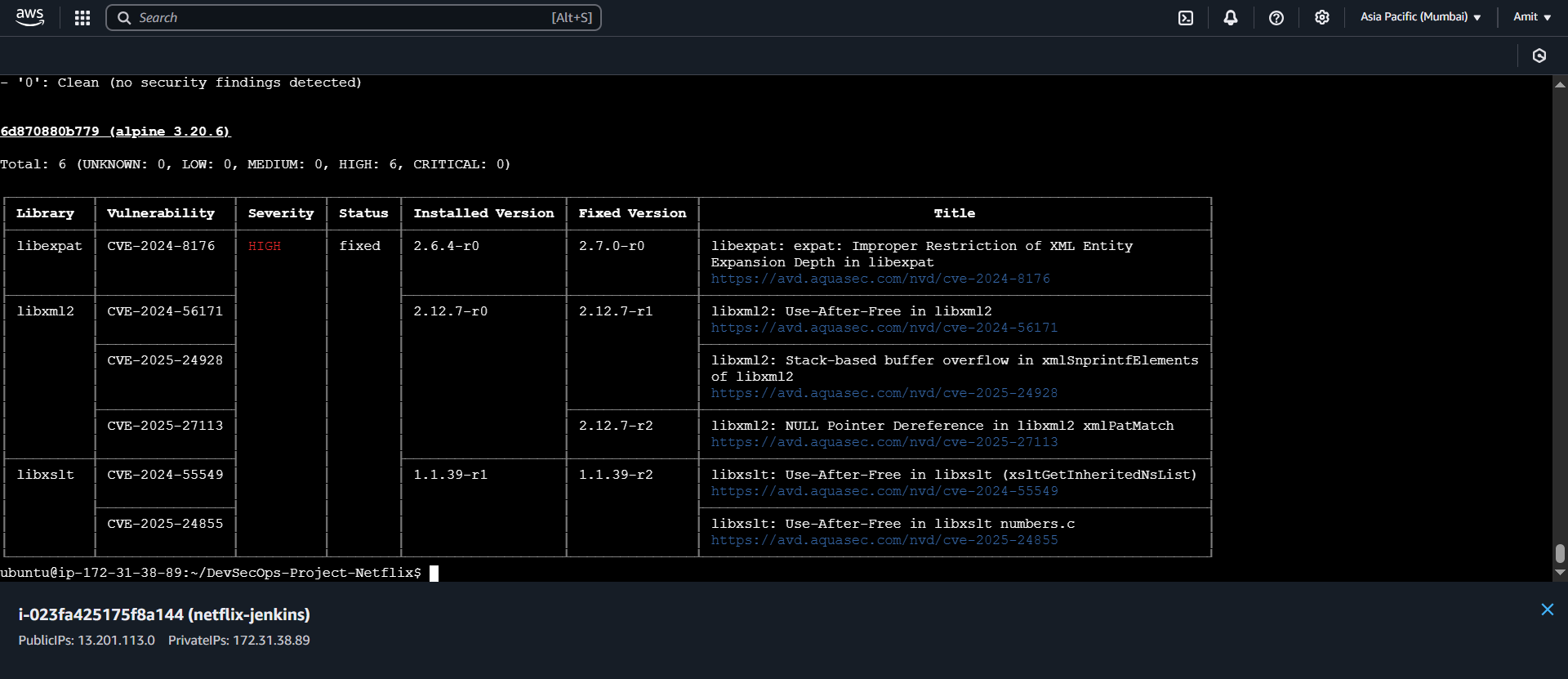

Scanning Docker Images for Vulnerabilities

Check for security issues in your Netflix app image:

docker images

trivy image netflix:latest # or write image id
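By default Trivy only reports findings and exits with code 0. If you want a scan to fail (for example, in a later pipeline stage), `--severity` and `--exit-code` can turn it into a gate. A minimal sketch; the HIGH/CRITICAL threshold and the helper name are our own choices, not part of the original setup.

```shell
# Sketch: fail when Trivy finds HIGH or CRITICAL vulnerabilities.
# --severity limits the report to those levels; --exit-code 1 makes trivy
# return 1 when any such vulnerability is found.
trivy_gate() {
  local image="${1:-netflix:latest}"
  trivy fs --severity HIGH,CRITICAL --exit-code 1 . || return 1
  trivy image --severity HIGH,CRITICAL --exit-code 1 "$image" || return 1
  echo "No HIGH/CRITICAL findings in the file system or $image"
}
```

Run it from the project directory, since the file system scan targets the current directory.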

So far, we have used SonarQube and Trivy for security.

Phase 3: CI/CD Setup

Install Jenkins for Automation:

- Install Jenkins on the EC2 instance to automate deployment. Java is a prerequisite, so install it first:

sudo apt update

sudo apt install fontconfig openjdk-17-jre

java -version

Example output (the exact build string will differ on your system):

openjdk version "17.0.8" 2023-07-18

OpenJDK Runtime Environment (build 17.0.8+7-Debian-1deb12u1)

OpenJDK 64-Bit Server VM (build 17.0.8+7-Debian-1deb12u1, mixed mode, sharing)

Install Jenkins:

sudo wget -O /usr/share/keyrings/jenkins-keyring.asc \
  https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key

echo deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] \
  https://pkg.jenkins.io/debian-stable binary/ | sudo tee \
  /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt-get update

sudo apt-get install jenkins

sudo systemctl start jenkins

sudo systemctl enable jenkins

Access Jenkins in a web browser using the public IP of your EC2 instance.

publicIp:8080

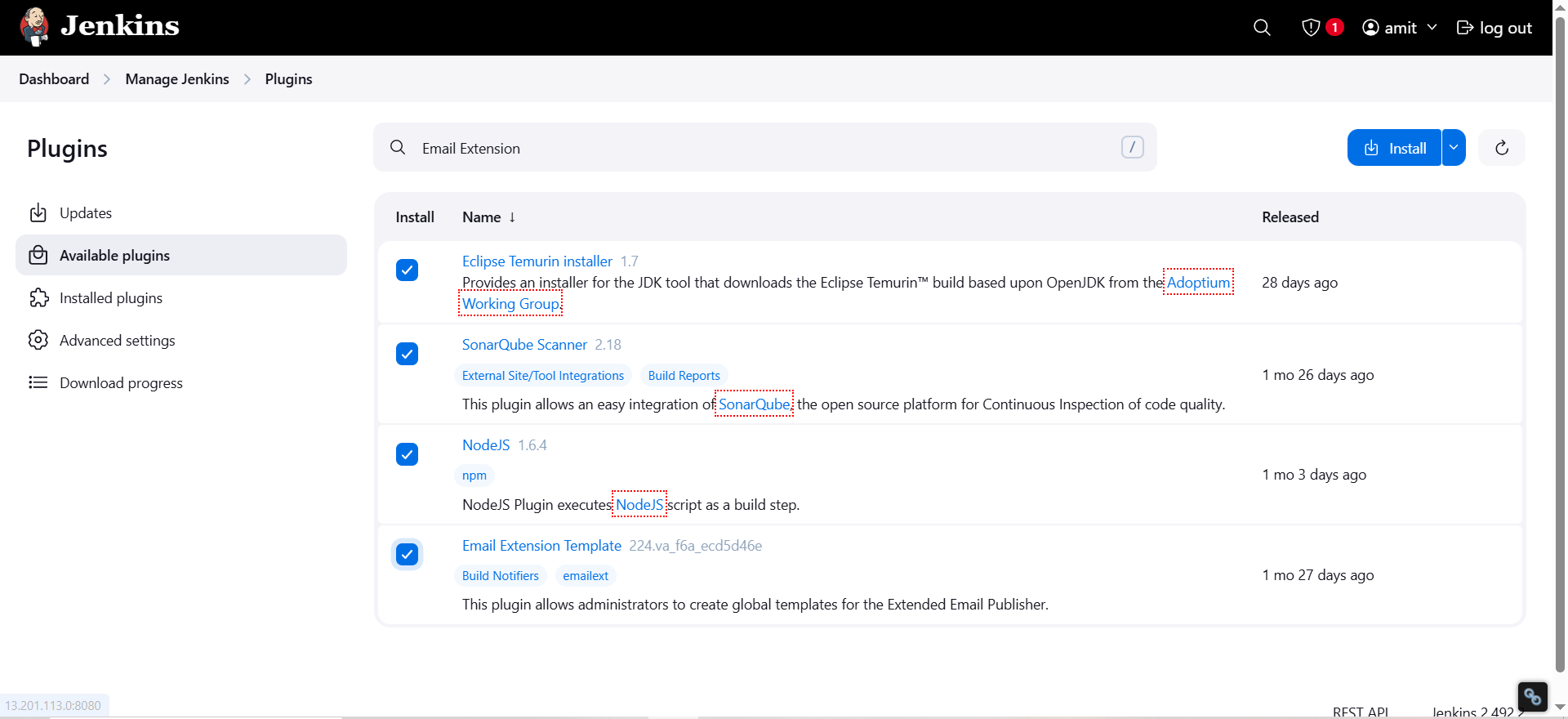
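On first access, Jenkins asks for an initial admin password, which it writes to `/var/lib/jenkins/secrets/initialAdminPassword`. The sketch below waits until Jenkins answers on port 8080 and then prints that password; the helper name and the retry budget (30 attempts, 5 seconds apart) are assumptions.

```shell
# Sketch: wait for Jenkins, then print the setup wizard's initial admin password.
# Arguments (optional): Jenkins URL, path to the password file.
jenkins_first_login() {
  local url="${1:-http://localhost:8080}"
  local pw_file="${2:-/var/lib/jenkins/secrets/initialAdminPassword}"
  local tries=0 code
  while :; do
    # -o /dev/null discards the body; -w prints only the HTTP status (000 = no connection)
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url/login")
    case "$code" in
      000|503) ;;          # not listening yet, or still starting up
      *) break ;;
    esac
    tries=$((tries + 1))
    if [ "$tries" -ge 30 ]; then
      echo "Jenkins did not come up at $url" >&2
      return 1
    fi
    sleep 5
  done
  echo "Jenkins is responding at $url (HTTP $code)"
  # The file is normally readable only by root and the jenkins user
  if [ -r "$pw_file" ]; then
    cat "$pw_file"
  else
    sudo cat "$pw_file"
  fi
}
```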

Install Necessary Plugins in Jenkins:

Go to Manage Jenkins → Plugins → Available Plugins and install the plugins below:

Eclipse Temurin Installer (Install without restart)

SonarQube Scanner (Install without restart)

NodeJs Plugin (Install Without restart)

Email Extension Plugin

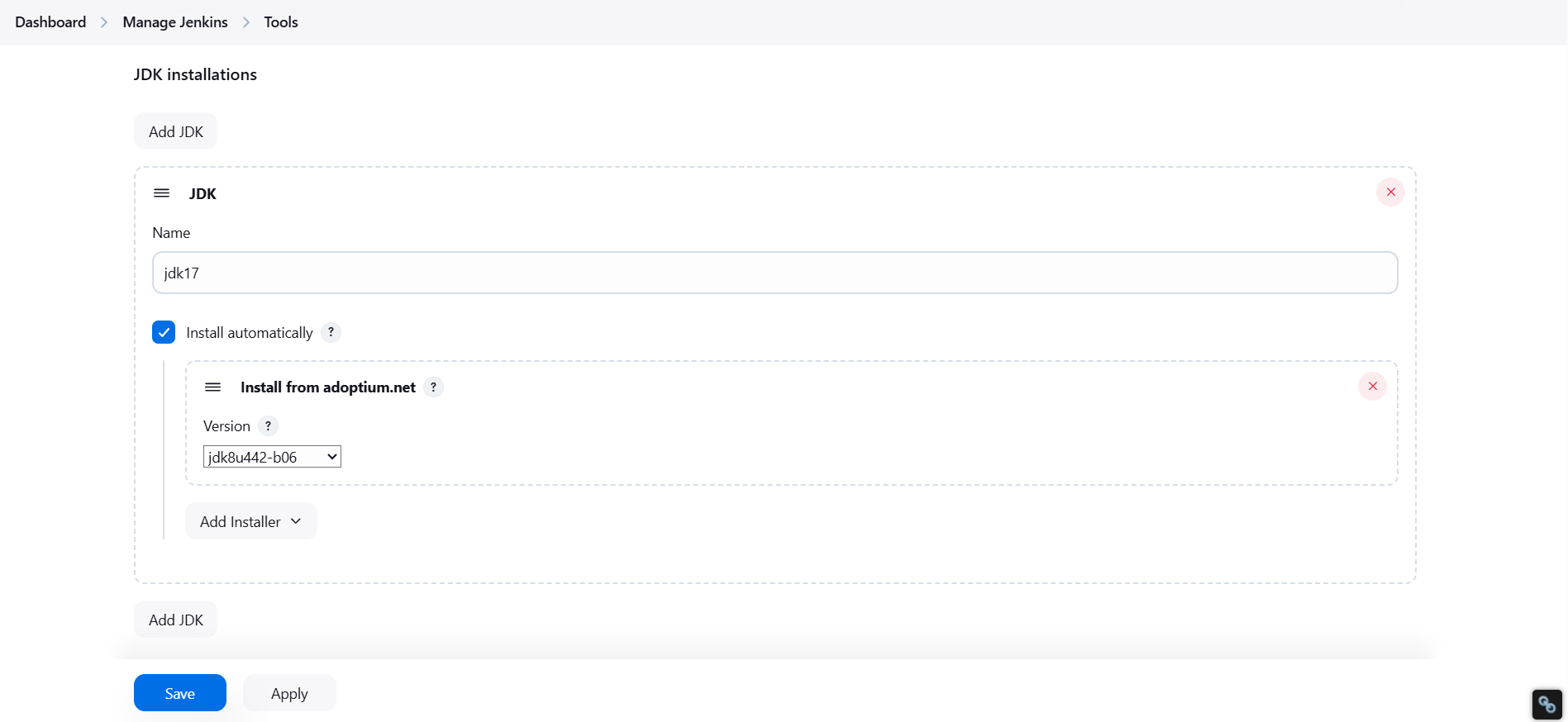

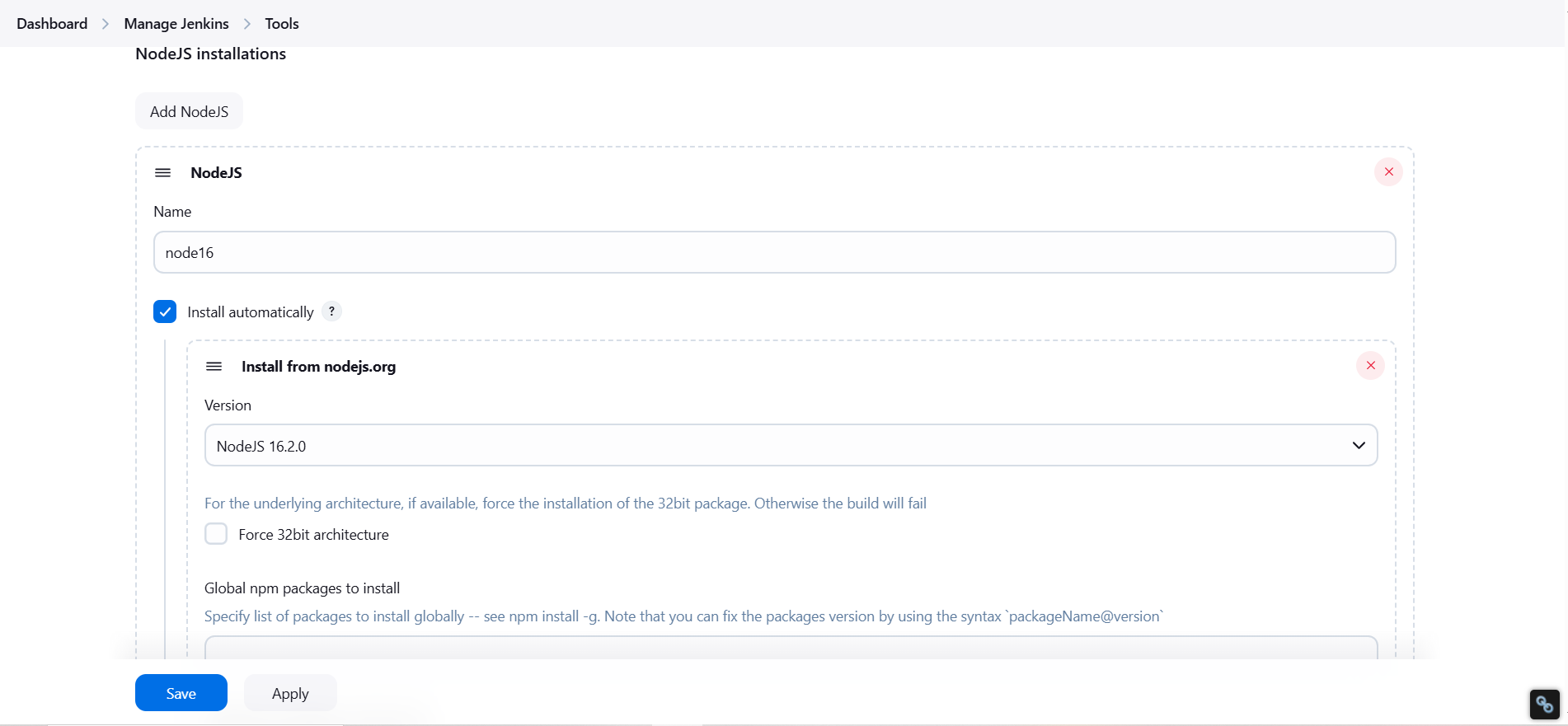

Configure Java and Nodejs in Global Tool Configuration

Go to Manage Jenkins → Tools, install JDK (17) and NodeJS (16), then click Apply and Save.

Make sure you select JDK version 17, and name the installations jdk17 and node16, since the pipeline refers to them by those names.

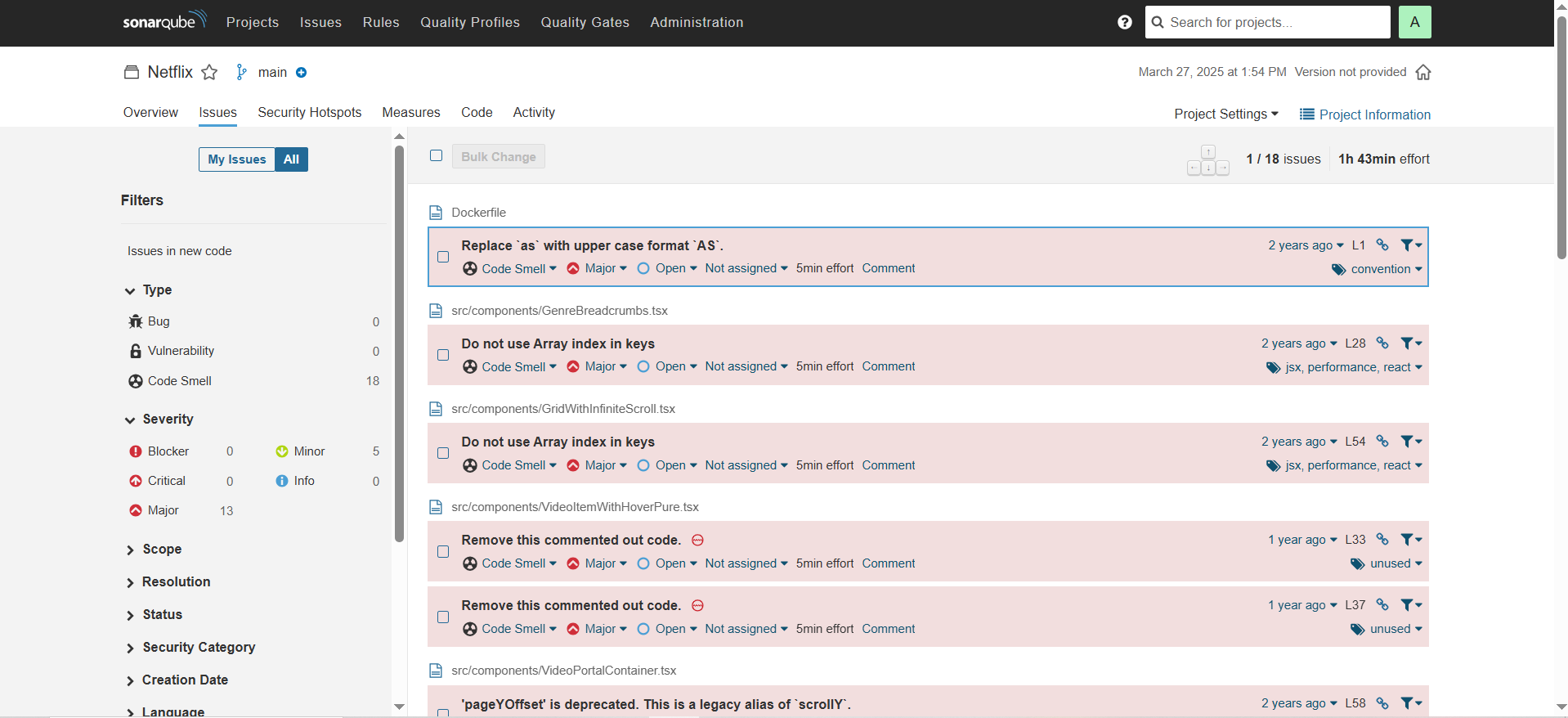

SonarQube

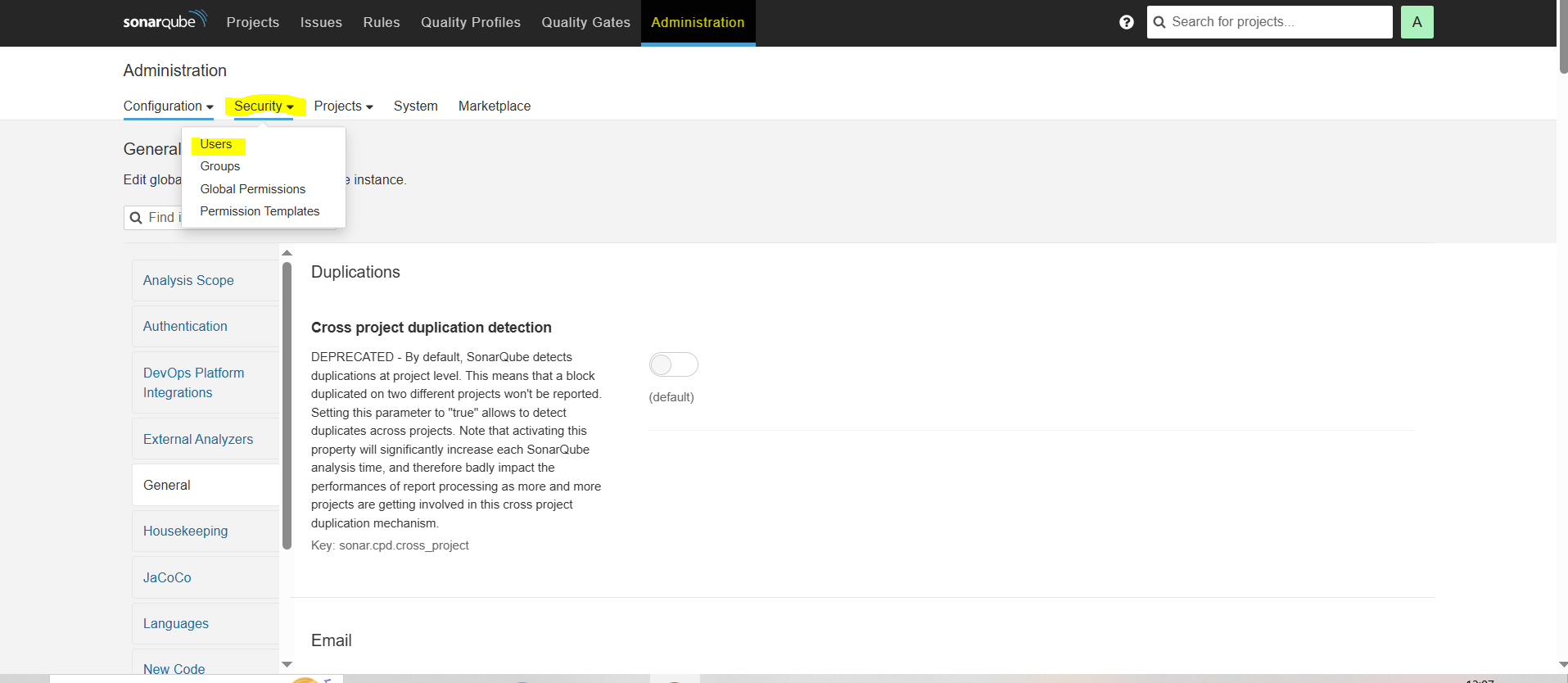

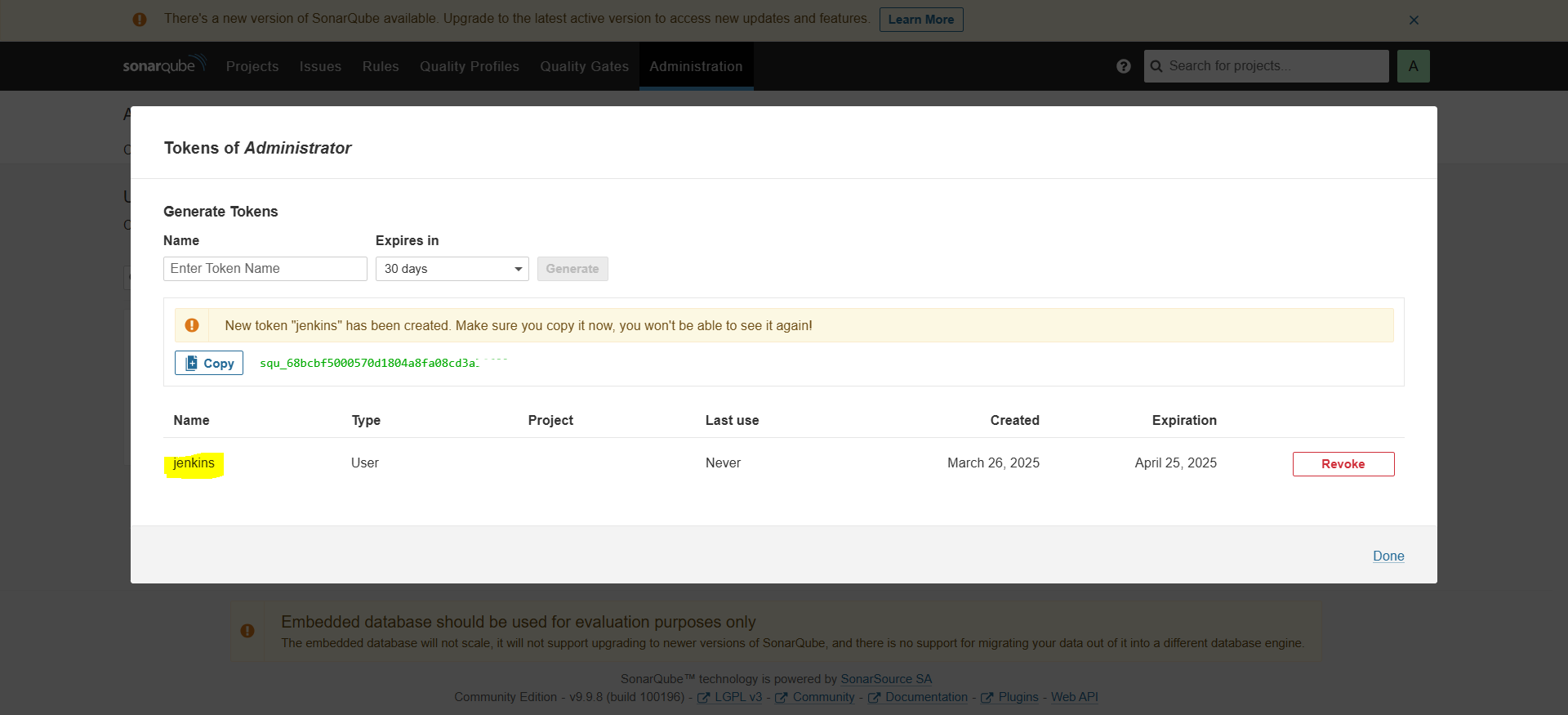

Create a token: in SonarQube, go to Administration → Security → Users and generate a token for your user.

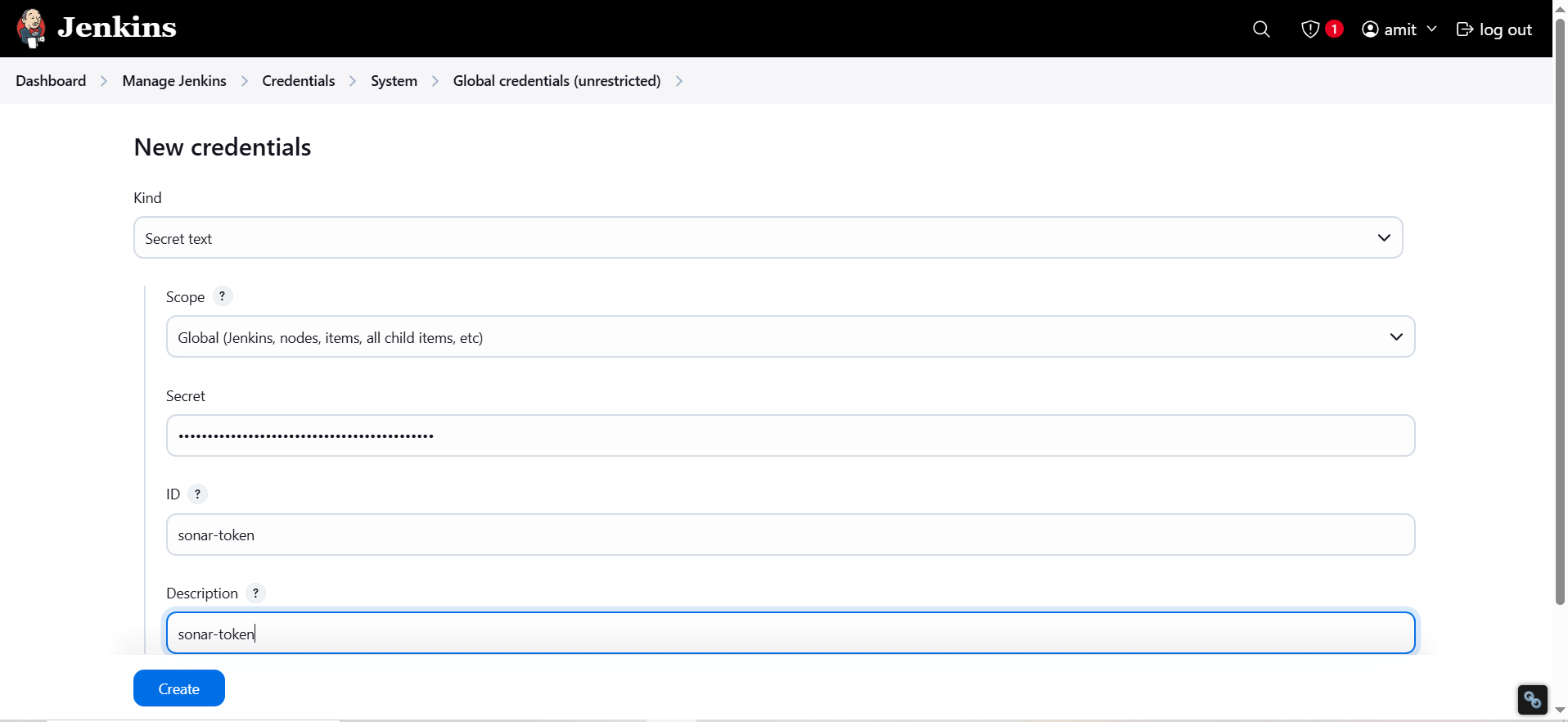

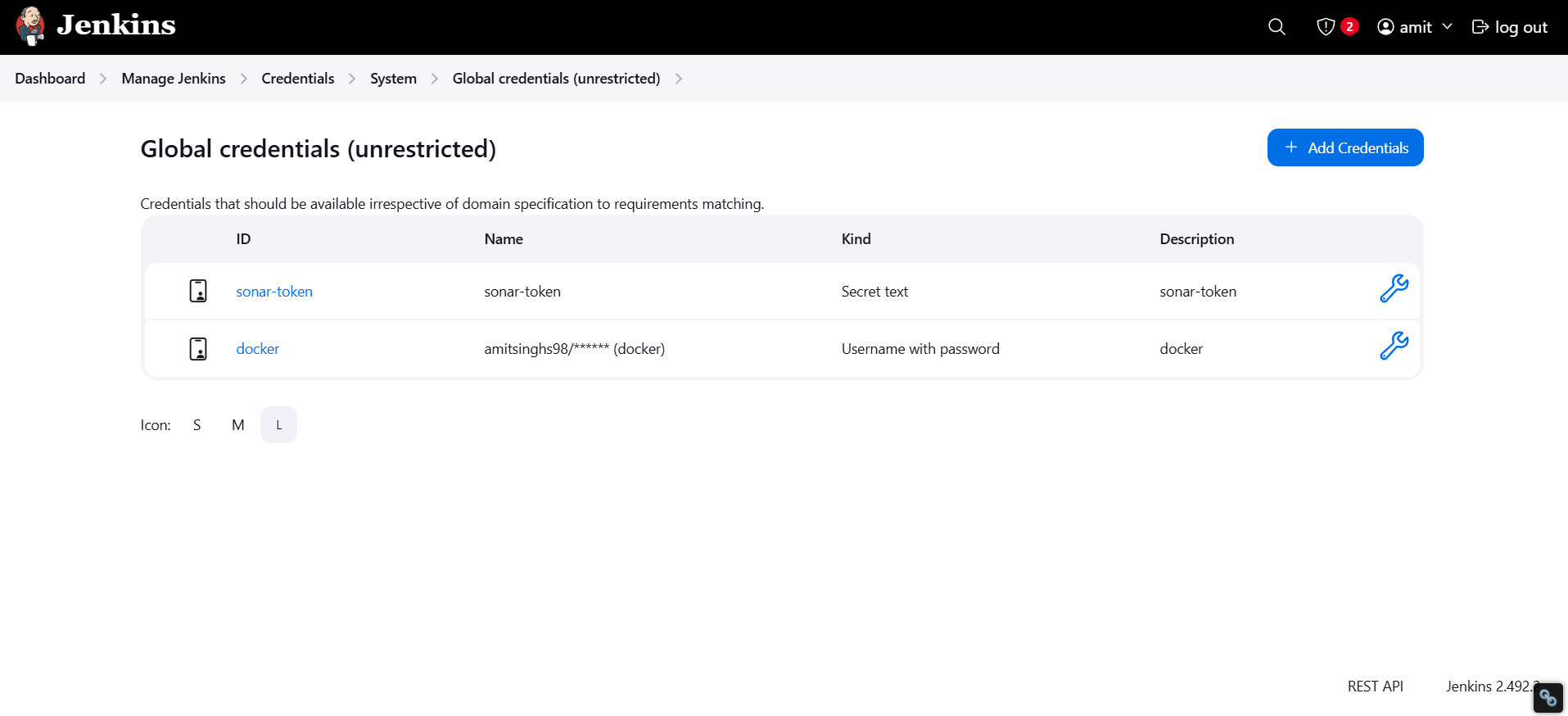

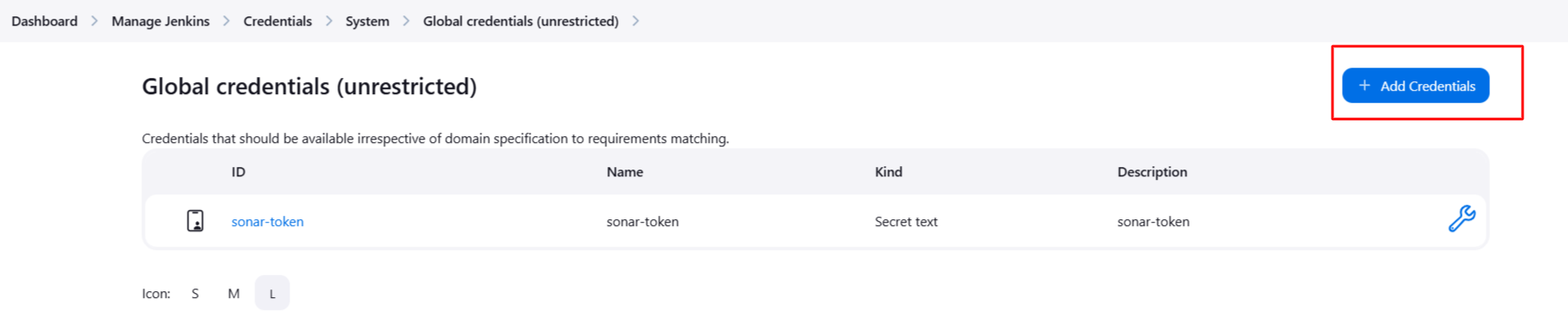

Go to Jenkins Dashboard → Manage Jenkins → Credentials and add the token as Secret text with the ID sonar-token (the ID used by the quality gate stage). It should look like the screenshot below.

Click Apply and Save.

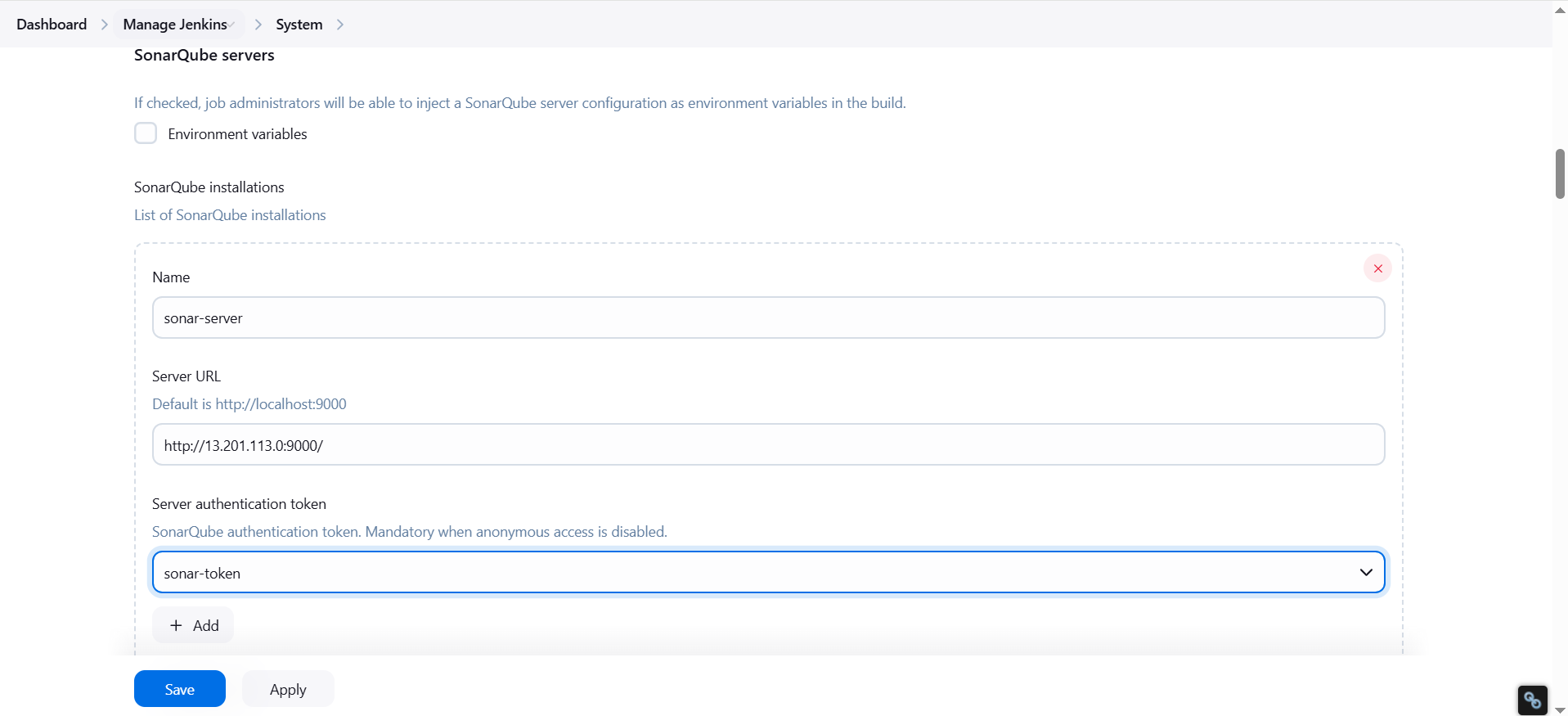

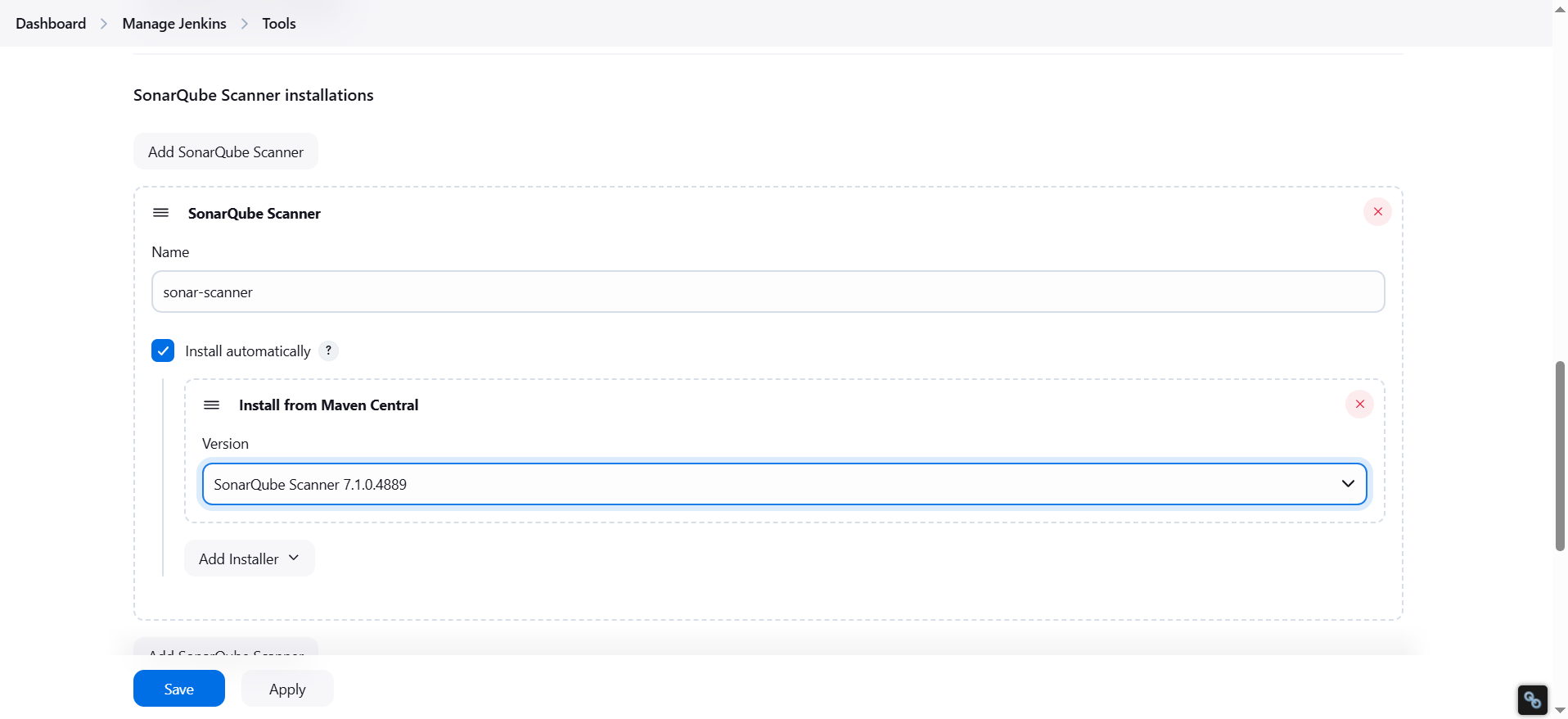

Configure System is where Jenkins stores connections to external servers; here we add the SonarQube server under the name sonar-server, which the pipeline references.

Global Tool Configuration (Tools) is where we configure tools installed through plugins.

We will also add the SonarQube Scanner under Tools, named sonar-scanner.

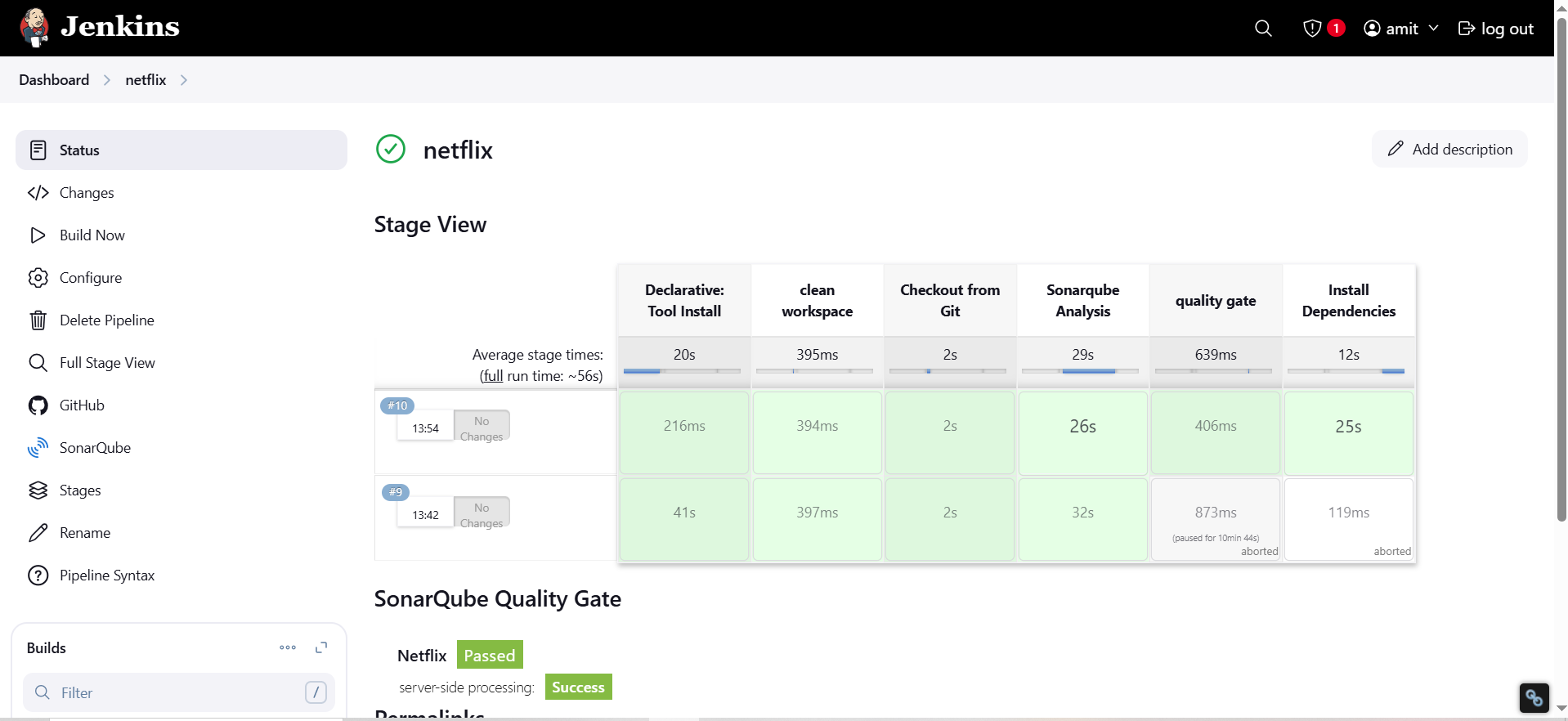

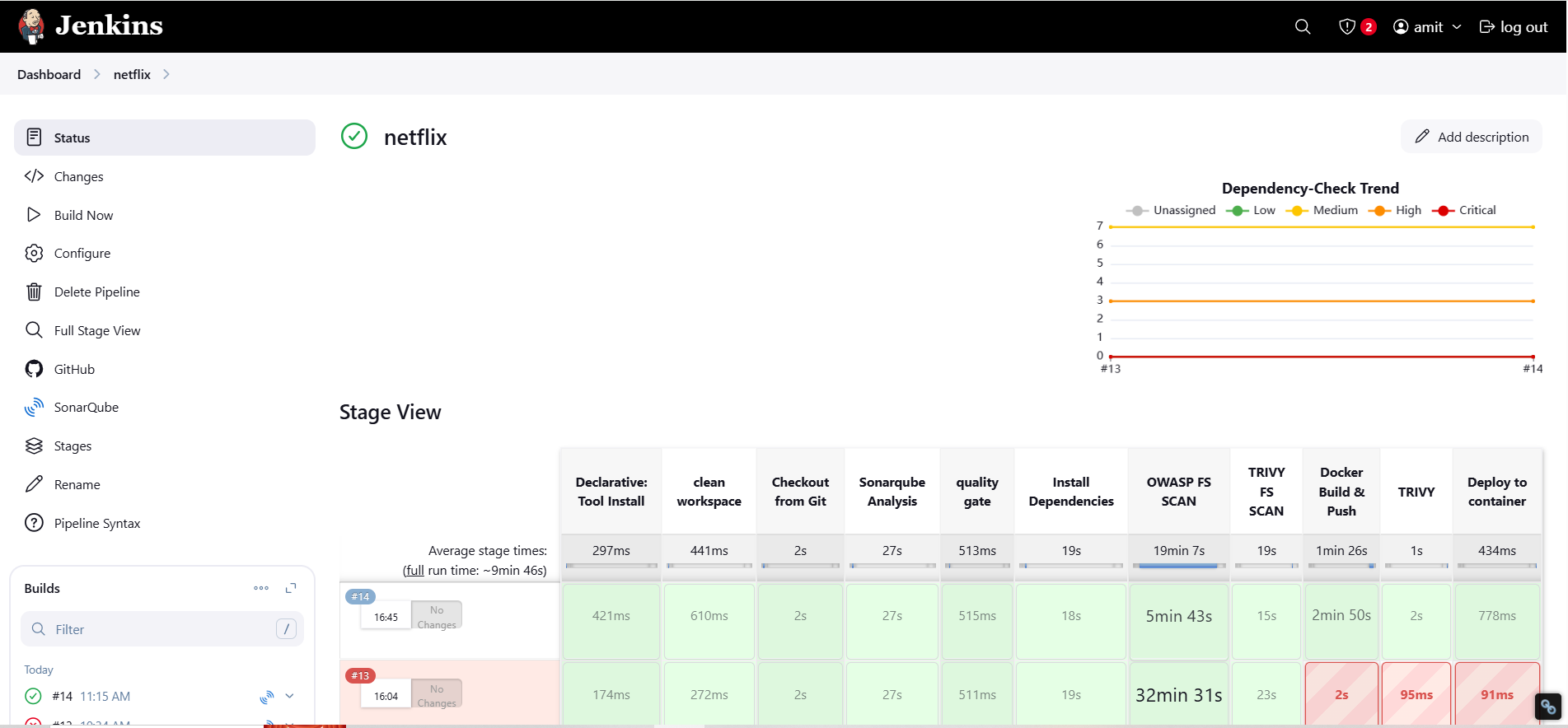

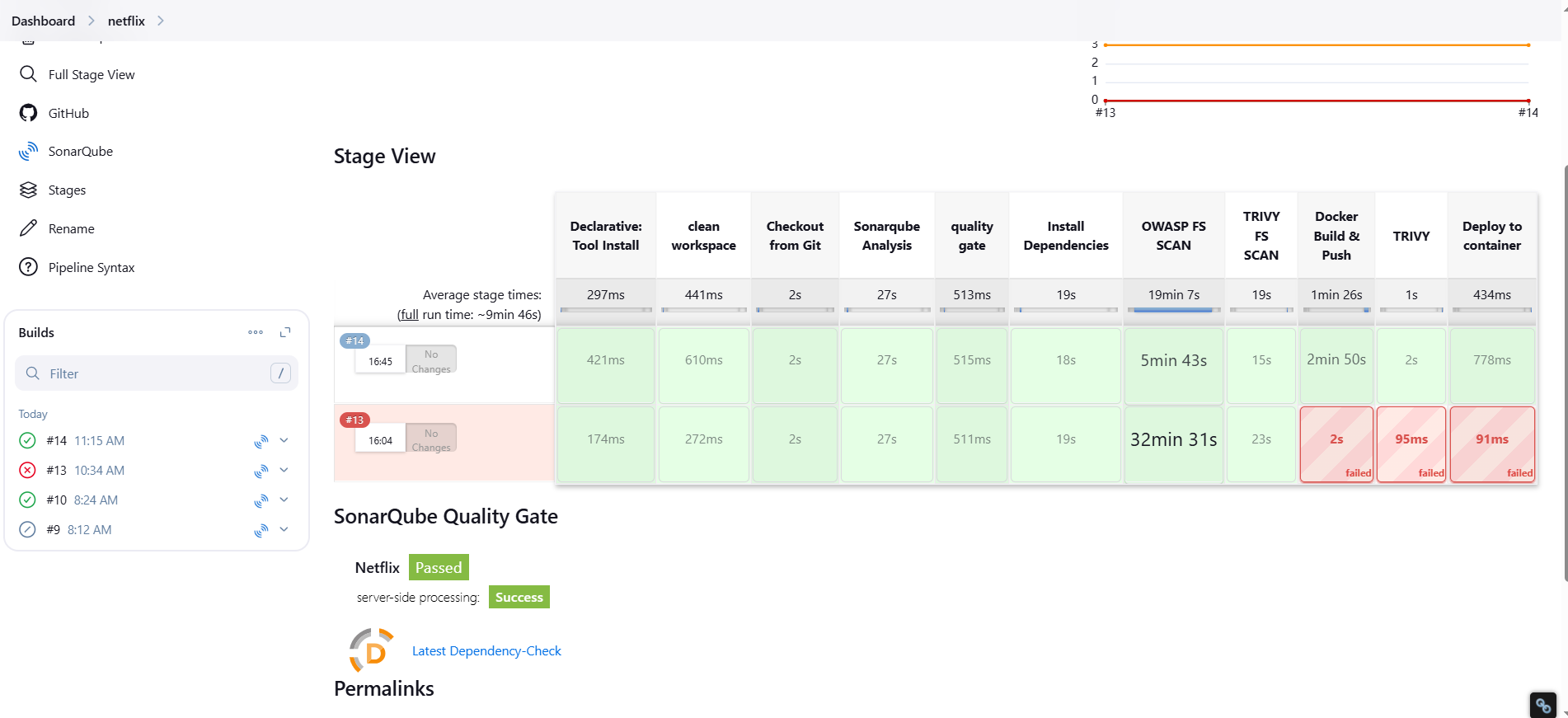

Run the pipeline

Configure CI/CD Pipeline in Jenkins:

- Create a CI/CD pipeline in Jenkins to automate your application deployment.

pipeline {
    agent any
    tools {
        jdk 'jdk17'
        nodejs 'node16'
    }
    environment {
        SCANNER_HOME = tool 'sonar-scanner'
    }
    stages {
        stage('clean workspace') {
            steps {
                cleanWs()
            }
        }
        stage('Checkout from Git') {
            steps {
                git branch: 'main', url: 'https://github.com/amitsinghs98/DevSecOps-Project-Netflix.git'
            }
        }
        stage("Sonarqube Analysis") {
            steps {
                withSonarQubeEnv('sonar-server') {
                    sh '''$SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Netflix \
                    -Dsonar.projectKey=Netflix'''
                }
            }
        }
        stage("quality gate") {
            steps {
                script {
                    timeout(time: 40, unit: 'MINUTES') {
                        waitForQualityGate abortPipeline: true, credentialsId: 'sonar-token'
                    }
                }
            }
        }
        stage('Install Dependencies') {
            steps {
                sh "npm install"
            }
        }
    }
}

We have created a basic pipeline; next we will add stages that improve security and deploy the application.

Enhancing Security in CI/CD Pipeline

To ensure security in the pipeline, we are integrating:

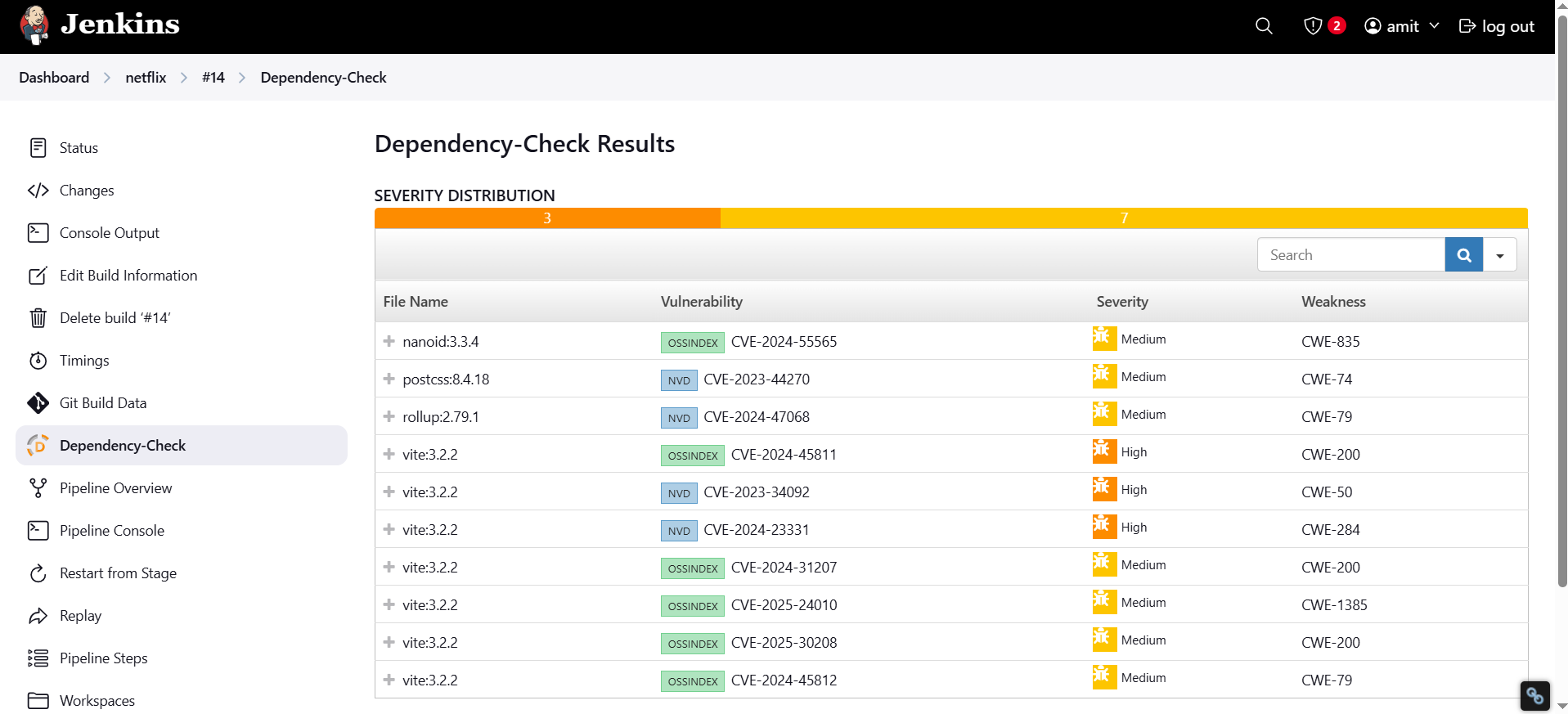

OWASP Dependency Check → Identifies vulnerabilities in dependencies

Trivy → Scans Docker images for security issues

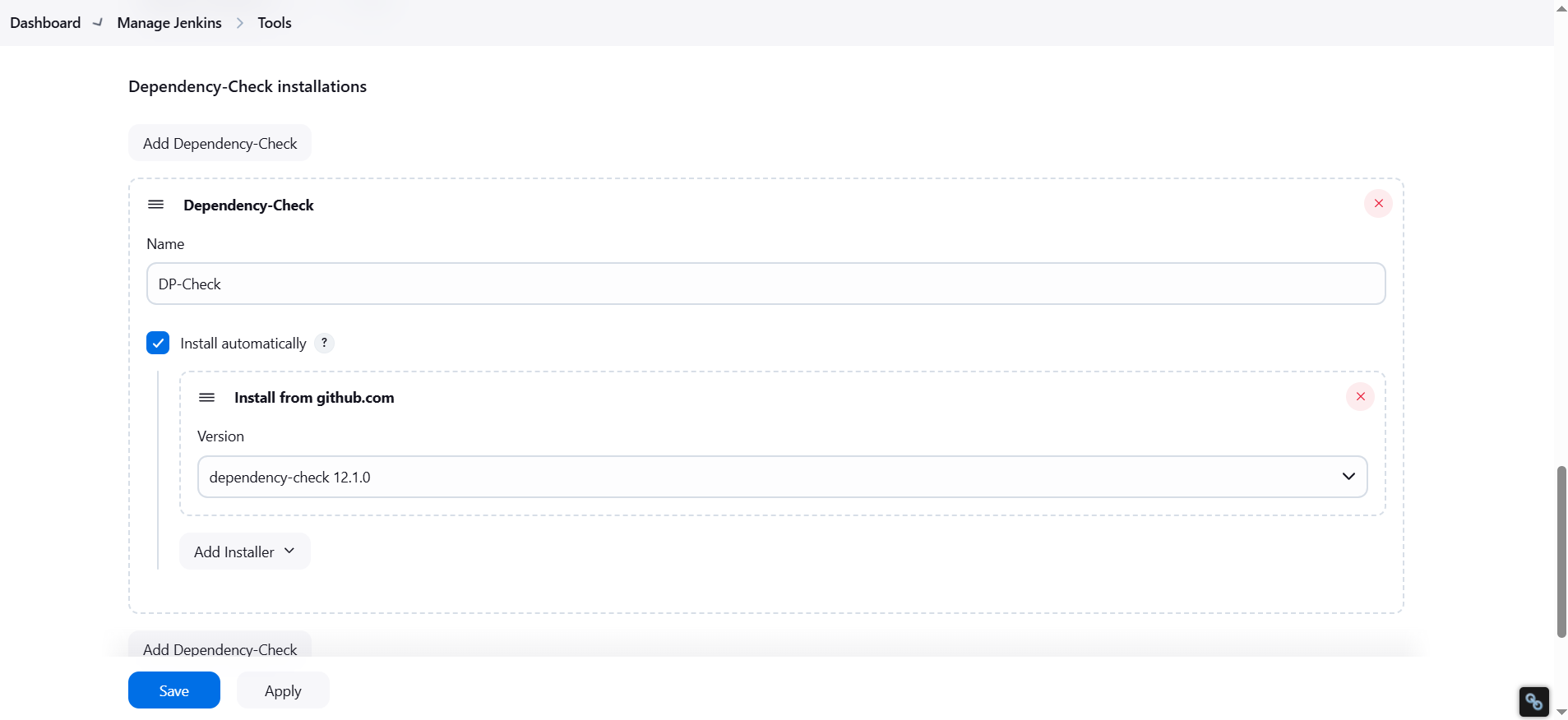

Installing OWASP Dependency Check Plugin in Jenkins

To scan dependencies for security vulnerabilities:

Navigate to Manage Jenkins > Plugin Manager > Available Plugins

Search for "OWASP Dependency Check" and install it

Configure the plugin in Manage Jenkins > Global Tool Configuration

Name the installation DP-Check, since the pipeline refers to it by that name (odcInstallation: 'DP-Check').

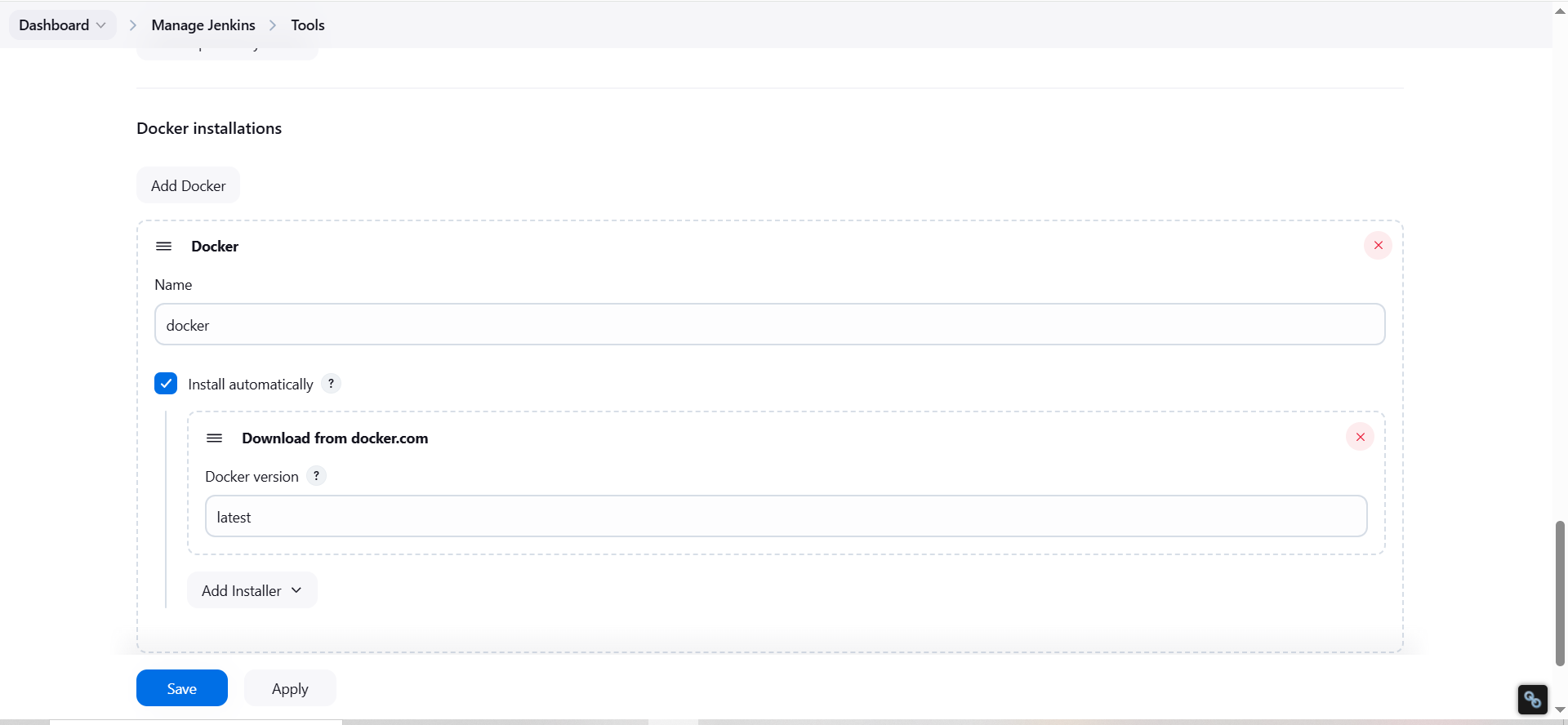

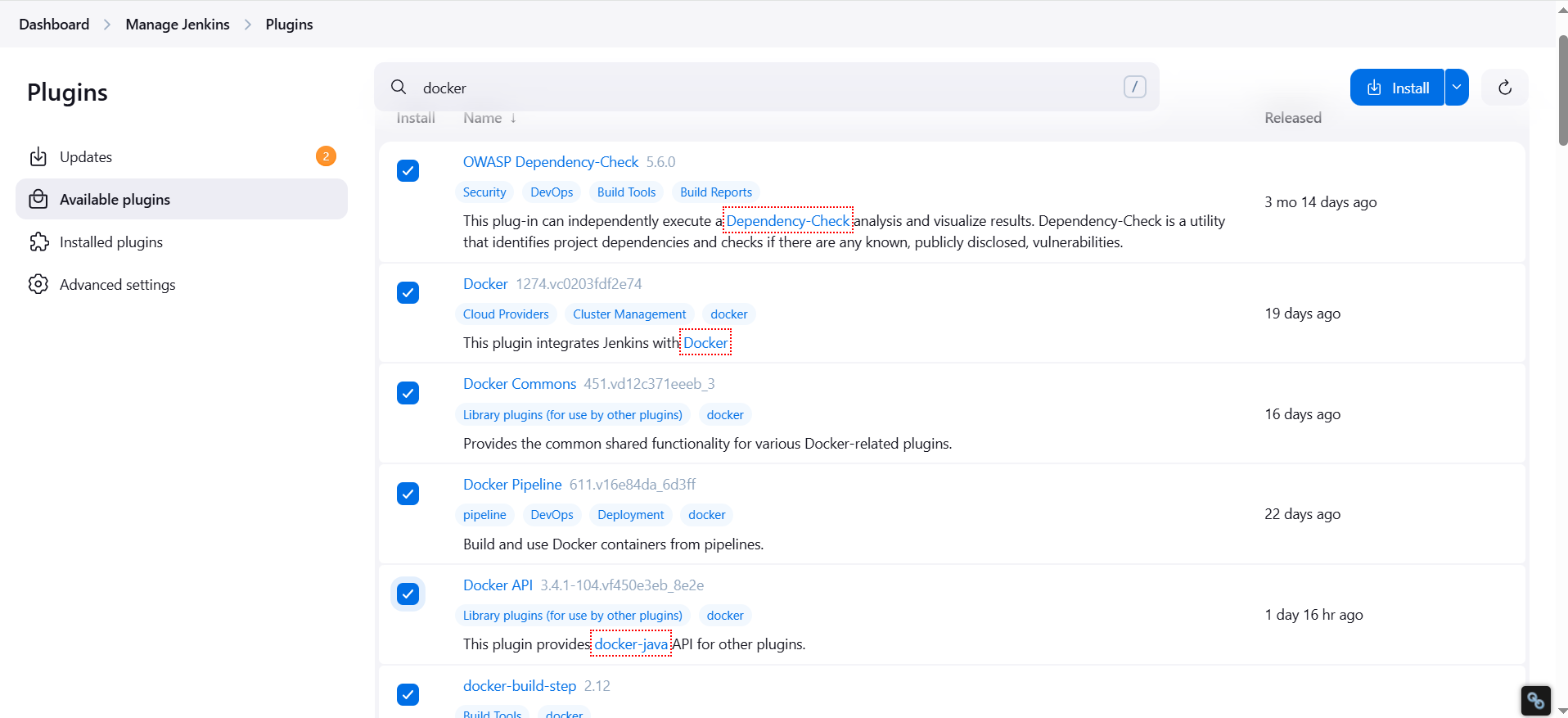

Installing Docker Plugins in Jenkins

Since the pipeline will build Docker images, we need these plugins:

Docker Commons

Docker Pipeline

Docker API

Docker Build Step

Install them by navigating to Manage Jenkins > Plugin Manager > Available Plugins and searching for "Docker".

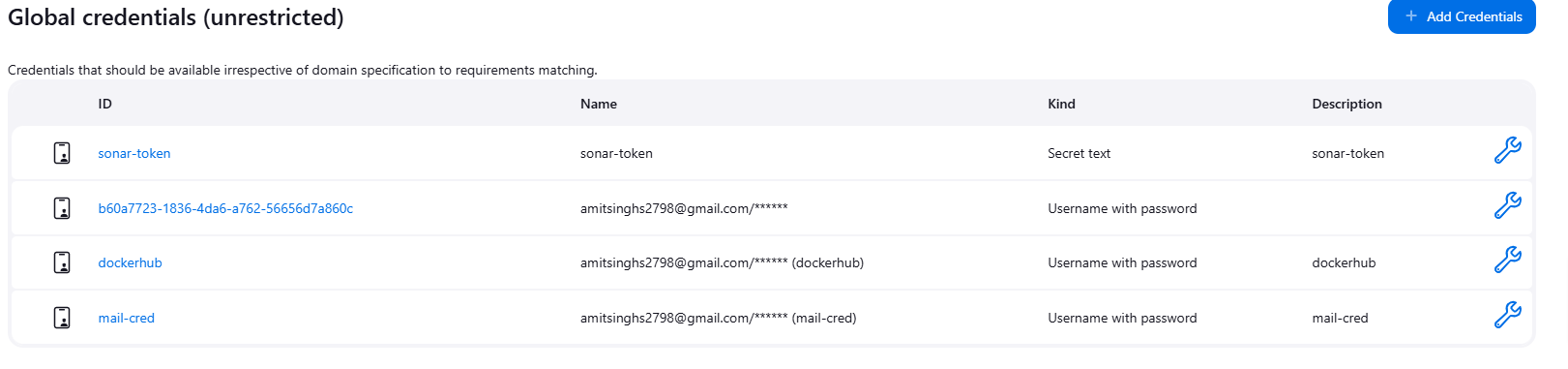

Configuring Docker Hub Credentials in Jenkins

To push Docker images from Jenkins to Docker Hub:

Go to Manage Jenkins > Credentials > System > Global Credentials

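Click Add Credentials, enter your Docker Hub username and password (or access token) with the ID docker, and save.

Also configure Docker under Manage Jenkins > Tools with the name docker. The pipeline references both names (credentialsId: 'docker', toolName: 'docker'). The extended pipeline looks like this: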

pipeline{

agent any

tools{

jdk 'jdk17'

nodejs 'node16'

}

environment {

SCANNER_HOME=tool 'sonar-scanner'

}

stages {

stage('clean workspace'){

steps{

cleanWs()

}

}

stage('Checkout from Git') {

steps {

git branch: 'main', url: 'https://github.com/amitsinghs98/DevSecOps-Project-Netflix.git'

}

}

stage("Sonarqube Analysis "){

steps{

withSonarQubeEnv('sonar-server') {

sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Netflix \

-Dsonar.projectKey=Netflix '''

}

}

}

stage("quality gate") {

steps {

script { timeout(time: 40, unit: 'MINUTES') {

waitForQualityGate abortPipeline: true, credentialsId: 'sonar-token'

}

}

}

}

stage('Install Dependencies') {

steps {

sh "npm install"

}

}

stage('OWASP FS SCAN') {

steps {

dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'

dependencyCheckPublisher pattern: '**/dependency-check-report.xml'

}

}

stage('TRIVY FS SCAN') {

steps {

sh "trivy fs . > trivyfs.txt"

}

}

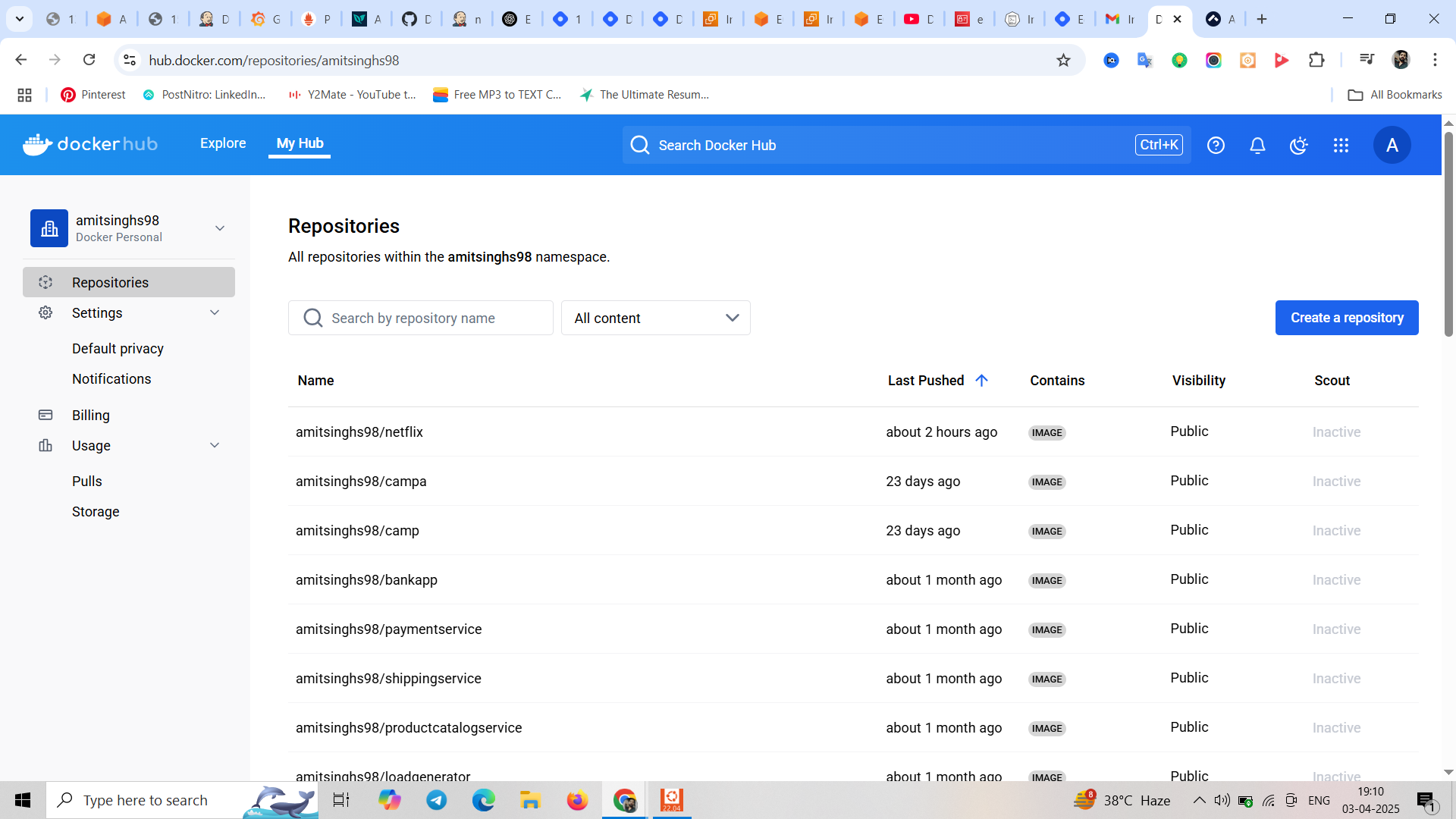

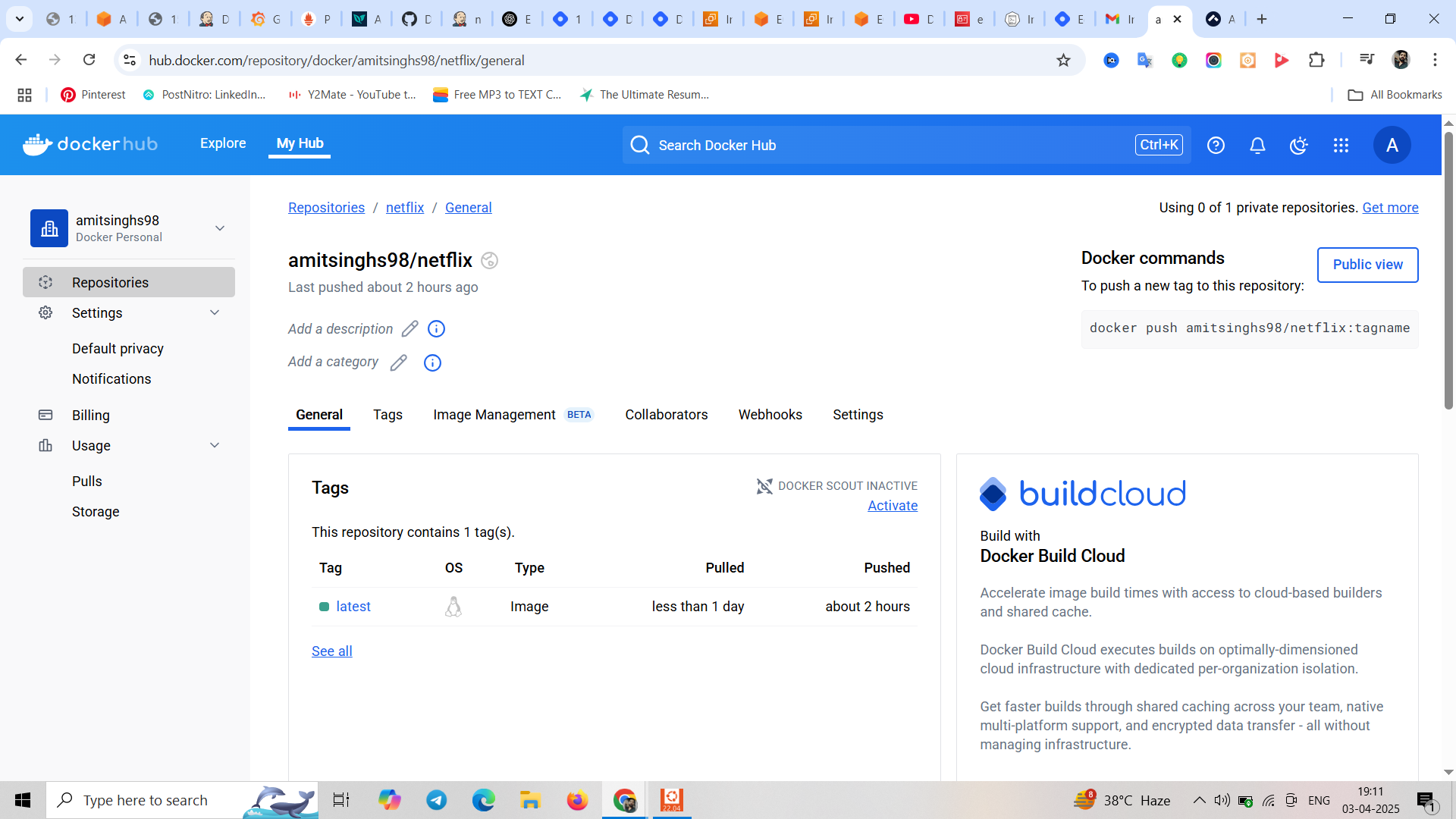

stage("Docker Build & Push"){

steps{

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh "docker build --build-arg TMDB_V3_API_KEY=7e83d9491367017e316fdd3719d01779 -t netflix ."

sh "docker tag netflix amitsinghs98/netflix:latest "

sh "docker push amitsinghs98/netflix:latest "

}

}

}

}

stage("TRIVY"){

steps{

sh "trivy image amitsinghs98/netflix:latest > trivyimage.txt"

}

}

stage('Deploy to container'){

steps{

sh 'docker run -d -p 8081:80 amitsinghs98/netflix:latest'

}

}

}

}

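Note: In the deploy stage (docker run -d -p 8081:80 amitsinghs98/netflix:latest) we don't specify a container name, so Docker assigns a new random name on every run and the command never fails with a name conflict. Host port 8081, however, stays bound to the previous container, so stop or remove that container before rerunning the pipeline.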
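One way to make reruns succeed is to give the container a fixed name and remove the old one before starting the new one. This deliberately differs from the unnamed approach above; the container name `netflix` and the helper name are hypothetical choices. The two docker commands inside the function could replace the deploy stage's `sh` step.

```shell
# Sketch: replace the running Netflix container so host port 8081 is free again.
# Optional arguments: image, container name.
redeploy_netflix() {
  local image="${1:-amitsinghs98/netflix:latest}"
  local name="${2:-netflix}"   # hypothetical fixed container name
  # Remove the previous container if it exists; on the first run there is nothing
  # to remove, so errors are silenced
  docker rm -f "$name" >/dev/null 2>&1 || true
  docker run -d --name "$name" -p 8081:80 "$image"
}
```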

Phase 4: Monitoring

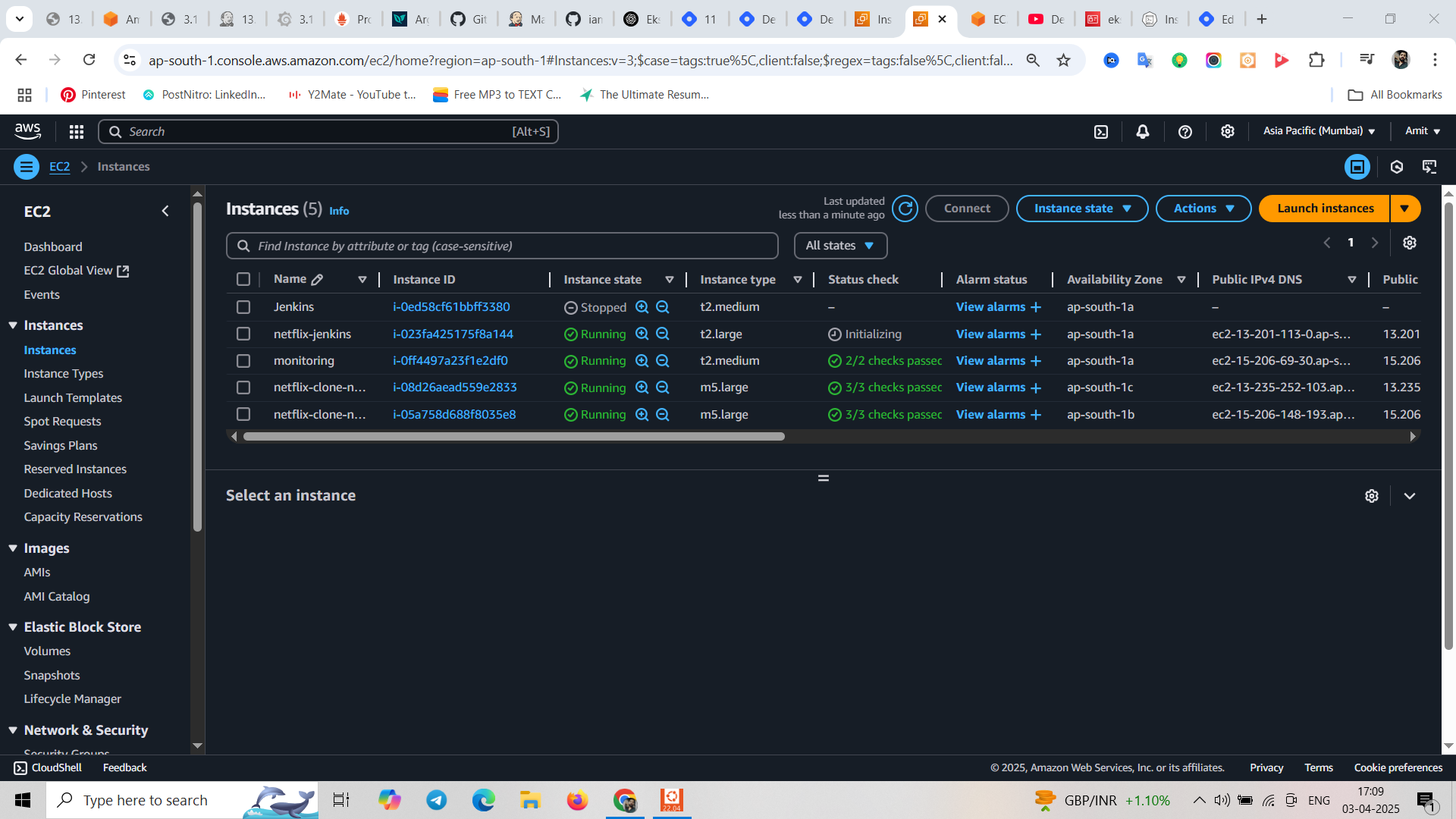

We will create a new t2.medium instance for monitoring

Install Prometheus and Grafana:

Set up Prometheus and Grafana to monitor your application.

Installing Prometheus:

First, create a dedicated Linux user for Prometheus and download Prometheus:

sudo useradd --system --no-create-home --shell /bin/false prometheus

wget https://github.com/prometheus/prometheus/releases/download/v2.47.1/prometheus-2.47.1.linux-amd64.tar.gz

Extract the Prometheus files, move them, and create directories:

tar -xvf prometheus-2.47.1.linux-amd64.tar.gz

cd prometheus-2.47.1.linux-amd64/

sudo mkdir -p /data /etc/prometheus

sudo mv prometheus promtool /usr/local/bin/

sudo mv consoles/ console_libraries/ /etc/prometheus/

sudo mv prometheus.yml /etc/prometheus/prometheus.yml

Set ownership for the directories:

sudo chown -R prometheus:prometheus /etc/prometheus/ /data/

Create a systemd unit configuration file for Prometheus:

sudo nano /etc/systemd/system/prometheus.service

Add the following content to the prometheus.service file:

[Unit]
Description=Prometheus
Wants=network-online.target
After=network-online.target
StartLimitIntervalSec=500
StartLimitBurst=5

[Service]
User=prometheus
Group=prometheus
Type=simple
Restart=on-failure
RestartSec=5s
ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/data \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.listen-address=0.0.0.0:9090 \
  --web.enable-lifecycle

[Install]
WantedBy=multi-user.target

Here's a brief explanation of the key parts of this prometheus.service file:

User and Group specify the Linux user and group under which Prometheus will run.

ExecStart specifies the Prometheus binary path, the location of the configuration file (prometheus.yml), the storage directory, and other settings.

web.listen-address configures Prometheus to listen on all network interfaces on port 9090.

web.enable-lifecycle allows management of Prometheus through API calls.

Enable and start Prometheus:

sudo systemctl enable prometheus

sudo systemctl start prometheus

Verify Prometheus's status:

sudo systemctl status prometheus

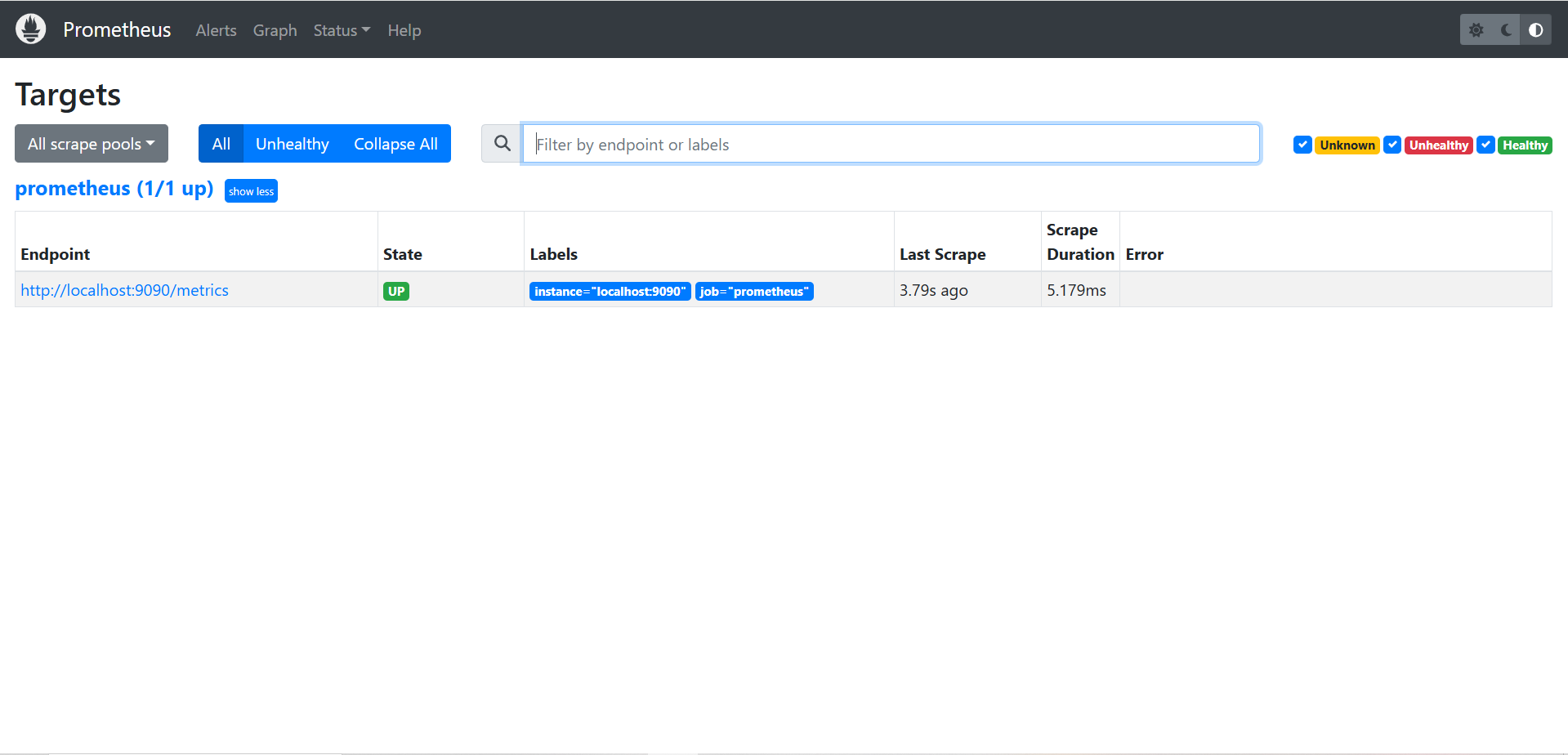

You can access Prometheus in a web browser using your server's IP and port 9090. Make sure port 9090 is open in the EC2 instance's security group.

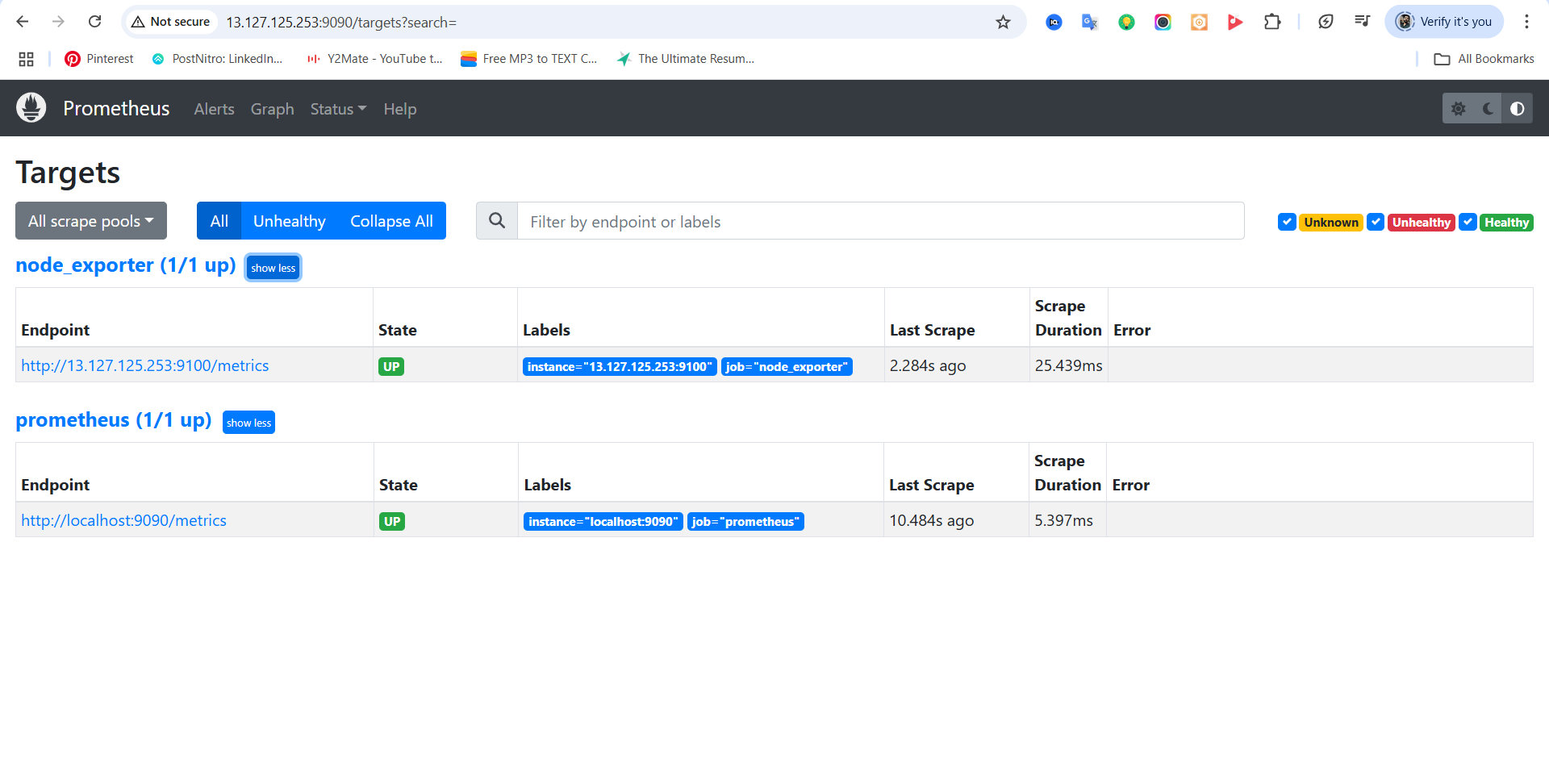

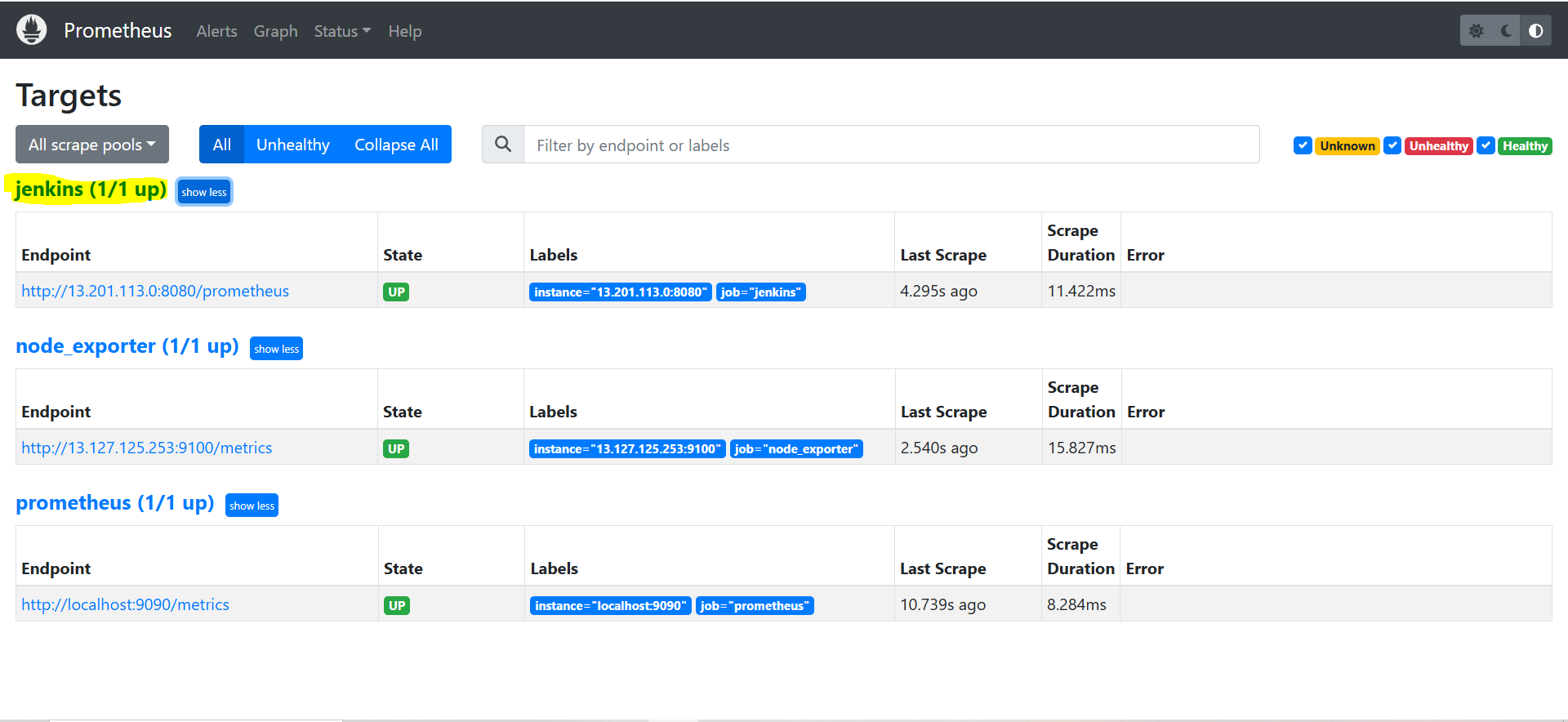

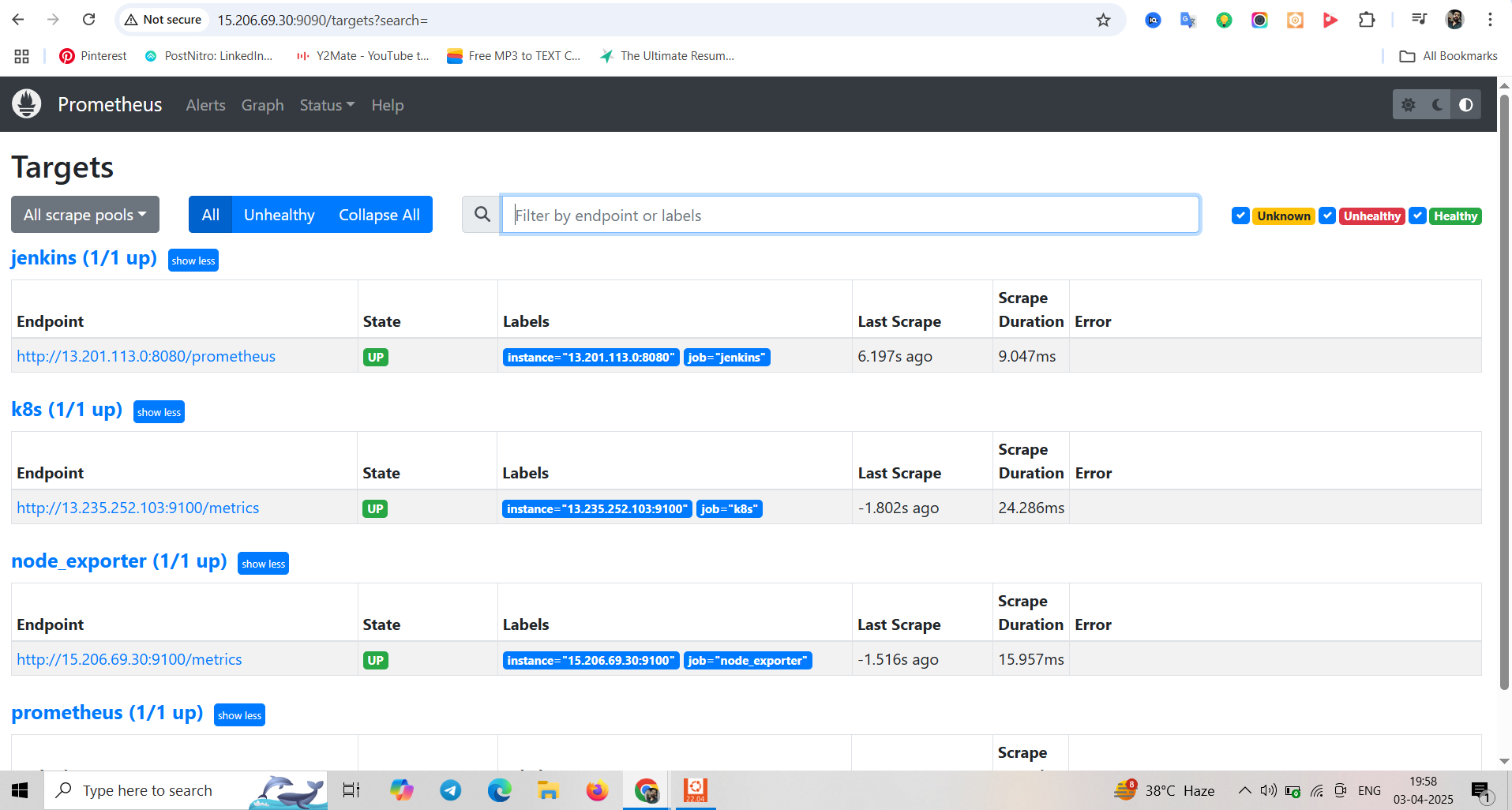

Open http://<your-server-ip>:9090 and go to Status > Targets. Before the targets are useful, you need Node Exporter installed: it exposes system-level metrics of the host (CPU, memory, disk, network), which Prometheus then scrapes.

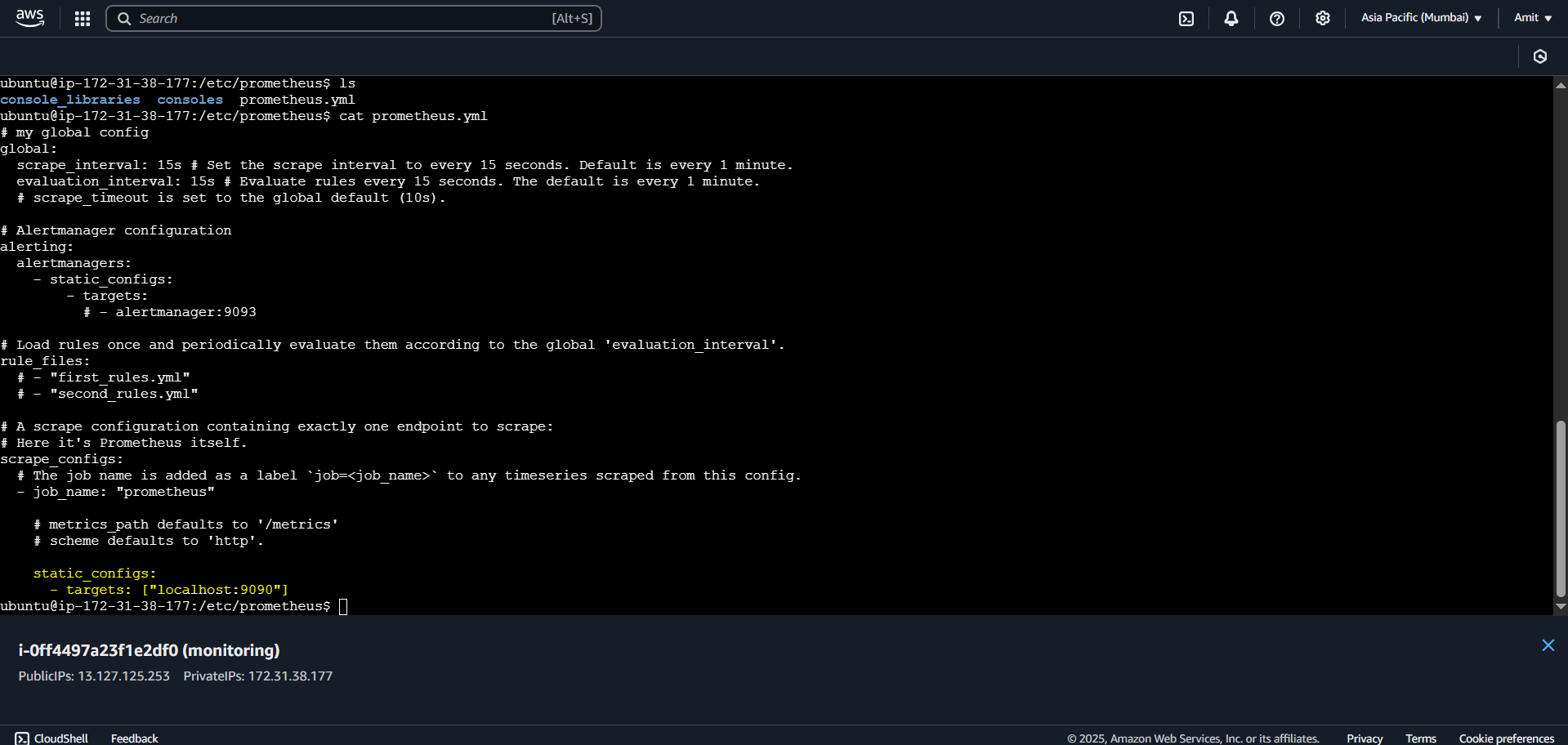

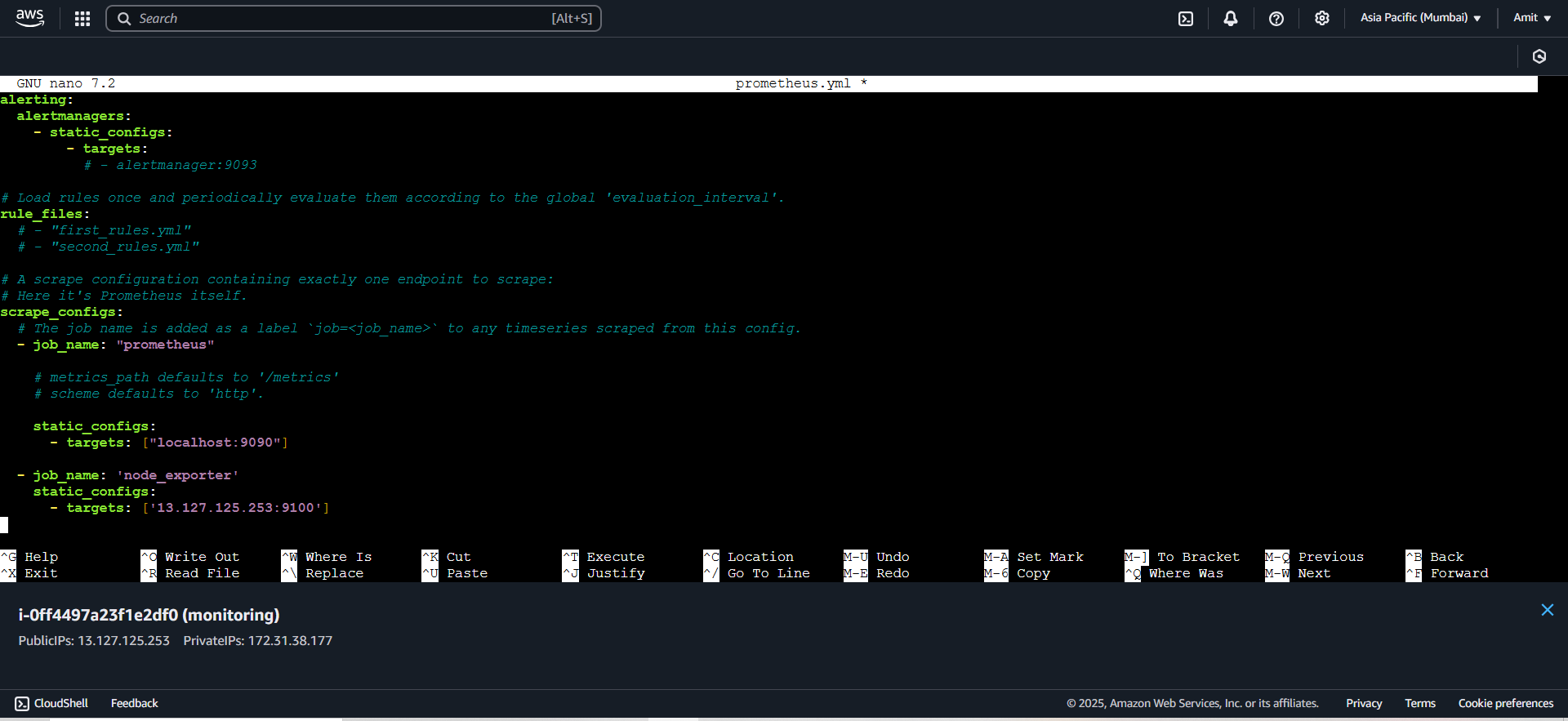

cat /etc/prometheus/prometheus.yml

The file lists the current scrape targets under scrape_configs. Next, we install Node Exporter and add it as a new job.

Installing Node Exporter:

Create a system user for Node Exporter and download Node Exporter:

sudo useradd --system --no-create-home --shell /bin/false node_exporter

wget https://github.com/prometheus/node_exporter/releases/download/v1.6.1/node_exporter-1.6.1.linux-amd64.tar.gz

Extract the Node Exporter files, move the binary, and clean up:

tar -xvf node_exporter-1.6.1.linux-amd64.tar.gz

sudo mv node_exporter-1.6.1.linux-amd64/node_exporter /usr/local/bin/

rm -rf node_exporter*

Create a systemd unit configuration file for Node Exporter:

sudo nano /etc/systemd/system/node_exporter.service

Add the following content to the node_exporter.service file:

[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target
StartLimitIntervalSec=500
StartLimitBurst=5

[Service]
User=node_exporter
Group=node_exporter
Type=simple
Restart=on-failure
RestartSec=5s
ExecStart=/usr/local/bin/node_exporter --collector.logind

[Install]
WantedBy=multi-user.target

Replace --collector.logind with any additional flags as needed.

Enable and start Node Exporter:

sudo systemctl enable node_exporter

sudo systemctl start node_exporter

Verify the Node Exporter's status:

sudo systemctl status node_exporter

Node Exporter listens on port 9100 by default. Once Prometheus is configured to scrape it (below), its metrics are available in Prometheus.

Configure Prometheus Plugin Integration:

Integrate Jenkins with Prometheus to monitor the CI/CD pipeline.

Prometheus Configuration:

To configure Prometheus to scrape metrics from Node Exporter (Jenkins is added later), modify the prometheus.yml file. Here is an example prometheus.yml configuration for your setup:

global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'node_exporter'
    static_configs:
      - targets: ['localhost:9100']

Check the validity of the configuration file:

promtool check config /etc/prometheus/prometheus.yml

Reload the Prometheus configuration without restarting:

curl -X POST http://localhost:9090/-/reload
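Because a broken prometheus.yml would make the reload fail, it can help to combine the two commands above so the reload only happens after promtool accepts the file. A minimal sketch; the helper name is hypothetical, and the reload endpoint only works because the service was started with `--web.enable-lifecycle`.

```shell
# Sketch: validate prometheus.yml with promtool, then hot-reload Prometheus.
# Optional arguments: config path, Prometheus base URL.
reload_prometheus() {
  local config="${1:-/etc/prometheus/prometheus.yml}"
  local url="${2:-http://localhost:9090}"
  if ! promtool check config "$config"; then
    echo "Invalid config, not reloading: $config" >&2
    return 1
  fi
  # -f makes curl return non-zero on an HTTP error status
  curl -sf -X POST "$url/-/reload"
}
```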

You can access Prometheus targets at: http://<your-prometheus-ip>:9090/targets

You can see that Node Exporter is up and running.

Grafana

Install Grafana on Ubuntu 22.04 and Set it up to Work with Prometheus

Step 1: Install Dependencies:

First, ensure that all necessary dependencies are installed:

sudo apt-get update

sudo apt-get install -y apt-transport-https software-properties-common

Step 2: Add the GPG Key:

Add the GPG key for Grafana:

wget -q -O - https://packages.grafana.com/gpg.key | sudo apt-key add -

Step 3: Add Grafana Repository:

Add the repository for Grafana stable releases:

echo "deb https://packages.grafana.com/oss/deb stable main" | sudo tee -a /etc/apt/sources.list.d/grafana.list

Step 4: Update and Install Grafana:

Update the package list and install Grafana:

sudo apt-get update

sudo apt-get -y install grafana

Step 5: Enable and Start Grafana Service:

To automatically start Grafana after a reboot, enable the service:

sudo systemctl enable grafana-server

Then, start Grafana:

sudo systemctl start grafana-server

Step 6: Check Grafana Status:

Verify the status of the Grafana service to ensure it's running correctly:

sudo systemctl status grafana-server

Step 7: Access Grafana Web Interface:

Open a web browser and navigate to Grafana using your server's IP address. The default port for Grafana is 3000. For example:

http://<your-server-ip>:3000

You'll be prompted to log in to Grafana. The default username is "admin," and the default password is also "admin."

Step 8: Change the Default Password:

When you log in for the first time, Grafana will prompt you to change the default password for security reasons. Follow the prompts to set a new password.

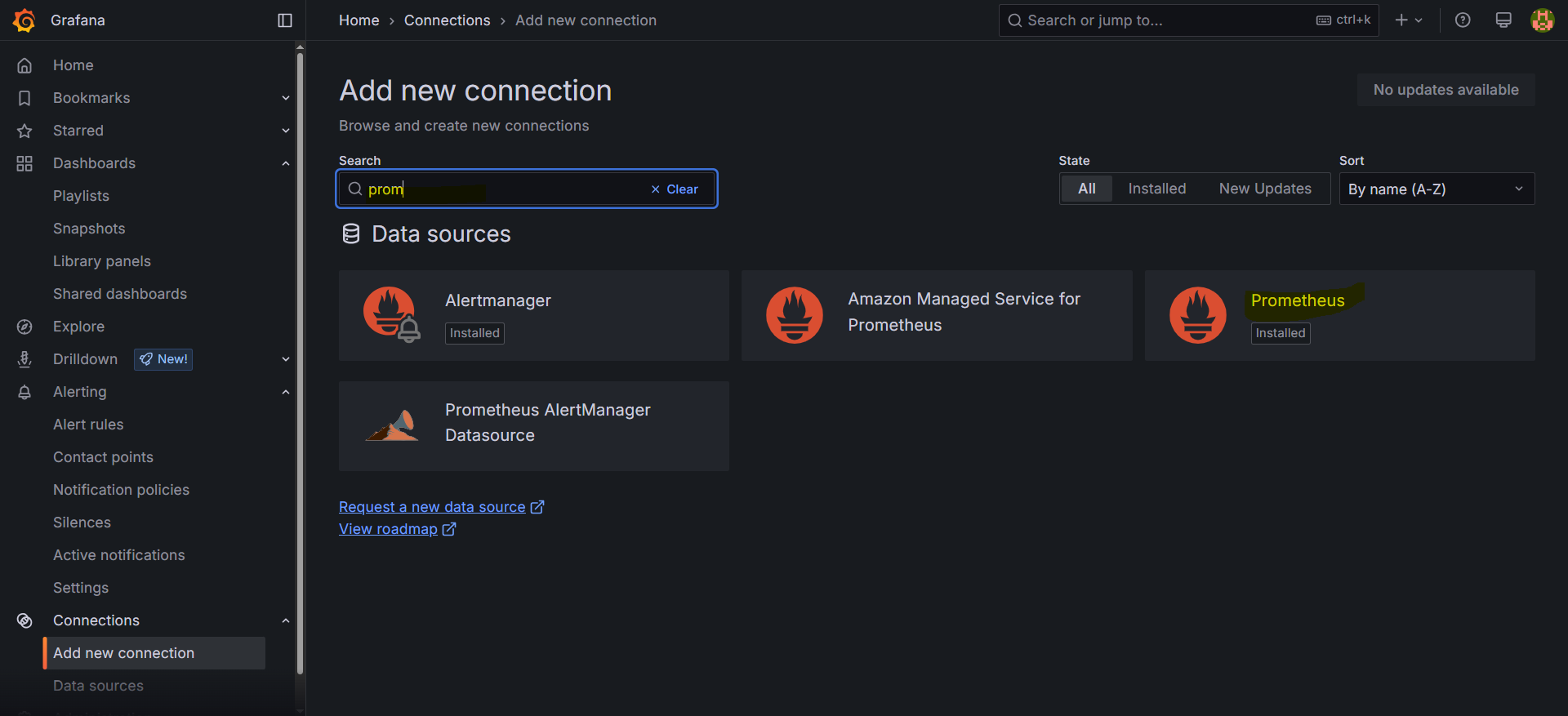

Step 9: Add Prometheus Data Source:

To visualize metrics, you need to add a data source. Follow these steps:

Click on the gear icon (⚙️) in the left sidebar to open the "Configuration" menu.

Select "Data Sources."

Click on the "Add data source" button.

Choose "Prometheus" as the data source type.

In the "HTTP" section:

Set the "URL" to http://localhost:9090 (assuming Prometheus is running on the same server).

Click the "Save & Test" button to ensure the data source is working.

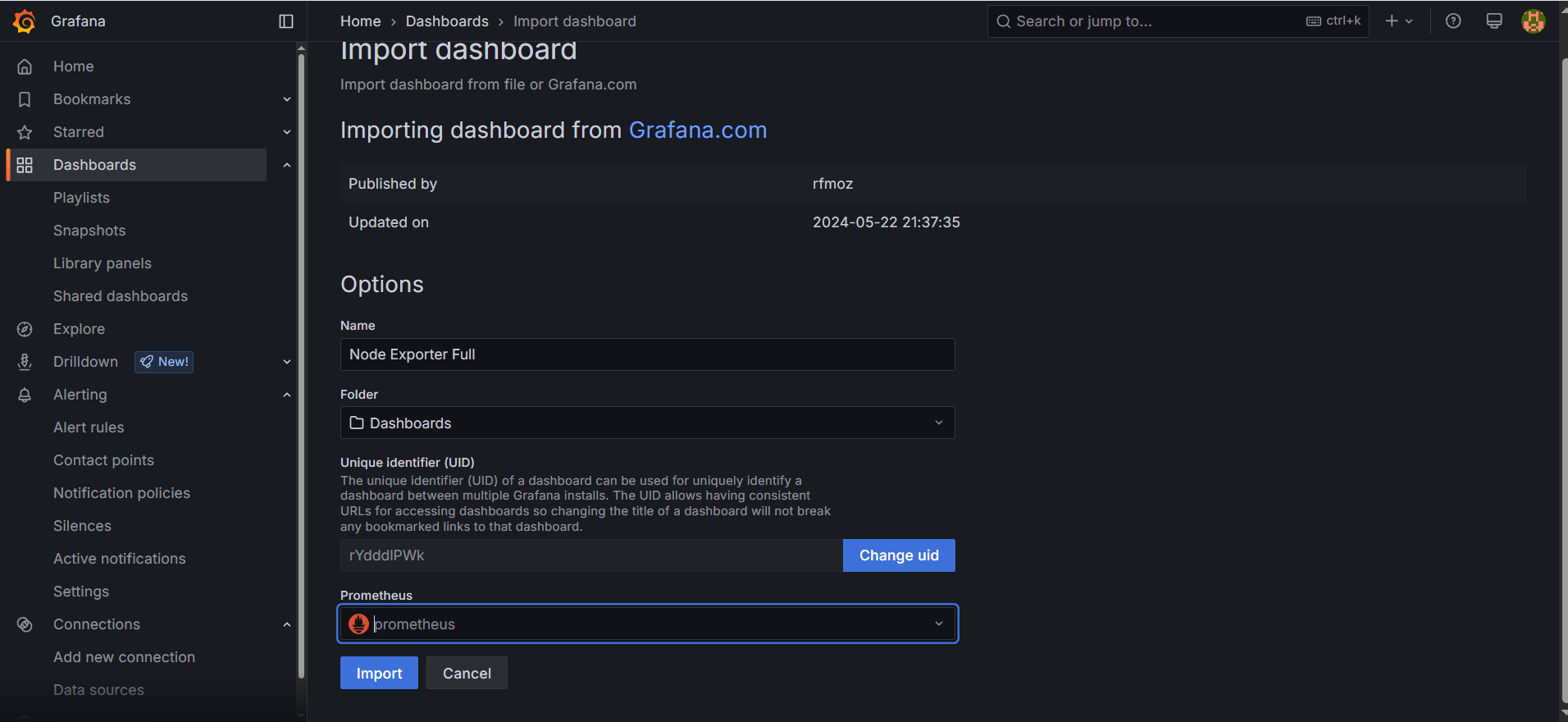
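The data source can also be created through Grafana's HTTP API instead of the UI. A sketch, assuming the admin password you set at first login is exported as `GRAFANA_PASSWORD` (a hypothetical variable name) and that Prometheus runs on the same server; the helper name is also hypothetical.

```shell
# Sketch: register Prometheus as Grafana's default data source via the HTTP API.
# Optional arguments: Grafana URL, Prometheus URL.
add_prometheus_datasource() {
  local grafana="${1:-http://localhost:3000}"
  local prom="${2:-http://localhost:9090}"
  if [ -z "${GRAFANA_PASSWORD:-}" ]; then
    echo "Set GRAFANA_PASSWORD to the Grafana admin password" >&2
    return 1
  fi
  # "access":"proxy" means the Grafana server (not the browser) queries Prometheus
  curl -sf -u "admin:$GRAFANA_PASSWORD" \
    -H 'Content-Type: application/json' \
    -X POST "$grafana/api/datasources" \
    -d "{\"name\":\"Prometheus\",\"type\":\"prometheus\",\"url\":\"$prom\",\"access\":\"proxy\",\"isDefault\":true}"
}
```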

Step 10: Import a Dashboard:

To make it easier to view metrics, you can import a pre-configured dashboard. Follow these steps:

Click on the "+" (plus) icon in the left sidebar to open the "Create" menu.

Select "Dashboard."

Click on the "Import" dashboard option.

Enter the ID of the dashboard you want to import (e.g., 1860, the "Node Exporter Full" dashboard).

Click the "Load" button.

Select the data source you added (Prometheus) from the dropdown.

Click on the "Import" button.

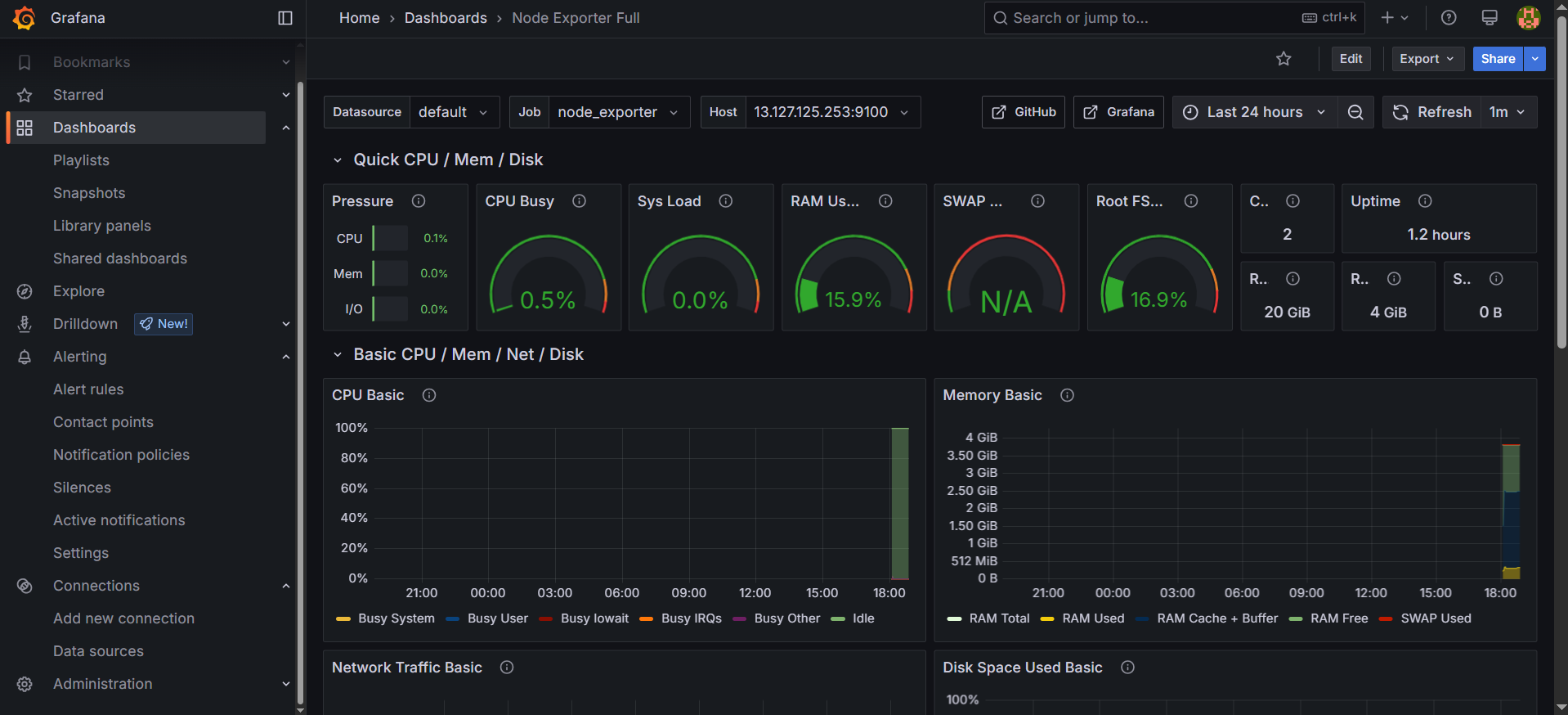

You should now have a Grafana dashboard set up to visualize metrics from Prometheus.

Grafana is a powerful tool for creating visualizations and dashboards, and you can further customize it to suit your specific monitoring needs.

That's it! You've successfully installed and set up Grafana to work with Prometheus for monitoring and visualization.

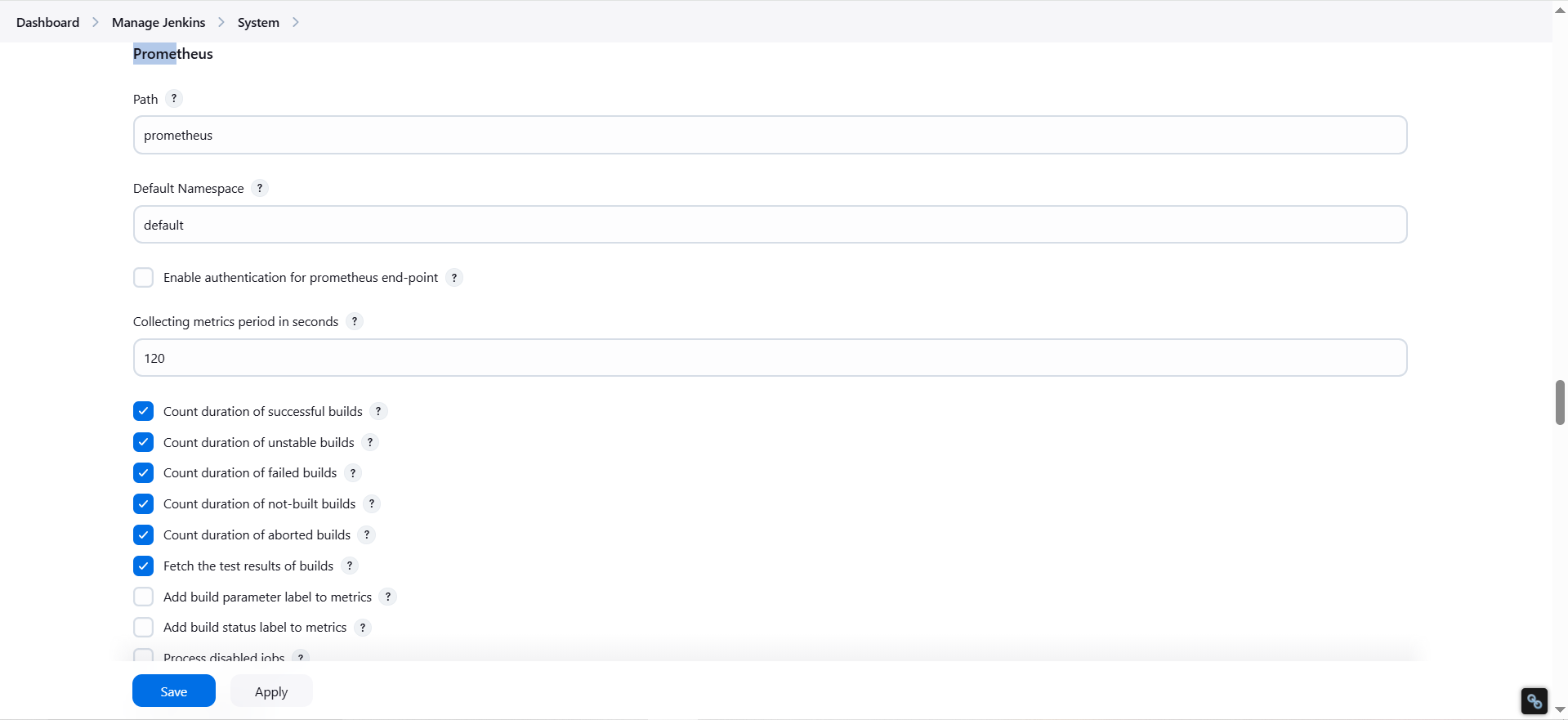

Configure Prometheus Plugin Integration:

Integrate Jenkins with Prometheus to monitor the CI/CD pipeline.

Install the "Prometheus metrics" plugin in Jenkins, then review its settings under Manage Jenkins > System. By default it exposes Jenkins metrics at the /prometheus path.

Then add Jenkins as a scrape job in prometheus.yml, just like Node Exporter:

cd /etc/prometheus

sudo nano prometheus.yml

Add the following job under scrape_configs:

  - job_name: 'jenkins'
    metrics_path: '/prometheus'
    static_configs:
      - targets: ['<your-jenkins-ip>:<your-jenkins-port>']

Make sure to replace <your-jenkins-ip> and <your-jenkins-port> with the appropriate values for your Jenkins setup.

Check the validity of the configuration file:

promtool check config /etc/prometheus/prometheus.yml

Reload the Prometheus configuration without restarting:

curl -X POST http://localhost:9090/-/reload

You can access Prometheus targets at:

http://<your-prometheus-ip>:9090/targets
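For reference, the complete prometheus.yml after adding both jobs could look like the file generated below. This is a sketch: it also keeps the default `prometheus` self-scrape job, the helper name is hypothetical, and the Jenkins address is passed in as an argument.

```shell
# Sketch: write a prometheus.yml that scrapes Prometheus itself, Node Exporter, and Jenkins.
# Arguments: output file, Jenkins target such as <your-jenkins-ip>:8080.
write_prometheus_config() {
  local out="$1"
  local jenkins_target="$2"
  cat > "$out" <<EOF
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'node_exporter'
    static_configs:
      - targets: ['localhost:9100']

  - job_name: 'jenkins'
    metrics_path: '/prometheus'
    static_configs:
      - targets: ['$jenkins_target']
EOF
}
```

Usage: `write_prometheus_config /tmp/prometheus.yml <your-jenkins-ip>:8080`, then `sudo cp /tmp/prometheus.yml /etc/prometheus/prometheus.yml` and validate and reload it with promtool and the reload endpoint as before.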

We can see Jenkins is up and running. Now add a Grafana dashboard for it: search for "jenkins grafana dashboard", copy the dashboard ID, and load it through the Import dashboard option.

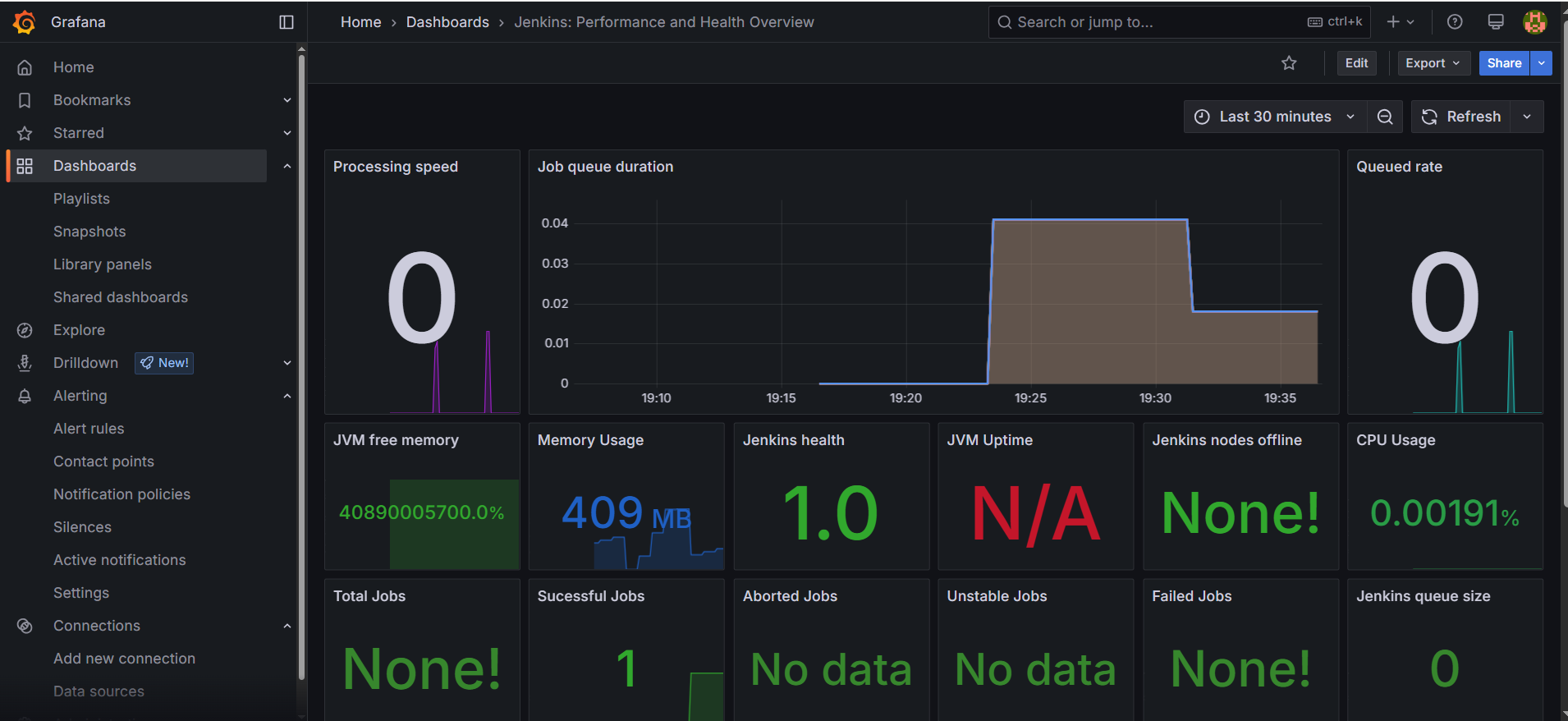

Run the pipeline and check that its data shows up in the dashboard.

Phase 5: Notification

Set Up Gmail Email Notification

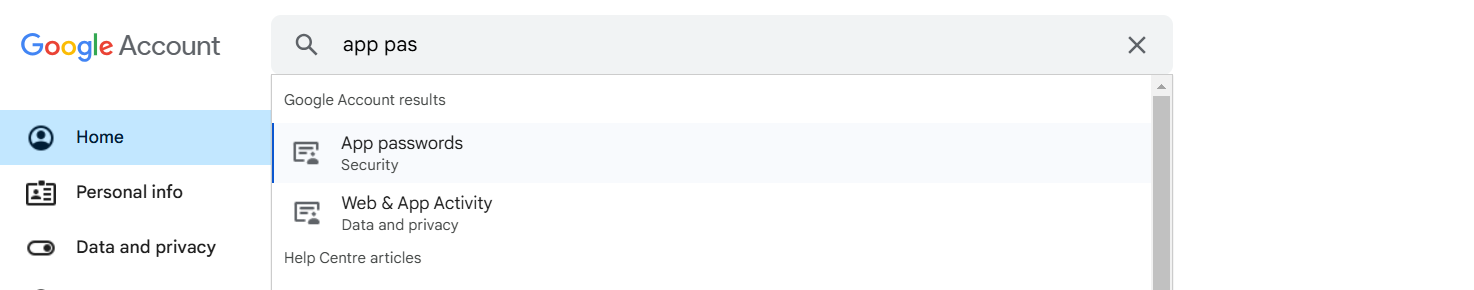

- Login to Gmail

For Gmail notifications, you’ll need to set up an app password for your Gmail account.

Log in to Gmail and create an app password by navigating to your Google Account > Security > App Passwords.

Use this app password when setting up email notifications in Jenkins.

Add it to Jenkins as a global credential (Username with password: your Gmail address and the app password).

- Now go to Jenkins

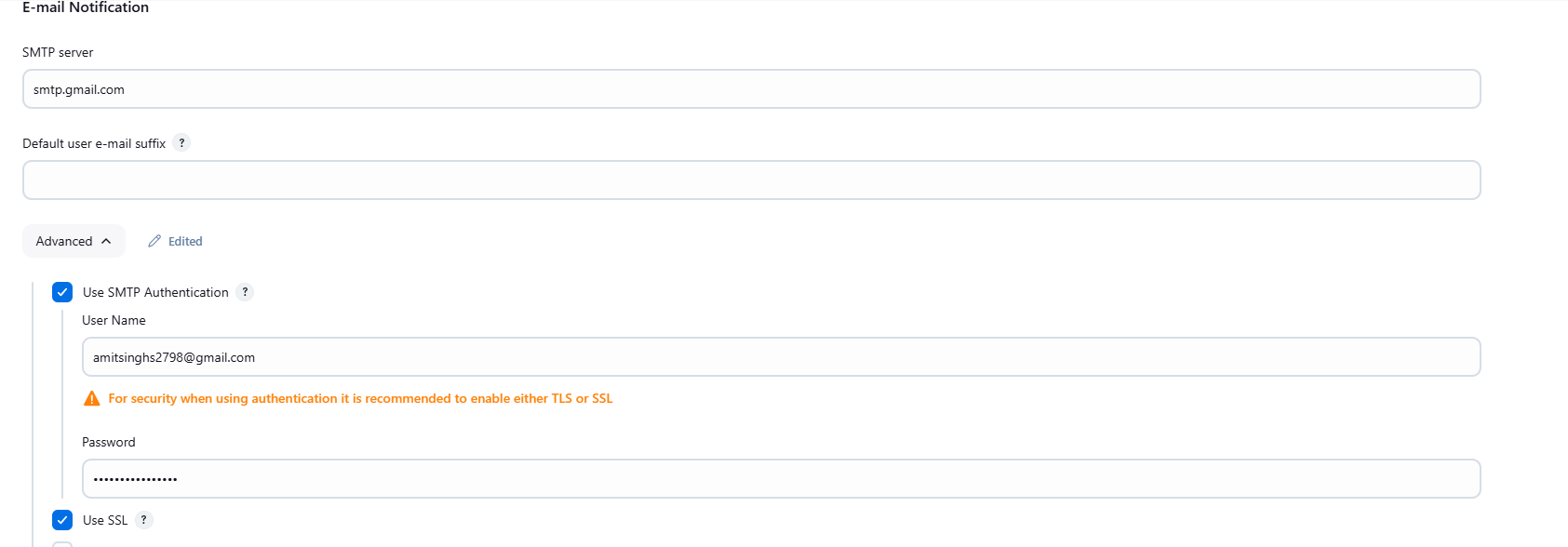

In Manage Jenkins > Configure System, scroll to Extended Email Notification.

Set the SMTP server to smtp.gmail.com and the port to 465 (for SSL).

Credentials: Use the Gmail credentials you created earlier.

Enable "Use SSL" for secure communication (OAuth 2.0 is not needed when authenticating with an app password).

Phase 6: Kubernetes

Setting Up AWS EKS Cluster

- Create an EKS cluster using the eksctl CLI.

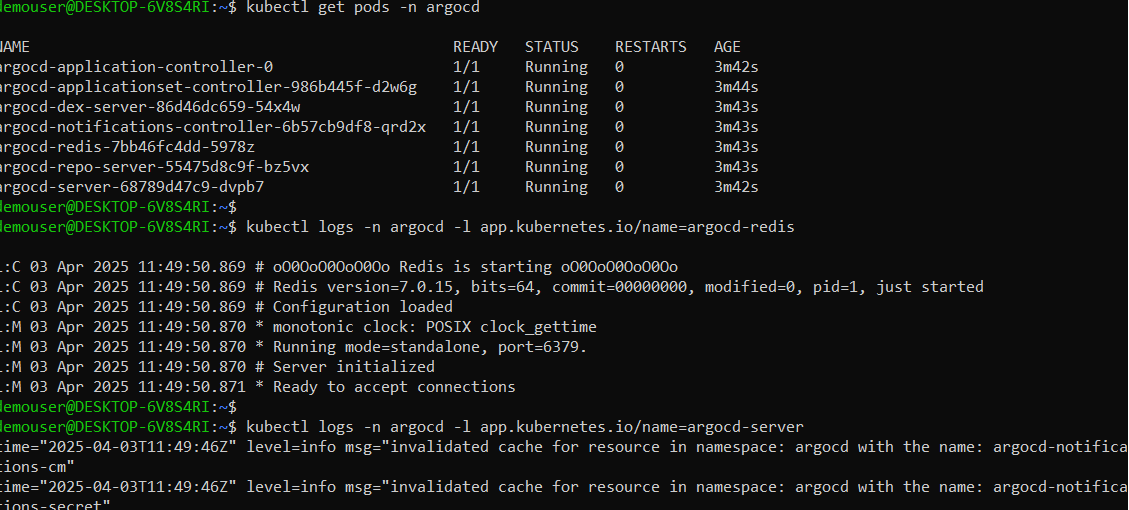
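The exact eksctl command isn't shown above, so here is a minimal sketch. The cluster name, node group name, instance type, and node count are hypothetical choices; ap-south-1 is assumed because the load balancer hostname later in this guide is in that region. eksctl writes the kubeconfig for you, so kubectl works once it finishes.

```shell
# Sketch: create an EKS cluster with one managed node group.
# Optional arguments: cluster name, region.
create_netflix_cluster() {
  local name="${1:-netflix-cluster}"   # hypothetical cluster name
  local region="${2:-ap-south-1}"
  eksctl create cluster \
    --name "$name" \
    --region "$region" \
    --nodegroup-name netflix-nodes \
    --node-type t3.medium \
    --nodes 2 \
    --managed
}

# From another machine, fetch credentials with:
#   aws eks update-kubeconfig --name netflix-cluster --region ap-south-1
```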

Install ArgoCD in your EKS Cluster in a Namespace

kubectl create ns argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

kubectl get svc -n argocd # get the ArgoCD URL

kubectl port-forward svc/argocd-server -n argocd 8080:443 # optional: UI at https://localhost:8080 (runs in the foreground)

kubectl get deployments -n argocd

kubectl logs -l app.kubernetes.io/name=argocd-server -n argocd

Create Kubernetes Cluster with Nodegroups

In this phase, you'll set up a Kubernetes cluster with node groups. This will provide a scalable environment to deploy and manage your applications.

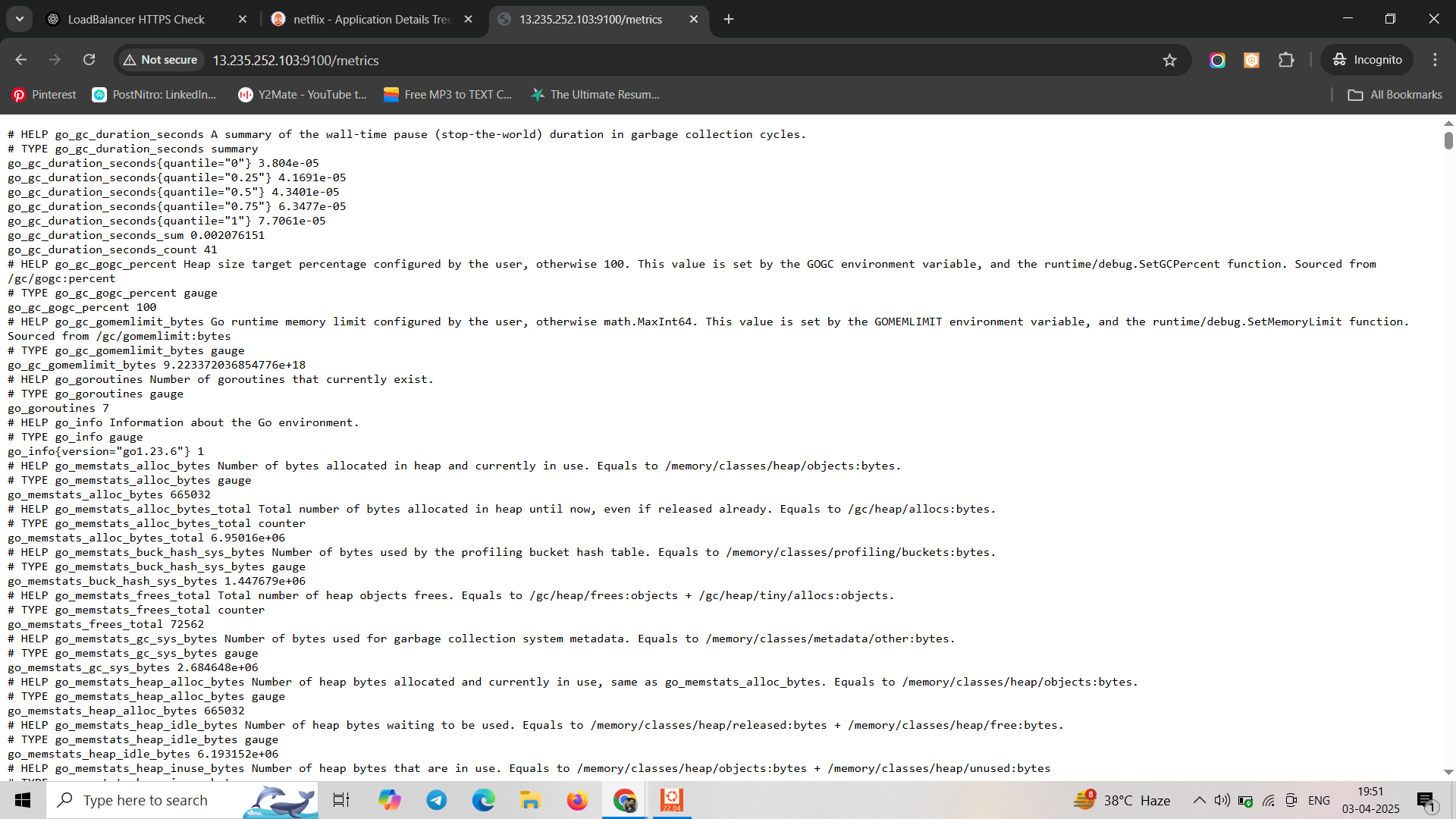

Monitor Kubernetes with Prometheus

Prometheus is a powerful monitoring and alerting toolkit, and you'll use it to monitor your Kubernetes cluster. Additionally, you'll install the node exporter using Helm to collect metrics from your cluster nodes.

Install Node Exporter using Helm

To begin monitoring your Kubernetes cluster, you'll install the Prometheus Node Exporter. This component allows you to collect system-level metrics from your cluster nodes. Here are the steps to install the Node Exporter using Helm:

Add the Prometheus Community Helm repository:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

Create a Kubernetes namespace for the Node Exporter:

kubectl create namespace prometheus-node-exporter

Install the Node Exporter using Helm:

helm install prometheus-node-exporter prometheus-community/prometheus-node-exporter --namespace prometheus-node-exporter

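Next, we will add a job to prometheus.yml that scrapes each node at nodeip:9100/metrics (9100 is the Node Exporter chart's default port); this is done at the end of this phase. First, check that the Node Exporter pods are running: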

kubectl get ns

kubectl get pods -n prometheus-node-exporter

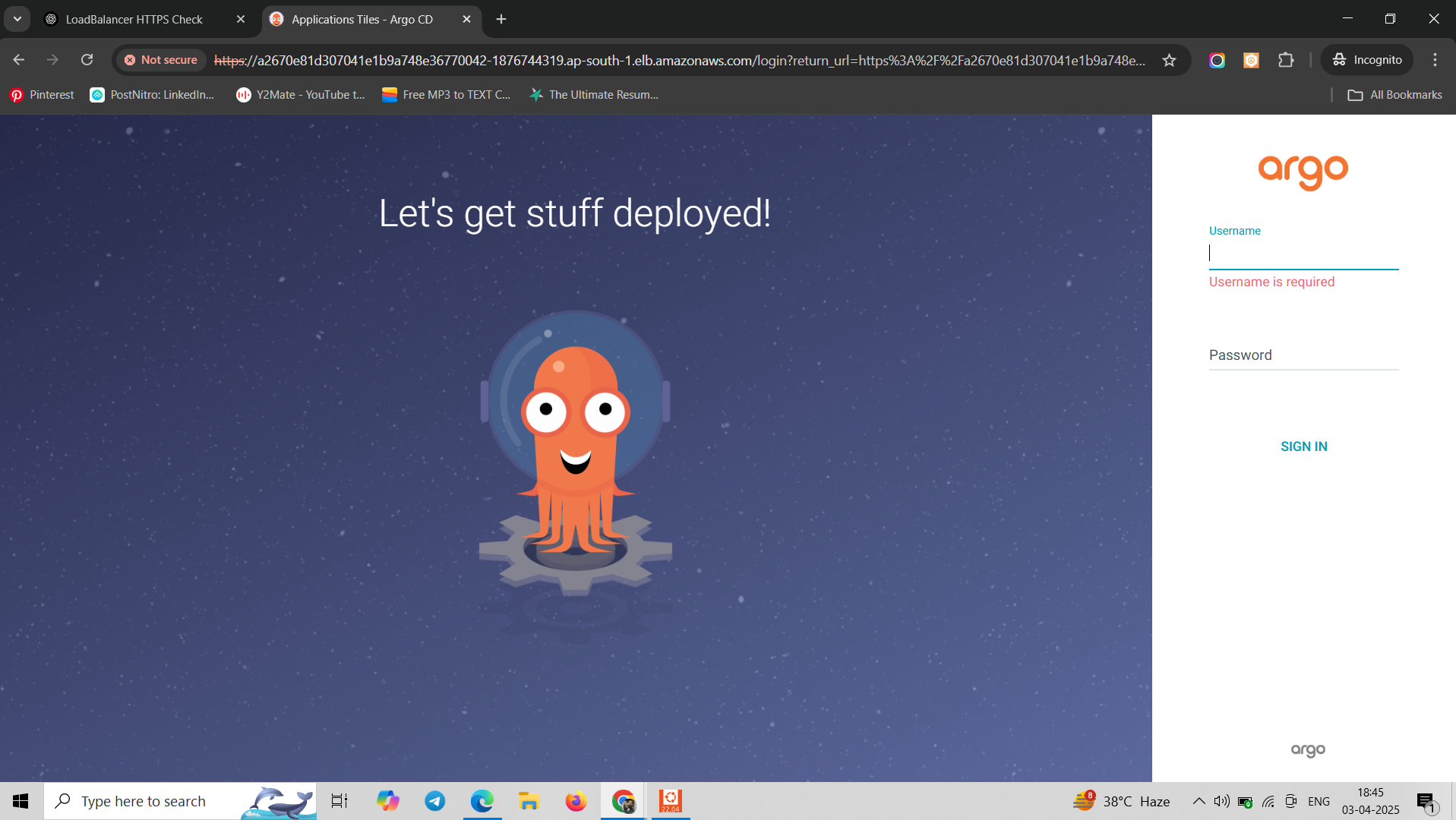

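Get the ArgoCD server's load balancer hostname (this requires the argocd-server service to be of type LoadBalancer):

export ARGOCD_SERVER=$(kubectl get svc argocd-server -n argocd -o json | jq --raw-output '.status.loadBalancer.ingress[0].hostname')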

kubectl get svc argocd-server -n argocd -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'

kubectl get svc argocd-server -n argocd

echo $ARGOCD_SERVER

curl -Ik https://$ARGOCD_SERVER

Node exporter installed using helm

To get the initial ArgoCD admin password (the username is admin):

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d
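By default the argocd-server service is of type ClusterIP, so the load balancer hostname queried above only exists after the service is switched to LoadBalancer, as described in the ArgoCD getting-started guide. The sketch below combines that patch with the URL and password lookups; the helper name and the wait loop (30 attempts, 10 seconds apart) are assumptions.

```shell
# Sketch: expose ArgoCD through an AWS load balancer and print its login details.
argocd_login_info() {
  # Switch the argocd-server service to type LoadBalancer
  kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}' >/dev/null || return 1
  local host="" i=0
  # AWS can take a few minutes to assign the ELB hostname
  while [ -z "$host" ] && [ "$i" -lt 30 ]; do
    host=$(kubectl get svc argocd-server -n argocd \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
    [ -n "$host" ] || sleep 10
    i=$((i + 1))
  done
  [ -n "$host" ] || { echo "No load balancer hostname yet" >&2; return 1; }
  echo "URL:      https://$host"
  echo "Username: admin"
  # The initial password is stored base64-encoded in this secret
  printf 'Password: %s\n' "$(kubectl -n argocd get secret argocd-initial-admin-secret \
    -o jsonpath='{.data.password}' | base64 -d)"
}
```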

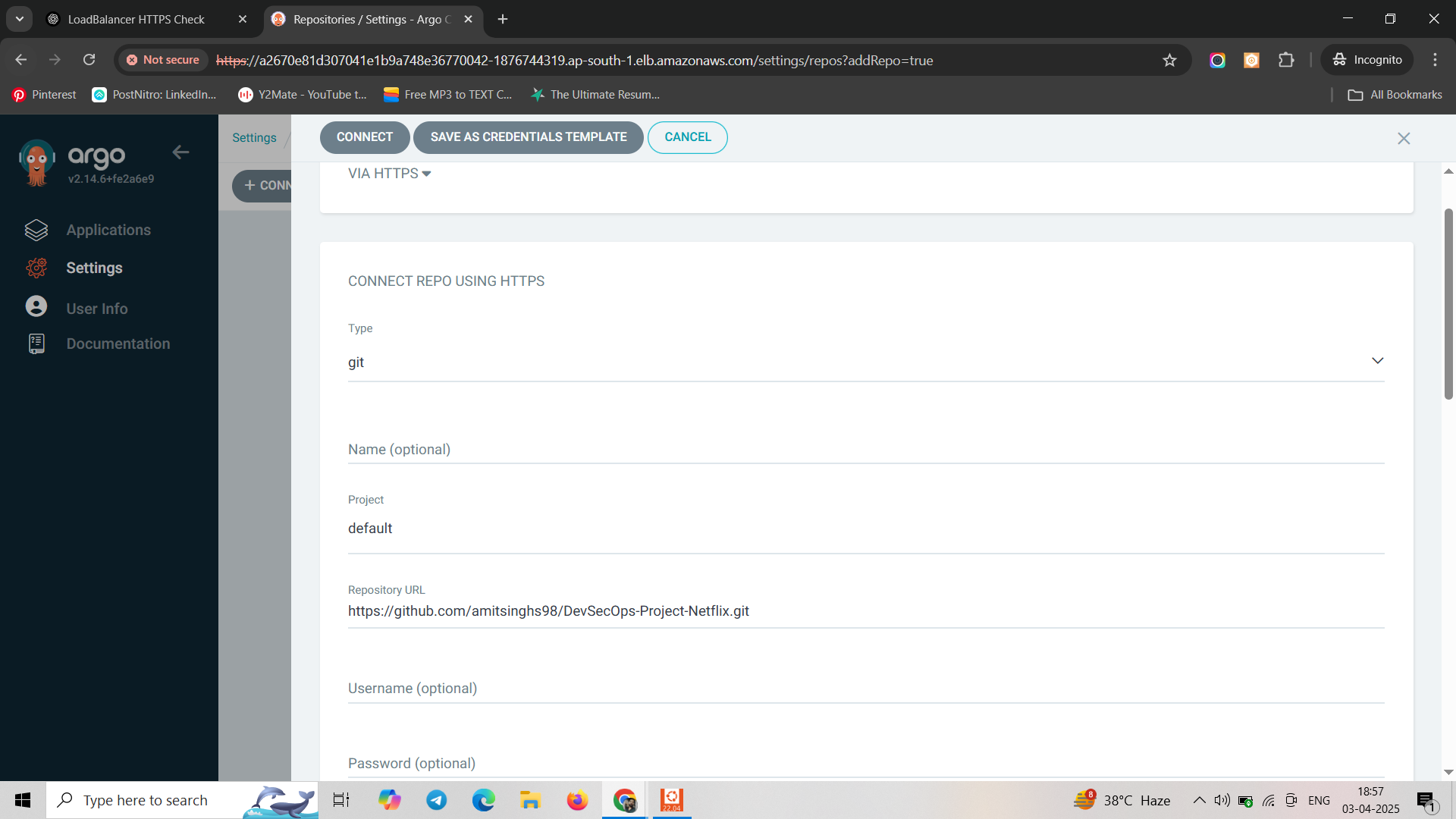

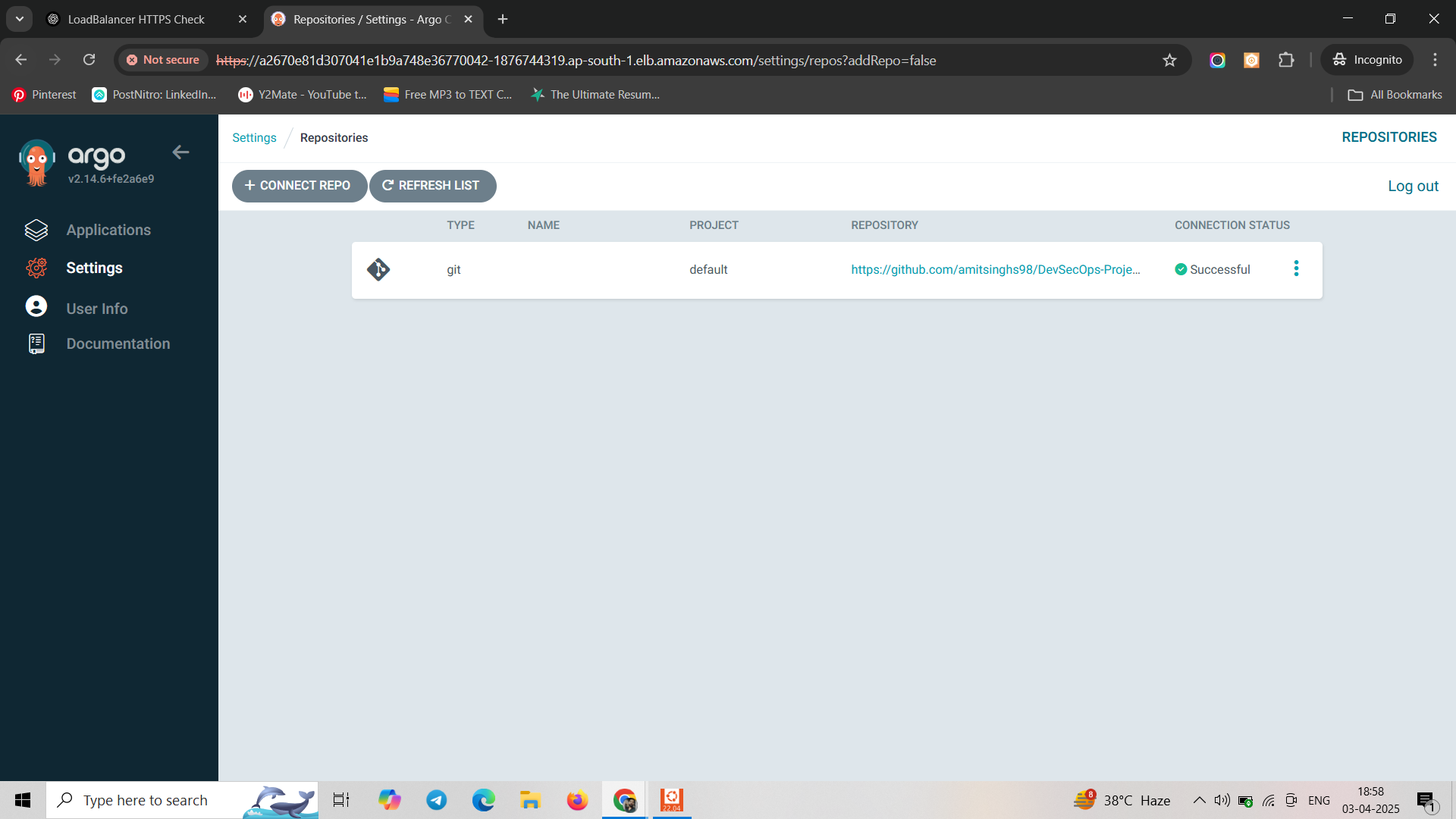

Now connect the GitHub repository to ArgoCD.

Log in to ArgoCD and go to Settings > Repositories > Connect Repo.

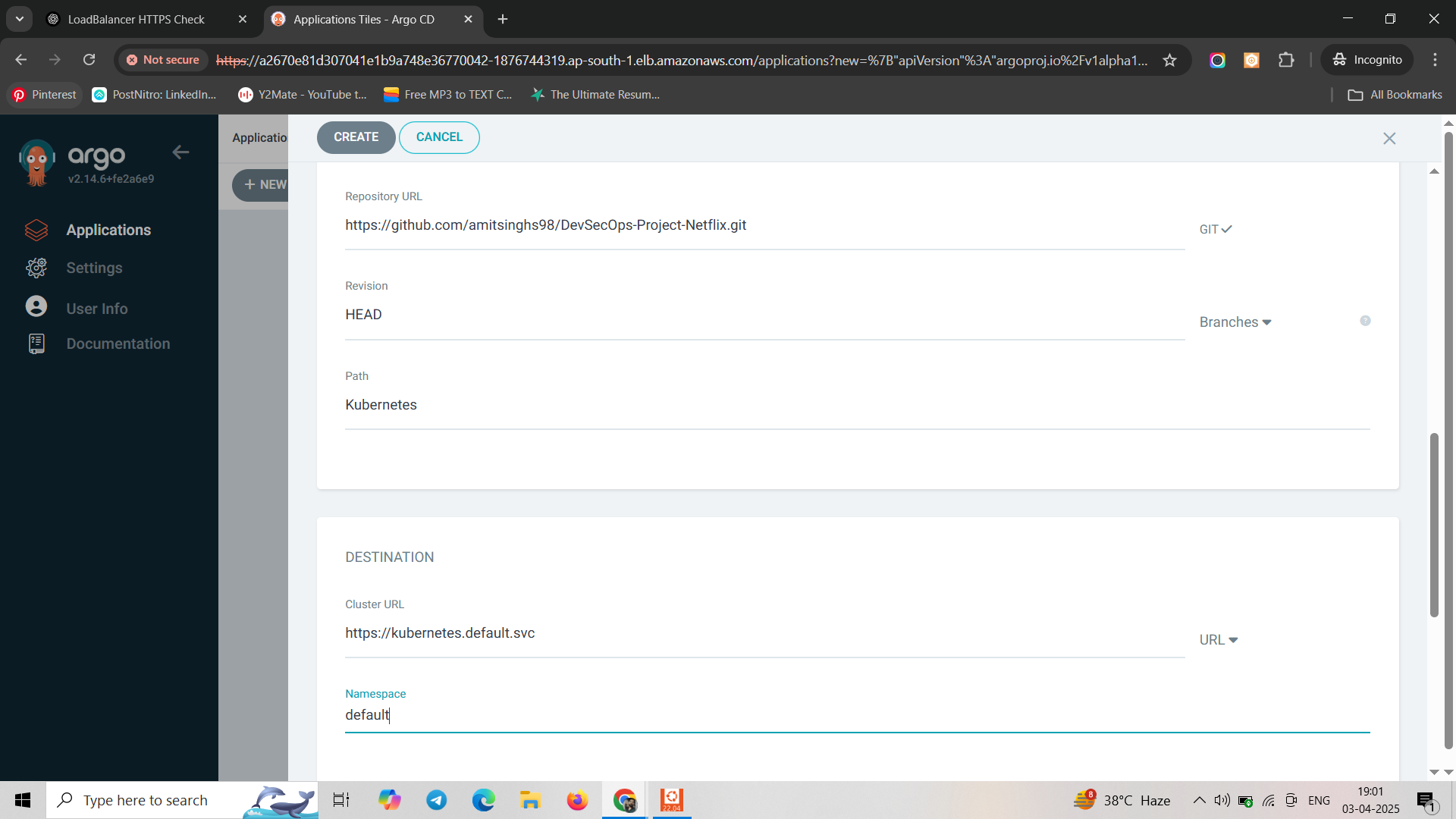

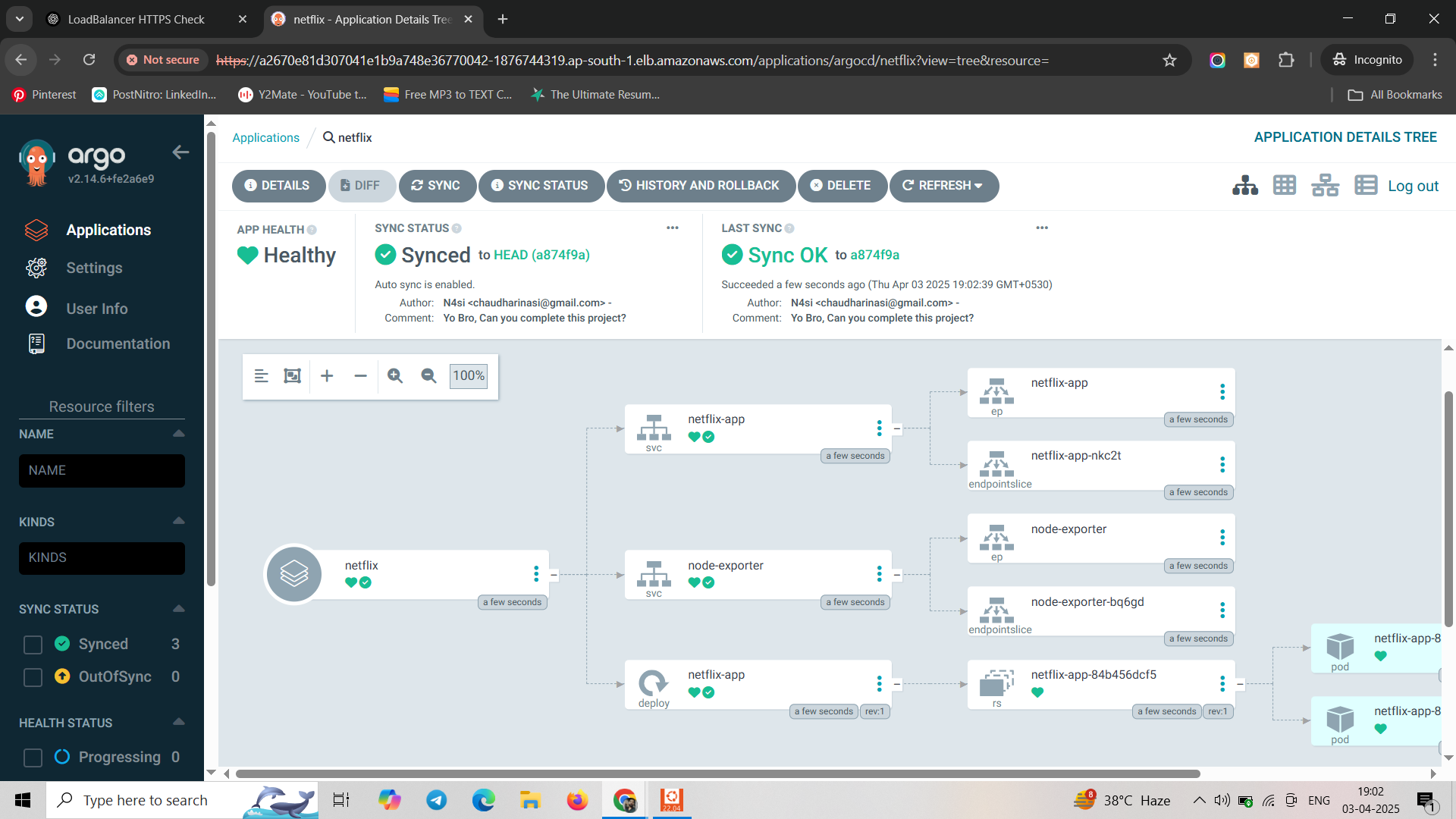

Go to apps > Create app

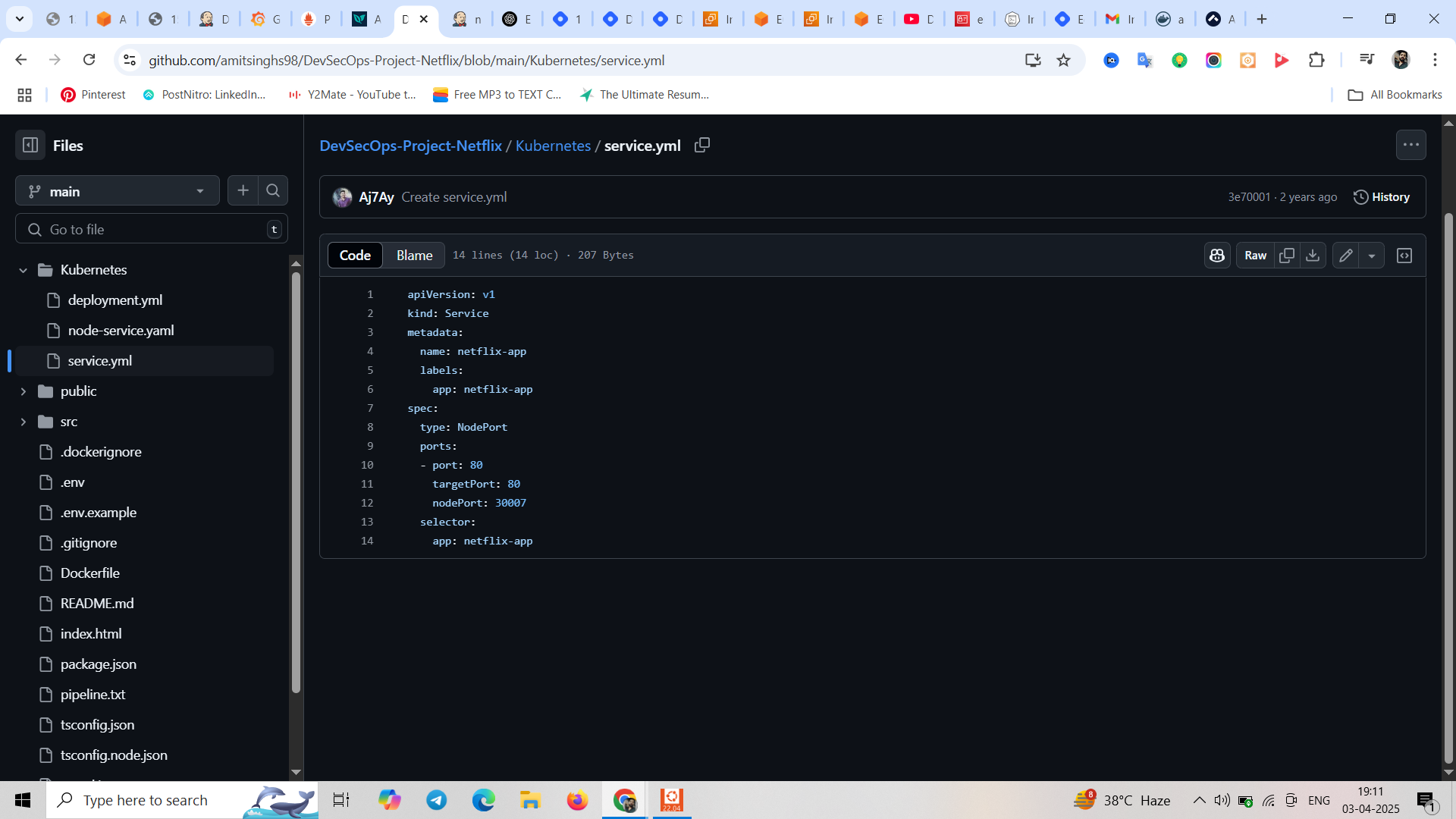

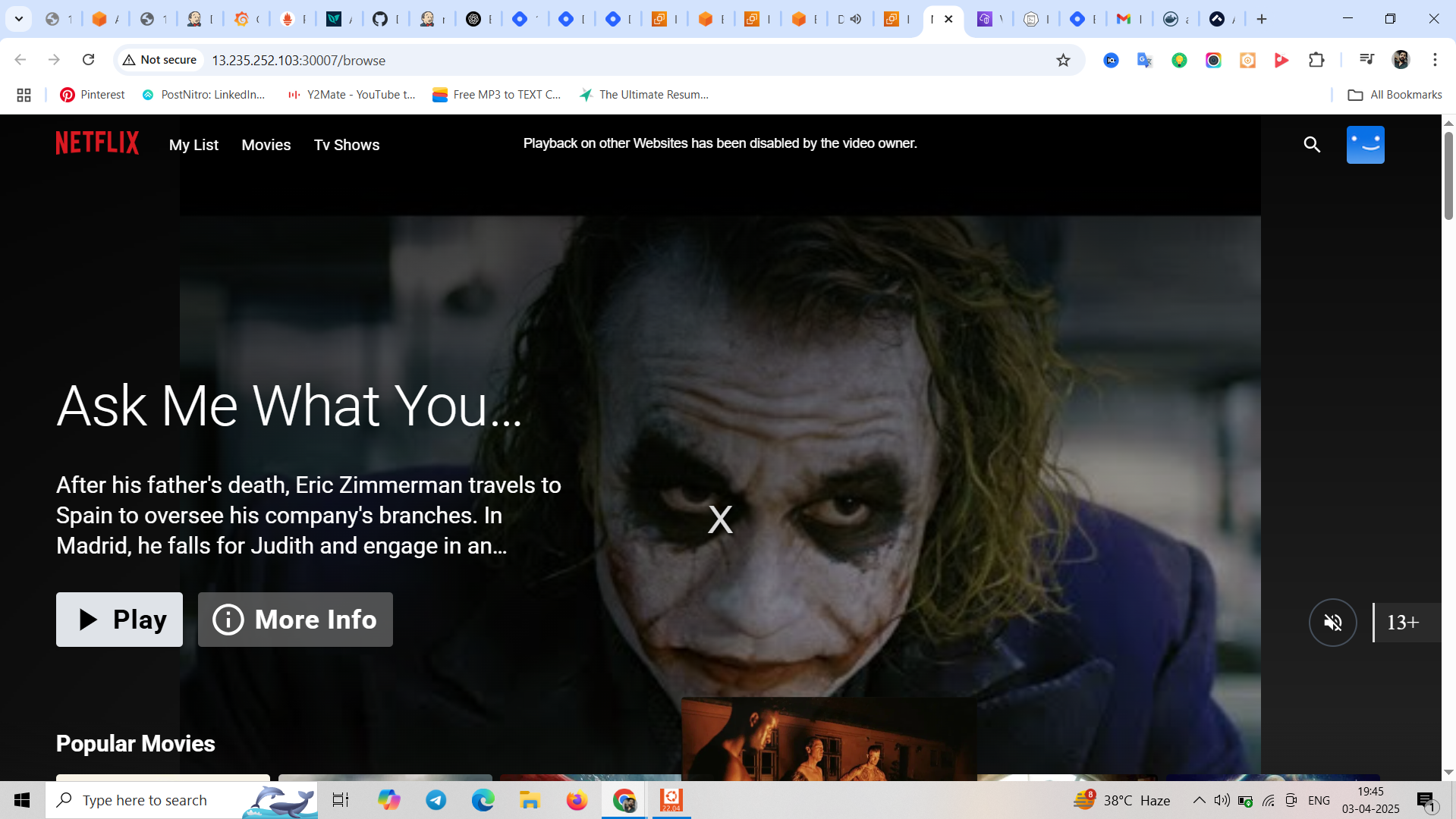

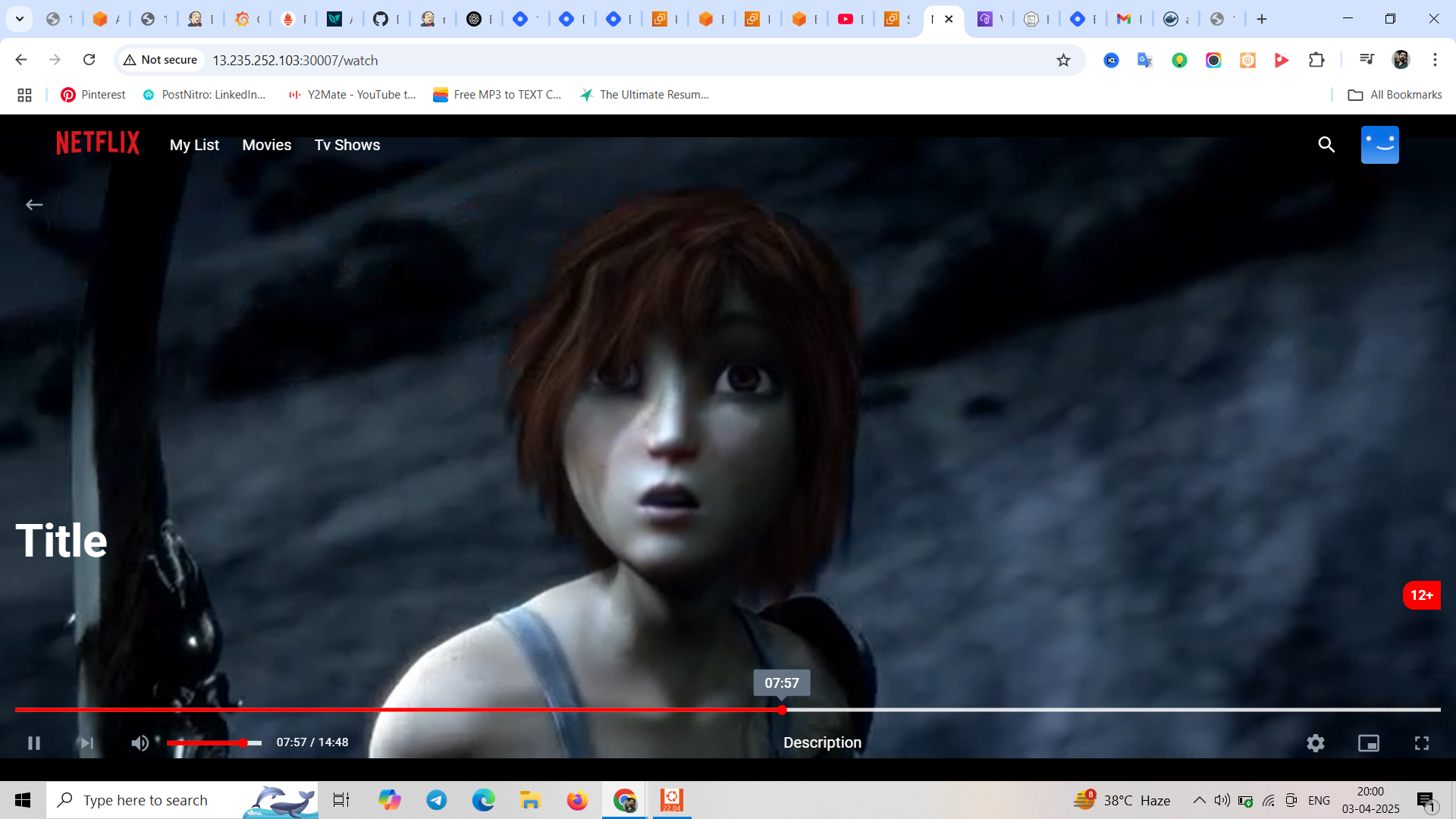
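The application can also be created with the argocd CLI instead of the UI. A sketch, assuming you have already run `argocd login <argocd-server-host>`; the manifests folder name (`Kubernetes`) is a guess, so check where the deployment and service files live in your repository. The helper name is hypothetical.

```shell
# Sketch: create and auto-sync the Netflix app in ArgoCD from the Git repository.
# Optional arguments: repository URL, folder containing the Kubernetes manifests.
create_netflix_app() {
  local repo="${1:-https://github.com/amitsinghs98/DevSecOps-Project-Netflix.git}"
  local manifests_path="${2:-Kubernetes}"   # hypothetical folder name
  argocd app create netflix \
    --repo "$repo" \
    --path "$manifests_path" \
    --dest-server https://kubernetes.default.svc \
    --dest-namespace default \
    --sync-policy automated || return 1
  # Show the app's sync and health status
  argocd app get netflix
}
```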

The Netflix app is exposed through a NodePort service.

It listens on port 30007, as set in the service manifest.

Add an inbound rule for port 30007: go to your EKS cluster > nodes > security group. Then open http://<node-public-ip>:30007 in your browser.

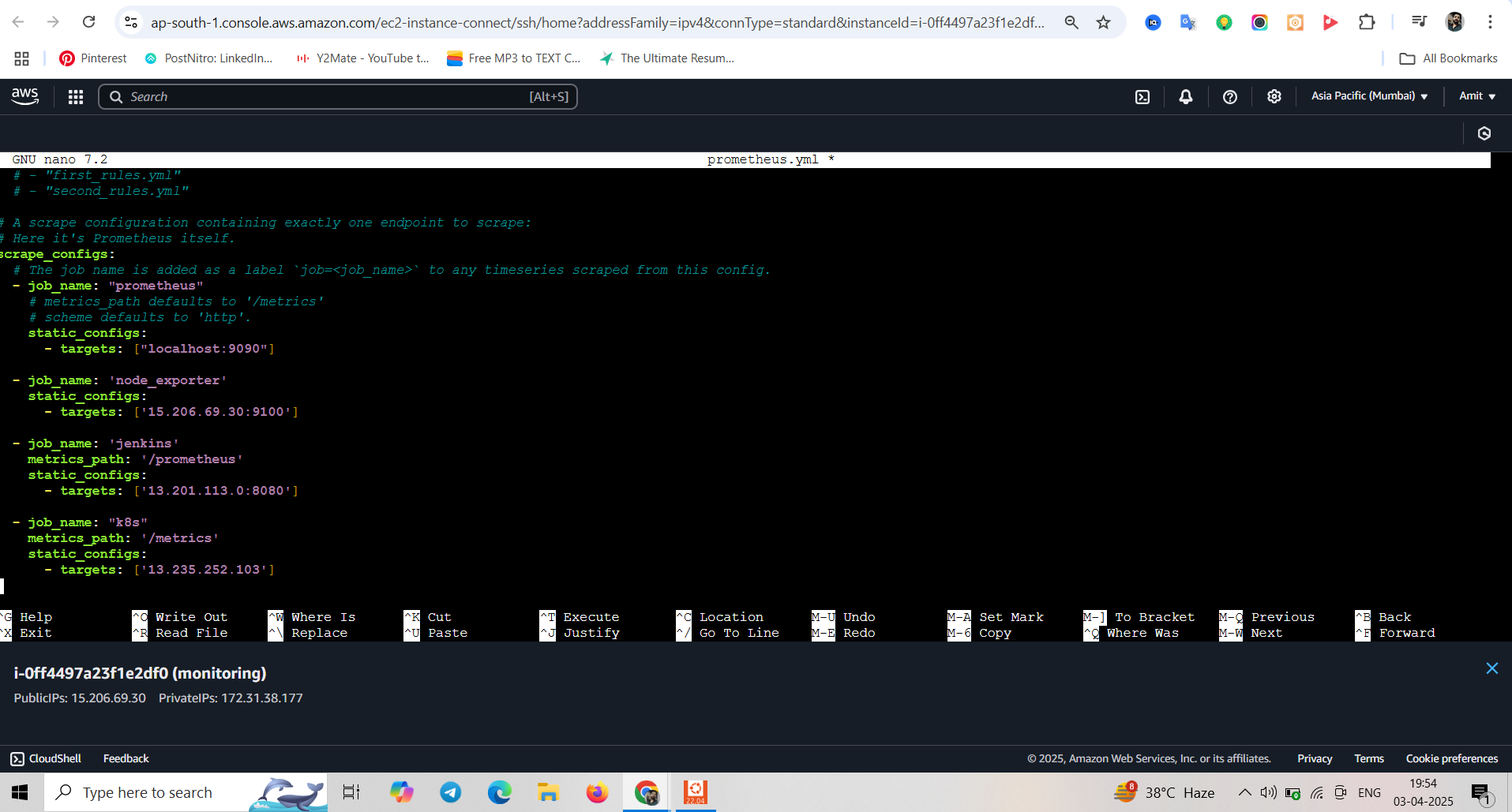

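Now set up monitoring for this EKS cluster.

On the monitoring instance, go to the Prometheus configuration directory:

cd /etc/prometheus

sudo nano prometheus.yml

Add a scrape job for the EKS nodes' Node Exporter (port 9100) to the prometheus.yml file, then validate and reload the configuration as before.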
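The scrape job itself isn't spelled out above. A minimal sketch that builds one from the nodes' external IPs, assuming the worker nodes have public IPs, kubectl is configured where you run it, port 9100 is open in the node security group for the monitoring instance, and scrape_configs is the last section of prometheus.yml (as in the default file). The job name `netflix-eks-nodes` and the helper name are hypothetical.

```shell
# Sketch: print a Prometheus scrape job for Node Exporter on every EKS worker node.
# Optional argument: Node Exporter port (the Helm chart defaults to 9100).
eks_node_job() {
  local port="${1:-9100}"
  local ips ip targets=""
  # ExternalIP addresses of all nodes, space-separated
  ips=$(kubectl get nodes \
    -o jsonpath='{.items[*].status.addresses[?(@.type=="ExternalIP")].address}') || return 1
  for ip in $ips; do
    # Build a comma-separated list such as 'ip1:9100', 'ip2:9100'
    targets="${targets:+$targets, }'$ip:$port'"
  done
  [ -n "$targets" ] || { echo "No node ExternalIP found" >&2; return 1; }
  cat <<EOF
  - job_name: 'netflix-eks-nodes'
    metrics_path: '/metrics'
    static_configs:
      - targets: [$targets]
EOF
}
```

Usage: `eks_node_job | sudo tee -a /etc/prometheus/prometheus.yml`, then validate the file with promtool and reload Prometheus.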


Written by

Amit singh deora


DevOps | Cloud Practitioner | AWS | GIT | Kubernetes | Terraform | ArgoCD | Gitlab