All About Graph RAG

Jonas Kim

1. Graph RAG Definition and Advantages

Graph RAG is an innovative approach that enhances the response quality of Large Language Models (LLMs) by combining existing Retrieval-Augmented Generation (RAG) techniques with knowledge graphs. While traditional RAG relies on document retrieval based on vector embeddings, Graph RAG utilizes the structural information of knowledge graphs composed of entities (nodes) and relationships (edges). This allows LLMs to go beyond simple keyword matching or vector similarity, understanding complex connections and context between concepts to generate responses based on more accurate and richer information. Graph-based knowledge networks are particularly effective for complex queries requiring multi-step reasoning.

Limitations of Traditional RAG

According to the Microsoft Research blog post "GraphRAG: Unlocking LLM Discovery on Narrative Private Data", existing RAG approaches have the following significant limitations:

Difficulty Connecting Information: Traditional RAG struggles in situations requiring the logical connection of distributed information chunks to derive new, integrated insights. This limitation is particularly prominent when needing to integrate shared properties or relationships across multiple documents.

Lack of Comprehensive Understanding of Large-Scale Information: Traditional RAG's performance degrades when tasked with holistically grasping and summarizing semantic concepts across large data collections or large documents.

These limitations spurred the emergence of Graph RAG, with research progressing towards effectively solving these problems using graph structures.

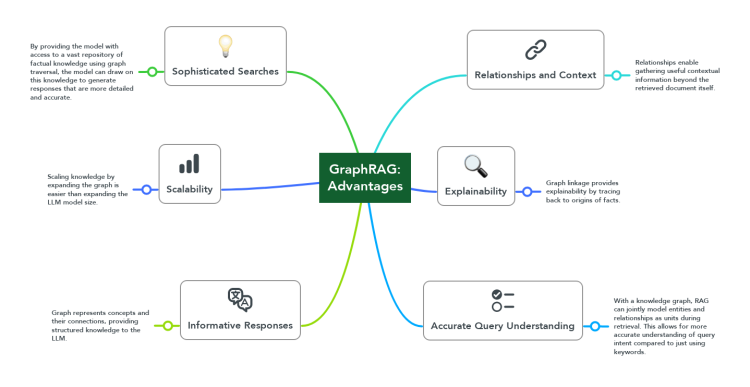

Key Advantages of Graph RAG

Sophisticated Retrieval Capabilities: Effectively finds relevant factual information from vast knowledge repositories through graph traversal, supporting LLMs in generating detailed and accurate responses.

Provides Contextual Relationships: Relationships in knowledge graphs offer valuable contextual information difficult to grasp from individual document chunks alone, enabling LLMs to generate responses considering a broader knowledge background.

Accurate Understanding of Query Intent: By modeling entities and relationships on the graph as a single semantic unit, it captures user query intent more accurately than simple keyword matching. This particularly enhances contextual accuracy for complex queries.

Enhanced Explainability: Following the links in the graph allows easy tracing of the source of facts used by the model, clearly explaining the basis for the response. This significantly increases the reliability and transparency of the results.

Rich and Comprehensive Responses: Graphs explicitly represent concepts and their complex connections, providing LLMs with more structured knowledge, enabling the generation of more comprehensive and in-depth answers.

Efficient Knowledge Scalability: It is relatively easy to expand knowledge by adding new information to the graph, making it more cost-effective to extend domain knowledge compared to increasing the parameters of the LLM itself.

Supports Multi-step Reasoning: The graph structure helps form complex reasoning chains, allowing more accurate responses to complex questions requiring step-by-step thinking.

2. Overview of Knowledge Graphs and Graph Databases (Graph DB)

To fully leverage Graph RAG, an understanding of its underlying components, knowledge graphs and graph databases, is essential.

2.1. What is a Knowledge Graph?

A knowledge graph is a data model that represents real-world entities (people, places, concepts, etc.) and their relationships in a graph structure. In this structure:

Nodes: Represent entities (e.g., person, city, product, concept)

Edges: Connect entities via relationships (e.g., 'lives in', 'founded', 'includes')

Properties: Additional information assigned to nodes and edges (e.g., name, date, weight)

This graph structure represents vast knowledge in the form of a semantic network, enabling systems to "understand" and reason about meaningful relationships between data. Consequently, knowledge graphs are used by AI models to recognize patterns from connected data and infer new associations, significantly improving performance in tasks like search, recommendation, and question answering.
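
As a minimal sketch of this model, nodes, edges, and properties can be held in a tiny in-memory structure. The `Node`, `Edge`, and `neighbors` names and the sample data are illustrative assumptions, not part of any graph database API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    id: str
    label: str                      # entity type, e.g. "Person" or "City"
    properties: dict = field(default_factory=dict)


@dataclass
class Edge:
    source: str                     # id of the start node
    target: str                     # id of the end node
    type: str                       # relationship name, e.g. "LIVES_IN"
    properties: dict = field(default_factory=dict)


# Hypothetical example data, invented for illustration.
nodes = {
    "p1": Node("p1", "Person", {"name": "Mina"}),
    "c1": Node("c1", "City", {"name": "Seoul"}),
}
edges = [Edge("p1", "c1", "LIVES_IN", {"since": 2019})]


def neighbors(node_id: str, edge_type: str) -> list[Node]:
    """Follow outgoing edges of one type and return the target nodes."""
    return [nodes[e.target] for e in edges
            if e.source == node_id and e.type == edge_type]


print([n.properties["name"] for n in neighbors("p1", "LIVES_IN")])
```

A real graph database adds persistence, indexing, and a query language on top of this basic shape.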

2.2. Graph Databases

To effectively utilize knowledge graphs, a graph database (Graph DB) capable of storing and querying graph data is needed. A Graph DB stores and manages graph data composed of nodes and edges, and is a DBMS designed specifically to handle relationship-centric queries efficiently.

Key features of graph databases include:

Relationship-Centric Data Model: Relationships between data are treated as first-class citizens, stored and queried directly.

Flexible Schema: New node types or relationships can be easily added as needed, allowing rapid adaptation to changing requirements.

Efficient Graph Traversal: Optimized structure for quickly traversing connected data, efficiently handling complex relationship-based queries.

Intuitive Data Modeling: Naturally represents real-world relationships, enabling the construction of data models easily understood even by domain experts.

Index-free Adjacency: Many graph databases store connections between nodes as physical pointers, optimizing relationship lookup performance.

Relational DB (RDB) vs. Graph DB Comparison

| Characteristic | Relational Database (RDB) | Knowledge Graph (using Graph DB) |
| --- | --- | --- |
| Data Storage Method | Structured table format | Composed of entities (nodes) and relationships (edges) |
| Schema | Formalized, fixed schema | Flexible schema structure |
| Data Manipulation | Manipulation via SQL | Use of graph query languages (Cypher, SPARQL, etc.) |
| Relationship Representation | Indirect representation via foreign keys | Direct representation as relationships |
| Complex Relationship Handling | Requires multiple joins (performance degradation) | Intuitive relationship traversal (excellent performance) |
| Pattern Discovery | Limited | Easy discovery of hidden connections and patterns |
| Data Integration | Schema changes difficult | Easy integration of data from various sources |

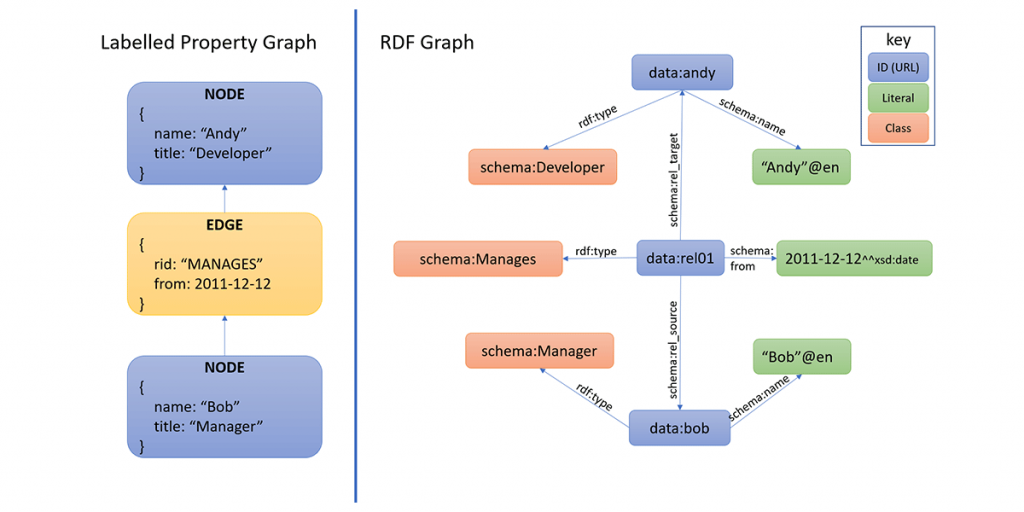

2.3. Types of Graph Models

Two main models are primarily used in graph databases.

Property Graph Model

Allows assigning properties directly to nodes and edges.

Classifies nodes and edges using labels.

Offers simplicity and intuitiveness within a single knowledge source.

Neo4j is a representative implementation. Property graphs are typically queried with Cypher (openCypher) or Gremlin.

RDF (Resource Description Framework) Model

Uses standardized identifiers via URIs.

Represents data in a triple structure (subject-predicate-object).

Based on W3C standards, supporting integration and standardization across multiple knowledge sources.

Primarily uses the SPARQL query language.
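
To make the triple structure concrete, here is a minimal sketch of triple pattern matching over an in-memory set. The `match` helper, the `ex:` names, and the data are assumptions made for illustration; a real RDF store uses full URIs and a proper query engine.

```python
# Hypothetical triples using shortened names instead of full URIs.
triples = {
    ("ex:Mina", "rdf:type", "ex:Person"),
    ("ex:Mina", "ex:livesIn", "ex:Seoul"),
    ("ex:Joon", "rdf:type", "ex:Person"),
    ("ex:Joon", "ex:livesIn", "ex:Busan"),
}


def match(subject=None, predicate=None, obj=None):
    """Return triples matching the pattern; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )


# Subjects that are persons and live in Seoul (two triple patterns joined).
persons = {s for s, _, _ in match(predicate="rdf:type", obj="ex:Person")}
in_seoul = {s for s, _, _ in match(predicate="ex:livesIn", obj="ex:Seoul")}
print(sorted(persons & in_seoul))
```

Joining the results of several triple patterns on a shared variable is the core idea behind triple-pattern queries.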

2.4. Comparison of Graph Query Languages

Various query languages are used in graph databases depending on the type of graph stored.

Cypher

A query language for the property graph model, primarily used in Neo4j.

Features intuitive visual pattern matching syntax.

Nodes are represented by parentheses `()`, and relationships by arrows `-->` or directed arrows `-[]->`.

Example: Query to find the names of all people living in Seoul

    MATCH (p:Person)-[:LIVES_IN]->(c:City)
    WHERE c.name = "Seoul"
    RETURN p.name

SPARQL

A W3C standard query language for the RDF graph model.

Uses triple pattern-based queries and clearly identifies resources via URIs.

Enables integrated queries across multiple datasets.

Example: Representing the same query as above in SPARQL

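    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>
    PREFIX ex: <http://example.org/>   # placeholder namespace (assumption)

    SELECT ?person
    WHERE {
      ?person rdf:type ex:Person .
      ?person ex:livesIn ex:Seoul .
    }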

Gremlin

Part of the Apache TinkerPop framework, an imperative graph traversal language for the property graph model.

Supported by various graph databases.

Traverses the graph using a functional-style chain of calls.

Example: Representing the same query as above in Gremlin

    // Start from the city so that each resident is returned once
    g.V().hasLabel('City').has('name', 'Seoul').
      in('LIVES_IN').hasLabel('Person').
      values('name')

2.5. Comparison of Graph DBs Available on AWS

| Feature | Neo4j | Amazon Neptune |
| --- | --- | --- |
| Provider | AWS Marketplace | AWS Managed Service |
| Supported Data Model | Property Graph | Property Graph, RDF |
| Supported Query Languages | Cypher | Gremlin, SPARQL, openCypher |
| Management & Operations | User Managed | Fully Managed |
| Integration with LangChain | Supported | Supported (Cypher, SPARQL) |
| Integration with LlamaIndex | Supported | Supported |

3. Graph RAG Design Patterns

Graph RAG is an approach that integrates knowledge graphs into the RAG pipeline, and standardized implementation methods are still evolving. Let's examine key design patterns based on the analysis by Ben Lorica and Prashanth Rao.

3.1. General Design Patterns

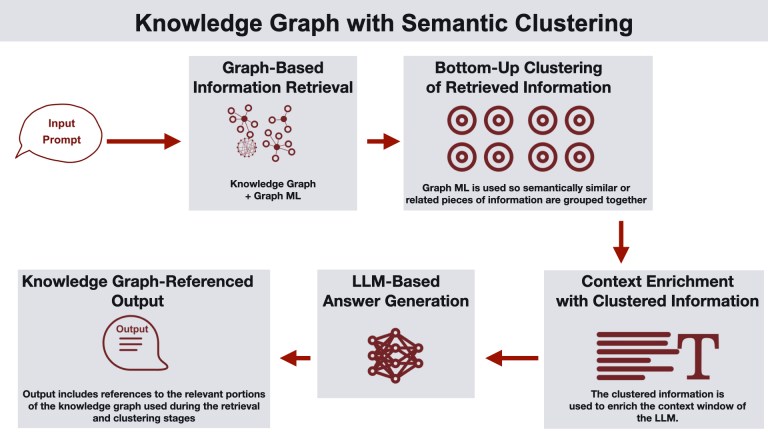

Knowledge Graph and Semantic Clustering

This pattern uses knowledge graphs and graph machine learning to retrieve information for a user query and organizes it into semantic clusters.

How it works

User submits a query.

System retrieves relevant information using knowledge graphs and graph machine learning.

Retrieved information is organized into semantic clusters via graph-based clustering.

Clustered information enriches the LLM's context, aiding more accurate answer generation.

The final answer includes references to the knowledge graph.

Use Cases: Data analysis, knowledge discovery, research fields.

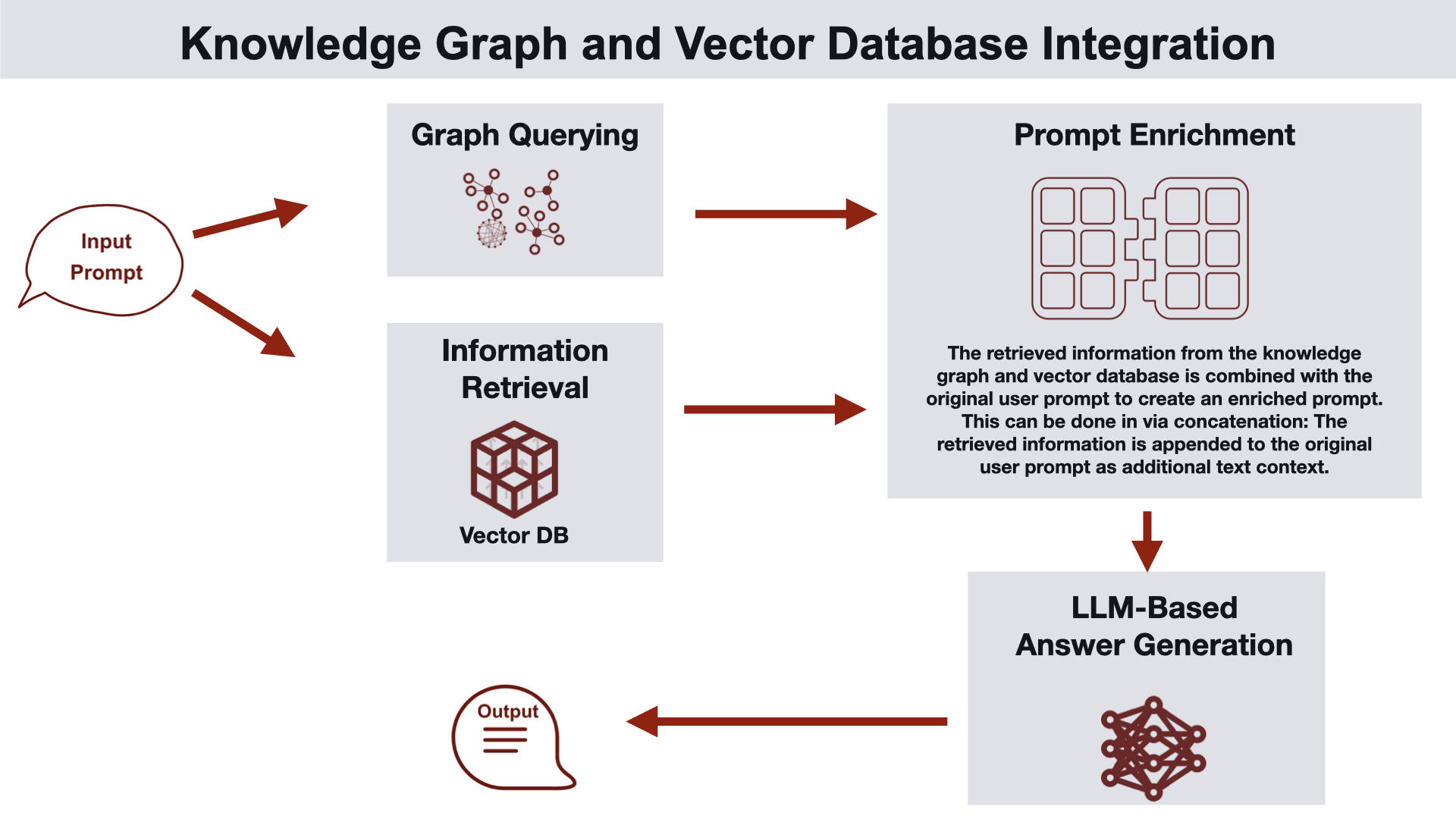

Knowledge Graph and Vector Database Integration

This approach utilizes both knowledge graphs and vector databases to gather relevant information.

How it works

The knowledge graph captures relationships between vectorized document chunks (including document hierarchy).

The knowledge graph provides structured entity information neighboring the chunks retrieved from vector search, enriching the prompt.

The enhanced prompt is input to the LLM to generate a response.

The generated answer is returned to the user.

Use Cases: Customer support, semantic search, personalized recommendation systems.

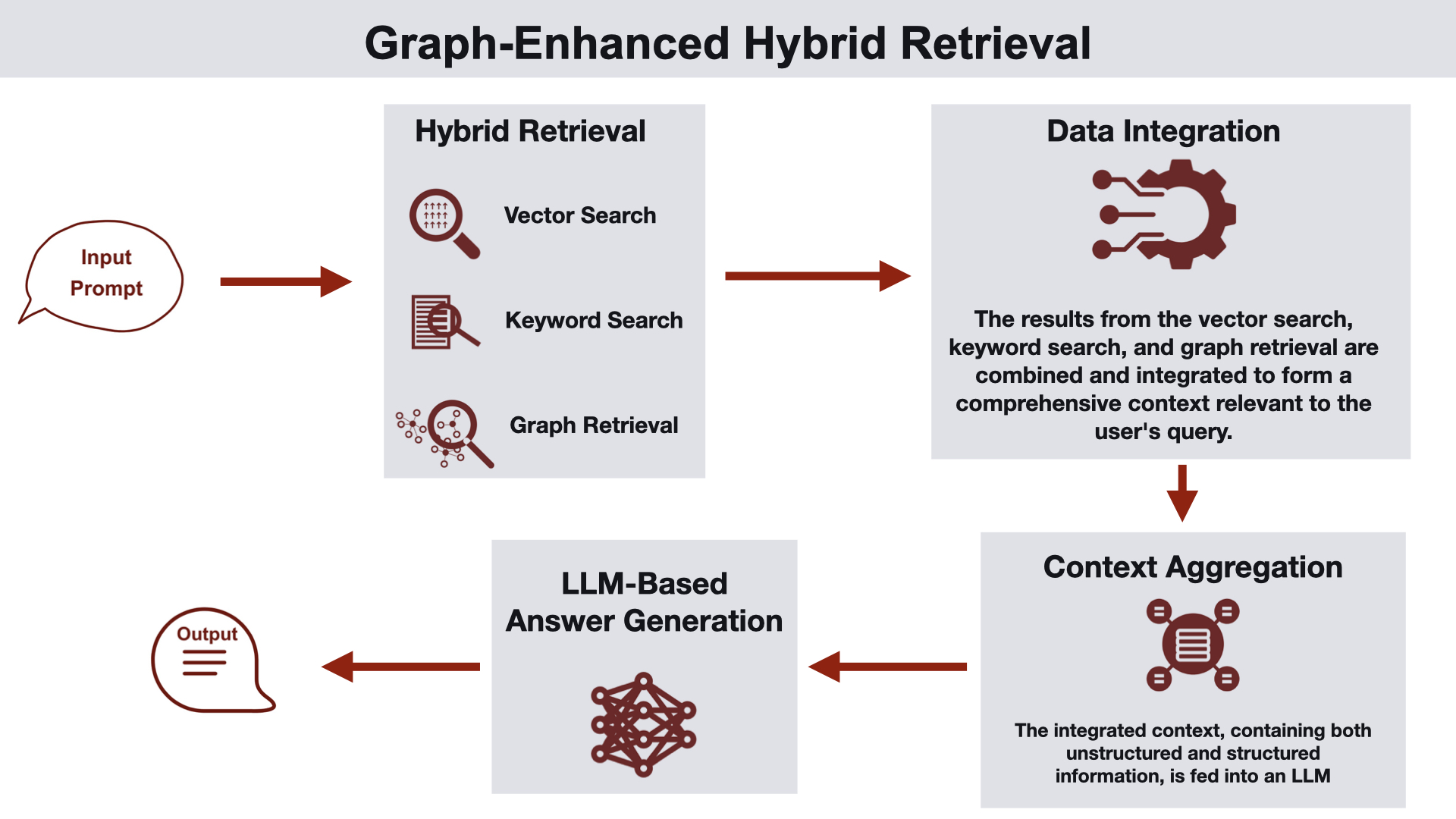

Graph-Enhanced Hybrid Search

This architecture employs a hybrid approach combining vector search, keyword search, and graph-specific queries.

How it works

User submits a query.

A hybrid search process proceeds, integrating results from unstructured data retrieval and graph data retrieval.

Vector and keyword index search results can be enhanced using reranking or rank fusion techniques.

Results from all three search types are combined to generate context for the LLM.

The response generated by the LLM is delivered to the user.

Use Cases: Enterprise search, document retrieval, knowledge discovery.
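
One common rank fusion technique is Reciprocal Rank Fusion (RRF). The sketch below assumes RRF is the chosen method and that each search type returns an ordered list of document ids; the function name, the sample ids, and the choice to fuse graph results together with the other two lists are illustrative assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of document ids into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is a commonly used default.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by score (descending), then by id for a stable result.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical result lists from the three search types.
vector_hits = ["doc_a", "doc_b", "doc_c"]
keyword_hits = ["doc_b", "doc_d"]
graph_hits = ["doc_b", "doc_a"]

fused = reciprocal_rank_fusion([vector_hits, keyword_hits, graph_hits])
print(fused)
```

Documents that appear near the top of several lists rise in the fused ranking, without requiring the individual scores to be comparable.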

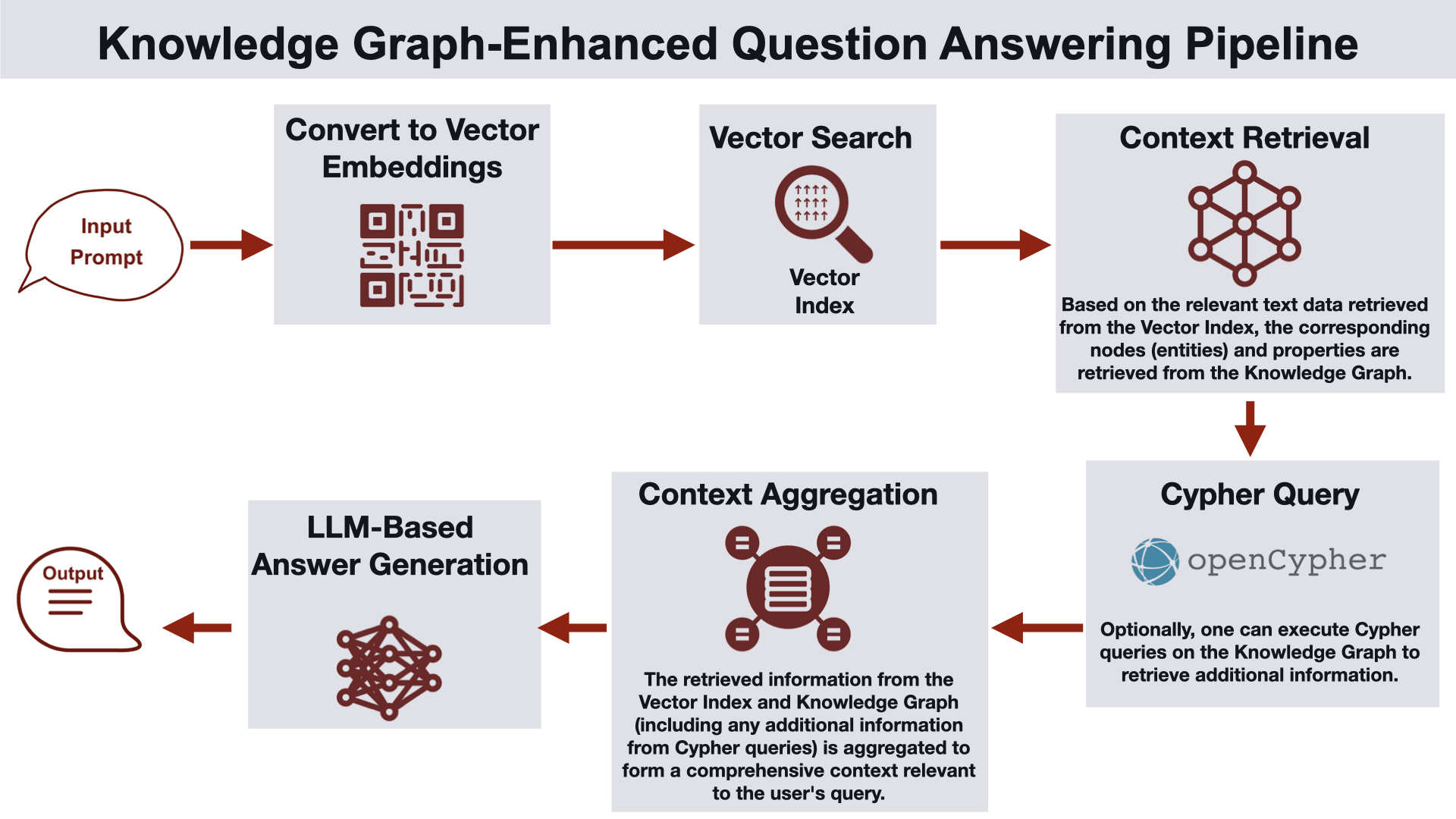

Knowledge Graph-Enhanced Q&A Pipeline

This architecture utilizes the knowledge graph in a post-vector search step to enhance the response with additional facts.

How it works

User provides a query.

Query embedding is calculated.

Vector similarity search in the vector index identifies relevant entities in the knowledge graph.

Relevant nodes and properties are retrieved from the graph database, and if found, Cypher queries are executed to retrieve additional information.

Retrieved information is synthesized to form comprehensive context, which is passed to the LLM to generate a response.

Use Cases: Environments like healthcare or legal where standard information based on entities within the response must be included with the answer.

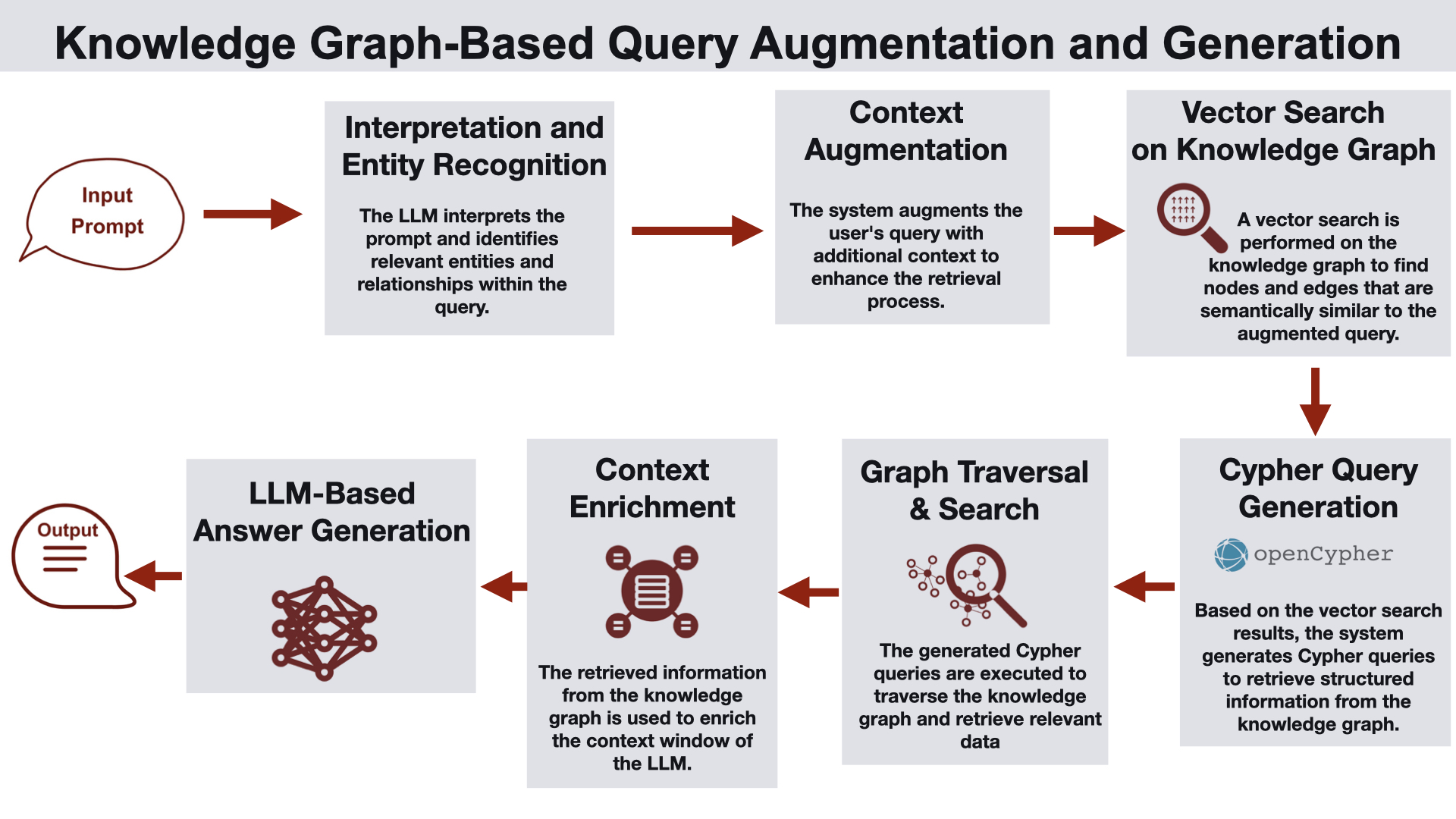

Knowledge Graph-Based Query Expansion and Generation

This architecture utilizes the knowledge graph before vector search to explore relevant nodes and edges, enriching the LLM's context.

How it works

The first step is query expansion, where the user query is processed by an LLM to extract key entities and relationships.

Vector search is performed on node properties within the knowledge graph to identify relevant nodes.

The next step is query rewriting, generating Cypher queries for the retrieved subgraph to narrow down relevant structured information from the graph.

Graph traversal results are used to enrich the LLM's context window.

The LLM generates a response based on the enhanced context.

Use Cases: Product lookups or financial report generation where relationships between entities are crucial.

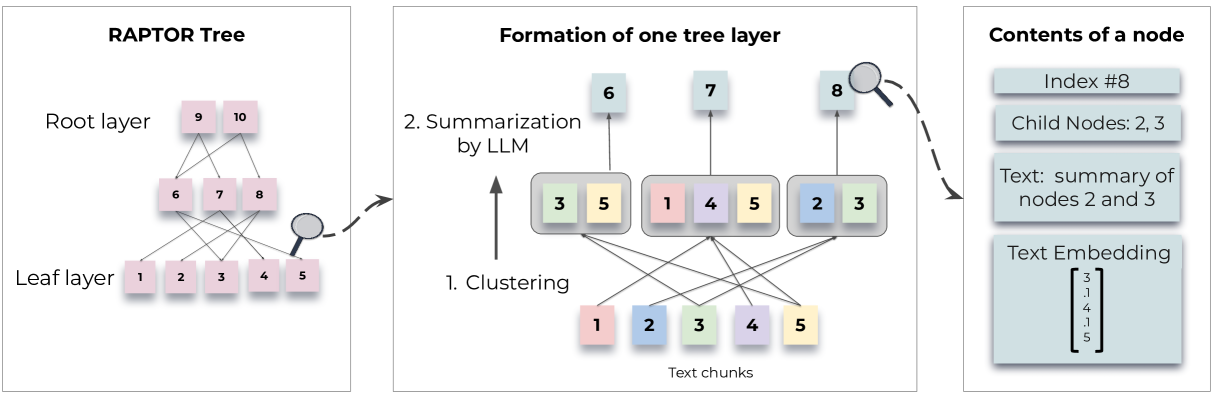

3.2. RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval (Stanford Univ, 2024)

RAPTOR is a special RAG technique developed at Stanford University that utilizes a tree-structured hierarchical index to effectively process long documents. While traditional RAG divides documents into small chunks and retrieves them independently, RAPTOR builds a hierarchical summary tree that preserves the overall structure and context of the document.

Indexing Process

Chunk Splitting

The original document is split into small chunks of about 100 tokens.

If a sentence exceeds the token limit, it moves to the next chunk instead of being cut mid-sentence to maintain semantic consistency.

Embedding and Clustering:

Each chunk is embedded into a vector using SBERT (a BERT-based encoder).

Semantically similar chunks are clustered using a Gaussian Mixture Model (GMM).

Soft clustering allows a single node to belong to multiple clusters.

Summary Node Creation

Chunks in each cluster are summarized using a language model (e.g., GPT-3.5-turbo).

The summary text becomes a new node and is embedded into a vector again.

Recursive Process

The embedding-clustering-summarization process is repeated until no further clustering is possible, forming a multi-layered tree structure.

This process has linear computational complexity with respect to the document size.
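
The recursive layer-building loop can be sketched as follows. This is only an outline under stated assumptions: `build_tree`, `pair_cluster`, and `first_words` are hypothetical names, and the two stand-in functions replace the GMM clustering over embeddings and the language-model summarization that RAPTOR actually uses.

```python
def build_tree(chunks, cluster, summarize):
    """Build layers bottom-up until a layer cannot be clustered further.

    cluster(texts) -> list of groups (each a list of indices into texts)
    summarize(texts) -> one summary string
    Returns a list of layers; layers[0] holds the leaf chunks.
    """
    layers = [list(chunks)]
    while len(layers[-1]) > 1:
        current = layers[-1]
        groups = cluster(current)
        if len(groups) >= len(current):   # no reduction, stop recursing
            break
        layers.append([summarize([current[i] for i in g]) for g in groups])
    return layers


# Stand-ins for illustration only: RAPTOR itself clusters embeddings with
# a GMM and summarizes each cluster with a language model.
def pair_cluster(texts):
    return [list(range(i, min(i + 2, len(texts))))
            for i in range(0, len(texts), 2)]


def first_words(texts):
    return " / ".join(t.split()[0] for t in texts)


layers = build_tree(["alpha one", "beta two", "gamma three", "delta four"],
                    pair_cluster, first_words)
print([len(layer) for layer in layers])
```

Because `cluster` returns index groups, soft clustering fits the same interface: an index may simply appear in more than one group.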

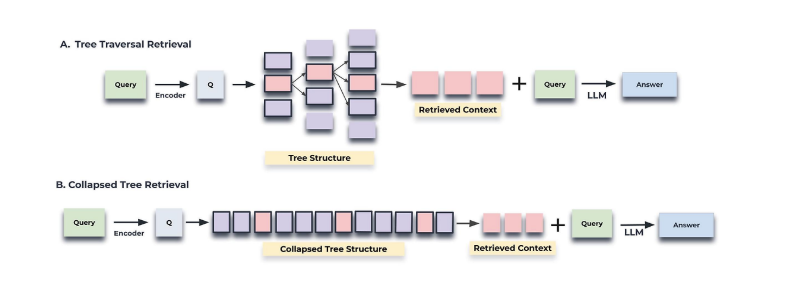

Retrieval Process

RAPTOR offers two distinct retrieval strategies.

Tree Traversal Method

Starting from the root layer, calculate cosine similarity between the query embedding and all node embeddings in that layer.

Select the top \(k\) nodes with the highest similarity scores (set \(S_1\)).

Proceed to the child nodes of the elements in set \(S_1\) and calculate cosine similarity with the query vector.

Select the top \(k\) child nodes with the highest similarity scores (set \(S_2\)).

Repeat this process for \(d\) layers, generating sets \(S_1\) through \(S_d\).

Concatenate all sets to form the context relevant to the query.

This method considers only the top \(k\) nodes per layer, maintaining a uniform amount of information at each level.
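
A minimal sketch of the tree traversal steps above, assuming each node is a plain dictionary with `text`, `vec`, and `children` keys (a hypothetical layout) and using toy 2-dimensional embeddings:

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def tree_traversal(root_layer, query_vec, k, depth):
    """Select the top-k nodes per layer, descending `depth` layers."""
    selected, layer = [], root_layer
    for _ in range(depth):
        if not layer:
            break
        top = sorted(layer, key=lambda n: cosine(n["vec"], query_vec),
                     reverse=True)[:k]
        selected.extend(top)                       # S_1, S_2, ..., S_d
        layer = [c for n in top for c in n["children"]]
    return [n["text"] for n in selected]


# Hypothetical two-layer tree with 2-dimensional toy embeddings.
leaf_a = {"text": "leaf A", "vec": [1.0, 0.0], "children": []}
leaf_b = {"text": "leaf B", "vec": [0.6, 0.8], "children": []}
leaf_c = {"text": "leaf C", "vec": [0.0, 1.0], "children": []}
root_1 = {"text": "summary 1", "vec": [0.9, 0.1], "children": [leaf_a, leaf_b]}
root_2 = {"text": "summary 2", "vec": [0.1, 0.9], "children": [leaf_c]}

result = tree_traversal([root_1, root_2], query_vec=[1.0, 0.0], k=1, depth=2)
print(result)
```

With `k=1`, only the children of the best root node are ever scored, which is why `leaf C` is never considered here.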

Collapsed Tree Method

Collapse the entire RAPTOR tree into a single layer.

Calculate cosine similarity between the query embedding and all node embeddings in the collapsed set.

Starting with the node having the highest similarity score, add nodes to the result set until a predefined maximum token count is reached.

Experimental results showed that the collapsed tree method consistently performed better than the tree traversal method. This is because the collapsed tree method considers all nodes simultaneously, providing flexibility to retrieve the appropriate level of detail for the question.
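
The collapsed tree method can be sketched in a few lines. The node layout, the whitespace-based token count, and the sample data are assumptions for illustration; a real system would count tokens with the model's tokenizer.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def collapsed_tree(nodes, query_vec, max_tokens):
    """Rank every node in the tree together and fill a token budget."""
    ranked = sorted(nodes, key=lambda n: cosine(n["vec"], query_vec),
                    reverse=True)
    picked, used = [], 0
    for node in ranked:
        cost = len(node["text"].split())   # crude whitespace token count
        if used + cost > max_tokens:
            break
        picked.append(node["text"])
        used += cost
    return picked


# Hypothetical flattened tree: one summary node and two leaf nodes.
all_nodes = [
    {"text": "overall summary of topic", "vec": [0.7, 0.7]},
    {"text": "detail about cats", "vec": [1.0, 0.0]},
    {"text": "detail about dogs", "vec": [0.0, 1.0]},
]

context = collapsed_tree(all_nodes, query_vec=[1.0, 0.2], max_tokens=7)
print(context)
```

Here a leaf and a summary node end up in the same context, which illustrates how the collapsed view mixes levels of detail.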

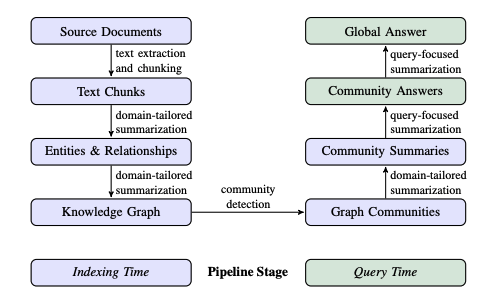

3.3. From Local to Global: A Graph RAG Approach to Query-Focused Summarization (Microsoft Research, 2024)

Microsoft Research's GraphRAG is designed to effectively answer comprehensive questions about an entire dataset, such as "What are the main themes in this dataset?", going beyond the limitations of traditional RAG which focuses on finding fragmented information relevant to a specific query.

Indexing Process

Document Chunking

Source documents are chunked into analyzable sizes.

Each chunk is processed independently and used later for graph construction.

Entity and Relationship Extraction

LLMs are utilized to identify important entities (people, places, organizations, concepts, etc.) from each chunk.

Meaningful relationships between entities are extracted, and short descriptions are generated.

Domain-specific prompts are used to capture highly relevant entities and relationships.

Knowledge Graph Construction

Extracted entities and relationships are transformed into nodes and edges, forming the graph.

Entity descriptions are aggregated and summarized, then attached to each node and edge.

Duplicate relationships are represented as edge weights, indicating the strength of the relationship.

Community Detection and Summarization

Community detection algorithms like the Leiden algorithm partition the graph into meaningful communities.

Detection is performed hierarchically, forming community structures at various levels of abstraction.

An LLM is used to generate a report-like summary for each community.

Summaries of lower-level communities are utilized in generating summaries for higher-level communities.
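
As a small illustration of the graph construction step, duplicate relationships can be collapsed into weighted edges with a counter. The tuples below are hypothetical extraction results, and counting occurrences is one simple way to derive a weight, not necessarily the exact scheme GraphRAG uses.

```python
from collections import Counter

# Hypothetical (source, relation, target) tuples as an LLM might extract
# them from separate chunks; the same relationship can appear repeatedly.
extracted = [
    ("Alice", "WORKS_FOR", "Acme"),
    ("Alice", "WORKS_FOR", "Acme"),
    ("Acme", "LOCATED_IN", "Seoul"),
    ("Alice", "WORKS_FOR", "Acme"),
]

# Each distinct relationship becomes one edge; its count becomes the weight.
edge_weights = Counter(extracted)

for (source, relation, target), weight in edge_weights.most_common():
    print(f"{source} -[{relation}, weight={weight}]-> {target}")
```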

Retrieval Process

GraphRAG offers multiple retrieval modes, each optimized for different types of queries.

Static Global Search

A method for queries requiring a comprehensive understanding of the entire dataset.

Utilizing Predefined Community Levels

Retrieves all community reports at a specific level of the knowledge graph (e.g., level 1).

This method is simple but consumes many tokens and includes all reports, even those irrelevant to the query.

Map-Reduce Process

Map Step: Generate an answer and relevance score for the query against each community report.

Reduce Step: Integrate the answers based on relevance scores to generate the final response.
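
The map and reduce steps can be sketched as two small functions. In this sketch the LLM call is replaced by a keyword-overlap stand-in (`keyword_answer`), and all names and reports are hypothetical.

```python
def map_step(query, reports, answer_fn):
    """Produce one (score, partial_answer) pair per community report."""
    return [answer_fn(query, report) for report in reports]


def reduce_step(partials, top_n=2):
    """Keep the highest-scoring partial answers and merge them."""
    useful = sorted((p for p in partials if p[0] > 0), reverse=True)[:top_n]
    return " ".join(answer for _, answer in useful)


# Stand-in for the LLM call: score = number of query words in the report.
def keyword_answer(query, report):
    words = set(query.lower().split())
    score = sum(1 for w in report.lower().split() if w in words)
    return score, report


reports = [
    "Community about graph databases and query languages.",
    "Community about cooking recipes.",
    "Community about graph retrieval for language models.",
]
partials = map_step("graph retrieval", reports, keyword_answer)
final_answer = reduce_step(partials)
print(final_answer)
```

In a real pipeline the reduce step would pass the selected partial answers to an LLM for synthesis rather than concatenating them.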

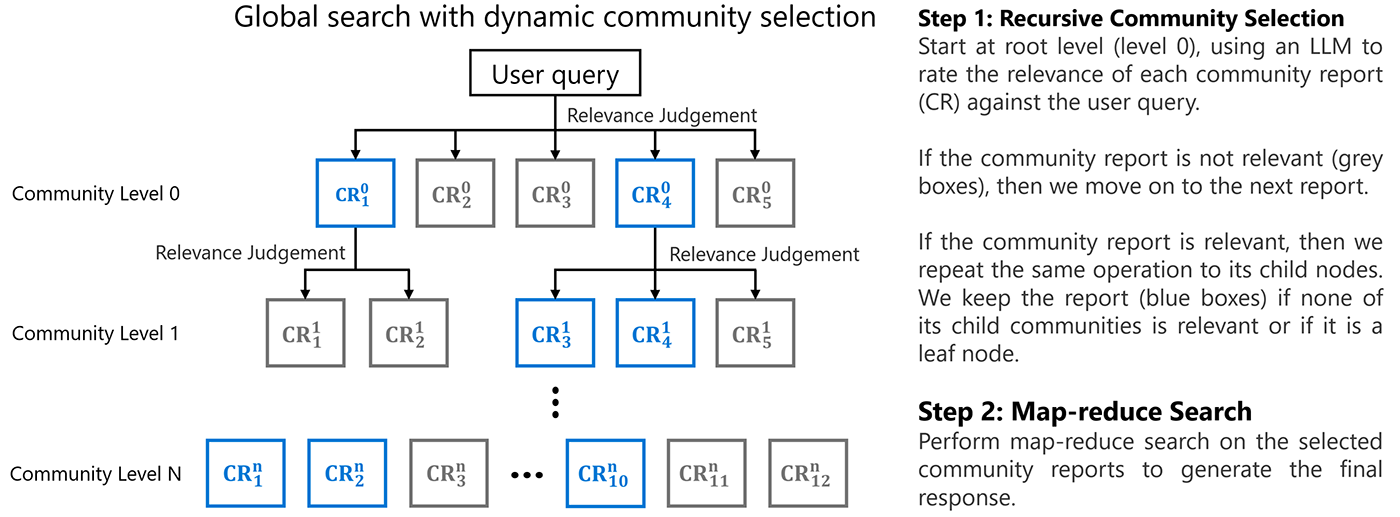

Dynamic Global Search

An approach that addresses the inefficiency of static global search.

Dynamic Community Selection Process

Starting from the root of the knowledge graph, evaluate the relevance of each community report using an LLM.

Reports with low relevance and their child nodes are excluded from the search process.

For highly relevant reports, move to child nodes and continue evaluation.
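
The pruning behavior can be sketched as a recursive walk over the community hierarchy. The dictionary layout and the `is_relevant` callback (a stand-in for the LLM relevance rating) are assumptions, and this sketch collects every report rated relevant; whether parent reports are kept alongside their children is a design choice not specified here.

```python
def select_communities(community, is_relevant):
    """Walk the community hierarchy top-down, pruning irrelevant branches."""
    if not is_relevant(community["report"]):
        return []                      # skip this report and all children
    selected = [community["name"]]
    for child in community["children"]:
        selected.extend(select_communities(child, is_relevant))
    return selected


# Hypothetical hierarchy; reports are shortened to a few words.
root = {
    "name": "root", "report": "technology overview", "children": [
        {"name": "ai", "report": "technology: language models", "children": [
            {"name": "rag", "report": "technology: retrieval", "children": []},
        ]},
        {"name": "food", "report": "cooking recipes", "children": [
            {"name": "pasta", "report": "technology of pasta machines",
             "children": []},
        ]},
    ],
}

# Stand-in for the LLM rating: a simple keyword test.
selected = select_communities(root, lambda report: "technology" in report)
print(selected)
```

Note that `pasta` is never evaluated because its parent was pruned, which is where the token savings come from.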

DRIFT (Dynamic Reasoning and Inference with Flexible Traversal) Search

DRIFT search is a hybrid approach designed to overcome the limitations of local search within the GraphRAG environment. It goes beyond traditional local search, which merely retrieves text chunks similar to the query, by utilizing community information to provide richer and more comprehensive answers.

Primer Stage: Grasping High-Level Context

Query Expansion: The user query is expanded using the HyDE (Hypothetical Document Embeddings) technique to increase search recall. HyDE generates a hypothetical ideal document from the original query and then uses the embedding of that document for retrieval.

Community Report Retrieval: The expanded query is embedded and compared against all community reports to select the top \(K\) semantically most relevant reports. These reports, sourced from GraphRAG's global index, provide a high-level overview of the dataset.

Initial Answer Generation: The selected community reports are provided to an LLM to generate an initial answer.

Follow-up Question Generation: Simultaneously, the LLM generates more detailed follow-up questions based on the original query. These questions target areas missing information or requiring deeper exploration in the initial answer.

Follow-Up Stage: Exploring Detailed Information

Execute Local Search: A standard local search variant is executed for each follow-up question. This process finds the most relevant content at the original text chunk level.

Intermediate Answer Generation: Intermediate answers are generated based on the local search results for each follow-up question.

Iterative Refinement: New follow-up questions can be generated from these intermediate answers, forming an iterative loop of information exploration. Typically, the system terminates after 2 iterations.

Output Hierarchy Stage: Integrating Results

Hierarchical Structuring: All questions and answers are organized into a hierarchy ranked by relevance to the original query.

Map-Reduce Integration: Benchmark tests used a simple map-reduce approach to aggregate all intermediate answers with equal weighting.

Final Response Generation: A final response to the user's original query is generated based on the aggregated information.

The advantage of this approach is its ability to start with a high-level overview and progressively explore details, potentially capturing important information missed by a single search process.

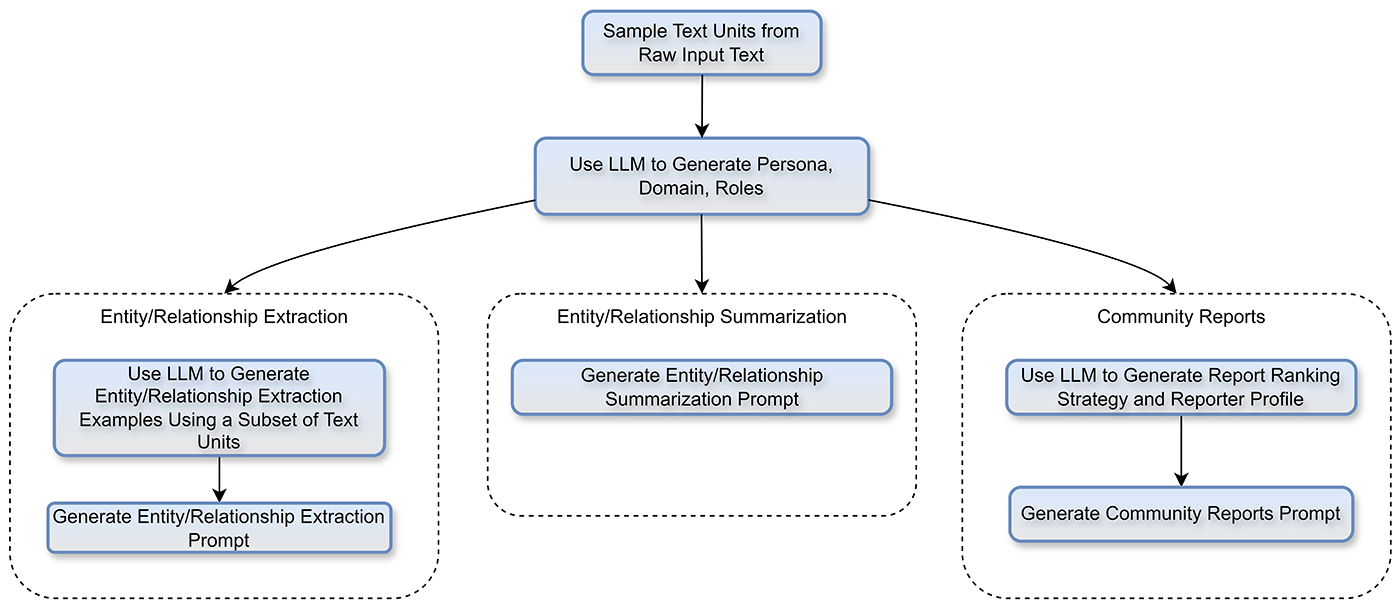

Auto-Tuning

One of GraphRAG's strengths is its auto-tuning capability for rapid adaptation to new domains:

Domain and Persona Identification

A sample of the source content (about 1% of the total data) is sent to an LLM.

The LLM identifies the domain and generates an appropriate persona.

This persona is used to tailor the extraction process.

Domain-Specific Prompt Generation

Once the domain and persona are established, multiple processes run in parallel to generate custom indexing prompts.

Example-based prompts are generated based on actual domain data.

Identifies domain-specific entities (e.g., 'molecule', 'reaction' in chemistry) beyond basic entity types (person, organization, location, etc.).

Microsoft's GraphRAG demonstrates superior performance in terms of comprehensiveness and diversity compared to traditional summarization techniques, significantly improving response quality for global questions, especially on large datasets. This approach automatically balances local details and global context, providing a flexible and effective solution applicable to various use cases.

4. AWS GraphRAG Toolkit

The AWS GraphRAG Toolkit is a Python-based open-source framework that facilitates the implementation of Graph RAG concepts within the AWS cloud environment. It leverages the LlamaIndex library to automatically build graphs and perform vector indexing from unstructured text, supporting graph-based retrieval for LLM queries.

4.1. Graph Model Design

The AWS GraphRAG Toolkit uses a lexical graph model composed of three layers.

1. Lineage Layer

Source Node

Stores metadata of the original document (author, URL, publication date, etc.).

Plays a crucial role in tracing and verifying document provenance.

Chunk Node

Stores the actual text content and the embedding of that text.

Maintains the structural context of the original document through relationships with previous/next, parent/child chunks.

The Lineage Layer preserves the original structure and provenance of documents, enabling the tracing of information lineage. This offers significant value, especially in enterprise environments where information reliability and verification are critical.

2. Entity-Relationship Layer

This layer forms the core structure of the graph by representing key entities within documents and their relationships.

Entity Node

Includes a value (e.g., 'Amazon') and classification (e.g., 'Company').

Serves as an entry point for keyword-based searches.

Represents real-world entities like people, places, organizations.

Relation Edge

Defines relationships between entities (e.g., 'WORKS_FOR', 'LOCATED_IN').

Guided by a preferred entity classification list to maintain consistency.

The Entity-Relationship Layer represents the main entities found within documents and the relationships between them. It forms the core structure of the graph and serves as an important starting point, particularly for keyword-based 'bottom-up' searches.

3. Summarization Layer

Topic Node

Represents a topic or theme within a specific source document.

Acts as a local connection point linking related chunks within a single source.

Groups the same topic across multiple chunks, providing a document-level summary.

Statement Node

An independent assertion or statement unit.

Grouped under Topic nodes, with order maintained by a linked list.

Connected to Fact nodes, forming semantic relationships.

The basic context unit provided to the LLM during question answering.

Fact Node

A single fact unit in Subject-Predicate-Object (SPO) or Subject-Predicate-Complement (SPC) form.

Represents the same fact mentioned across multiple documents as a single node, providing global connectivity between documents.

Forms a relationship (SUPPORTS) supporting Statement nodes.

Acts as a bridge connecting information across documents.

The Summarization Layer structures the original text into various levels of abstraction, allowing the provision of information at the most appropriate level for a query. 'Topics' provide local connectivity within documents, while 'Facts' offer global connectivity across documents, forming a robust retrieval foundation.
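
The way Fact nodes connect documents can be illustrated with a short sketch. The statement records and the dictionary keyed by triples are hypothetical simplifications, not the toolkit's internal data structures.

```python
from collections import defaultdict

# Hypothetical statements from two source documents, each with the
# (subject, predicate, object) facts extracted from it.
statements = [
    {"id": "s1", "source": "doc1", "text": "Amazon is based in Seattle.",
     "facts": [("Amazon", "LOCATED_IN", "Seattle")]},
    {"id": "s2", "source": "doc2", "text": "Seattle hosts Amazon's HQ.",
     "facts": [("Amazon", "LOCATED_IN", "Seattle")]},
    {"id": "s3", "source": "doc2", "text": "Amazon sells books.",
     "facts": [("Amazon", "SELLS", "Books")]},
]

# One Fact node per distinct triple; SUPPORTS edges point at statements.
supports = defaultdict(list)
for statement in statements:
    for fact in statement["facts"]:
        supports[fact].append(statement["id"])

# A fact supported by statements from different sources bridges documents.
shared = supports[("Amazon", "LOCATED_IN", "Seattle")]
print(shared)
```

Because the fact is stored once, a traversal that reaches it from `doc1` can continue into `doc2` through the second SUPPORTS edge.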

4.2. Indexing Process

The indexing in the AWS GraphRAG Toolkit consists of two main steps.

1. Extraction Step (Extract)

Document Chunking (Optional)

Uses LlamaIndex `SentenceSplitter` or a similar splitter to divide documents into chunks of a certain size.

Default settings are 256 tokens per chunk with a 20-token overlap, both customizable.

Splitting is done at the sentence level to maintain semantic coherence.

Proposition Extraction (Optional)

An LLM transforms the content of each chunk into simplified propositions.

Complex sentences are broken down into simpler ones, and pronouns are replaced with specific names.

Abbreviations are expanded where possible to improve the quality of subsequent extraction tasks.

Extracted propositions are stored under the `aws::graph::propositions` metadata key.

Entity/Relationship/Topic/Statement/Fact Extraction (Required)

An LLM analyzes the propositions (or source chunks) from the previous step.

Extracts entities and their classifications, and identifies relationships between entities.

Groups content into topic units based on the text's theme.

Extracts statements with independent meaning and facts in triple form.

All extracted information is stored under the `aws::graph::topics` metadata key.

The Extraction step is the core process of converting unstructured text into structured graph elements. Proposition extraction using an LLM enhances information quality but can be applied selectively considering performance and cost.

2. Build Step (Build)

Node Transformation

Transforms metadata generated in the extraction step into Source, Chunk, Topic, Statement, and Fact nodes.

Each node includes `aws::graph::index` metadata used for vector indexing.

Graph Construction

Stores the transformed nodes and relationships in the graph database.

Establishes relationships between nodes (SUPPORTS, NEXT, etc.) to complete the graph structure.

Vector Indexing

Generates embeddings for Chunk and Statement nodes and indexes them in the vector store.

Optionally, Facts and Topics can also be embedded to support various levels of retrieval.

The Build step involves storing the extracted information into the actual graph and vector stores. This process completes the searchable knowledge graph.

3. Continuous Loading and Batch Processing

Continuous Loading

Executes extraction and building simultaneously using the `extract_and_build()` method.

Graph building starts immediately after extraction begins via micro-batch processing, making the graph usable almost immediately.

Suitable for scenarios requiring real-time updates.

Separate Execution

Executes `extract()` and `build()` separately to decouple processing.

Intermediate results can be stored and reused using `S3BasedDocs` or `FileBasedDocs`.

Useful for large datasets or for reuse across different environments (dev/test/prod).

Checkpoint Management

Manages processing status with a `Checkpoint` instance to prevent redundant processing.

Skips successfully processed chunks upon restart after failure, increasing efficiency.

Marks only chunks whose graph building is complete as checkpoints to maintain consistency.

Batch Processing Optimization

Optimizes large-scale data processing through batch settings (`batch_config`).

Can integrate with Amazon Bedrock batch inference to reduce LLM call costs.

Optimizes performance by adjusting the number of workers for parallel processing and batch size.

The indexing process can be flexibly configured, allowing adjustment for various scales and requirements. Checkpoints and batch processing play crucial roles in efficiently handling large datasets.
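
The checkpoint idea can be illustrated with a file-backed set of finished chunk ids. This is a generic sketch, not the toolkit's `Checkpoint` class: `FileCheckpoint`, `process`, and the JSON file layout are hypothetical.

```python
import json
import os
import tempfile


class FileCheckpoint:
    """Remember which chunk ids finished, so a rerun can skip them."""

    def __init__(self, path):
        self.path = path
        self.done = set()
        if os.path.exists(path):
            with open(path) as f:
                self.done = set(json.load(f))

    def mark_done(self, chunk_id):
        self.done.add(chunk_id)
        with open(self.path, "w") as f:
            json.dump(sorted(self.done), f)


def process(chunks, checkpoint, build_fn):
    """Build each unprocessed chunk; return the ids handled in this run."""
    handled = []
    for chunk_id, text in chunks.items():
        if chunk_id in checkpoint.done:
            continue                      # finished in an earlier run
        build_fn(text)
        checkpoint.mark_done(chunk_id)    # only after the build succeeded
        handled.append(chunk_id)
    return handled


path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
chunks = {"c1": "first chunk", "c2": "second chunk"}
first_run = process(chunks, FileCheckpoint(path), build_fn=len)
second_run = process(chunks, FileCheckpoint(path), build_fn=len)
print(first_run, second_run)
```

Marking a chunk only after its build call returns mirrors the consistency rule described above: a chunk that failed midway is retried on the next run.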

4.3. Retrieval Process

The AWS GraphRAG Toolkit provides two main retrievers to address diverse search needs.

1. TraversalBasedRetriever

TraversalBasedRetriever uses graph traversal for retrieval, employing two complementary strategies.

Chunk-based Search: Top-down Approach

Identifies chunks highly relevant to the query using vector similarity.

Moves from found chunks to topics, then expands to related statements and facts.

Focuses on areas with direct semantic similarity to the query, providing in-depth information.

Adjusts the quantity and diversity of results with configurable `vss_top_k` and `vss_diversity_factor` parameters.

Entity-based Search: Bottom-up Approach

Extracts keywords (entity names, abbreviations, synonyms, etc.) from the query.

Identifies relevant entities in the graph using extracted keywords, potentially including expanded entities.

Starts from entities, expands through facts to statements and topics.

Limits the number of keywords extracted with the `max_keywords` parameter to maintain relevance.

Statement Reranking

Reranks statements before returning final results to enhance relevance to the query.

Can choose between TF-IDF (`tfidf`) or model-based (`model`) methods.

Limits the number of statements returned with the `max_statements` parameter to control context size.

Complex Query Decomposition

Can decompose complex queries into multiple sub-queries using the `derive_subqueries` option.

Limits the maximum number of sub-queries generated with the `max_subqueries` parameter.

Integrates search results for each sub-query to generate a more comprehensive response.

TraversalBasedRetriever, through structural exploration of the graph, can discover related information via relationships, not just direct semantic similarity, enabling comprehensive answers to complex queries.
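
To show what TF-IDF-based statement reranking involves, here is a self-contained sketch. It is not the toolkit's implementation: `tfidf_rerank`, the smoothed IDF formula, and the sample statements are assumptions chosen for illustration.

```python
import math
from collections import Counter


def tfidf_rerank(query, statements, max_statements=2):
    """Order statements by summed TF-IDF weight of the query terms."""
    docs = [Counter(s.lower().split()) for s in statements]
    n = len(docs)

    def idf(term):
        df = sum(1 for d in docs if term in d)
        return math.log((1 + n) / (1 + df)) + 1   # smoothed IDF

    terms = query.lower().split()
    scored = []
    for text, counts in zip(statements, docs):
        total = sum(counts.values())
        score = sum(counts[t] / total * idf(t) for t in terms)
        scored.append((score, text))
    scored.sort(key=lambda pair: -pair[0])        # highest score first
    return [text for _, text in scored[:max_statements]]


# Hypothetical candidate statements returned by graph traversal.
candidates = [
    "neptune stores graph data",
    "opensearch stores vector embeddings",
    "neptune supports gremlin and sparql",
]
reranked = tfidf_rerank("neptune gremlin", candidates)
print(reranked)
```

The rarer query term (`gremlin`) carries more weight than the common one (`neptune`), so the statement containing both ranks first.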

2. SemanticGuidedRetriever

SemanticGuidedRetriever is a hybrid approach that integrates vector search and graph traversal through the following sub-retrievers.

StatementCosineSimilaritySearch

Calculates cosine similarity between statement embeddings and the query embedding.

Returns the specified number (`top_k` parameter) of most similar statements.

A direct search method based on semantic similarity, effective for finding precise information related to the query.

KeywordRankingSearch

Performs search using keywords and synonyms extracted from the query.

Ranks statements based on the number of keyword matches within them; more matches yield higher ranks.

Limits the number of keywords extracted (`max_keywords`) and the number of results returned (`top_k`).

Useful for finding relevant information through direct keyword matching.

SemanticBeamGraphSearch

Performs graph exploration using the beam search algorithm.

Explores neighboring statements based on shared entities between statements.

Calculates cosine similarity between candidate statements and the query to follow the most promising paths.

Limits search depth (`max_depth`) and the number of candidates kept at each step (`beam_width`).

Can discover related information through relationships even with low direct similarity.

RerankingBeamGraphSearch

A variation of `SemanticBeamGraphSearch` that uses a reranking model instead of cosine similarity.

Can utilize `BGEReranker` (GPU environment) or `SentenceReranker` (CPU environment).

Performs more accurate beam search through more sophisticated relevance assessment.

Computationally more expensive but can provide more accurate results.

Post-processing Options

`BGEReranker`/`SentenceReranker`: Enhances final result quality with model-based reranking.

`StatementDiversityPostProcessor`: Removes duplicate statements based on TF-IDF similarity.

`EnrichSourceDetails`: Adds source metadata to clarify information provenance.

`StatementEnhancementPostProcessor`: Enriches statement content using an LLM.

SemanticGuidedRetriever utilizes both semantic similarity and graph structure to effectively discover relevant information, even if not directly similar to the query. It is particularly useful for complex queries or cases requiring diverse perspectives.

4.4. Other

The AWS GraphRAG Toolkit utilizes separate graph and vector stores during graph construction and retrieval, integrating with existing AWS security mechanisms.

Dual Storage Architecture

Graph Store: Stores graph elements like entities, relationships, statements, facts in Amazon Neptune, Neptune Analytics, or FalkorDB.

Vector Store: Stores chunk and statement embeddings in Amazon OpenSearch Serverless or Neptune Analytics.

Performance Optimization Options

Batch Extraction: Large dataset processing utilizing Amazon Bedrock batch inference.

GraphRAGConfig: System performance settings like worker count, batch size, caching.

Response Caching: Stores LLM call results on the local filesystem to minimize redundant calls.
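
The response caching idea can be sketched in a few lines. This is a generic illustration, not the toolkit's cache: the class `FileResponseCache`, its file layout, and the `fake_llm` stand-in are all hypothetical, and it assumes that identical prompts should yield the stored response.

```python
import hashlib
import json
import tempfile
from pathlib import Path

class FileResponseCache:
    """Stores one JSON file per prompt, keyed by a hash of the prompt text."""

    def __init__(self, cache_dir):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def _path_for(self, prompt):
        digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        return self.cache_dir / f"{digest}.json"

    def get_or_call(self, prompt, llm_call):
        path = self._path_for(prompt)
        if path.exists():
            # Cache hit: skip the LLM call entirely.
            return json.loads(path.read_text(encoding="utf-8"))["response"]
        response = llm_call(prompt)
        payload = {"prompt": prompt, "response": response}
        path.write_text(json.dumps(payload), encoding="utf-8")
        return response

calls = []

def fake_llm(prompt):
    """Stand-in for a real model call; records each invocation."""
    calls.append(prompt)
    return f"echo: {prompt}"

cache = FileResponseCache(tempfile.mkdtemp())
first = cache.get_or_call("What is Graph RAG?", fake_llm)
second = cache.get_or_call("What is Graph RAG?", fake_llm)
```

A production cache would usually also key on the model identifier and generation parameters, since the same prompt can produce different responses under different settings.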

The AWS GraphRAG Toolkit addresses the limitations of traditional vector search-based RAG, enabling more comprehensive and accurate information retrieval by leveraging graph structures. It is particularly well suited to scenarios involving complex queries, multi-step reasoning, or important inter-document connections.

5. Comparison of Graph RAG Approaches and Conclusion

| Approach | Indexing Stage | Pre-Retrieval Stage | Retrieval Stage | Post-Retrieval Stage |
|---|---|---|---|---|
| KG & Semantic Clustering | • Build Knowledge Graph | • Graph Traversal | • Semantic Clustering | |
| KG & Vector DB Integration | • Vectorize Documents • Build Knowledge Graph | • Vector Search • Graph Traversal | | |
| Graph-Enhanced Hybrid Search | • Vectorize Documents • Keyword Indexing • Build Knowledge Graph | • Vector Search • Keyword Search • Graph Traversal | • Reranking or Rank Fusion | |
| KG-Enhanced Q&A Pipeline | • Vectorize Documents • Build Knowledge Graph | • Vector Search (on chunks) • Graph Traversal (on entities & properties) | | |
| KG-Based Query Expansion/Gen | • Build Knowledge Graph • Vectorize Node Properties | • LLM Entity/Relation Extraction • Query Expansion | • Vector Search (on entities & relations) • Graph Traversal | |
| RAPTOR | • Vectorize Documents • Clustering • LLM-based Summarization • Recursive Structuring | • Vectorize Query | • Tree Traversal: Layer-wise similarity-based node selection • Collapsed Tree: Flatten tree & vector search | |
| GraphRAG (Microsoft) | • LLM Entity/Relation Extraction • Build Knowledge Graph • Community Detection (Leiden) • Community Summaries | • Query Expansion (HyDE) • Follow-up Question Gen. | • Static/Dynamic Global Search: Use community reports • DRIFT Search: Global+Local hybrid | • Map-Reduce Integration • Output Hierarchy |
| GraphRAG Toolkit (AWS) | • LLM Proposition/Entity/Relation/Topic/Statement/Fact Extraction • Build KG • Vectorize Chunks & Statements | • Keyword Extraction • Complex Query Decomposition | • TraversalBasedRetriever: Top-down/Bottom-up • SemanticGuidedRetriever: Cosine Sim, Keyword Rank, Beam Search | • Statement Reranking • Diversity Processing • Source Enrichment • Statement Enhancement |
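
The "Rank Fusion" step listed for Graph-Enhanced Hybrid Search can be implemented in several ways, and the table does not say which one is meant. One common choice is reciprocal rank fusion (RRF), sketched below as an assumption; the function name, the document IDs, and the three input rankings are hypothetical, and `k=60` is the constant conventionally used with RRF.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of IDs into one list.

    Each ID earns 1 / (k + rank) per list it appears in (rank starts at 1),
    so items ranked well by several retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical results from three retrievers over the same corpus.
vector_hits = ["d1", "d2", "d3"]
keyword_hits = ["d2", "d4", "d1"]
graph_hits = ["d2", "d1", "d5"]
fused = reciprocal_rank_fusion([vector_hits, keyword_hits, graph_hits])
```

RRF uses only rank positions, so it avoids having to normalize scores that come from different retrievers on different scales.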

The Graph RAG approaches examined so far overcome the limitations of traditional vector-based RAG, enabling richer and more accurate information retrieval. Key insights include:

Structural Advantage: A common thread across all approaches is the crucial role of graph structure in preserving inter-document relationships and maintaining context. This helps discover associative information missed by simple vector similarity.

Combined Retrieval Strategies: Most approaches adopt hybrid methods combining vector search, keyword search, and graph traversal. This composite strategy maximizes the strengths of each method for more accurate and comprehensive retrieval.

Hierarchical Structuring: RAPTOR, MS GraphRAG, and the AWS GraphRAG Toolkit all organize documents into a hierarchy of abstraction levels. This makes it possible to provide information at a level of detail that matches the query's complexity and needs.

LLM Utilization: In all approaches, LLMs are utilized not just for response generation but also in various stages like entity extraction, summary generation, and query expansion. This significantly enhances the quality of the graph structure and retrieval efficiency.

Scalability and Efficiency: The AWS GraphRAG Toolkit and MS GraphRAG in particular offer optimization features for handling large datasets (batch processing, auto-tuning, etc.), which increases their practicality in enterprise environments.

Graph RAG is still an evolving field, but it already demonstrates superior performance compared to existing RAG systems in handling complex queries, supporting multi-step reasoning, and leveraging inter-document connectivity. Future developments are expected in areas like improving the efficiency of graph structure creation, supporting diverse data types, and real-time graph updates.

References

Papers

RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval (Stanford Univ.)

From Local to Global: A Graph RAG Approach to Query-Focused Summarization (Microsoft)

Blogs

GraphRAG: Unlocking LLM Discovery on Narrative Private Data (Microsoft)

GraphRAG: New Tool for Complex Data Discovery Now on GitHub (Microsoft)

GraphRAG Auto-Tuning Provides Rapid Adaptation to New Domains (Microsoft)

Introducing DRIFT Search: Combining Global and Local Search Methods to Improve Quality and Efficiency (Microsoft)

GraphRAG: Improving Global Search via Dynamic Community Selection (Microsoft)

LazyGraphRAG: Setting a New Standard for Quality and Cost (Microsoft)

Introducing GraphRAG 1.0 (Microsoft)

Implement Graph RAG with Amazon Bedrock (Korean original, link text kept) (AWS)

Improving Retrieval Augmented Generation Accuracy with GraphRAG (AWS)

Code Repositories

Written by

Jonas Kim

Sr. Data Scientist at AWS