Model Context Protocol (MCP): The USB-C for AI

Gaurav Dhiman

The world of agentic AI apps is evolving rapidly, with Large Language Models (LLMs) taking center stage. Until a few months ago, however, these powerful models often operated in isolation, lacking seamless access to the data and tools they need to perform complex tasks effectively. Imagine trying to use your computer without a USB port to connect your external hard drive, printer, or other essential devices: that is the challenge LLMs faced.

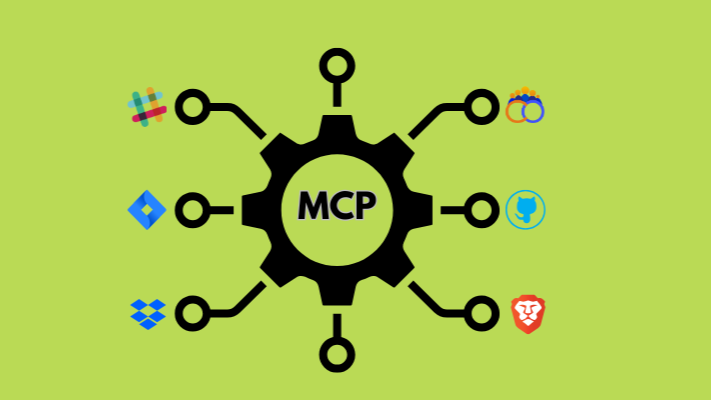

Enter the Model Context Protocol (MCP), an open standard designed to solve this very problem. Think of it as a "USB-C port for AI applications," providing a universal way for LLMs to connect with external data sources, tools, and workflows. Announced in late November 2024, MCP aims to revolutionize how AI models interact with the world around them, fostering a more integrated and efficient AI ecosystem.

What Exactly is Model Context Protocol (MCP)?

At its core, MCP is an open protocol that standardizes how applications provide context to Large Language Models (LLMs). In other words, it defines a common language between models (LLMs) and tool providers (the sources of external information and actions).

Here's a breakdown of the key features and concepts:

Open Standard: It is an open protocol (a way for two systems to talk) that allows seamless and fast integration between an agentic AI app and the systems it can act on or get information from.

Client-Server Architecture: MCP follows a client-server architecture. An MCP Host (the application using the LLM, like Claude Desktop, an IDE, or another AI app) takes the client side and connects to one or more MCP Servers.

MCP Clients: Protocol clients that run inside the MCP Host, each maintaining a 1:1 connection with an MCP server.

MCP Servers: These are lightweight programs that expose specific capabilities through the standardized MCP protocol. They act as bridges between the LLM and external resources such as databases, calendars, and email.

Resources: Data and content exposed from the server to LLMs. Think of files, database entries, or any other relevant information.

Prompts: Reusable prompt templates and workflows that can be integrated into an agentic AI app for use with an LLM. Think of the /commands you may have seen in apps like Cursor, Windsurf, or Cline. These /commands prompt the LLM in a specific way (with prompt templates) to make it behave differently.

Tools: MCP enables LLMs to perform actions and get information through servers, such as creating a file, sending an email, updating a calendar, triggering a workflow, or reading a database schema and its entries.

Transports: The underlying communication mechanisms for MCP, such as standard input/output (stdio) for local servers and HTTP for remote ones.
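To make the concepts above a little more concrete, here is a minimal sketch in plain Python of the JSON-RPC 2.0 messages MCP uses for resources, prompts, and tools. It is a toy in-memory stand-in, not the official SDK: the `ToyServer` class, the `make_request` helper, the `add` tool, the `summarize` prompt, and the `notes://today` resource are all hypothetical names invented for illustration, and a real client and server would exchange these messages over a transport rather than a direct method call.

```python
import json


class ToyServer:
    """Hypothetical in-memory stand-in for an MCP server (not the official SDK)."""

    def __init__(self):
        # Resources: read-only data addressed by URI.
        self.resources = {"notes://today": "Ship the MCP article."}
        # Prompts: reusable templates the host can surface, e.g. as /commands.
        self.prompts = {"summarize": "Summarize the following text:\n{text}"}
        # Tools: callable actions, keyed by name.
        self.tools = {"add": lambda args: str(args["a"] + args["b"])}

    def handle(self, request: dict) -> dict:
        """Map one JSON-RPC request to one JSON-RPC response."""
        method = request["method"]
        params = request.get("params", {})
        if method == "resources/read":
            uri = params["uri"]
            result = {"contents": [{"uri": uri, "text": self.resources[uri]}]}
        elif method == "prompts/get":
            template = self.prompts[params["name"]]
            text = template.format(**params.get("arguments", {}))
            result = {"messages": [
                {"role": "user", "content": {"type": "text", "text": text}}
            ]}
        elif method == "tools/call":
            output = self.tools[params["name"]](params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": output}]}
        else:
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def make_request(request_id: int, method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request, the envelope MCP messages travel in."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


server = ToyServer()
note = server.handle(make_request(1, "resources/read", {"uri": "notes://today"}))
prompt = server.handle(make_request(
    2, "prompts/get", {"name": "summarize", "arguments": {"text": "MCP in brief"}}))
call = server.handle(make_request(
    3, "tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}))

print(json.dumps(call, indent=2))
```

The host's job is then to decide when to send such requests on the LLM's behalf and to feed the `result` payloads back into the model's context.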

Why is MCP Needed?

The current landscape of AI integrations is fragmented and complex. Developers often have to build custom integrations (usually called tools) for each LLM and data source they want to use, leading to duplicated effort and maintenance headaches. MCP simplifies this by providing a single, standardized protocol for connecting AI systems to a standardized set of tools and data sources, much as web services and APIs do for web apps. This offers several advantages:

Growing list of pre-built integrations: LLMs can plug directly into these tested, standardized integrations.

Flexibility to switch between LLM providers and vendors: Different LLMs respond differently to the same prompt. Because MCP servers expose prompts and tools through one protocol, swapping prompts when you change LLMs is far less painful.

Improved AI Assistant Responses: By providing LLMs with better access to relevant data, MCP helps them generate more accurate, informative, and context-aware responses. This can reduce hallucination, a common drawback of LLMs, which are probabilistic by nature.

Simplified and More Reliable Integrations: MCP replaces ad-hoc, fragile integrations with standardized, well-tested implementations, making integration more reliable, easier to manage, and faster.

Sustainable Architecture: The standardized nature of MCP leads to a more sustainable architecture compared to the fragmented landscape of custom integrations.

Improved Code Generation: By providing LLMs with access to relevant codebases and documentation, MCP can help them generate more nuanced and functional code with fewer attempts.

Accessibility, Transparency, and Collaboration: As an open standard, MCP promotes accessibility, transparency, and collaboration within the AI community. Every AI app developer benefits from each new MCP server implementation: a create-once, use-many pattern.
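The "create once, use many" argument can be illustrated with simple counting. The sketch below assumes a hypothetical team with a fixed number of AI apps and data sources, and assumes every app needs every source; the function names and the numbers are illustrative only and are not part of MCP.

```python
def custom_integrations(apps: int, sources: int) -> int:
    # Without a shared protocol: one bespoke connector per (app, source) pair.
    return apps * sources


def mcp_integrations(apps: int, sources: int) -> int:
    # With a shared protocol: one client per app plus one server per source.
    return apps + sources


apps, sources = 4, 6
print(custom_integrations(apps, sources))  # 24
print(mcp_integrations(apps, sources))     # 10
```

Under these assumptions, the gap widens as either number grows, which is the practical meaning of a more sustainable architecture.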

Use Cases for MCP

The potential use cases for MCP are vast and span various industries and applications. Here are a few examples:

AI-Powered IDEs: MCP can enable LLMs to access codebases, documentation, and other development resources, providing developers with intelligent code completion, debugging assistance, and code generation capabilities.

Enhanced Chat Interfaces: By connecting LLMs to customer databases, knowledge bases, and other relevant information sources, MCP can enable more personalized and informative chat interactions.

Custom AI Workflows: MCP can be used to create custom AI workflows that automate tasks, such as data analysis, report generation, and content creation.

Connecting LLMs to Internal Systems and Datasets: MCP allows LLMs like Claude, GPT-4o, Gemini, and any open-source LLM that supports tool calling to seamlessly access and interact with internal systems and datasets, enabling them to perform tasks specific to an organization's needs.

Some Awesome Demos of MCP Usage by Community

Here are some great video demos of MCP servers with different capabilities being used in AI apps like Claude Desktop and Cursor.

Design things in Blender with Natural Language

In this example, the Cline app uses the Blender MCP server to design a scene in Blender with just natural language. You can imagine the implications of such powerful workflows: anyone can now experiment with Blender without first learning the complicated Blender app.

Generate AI Music with Ableton in Natural Language

Siddharth Ahuja created AbletonMCP, a Model Context Protocol (MCP) integration that connects Claude Desktop directly to Ableton Live, allowing for music creation using simple text prompts. This is really cool for anyone interested in the intersection of AI and music production.

Generate 3D models in Natural Language

Here's an example of Cursor using the TripoAI MCP server and the Blender MCP server to autonomously convert a reference image into textured 3D models, then arrange and light them to match the reference in a 3D Blender scene.

Diving into the Technical Aspects

Let's look at the technical details of MCP:

SDKs: MCP provides Software Development Kits (SDKs) in various popular programming languages, including TypeScript, Python, Java, Kotlin, and C#. These SDKs simplify the process of building MCP clients and servers in these languages.

Specification: The MCP specification provides detailed information about the protocol, including message formats, communication protocols, and security considerations.

Project Structure: The MCP project on GitHub is organized as follows:

Challenges and Considerations

While MCP holds immense promise, it's important to acknowledge the challenges and considerations associated with its adoption:

Maturity: As a relatively new protocol, MCP is still evolving. Its widespread adoption depends on community support and the availability of pre-built connectors.

Security: Security is paramount when connecting AI systems to data sources. MCP implementations need to adhere to security best practices to protect sensitive data.

Get Started with MCP

Ready to explore the world of MCP? Here's how to get started:

Install Pre-built MCP Servers: Explore and install pre-built MCP servers through platforms like GitHub, MCP.run, Smithery, and playbooks. You will find largely the same MCP servers on most of these platforms. Many developers implement MCP servers for different services and publish them as open source on GitHub. These servers provide immediate access to integrations with popular services.

Build Your First MCP Server: Follow the quickstart guide available on the Model Context Protocol website to build your own MCP server. This will give you a hands-on understanding of how the protocol works.

Contribute to Open-Source Repositories: Join the MCP community and contribute to open-source repositories of connectors and implementations. This is a great way to learn and help shape the future of MCP.

Use the SDKs: Leverage the available SDKs to simplify the development of MCP clients and servers.
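Before reaching for an SDK, it can help to see roughly what one handles for you. The following is a minimal sketch, in plain Python with no SDK, of a server loop for a stdio-style transport: one JSON-RPC message per line in, one per line out, starting with the `initialize` handshake. The `serve`, `handle`, and `error` functions, the `toy-server` and `toy-host` names, and the `echo` tool are hypothetical, and the protocol version string is an assumption; the official SDKs and the specification remain the authority on the actual message fields.

```python
import io
import json

# Assumed protocol revision string; check the specification for the current one.
PROTOCOL_VERSION = "2024-11-05"


def error(msg_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(msg: dict):
    """Return a response dict, or None for notifications (messages with no id)."""
    if "id" not in msg:
        return None  # e.g. notifications/initialized expects no reply
    method, params = msg["method"], msg.get("params", {})
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "toy-server", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": [{
            "name": "echo",
            "description": "Return the given text unchanged",
            "inputSchema": {
                "type": "object",
                "properties": {"text": {"type": "string"}},
                "required": ["text"],
            },
        }]}
    elif method == "tools/call":
        if params.get("name") != "echo":
            return error(msg["id"], -32602, "Unknown tool")
        text = params.get("arguments", {}).get("text", "")
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return error(msg["id"], -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def serve(reader, writer):
    """Read one JSON message per line and write one JSON response per line."""
    for line in reader:
        if line.strip():
            response = handle(json.loads(line))
            if response is not None:
                writer.write(json.dumps(response) + "\n")


# Demo with in-memory streams; a real stdio server would use sys.stdin and sys.stdout.
client_messages = [
    {"jsonrpc": "2.0", "id": 1, "method": "initialize",
     "params": {"protocolVersion": PROTOCOL_VERSION, "capabilities": {},
                "clientInfo": {"name": "toy-host", "version": "0.1.0"}}},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
    {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
     "params": {"name": "echo", "arguments": {"text": "hi"}}},
]
incoming = io.StringIO("".join(json.dumps(m) + "\n" for m in client_messages))
outgoing = io.StringIO()
serve(incoming, outgoing)
replies = [json.loads(line) for line in outgoing.getvalue().splitlines()]
print([r["id"] for r in replies])
```

Note that the notification produces no reply, so four incoming messages yield three responses. An SDK wraps this loop, schema generation, and error handling behind a much smaller surface, which is why the quickstart guide is the better starting point for real servers.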

Contributing to MCP

The Model Context Protocol is an open-source project, and contributions are highly encouraged. Whether you want to fix bugs, improve documentation, or propose new features, the contributing guide provides detailed information on how to get involved.

Resources

Here are some helpful resources to learn more about MCP:

Conclusion

The Model Context Protocol has the potential to transform the way AI models interact with the world. By providing a standardized and secure way to connect LLMs to external data sources and tools, MCP can unlock new levels of AI capabilities and drive innovation across various industries. As the protocol evolves and the community grows, we can expect to see even more exciting use cases and applications emerge.

Written by

Gaurav Dhiman

I am a software architect with around 20 years of software industry experience in varied domains, starting from kernel programming, enterprise software presales, cloud computing, scalable modern web app and big data science space. I love to explore, try and write about the latest technologies in web app development, data science, data engineering and artificial intelligence (esp. deep neural networks). I live in Phoenix, AZ with my sweet and caring family. To know more about me, please visit my website: https://gaurav-dhiman.com