The "Bitter Lesson" and "Fog of War" in Observability?

Praneet Sharma

I’ve been looking at Observability reference architectures for a long time. My opening question was always: “What tools do you all use?” Over time it turned into a question the industry at large still needs to learn to ask: “How do you use all these tools together?” So often, the answer boils down to: “Well, we get pinged or sometimes alerted, and then we look at logs here, look at logs there, look at logs…”

The “Bitter Lesson” as it concerns Observability

The “Bitter Lesson”, from Rich Sutton's 2019 essay of the same name, is an influential observation about how progress in machine learning has actually come about:

In the long run, general methods that leverage computation (like deep learning) outperform human-designed, specialized algorithms—even in domains where we think we have deep expertise.

Of course, the “bitterness” arises in people who have spent considerable time on carefully crafted processes and have grown attached to them. This happens quite often in the sciences (physicists becoming emotionally attached to theories). Observability practitioners suffer the same fate, in my opinion. Not because of the state of the environment, however, but because the commercial mantra has largely promoted a varied yet uniform, “perfect” set of telemetry. Maybe it's self-inflicted, forcing us to worry endlessly about what is or isn’t ingested or structured. From a field perspective, one painstakingly tries to promote an ideal collection of telemetry, only to find that people are fine with just logs.

The “Fog of War” in Observability

When I first deployed tracing, I felt the power of what I thought of as “automatic and structured logging”. I saw spans as efficient structures where I could stash metadata for whatever analytical purpose I had in mind. What I should have realized at that point: this is what happens when you stick to a consistent pattern in your observability strategy. I was fortunate to have done this early. The temptation to collect extraneous data points via other ingestion frameworks wasn’t there. I was fine “extending my events”.
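To make “extending my events” a bit more concrete, here is a minimal sketch of a span treated as one timed, structured event that you keep attaching metadata to. It uses only the Python standard library. The `Span` and `Tracer` classes and the attribute names (`user_tier`, `retries`) are hypothetical, made up for illustration; this is not the API of any particular tracing library.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class Span:
    """Hypothetical minimal span: one timed event plus free-form attributes."""
    name: str
    trace_id: str
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    parent_id: Optional[str] = None
    attributes: Dict[str, Any] = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0

    def set_attribute(self, key: str, value: Any) -> None:
        # "Extending the event": new metadata goes on the span,
        # not into a separate log line or a separate pipeline.
        self.attributes[key] = value


class Tracer:
    """Hypothetical in-memory tracer that keeps finished spans in a list."""

    def __init__(self) -> None:
        self.finished: List[Span] = []
        self._stack: List[Span] = []

    @contextmanager
    def span(self, name: str, **attributes: Any) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        current = Span(
            name=name,
            # Child spans share the parent's trace id; a root span gets a new one.
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            parent_id=parent.span_id if parent else None,
            attributes=dict(attributes),
        )
        current.start = time.perf_counter()
        self._stack.append(current)
        try:
            yield current
        except Exception as exc:
            # Failures become metadata on the same event.
            current.set_attribute("error", repr(exc))
            raise
        finally:
            current.end = time.perf_counter()
            self._stack.pop()
            self.finished.append(current)


tracer = Tracer()
with tracer.span("checkout", user_tier="gold"):
    with tracer.span("charge_card") as child_span:
        child_span.set_attribute("retries", 2)

for s in tracer.finished:
    print(s.name, s.parent_id is not None, s.attributes)
```

The point of the sketch is the pattern rather than the classes: every new question gets answered by adding an attribute to an event that already carries timing and parent/child context, instead of by standing up another ingestion path.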

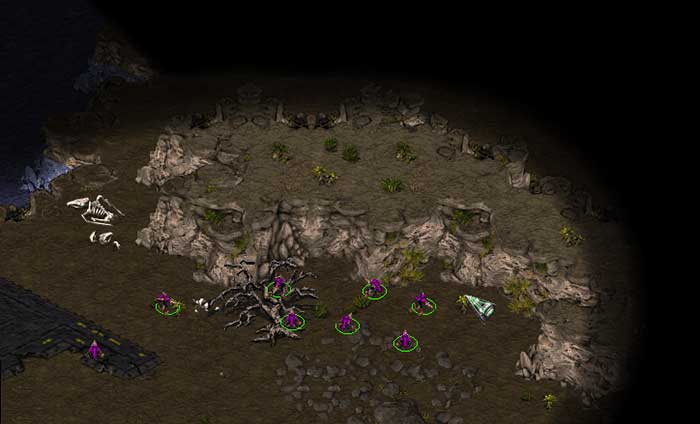

From a game-theory perspective, this is analogous to illuminating the “fog of war” in RTS (real-time strategy) games.

As is common in these games, players regularly have to send out “scouts” or unit groups to explore and pathfind. I was doing this in my own way for my services. Consequently, we must accept that individuals and groups at enterprises (small and large) do this in their own way, and we must ultimately be considerate of that.

Regardless of how this fog is being removed, we are at an interesting moment. In our RTS scenario, a constant need to explore can be mentally taxing and can cost us units during the game. In observability, there is a compounding cognitive toll from querying and reading. Is there a way to keep the “fog” from reappearing altogether? To explore everything in parallel?

*I want to highlight that a diverse group of units is a good strategy for combating opposing players in RTS, but that’s more akin to looking at this from a security perspective.
