Part 6: Persisting Data on EKS with EBS, EFS CSI Drivers & OIDC Integration (Terraform + Helm)

Neamul Kabir Emon

In this article, I’m sharing a real-world challenge I faced while deploying stateful applications on Amazon EKS—and how I solved it by configuring:

EBS CSI Driver for ReadWriteOnce access

EFS CSI Driver for ReadWriteMany access

OIDC Integration for secure IAM role bindings using IRSA

All automated with Terraform and Helm.

⚠️ The Challenge

At one point in my production cluster setup, I noticed:

Pods using EBS volumes would get stuck in Pending state, especially if they moved between nodes.

Sharing a volume across pods didn’t work as expected, because EBS volumes are ReadWriteOnce only.

IAM permissions for CSI drivers were messy to manage manually.

OIDC was not enabled, so IRSA (IAM Roles for Service Accounts) didn’t work either.

These problems made running stateful apps frustrating. That’s when I decided to set it up the right way—using IaC tools.

✅ The Solution

To overcome these limitations, I:

Enabled OIDC on the EKS cluster (required for IRSA-based service accounts)

Configured the EBS CSI Driver for ReadWriteOnce (ideal for StatefulSets)

Set up the EFS CSI Driver to support ReadWriteMany volumes across pods/nodes

Used Terraform + Helm for fully automated provisioning

📌 If You Haven’t Followed Earlier Parts…

Start with foundational components to build your EKS cluster right:

🔗 Part 2: Install Metrics Server for HPA

🔗 Part 5: NGINX Ingress Controller with Cert Manager & HTTPS

📁 GitHub Repository: terraform-eks-production-cluster

Clone this repository and start with the project.

Part 1: Set Up EBS CSI Driver (ReadWriteOnce)

When you try to deploy a StatefulSet on EKS, you might see the pod stuck in Pending. The reason? You haven’t installed the EBS CSI Driver yet, which is required to provision EBS volumes dynamically.

Step 1 – Configure IAM Role for the CSI Driver

You’ll create an IAM role with the AmazonEBSCSIDriverPolicy attached, and associate it with a Kubernetes service account using Pod Identity.
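Pod Identity only delivers credentials to pods when the EKS Pod Identity Agent is running on the nodes. If an earlier part of this series has not already installed it, a minimal sketch could look like the following. The resource label `pod_identity` is my own placeholder, and the add-on version is left unset so EKS picks its default; pin it if you need reproducible applies.

```hcl
# Assumption: the cluster resource is named aws_eks_cluster.eks, as elsewhere in this article.
# The resource label "pod_identity" is a placeholder, not taken from the repository.
resource "aws_eks_addon" "pod_identity" {
  cluster_name = aws_eks_cluster.eks.name
  addon_name   = "eks-pod-identity-agent"
}
```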

Terraform:

data "aws_iam_policy_document" "ebs_csi_driver" {
  statement {
    effect = "Allow"

    principals {
      type        = "Service"
      identifiers = ["pods.eks.amazonaws.com"]
    }

    actions = [
      "sts:AssumeRole",
      "sts:TagSession"
    ]
  }
}

resource "aws_iam_role" "ebs_csi_driver" {
  name               = "${aws_eks_cluster.eks.name}-ebs-csi-driver"
  assume_role_policy = data.aws_iam_policy_document.ebs_csi_driver.json
}

resource "aws_iam_role_policy_attachment" "ebs_csi_driver" {
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"
  role       = aws_iam_role.ebs_csi_driver.name
}

# Optional: only if you want to encrypt the EBS drives
resource "aws_iam_policy" "ebs_csi_driver_encryption" {
  name = "${aws_eks_cluster.eks.name}-ebs-csi-driver-encryption"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "kms:Decrypt",
          "kms:GenerateDataKeyWithoutPlaintext",
          "kms:CreateGrant"
        ]
        Resource = "*"
      }
    ]
  })
}

# Optional: only if you want to encrypt the EBS drives
resource "aws_iam_role_policy_attachment" "ebs_csi_driver_encryption" {
  policy_arn = aws_iam_policy.ebs_csi_driver_encryption.arn
  role       = aws_iam_role.ebs_csi_driver.name
}

resource "aws_eks_pod_identity_association" "ebs_csi_driver" {
  cluster_name    = aws_eks_cluster.eks.name
  namespace       = "kube-system"
  service_account = "ebs-csi-controller-sa"
  role_arn        = aws_iam_role.ebs_csi_driver.arn
}

resource "aws_eks_addon" "ebs_csi_driver" {
  cluster_name             = aws_eks_cluster.eks.name
  addon_name               = "aws-ebs-csi-driver"
  addon_version            = "v1.30.0-eksbuild.1"
  service_account_role_arn = aws_iam_role.ebs_csi_driver.arn
}

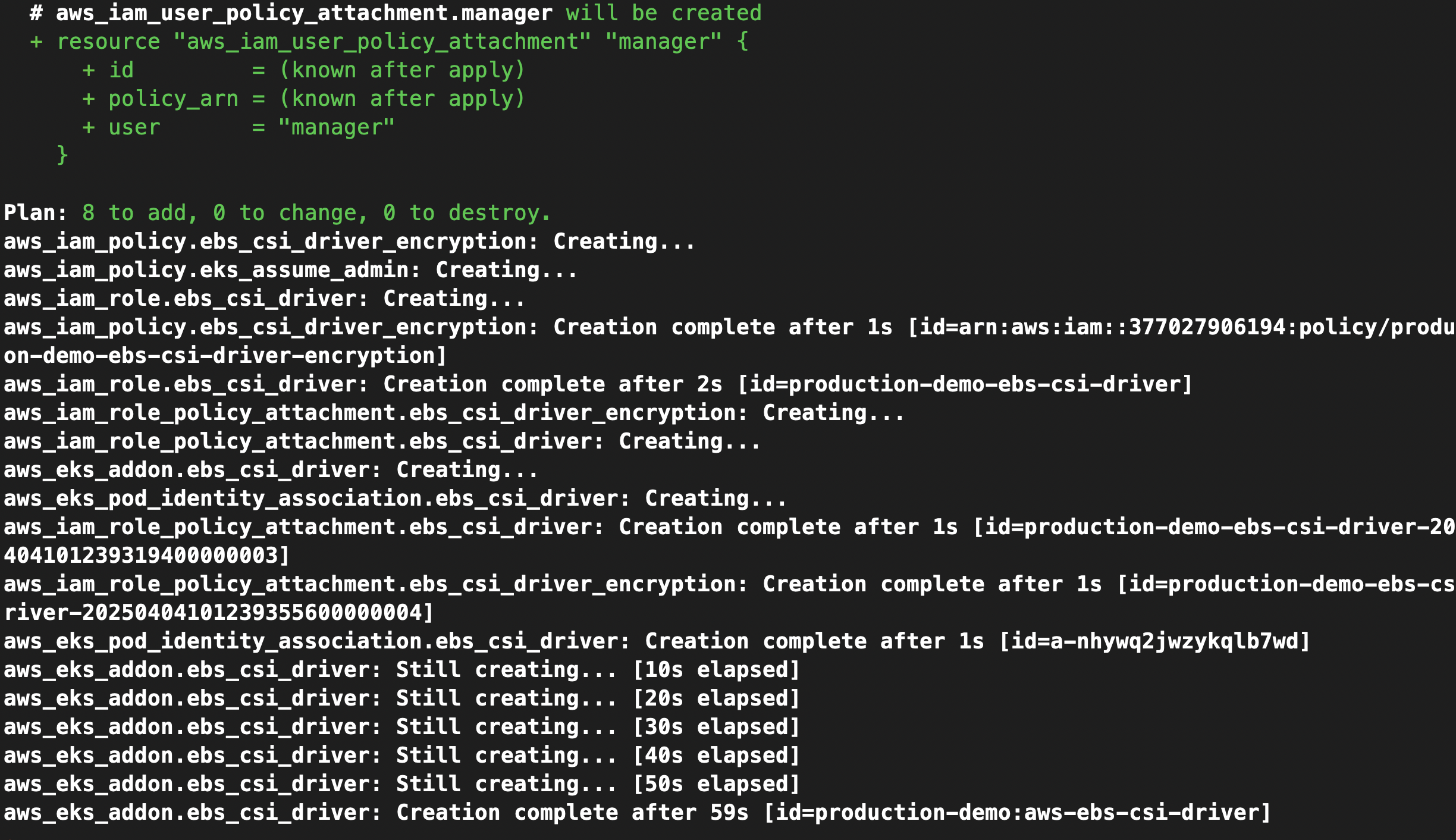

Then run:

terraform apply -auto-approve

Step 2 – Test EBS Volume with a StatefulSet

Let’s verify it works by deploying a basic StatefulSet app that requests a ReadWriteOnce EBS volume.

📁 Folder: 10-statefulset-persistent-storage

0-namespace.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: 10-example

1-statefulset.yaml

---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: myapp
  namespace: 10-example
spec:
  serviceName: nginx
  replicas: 1
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: aputra/myapp-195:v2
          ports:
            - name: http
              containerPort: 8080
          volumeMounts:
            - name: data
              mountPath: /data
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: [ReadWriteOnce]
        resources:
          requests:
            storage: 5Gi

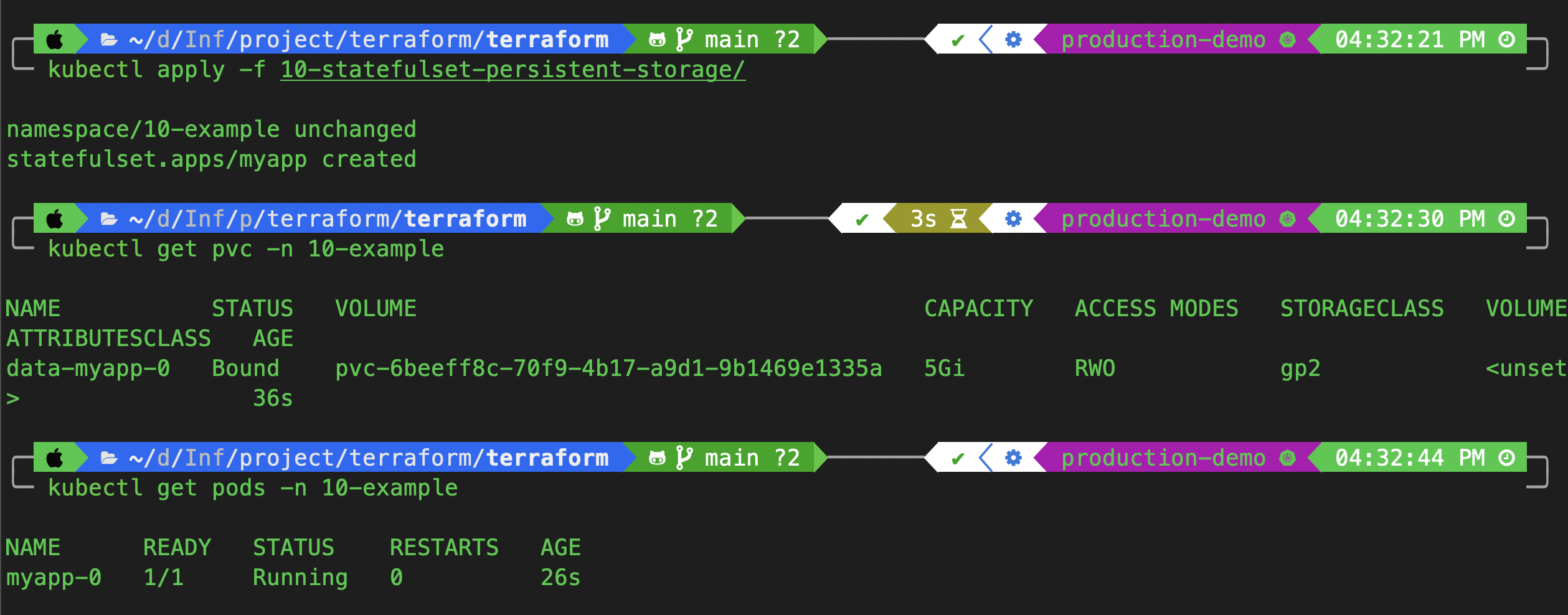

Apply the config

cd ..

kubectl apply -f 10-statefulset-persistent-storage/

Check:

kubectl get pvc -n 10-example

kubectl get pods -n 10-example

✅ Your pod should now be running, with an EBS volume attached dynamically!
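The StatefulSet above does not set a storageClassName, so it relies on whatever default StorageClass the cluster has. If you would rather pin the volume type and encryption explicitly, something along these lines could work. This is a sketch rather than part of the repository: the class name `ebs-gp3` is hypothetical, and it assumes a Terraform `kubernetes` provider is already configured against the cluster.

```hcl
# Hypothetical StorageClass; the name "ebs-gp3" is an assumption for illustration.
resource "kubernetes_storage_class_v1" "ebs_gp3" {
  metadata {
    name = "ebs-gp3"
  }

  storage_provisioner = "ebs.csi.aws.com"

  # Delay volume creation until the pod is scheduled, so the EBS volume
  # is created in the same Availability Zone as the node that runs the pod.
  volume_binding_mode    = "WaitForFirstConsumer"
  reclaim_policy         = "Delete"
  allow_volume_expansion = true

  parameters = {
    type      = "gp3"
    encrypted = "true"
  }

  # Only create the class once the EBS CSI driver add-on exists.
  depends_on = [aws_eks_addon.ebs_csi_driver]
}
```

To use it, add `storageClassName: ebs-gp3` under the `spec` of the volumeClaimTemplates entry. With no KMS key specified, encryption falls back to the account's default EBS key; the optional KMS policy shown earlier matters when you use a customer-managed key.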

Part 2: Set Up OIDC Provider (for IRSA)

In order to associate IAM roles with Kubernetes service accounts securely, you must enable OIDC on your EKS cluster.

Terraform config:

data "tls_certificate" "eks" {
  url = aws_eks_cluster.eks.identity[0].oidc[0].issuer
}

resource "aws_iam_openid_connect_provider" "eks" {
  client_id_list  = ["sts.amazonaws.com"]
  thumbprint_list = [data.tls_certificate.eks.certificates[0].sha1_fingerprint]
  url             = aws_eks_cluster.eks.identity[0].oidc[0].issuer
}
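To confirm the provider was created, and to reuse its values when writing IRSA trust policies, it can help to expose them as outputs. The output names below are my own choice, not something the repository defines.

```hcl
# Output names are illustrative assumptions.
output "oidc_provider_arn" {
  description = "ARN of the IAM OIDC provider, used as the Federated principal in IRSA trust policies"
  value       = aws_iam_openid_connect_provider.eks.arn
}

output "oidc_issuer_url" {
  description = "OIDC issuer URL of the EKS cluster"
  value       = aws_eks_cluster.eks.identity[0].oidc[0].issuer
}
```

After `terraform apply`, running `terraform output oidc_provider_arn` prints the ARN.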

📦 Part 3: Set Up EFS CSI Driver (ReadWriteMany)

EFS supports shared volumes across multiple pods and nodes. This is useful for web apps, ML workloads, or distributed systems.

Why EFS?

Supports ReadWriteMany (RWX)

Fully managed, elastic storage

Mountable from multiple pods across AZs

📌 But it’s more expensive than EBS, so use only when needed.

Step 1 – Provision EFS

resource "aws_efs_file_system" "eks" {
  creation_token   = "eks"
  performance_mode = "generalPurpose"
  throughput_mode  = "bursting"
  encrypted        = true

  # lifecycle_policy {
  #   transition_to_ia = "AFTER_30_DAYS"
  # }
}

resource "aws_efs_mount_target" "zone_a" {
  file_system_id  = aws_efs_file_system.eks.id
  subnet_id       = aws_subnet.private_zone1.id
  security_groups = [aws_eks_cluster.eks.vpc_config[0].cluster_security_group_id]
}

resource "aws_efs_mount_target" "zone_b" {
  file_system_id  = aws_efs_file_system.eks.id
  subnet_id       = aws_subnet.private_zone2.id
  security_groups = [aws_eks_cluster.eks.vpc_config[0].cluster_security_group_id]
}

data "aws_iam_policy_document" "efs_csi_driver" {
  statement {
    actions = ["sts:AssumeRoleWithWebIdentity"]
    effect  = "Allow"

    condition {
      test     = "StringEquals"
      variable = "${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:sub"
      values   = ["system:serviceaccount:kube-system:efs-csi-controller-sa"]
    }

    principals {
      identifiers = [aws_iam_openid_connect_provider.eks.arn]
      type        = "Federated"
    }
  }
}

resource "aws_iam_role" "efs_csi_driver" {
  name               = "${aws_eks_cluster.eks.name}-efs-csi-driver"
  assume_role_policy = data.aws_iam_policy_document.efs_csi_driver.json
}

resource "aws_iam_role_policy_attachment" "efs_csi_driver" {
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEFSCSIDriverPolicy"
  role       = aws_iam_role.efs_csi_driver.name
}

resource "helm_release" "efs_csi_driver" {
  name = "aws-efs-csi-driver"

  repository = "https://kubernetes-sigs.github.io/aws-efs-csi-driver/"
  chart      = "aws-efs-csi-driver"
  namespace  = "kube-system"
  version    = "3.0.3"

  set {
    name  = "controller.serviceAccount.name"
    value = "efs-csi-controller-sa"
  }

  set {
    name  = "controller.serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = aws_iam_role.efs_csi_driver.arn
  }

  depends_on = [
    aws_efs_mount_target.zone_a,
    aws_efs_mount_target.zone_b
  ]
}

# Optional since we already init helm provider (just to make it self contained)
data "aws_eks_cluster" "eks_v2" {
  name = aws_eks_cluster.eks.name
}

# Optional since we already init helm provider (just to make it self contained)
data "aws_eks_cluster_auth" "eks_v2" {
  name = aws_eks_cluster.eks.name
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.eks_v2.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.eks_v2.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.eks_v2.token
}

resource "kubernetes_storage_class_v1" "efs" {
  metadata {
    name = "efs"
  }

  storage_provisioner = "efs.csi.aws.com"

  parameters = {
    provisioningMode = "efs-ap"
    fileSystemId     = aws_efs_file_system.eks.id
    directoryPerms   = "700"
  }

  mount_options = ["iam"]

  depends_on = [helm_release.efs_csi_driver]
}

Terraform creates:

EFS file system

Two mount targets in private subnets (multi-AZ)

IAM Role for CSI controller using IRSA

Helm then deploys the driver with the IRSA role annotation set on the controller service account.
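The trust policy above matches only on the `sub` claim. AWS's IRSA guidance also checks the `aud` claim, so a slightly stricter variant might look like this. It is a sketch under the same naming assumptions as the rest of this article; the data source label `efs_csi_driver_strict` and the local `oidc_host` are hypothetical, and you would point the role's `assume_role_policy` at this document if you adopt it.

```hcl
locals {
  # Issuer host without the scheme, which is how IAM names the condition keys.
  # "oidc_host" is a hypothetical name introduced for this example.
  oidc_host = replace(aws_iam_openid_connect_provider.eks.url, "https://", "")
}

data "aws_iam_policy_document" "efs_csi_driver_strict" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.eks.arn]
    }

    # Only the EFS CSI controller service account may assume the role.
    condition {
      test     = "StringEquals"
      variable = "${local.oidc_host}:sub"
      values   = ["system:serviceaccount:kube-system:efs-csi-controller-sa"]
    }

    # The token must have been issued for AWS STS.
    condition {
      test     = "StringEquals"
      variable = "${local.oidc_host}:aud"
      values   = ["sts.amazonaws.com"]
    }
  }
}
```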

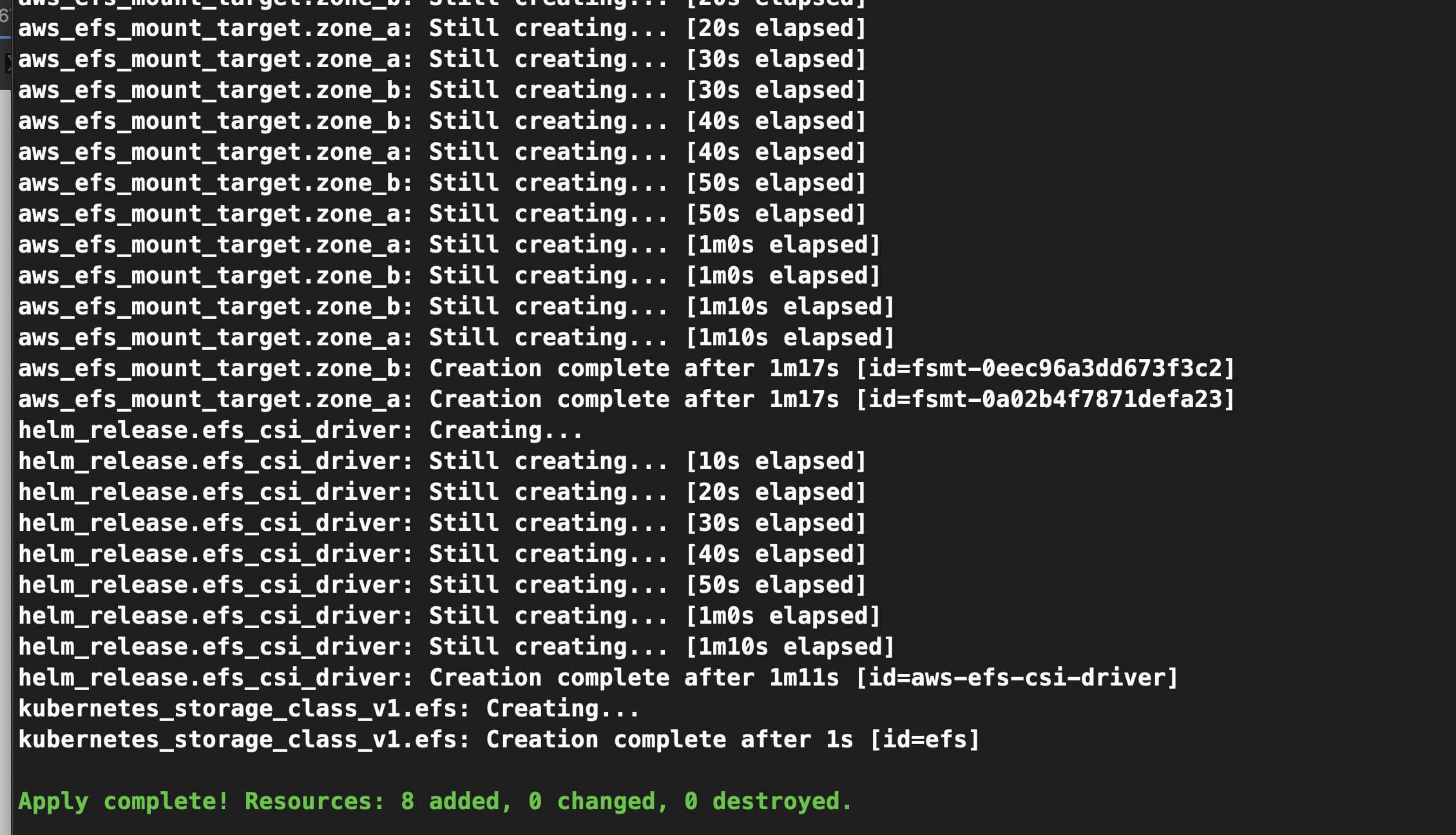

Apply it:

terraform init

terraform apply -auto-approve

This installs the EFS CSI driver in the cluster.

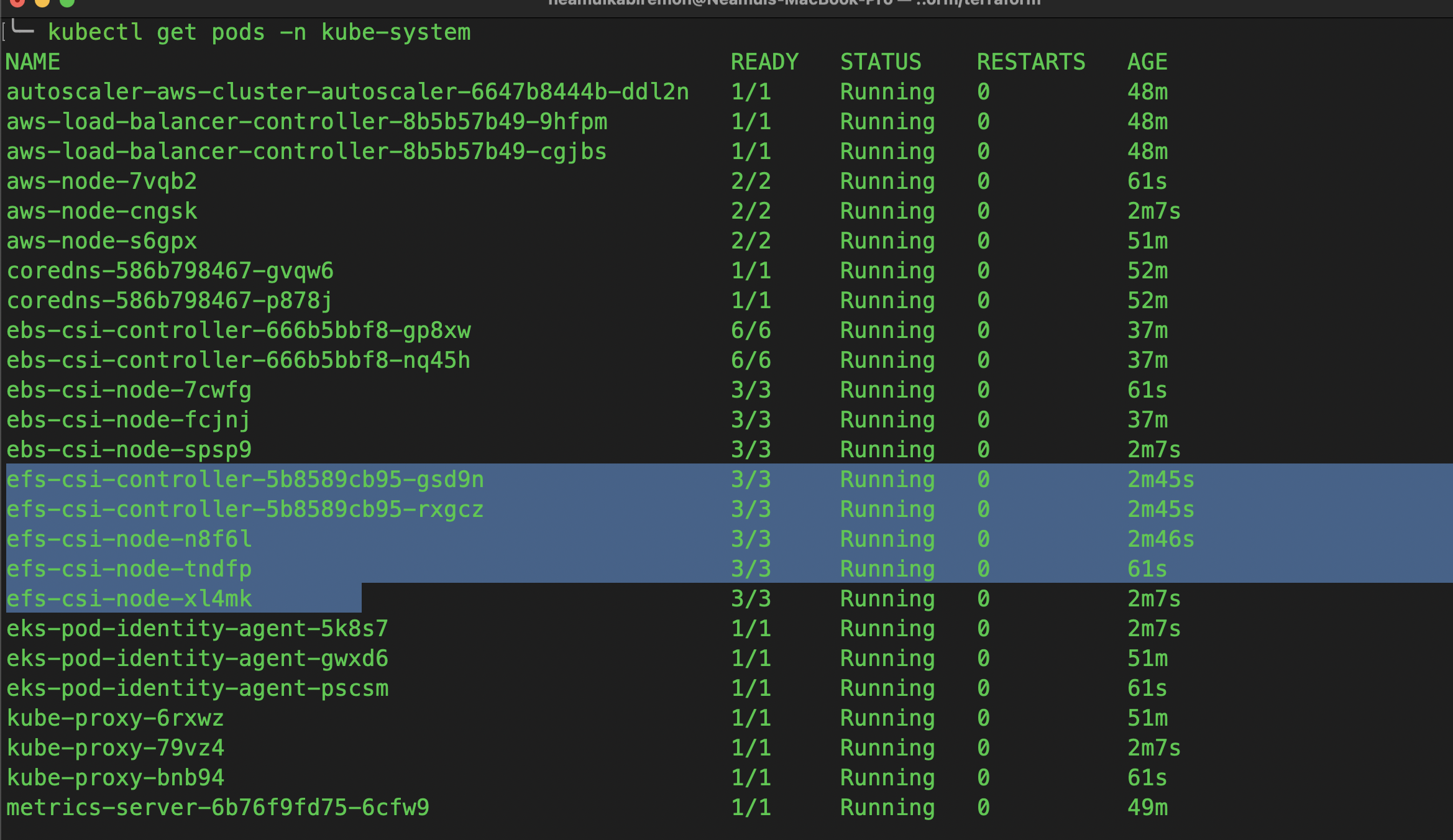

List the pods to verify:

kubectl get pods -n kube-system

You should now see EFS CSI driver pods running in kube-system.

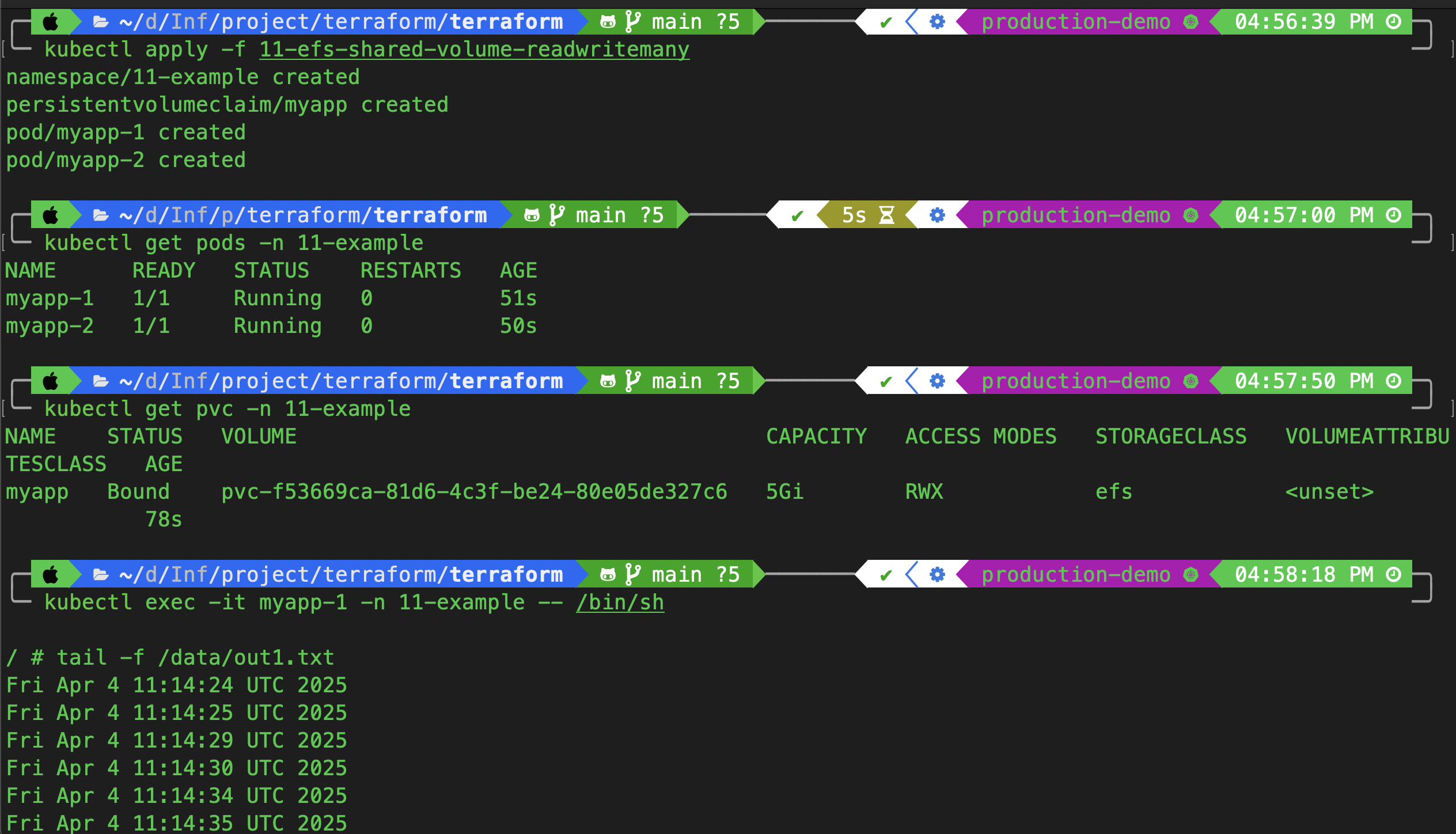

Step 2 – Create a Shared Volume Test with RWX

Let’s test with two pods writing to the same file in /data.

📁 Folder: 11-efs-shared-volume-readwritemany

0-namespace.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: 11-example

1-pvc.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myapp
  namespace: 11-example
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs
  resources:
    requests:
      storage: 5Gi # Required field, but EFS is elastic and ignores the size

2-pods.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: myapp-1
  namespace: 11-example
spec:
  containers:
    - name: myapp-1
      image: busybox
      command: ["/bin/sh"]
      args:
        [
          "-c",
          "while true; do echo $(date -u) >> /data/out1.txt; sleep 5; done",
        ]
      volumeMounts:
        - name: persistent-storage
          mountPath: /data
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: myapp
---
apiVersion: v1
kind: Pod
metadata:
  name: myapp-2
  namespace: 11-example
spec:
  containers:
    - name: myapp-2
      image: busybox
      command: ["/bin/sh"]
      args:
        [
          "-c",
          "while true; do echo $(date -u) >> /data/out1.txt; sleep 5; done",
        ]
      volumeMounts:
        - name: persistent-storage
          mountPath: /data
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: myapp

Apply the config:

kubectl apply -f 11-efs-shared-volume-readwritemany/

kubectl get pods -n 11-example

Verify the file /data/out1.txt from both pods—it should be updating in real-time from both sources.

What You’ve Achieved

You now have:

✅ EBS volumes working with StatefulSets (RWO)

✅ OIDC + IRSA setup for secure IAM roles

✅ EFS volumes working across multiple pods (RWX)

✅ All fully automated via Terraform + Helm

Your cluster is now stateful, secure, and production-ready.

📢 What’s Coming in Part 7?

Next, we’ll integrate AWS Secrets Manager into EKS workloads—so you never have to hardcode credentials or API keys again.
