Building a Highly Available 3-Tier AWS Architecture with Multi-AZ RDS and NAT Gateway Redundancy

Alex Ndirangu

Introduction

In modern cloud architectures, high availability and fault tolerance are essential to ensure continuous service delivery. In this post, I will walk you through creating a highly available architecture on AWS, focusing on the integration of Amazon EC2, Auto Scaling, Elastic Load Balancer (ELB), RDS Multi-AZ deployment, and NAT Gateway redundancy.

This is a project I have been working on as part of my AWS Solutions Architect journey.

We'll design a solution that is fault-tolerant and resilient, leveraging multiple availability zones (AZs) and applying best practices to secure our infrastructure. At the end of this exercise, you'll understand how to set up and manage a robust cloud application architecture.

Let’s Build!

Core Architecture Overview

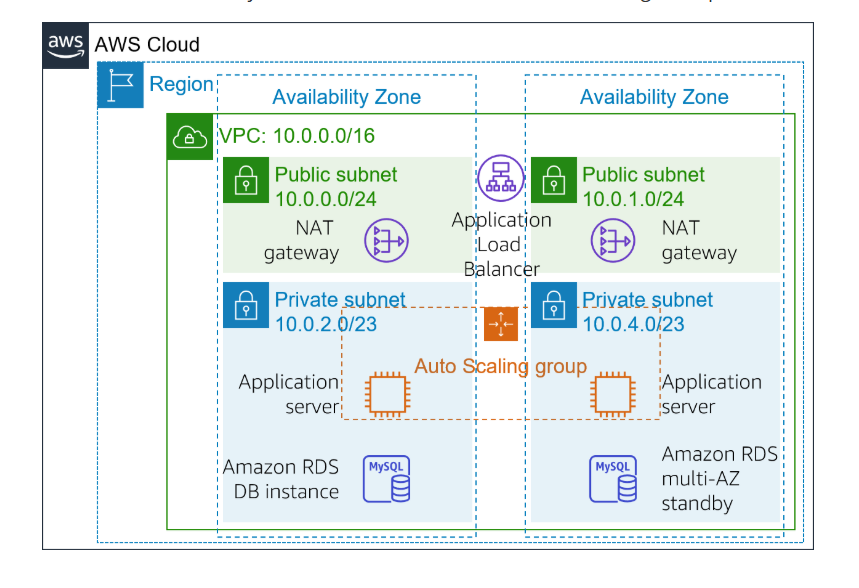

Our architecture consists of the following components:

Amazon EC2 instances running the web application.

Auto Scaling Groups (ASG) to ensure the application maintains the desired number of healthy instances.

Elastic Load Balancer (ELB) to distribute incoming traffic across instances.

RDS Multi-AZ deployment for the database layer to ensure high availability.

NAT Gateway redundancy so that private subnets keep outbound internet access even if one Availability Zone fails.

We’ll also walk through the following tasks in detail:

Setting up a highly available VPC with public and private subnets across two Availability Zones.

Configuring a load balancer that forwards HTTP/HTTPS traffic to EC2 instances.

Securing the application, database, and load balancer using security groups.

Ensuring high availability by deploying RDS in a Multi-AZ configuration.

Implementing NAT Gateway redundancy to ensure internet access for private subnets.

Step 1: Designing the VPC and Subnets

We start by creating a Virtual Private Cloud (VPC) with two public and two private subnets across two different availability zones. Public subnets host the load balancer, while private subnets house the application and database servers.

Public Subnets will contain the Load Balancer and NAT Gateways.

Private Subnets will host EC2 instances (web servers) and the Amazon RDS database.

VPC Configuration:

CIDR Block: 10.0.0.0/16

Public Subnets: 10.0.1.0/24 and 10.0.2.0/24

Private Subnets: 10.0.3.0/24 and 10.0.4.0/24
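Before creating anything, it can help to sanity-check the address plan. The sketch below uses only Python's standard `ipaddress` module and the CIDR blocks listed above. The subnet names and the `az-1`/`az-2` labels are my own placeholders, and the pairing of subnets to AZs is an assumption, since any split that puts one public and one private subnet in each AZ would work.

```python
import ipaddress

VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# CIDRs come from the configuration above. The names and AZ labels are
# placeholders (assumptions), not real AWS identifiers.
SUBNETS = {
    "public-1": ("10.0.1.0/24", "az-1"),
    "public-2": ("10.0.2.0/24", "az-2"),
    "private-1": ("10.0.3.0/24", "az-1"),
    "private-2": ("10.0.4.0/24", "az-2"),
}

# AWS reserves 5 addresses in every subnet, so a /24 has 251 usable ones.
AWS_RESERVED_PER_SUBNET = 5


def validate_plan(vpc, subnets):
    """Return a list of problems found in the subnet plan (empty if none)."""
    problems = []
    nets = {name: ipaddress.ip_network(cidr) for name, (cidr, _) in subnets.items()}

    # Every subnet must sit inside the VPC range.
    for name, net in nets.items():
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside {vpc}")

    # No two subnets may overlap.
    names = sorted(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                problems.append(f"{first} overlaps {second}")

    # Each tier should be spread over at least two AZs.
    for tier in ("public", "private"):
        azs = {az for name, (_, az) in subnets.items() if name.startswith(tier)}
        if len(azs) < 2:
            problems.append(f"{tier} tier covers only {len(azs)} AZ(s)")
    return problems


def usable_hosts(cidr):
    """Usable addresses in an AWS subnet after the 5 reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET
```

Calling `validate_plan(VPC_CIDR, SUBNETS)` on the plan above returns an empty list, which is what we want before moving on.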

Step 2: Deploying the Load Balancer and EC2 Instances

We set up an Application Load Balancer (ALB) in the public subnets that listens for HTTP and HTTPS traffic. The ALB will distribute traffic across multiple EC2 instances that reside in the private subnets.

The ALB forwards requests to the EC2 instances managed by an Auto Scaling Group (ASG), which dynamically adjusts the number of running instances based on traffic demand.

Key Steps for ALB:

Security Groups: Configured the load balancer security group to allow incoming HTTP/HTTPS traffic from the internet.

Target Group: Created a target group to forward requests to EC2 instances in the private subnets.

Listeners and Routing: Set up listeners on port 80 (HTTP) and 443 (HTTPS) to forward traffic to the target group, which then sends it to the application servers.
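I did these steps in the console, but the same target group and listeners could be described in code. Here is a rough sketch that only builds the keyword arguments for the boto3 `elbv2` calls `create_target_group` and `create_listener`. The function names, the `web-tg` name, and the `/` health check path are my assumptions, and every ARN or ID you pass in is a placeholder for your own.

```python
def target_group_params(vpc_id, name="web-tg"):
    """Keyword arguments for elbv2 create_target_group (name is an assumption)."""
    return {
        "Name": name,
        "Protocol": "HTTP",
        "Port": 80,
        "VpcId": vpc_id,
        "TargetType": "instance",
        "HealthCheckPath": "/",  # assumed health check path
    }


def listener_params(load_balancer_arn, target_group_arn, certificate_arn=None):
    """Keyword arguments for elbv2 create_listener, one dict per listener.

    The HTTPS listener is only included when a certificate ARN is given,
    because an HTTPS listener cannot be created without one.
    """
    forward = [{"Type": "forward", "TargetGroupArn": target_group_arn}]
    listeners = [
        {
            "LoadBalancerArn": load_balancer_arn,
            "Protocol": "HTTP",
            "Port": 80,
            "DefaultActions": forward,
        }
    ]
    if certificate_arn is not None:
        listeners.append(
            {
                "LoadBalancerArn": load_balancer_arn,
                "Protocol": "HTTPS",
                "Port": 443,
                "Certificates": [{"CertificateArn": certificate_arn}],
                "DefaultActions": forward,
            }
        )
    return listeners
```

With a real client you would pass each dict along, for example `elbv2.create_listener(**params)` where `elbv2 = boto3.client("elbv2")`.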

Step 3: Setting Up Auto Scaling for EC2 Instances

I then launched EC2 instances in the private subnets to host the application. These instances belong to an Auto Scaling Group (ASG) that keeps enough instances running to handle traffic.

Auto Scaling Configuration:

Launch Template: Used a launch template with the Amazon Machine Image (AMI) of the desired application stack.

Scaling Policies: Set policies to scale in/out based on traffic load. This means the number of instances automatically adjusts based on demand.

By using Auto Scaling, we ensure that the application remains responsive during traffic spikes and does not waste resources when traffic is low.
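To make the Auto Scaling setup more concrete, here is a minimal sketch of the parameters for the boto3 `autoscaling` calls `create_auto_scaling_group` and `put_scaling_policy`. I have not stated the exact policy above, so the target tracking policy on average CPU, the 50% target, the min/max sizes, and the 120 second grace period are all assumptions for illustration.

```python
def asg_params(name, launch_template_name, private_subnet_ids,
               target_group_arn, min_size=2, max_size=4):
    """Keyword arguments for autoscaling create_auto_scaling_group.

    Sizes and the grace period are illustrative assumptions.
    """
    return {
        "AutoScalingGroupName": name,
        "LaunchTemplate": {
            "LaunchTemplateName": launch_template_name,
            "Version": "$Latest",
        },
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": min_size,
        # Listing both private subnets spreads instances across both AZs.
        "VPCZoneIdentifier": ",".join(private_subnet_ids),
        "TargetGroupARNs": [target_group_arn],
        # Use the load balancer's health checks, not just EC2 status checks.
        "HealthCheckType": "ELB",
        "HealthCheckGracePeriod": 120,
    }


def cpu_target_tracking_policy(asg_name, target_cpu_percent=50.0):
    """Keyword arguments for autoscaling put_scaling_policy.

    Scales in and out to hold average CPU near the target (assumed 50%).
    """
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": target_cpu_percent,
        },
    }
```

Keeping the minimum at two instances (one per AZ) is what lets the application survive the loss of a single instance or AZ while a replacement launches.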

Step 4: Securing the Architecture with Security Groups

We now configure security groups to enforce a layered security approach:

The Load Balancer Security Group allows all inbound HTTP and HTTPS traffic.

The Application Server Security Group allows inbound HTTP traffic only from the Load Balancer's security group.

The Database Security Group allows traffic from the application servers on port 3306 (MySQL) but denies external internet traffic.
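The three rules above chain together: each tier only accepts traffic from the tier in front of it. A small sketch of that chain, using the `IpPermissions` shape that the boto3 `ec2` call `authorize_security_group_ingress` expects. The helper names and the security group IDs in the usage note are placeholders I made up.

```python
def tcp_rule(port, cidr=None, source_sg=None):
    """One IpPermissions entry allowing TCP on a single port."""
    rule = {"IpProtocol": "tcp", "FromPort": port, "ToPort": port}
    if cidr is not None:
        rule["IpRanges"] = [{"CidrIp": cidr}]
    if source_sg is not None:
        # Referencing a security group instead of a CIDR means only
        # resources attached to that group can connect.
        rule["UserIdGroupPairs"] = [{"GroupId": source_sg}]
    return rule


def layered_ingress(lb_sg, app_sg, db_sg):
    """Map each security group ID to its inbound rules."""
    return {
        lb_sg: [tcp_rule(80, cidr="0.0.0.0/0"), tcp_rule(443, cidr="0.0.0.0/0")],
        app_sg: [tcp_rule(80, source_sg=lb_sg)],
        db_sg: [tcp_rule(3306, source_sg=app_sg)],
    }


def open_to_internet(rules):
    """True if any rule in the list accepts traffic from 0.0.0.0/0."""
    return any(
        ip_range.get("CidrIp") == "0.0.0.0/0"
        for rule in rules
        for ip_range in rule.get("IpRanges", [])
    )
```

For example, `layered_ingress("sg-lb", "sg-app", "sg-db")` gives rules where only `sg-lb` is open to the internet. Security groups deny everything that is not explicitly allowed, so the database needs no separate "deny" rule.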

Step 5: Enabling High Availability with RDS Multi-AZ Deployment

To ensure database availability, we configure Amazon RDS for Multi-AZ deployment. This setup automatically provisions a standby replica of the database in another availability zone, providing automatic failover in case of a primary database failure.

RDS Configuration:

MySQL Version: 5.7

VPC Security Group: Configured to only allow connections from the application servers.

Multi-AZ Deployment: To improve availability, I deployed the RDS instance in a Multi-AZ configuration, where Amazon RDS automatically replicates data to a standby instance in a different AZ.
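For reference, the RDS settings above roughly correspond to the following parameters for the boto3 `rds` call `create_db_instance`. This is a sketch, not the exact configuration I used: the instance class, storage size, and `admin` username are assumptions, and depending on your region RDS may expect a full engine version such as a specific 5.7.x release rather than just `5.7`.

```python
def multi_az_mysql_params(identifier, db_subnet_group, db_sg_id,
                          engine_version="5.7"):
    """Keyword arguments for rds create_db_instance (sizes are assumptions)."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": "mysql",
        "EngineVersion": engine_version,
        "DBInstanceClass": "db.t3.micro",  # assumed instance class
        "AllocatedStorage": 20,            # assumed size in GiB
        "MasterUsername": "admin",         # assumed username
        # Let RDS generate the password and keep it in Secrets Manager
        # instead of hardcoding one here.
        "ManageMasterUserPassword": True,
        # This flag is what creates the standby in a second AZ.
        "MultiAZ": True,
        # The subnet group should contain both private subnets.
        "DBSubnetGroupName": db_subnet_group,
        "VpcSecurityGroupIds": [db_sg_id],
        "PubliclyAccessible": False,
    }
```

The important lines for availability are `MultiAZ` and the subnet group: the standby can only be placed in another AZ if the subnet group spans at least two of them.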

Step 6: Implementing NAT Gateway Redundancy

To allow our private EC2 instances to access the internet (e.g., for updates), we need to route traffic through a NAT Gateway. However, a single NAT Gateway in one AZ introduces a potential single point of failure. To resolve this, we deploy NAT Gateway redundancy:

Create a Second NAT Gateway: Deploy a second NAT Gateway in the second public subnet (Public Subnet 2).

Update Route Tables: Modify the route tables for each private subnet to route internet-bound traffic through the appropriate NAT Gateway, ensuring redundancy in case one AZ experiences a failure.

NB: A single route table cannot contain two routes with the same destination, so you cannot add two 0.0.0.0/0 routes to it. When I tried, I got the error “Duplicate Route in Destination”. Each private subnet therefore needs its own route table, with its 0.0.0.0/0 route pointing at the NAT Gateway in the same AZ.
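The routing idea is easier to see in code: one route table per private subnet, each with exactly one default route to the NAT Gateway in its own AZ. The sketch below builds the keyword arguments for the boto3 `ec2` call `create_route`. The function name and all IDs and AZ labels in the usage note are made-up placeholders.

```python
DEFAULT_ROUTE = "0.0.0.0/0"


def plan_default_routes(private_route_tables, nat_by_az):
    """Build one default route per private route table.

    private_route_tables: {route_table_id: az} for the private subnets.
    nat_by_az: {az: nat_gateway_id}, one NAT Gateway per AZ.
    Returns a list of keyword-argument dicts for ec2 create_route.
    """
    routes = []
    for route_table_id, az in private_route_tables.items():
        if az not in nat_by_az:
            raise ValueError(f"no NAT Gateway in {az} for {route_table_id}")
        routes.append(
            {
                "RouteTableId": route_table_id,
                # Exactly one default route per table; a second one with
                # the same destination would be rejected.
                "DestinationCidrBlock": DEFAULT_ROUTE,
                # Same-AZ NAT Gateway, so an outage in the other AZ
                # does not cut off this subnet.
                "NatGatewayId": nat_by_az[az],
            }
        )
    return routes
```

For example, `plan_default_routes({"rtb-private-1": "az-1", "rtb-private-2": "az-2"}, {"az-1": "nat-1", "az-2": "nat-2"})` yields two routes, one per table, each pointing at a different NAT Gateway.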

Step 7: Monitoring and Maintenance

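After the application was up and running, I set up CloudWatch Alarms to monitor key metrics like CPU utilization, memory usage, and disk I/O for the EC2 instances. Note that EC2 does not publish memory usage to CloudWatch by default; it has to be collected with the CloudWatch agent.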
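As an illustration of one such alarm, here is a sketch of the parameters for the boto3 `cloudwatch` call `put_metric_alarm`, watching average CPU across the Auto Scaling Group. The 80% threshold, the two 5-minute evaluation periods, and the alarm name are assumptions, not the exact values I configured.

```python
def high_cpu_alarm_params(asg_name, threshold_percent=80.0, sns_topic_arn=None):
    """Keyword arguments for cloudwatch put_metric_alarm (values assumed)."""
    params = {
        "AlarmName": f"{asg_name}-high-cpu",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        # Aggregate over every instance in the Auto Scaling Group.
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg_name}],
        "Statistic": "Average",
        "Period": 300,           # seconds per data point
        "EvaluationPeriods": 2,  # must breach for two periods in a row
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }
    if sns_topic_arn is not None:
        # Optional notification target, e.g. an SNS topic you own.
        params["AlarmActions"] = [sns_topic_arn]
    return params
```

With a real client this would be `cloudwatch.put_metric_alarm(**high_cpu_alarm_params("web-asg"))`, where `web-asg` stands in for your own group name.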

Step 8: Testing the Architecture

Now that we have everything configured, we can test the system’s functionality and high availability.

Test Load Balancer: By accessing the DNS of the ALB, we can verify that it properly forwards requests to the EC2 instances and that the application is functioning as expected.

Test Auto Scaling: By terminating an EC2 instance manually, we simulate a failure. The ALB should reroute traffic to the remaining healthy instance, and EC2 Auto Scaling will launch a replacement instance to maintain the desired capacity.

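Test Database Failover: If the primary RDS instance fails, RDS should fail over to the standby automatically. The database endpoint stays the same, so the application needs no configuration change, although connections can drop briefly while the failover completes. One way to trigger this on purpose is to reboot the instance with the failover option.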
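While running these tests, it is handy to have something hitting the load balancer continuously so you can see whether any requests fail. Below is a minimal probe using only the Python standard library. The function names are my own, and the URL you pass in is whatever DNS name your ALB was given.

```python
import time
import urllib.request


def http_ok(url, timeout=3.0):
    """True if the URL answers with a 2xx or 3xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False


def probe(url, attempts=10, interval=1.0, check=http_ok, sleep=time.sleep):
    """Call check(url) repeatedly and summarise how many attempts failed.

    check and sleep are parameters so the loop can be tried out
    without a real endpoint.
    """
    results = []
    for attempt in range(attempts):
        results.append(bool(check(url)))
        if attempt < attempts - 1:
            sleep(interval)
    successes = results.count(True)
    return {
        "attempts": attempts,
        "failures": attempts - successes,
        "availability": successes / attempts,
    }
```

Start the probe against the ALB's DNS name, terminate an instance or trigger a database failover, and then check the failure count once it finishes.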

Conclusion

With the configuration of RDS Multi-AZ, NAT Gateway redundancy, and proper security groups, our application architecture is not only highly available but also resilient to failures. Whether it's an EC2 instance or an AZ failure, the system is designed to handle disruptions without affecting the service’s availability.

This architecture demonstrates how AWS can be leveraged to build a highly available, secure, and fault-tolerant application, providing robust solutions for businesses that require continuous uptime.

Hope you learned something. See you in the next one!
