How caching database can handle billions of requests

Muhammad yasir

Muhammad yasir

I was building a database similar to redis and after messing with research papers and Operating system specific books and what not and then i finally knew secret sauce is on the way.

Understanding LRU and LFU Caching: Exploring Concepts, Implementations, and Real-World Applications

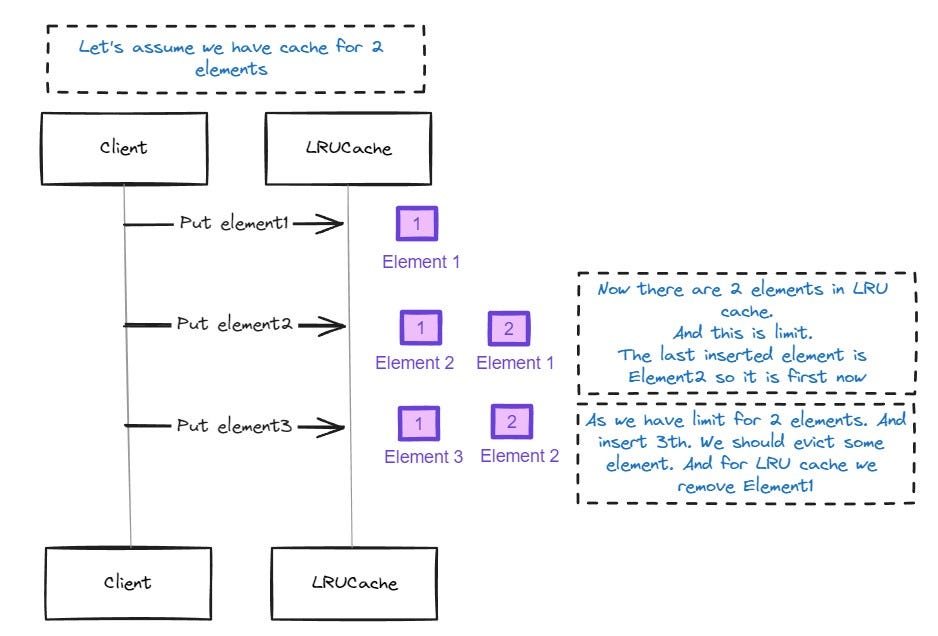

Caching is a fundamental technique used to improve the performance and efficiency of computer systems by storing frequently accessed data in a readily accessible location. Among the various caching strategies, Least Recently Used (LRU) and Least Frequently Used (LFU) are two of the most common and effective methods. These caching algorithms are widely adopted in numerous applications, from web browsers to database systems, to enhance speed and reduce latency.

In this article, we will delve deeply into the workings of LRU and LFU caching mechanisms. We’ll explore the theoretical foundations of each approach, highlighting their differences and the scenarios where each is most effective. Understanding these concepts is crucial for software developers and engineers who aim to optimize the performance of their applications.

you can this this man right here simple cache library.

Why Review LRU and LFU Caching?

They are used in almost not all but many caching databases and it is the gold that you can get in today modern world so stay calm and keep grinding.

Given the prevalence of caching in modern computing, a thorough understanding of LRU and LFU is essential. These algorithms are not only pivotal in everyday applications but also serve as a basis for more advanced caching techniques. By reviewing LRU and LFU, we can gain insights into:

Performance Optimization: Learn how these caching strategies can significantly reduce data retrieval times.

Resource Management: Understand how efficient caching can minimize memory usage and improve system resource allocation.

Implementation Challenges: Explore the common challenges faced during the implementation of these algorithms and how to overcome them.

Implementing LRU and LFU Caches

In this comprehensive review, we will provide detailed implementations of LRU and LFU caches. We’ll guide you through the process of coding these algorithms from scratch, ensuring that you grasp the underlying principles and logic. Additionally, we will discuss the importance of these implementations in real-world scenarios, helping you apply these concepts effectively in your projects.

Implementing an LRU (Least Recently Used) cache in Java can be done using various approaches. One of the most common methods is by using a combination of a LinkedHashMap and overriding its removeEldestEntry method to automatically remove the least recently accessed entries when the cache reaches its maximum capacity.

LRU

It is used by redis

Here’s a straightforward implementation of an LRU cache in C++ using LinkedHashMap:

#include #include #include <unordered_map>

template <typename K, typename V> class LRUCache { private: int capacity;

// Doubly linked list to maintain order (most recent at front) std::list<std::pair<K, V>> itemList;

// Map key to list iterator (for fast access and update) std::unordered_map<K, typename std::list<std::pair<K, V>>::iterator> itemMap;

public: LRUCache(int cap) : capacity(cap) {}

void put(K key, V value) { // If key exists, erase it from both map and list if (itemMap.find(key) != itemMap.end()) { itemList.erase(itemMap[key]); itemMap.erase(key); }

// Insert the new item at the front itemList.push_front({key, value}); itemMap[key] = itemList.begin();

// If over capacity, remove the least recently used item (back of the list) if (itemMap.size() > capacity) { auto last = itemList.end(); --last; itemMap.erase(last->first); itemList.pop_back(); } }

V get(K key) { if (itemMap.find(key) == itemMap.end()) { throw std::runtime_error("Key not found"); }

// Move the accessed item to the front of the list auto it = itemMap[key]; std::pair<K, V> kv = *it; itemList.erase(it); itemList.push_front(kv); itemMap[key] = itemList.begin();

return kv.second; }

void printCache() { std::cout << "Cache: "; for (const auto& pair : itemList) { std::cout << "[" << pair.first << " => " << pair.second << "] "; } std::cout << std::endl; } };

int main() { LRUCache<int, std::string> cache(3); cache.put(1, "one"); cache.put(2, "two"); cache.put(3, "three"); cache.printCache();

cache.get(1); // Access key 1 cache.printCache();

cache.put(4, "four"); // This should evict key 2 (least recently used) cache.printCache();

return 0; }

Explanation

Class Declaration: The LRUCache class extends LinkedHashMap. This class is generic, with K as the type for keys and V as the type for values.

Constructor: The constructor accepts an int parameter specifying the maximum capacity of the cache. The super(capacity, 0.75f, true) call initializes the LinkedHashMap with the specified capacity, a load factor of 0.75, and access order (the true parameter).

removeEldestEntry: This method is overridden to provide the logic for removing the eldest entry when the size of the map exceeds the specified capacity. The size() method returns the current size of the map, and the method returns true if the size is greater than the capacity, causing the eldest entry to be removed.

Main Method: The main method demonstrates the usage of the LRUCache. It creates an instance of LRUCache with a capacity of 3, adds some elements, accesses an element to change its order, and then adds another element to trigger the eviction of the least recently used element.

Key Points

Access Order: By setting the access order to true in the LinkedHashMap constructor, the map maintains the order based on the most recently accessed elements.

Eviction Policy: The removeEldestEntry method is called by LinkedHashMap after each put and putAll operation. If it returns true, the eldest entry is removed.

Capacity Management: This implementation ensures that the cache does not exceed the specified capacity, maintaining only the most recently accessed elements.

This implementation is efficient and leverages the built-in functionality of LinkedHashMap to provide a simple and effective LRU cache.

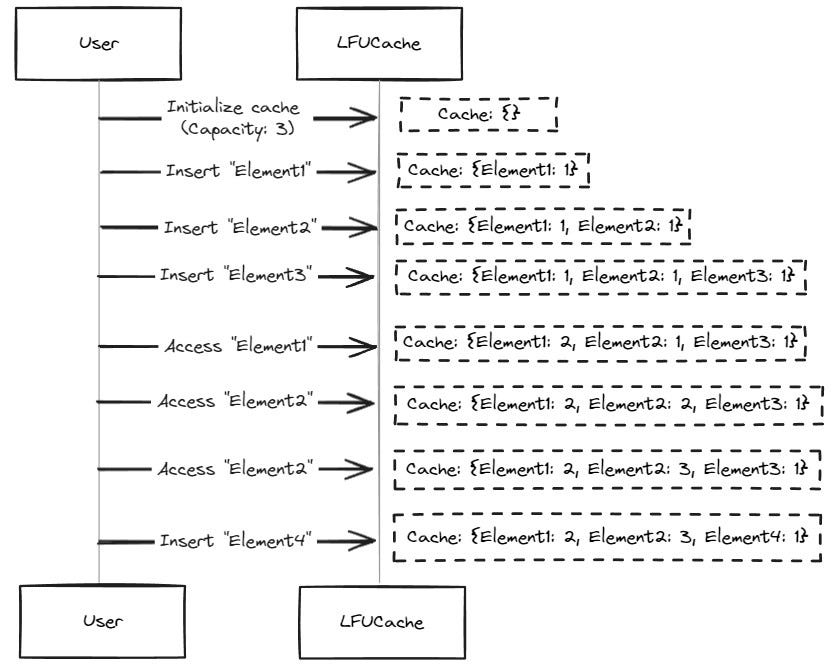

LFU

It is also used by redis and memcached nd also dragonfly uses it but with its own implementations and it is also used in Major Operating systems.

Implementing an LFU (Least Frequently Used) cache in Java is a bit more complex than an LRU cache because it requires tracking the frequency of access for each element. A common approach involves using a combination of data structures, such as a HashMap for storing the values and their frequencies, and a TreeMap or LinkedHashMap to maintain the frequency order.

Below is an example of an LFU cache implementation in C++:

#include #include <unordered_map> #include <unordered_set> #include #include

template<typename K, typename V> class LFUCache { private: int capacity; int minFrequency;

std::unordered_map<K, V> cache; // key -> value std::unordered_map<K, int> keyFrequency; // key -> frequency std::unordered_map<int, std::list> frequencyList; // frequency -> list of keys std::unordered_map<K, typename std::list::iterator> keyIterators; // key -> iterator in frequencyList

public: LFUCache(int cap) : capacity(cap), minFrequency(0) {}

V get(K key) { if (cache.find(key) == cache.end()) { return V(); // Return default value if not found }

int freq = keyFrequency[key];

// Remove from current frequency list frequencyList[freq].erase(keyIterators[key]);

if (frequencyList[freq].empty()) { frequencyList.erase(freq); if (minFrequency == freq) { minFrequency++; } }

// Add to next frequency list freq++; frequencyList[freq].push_back(key); keyIterators[key] = --frequencyList[freq].end(); keyFrequency[key] = freq;

return cache[key]; }

void put(K key, V value) { if (capacity <= 0) return;

if (cache.find(key) != cache.end()) { cache[key] = value; get(key); // Update frequency return; }

if (cache.size() >= capacity) { // Evict least frequently used key K evictKey = frequencyList[minFrequency].front(); frequencyList[minFrequency].pop_front();

if (frequencyList[minFrequency].empty()) { frequencyList.erase(minFrequency); }

cache.erase(evictKey); keyFrequency.erase(evictKey); keyIterators.erase(evictKey); }

// Insert new key cache[key] = value; keyFrequency[key] = 1; minFrequency = 1; frequencyList[1].push_back(key); keyIterators[key] = --frequencyList[1].end(); }

void printCache() { std::cout << "Cache: "; for (const auto& pair : cache) { std::cout << "[" << pair.first << " => " << pair.second << "] "; } std::cout << std::endl; } };

// Example usage int main() { LFUCache<int, std::string> lfuCache(3); lfuCache.put(1, "one"); lfuCache.put(2, "two"); lfuCache.put(3, "three"); std::cout << "Cache after adding 1, 2, 3: "; lfuCache.printCache();

lfuCache.get(1); lfuCache.get(1); lfuCache.get(2); std::cout << "Cache after accessing 1 twice and 2 once: "; lfuCache.printCache();

lfuCache.put(4, "four"); // Should evict key 3 std::cout << "Cache after adding 4 (evict key 3): "; lfuCache.printCache();

lfuCache.get(4); lfuCache.get(4); lfuCache.put(5, "five"); // Should evict key 2 std::cout << "Cache after adding 5 (evict key 2): "; lfuCache.printCache();

return 0; }

Explanation

Class Declaration: The LFUCache class uses generics with K for keys and V for values.

Constructor: Initializes the capacity, minimum frequency, and the necessary data structures (cache, keyFrequency, and frequencyList).

Data Structures:

cache: Stores the actual key-value pairs.

keyFrequency: Tracks the frequency of access for each key.

frequencyList: A map where the key is the frequency and the value is a LinkedHashSet of keys with that frequency.

get(K key): Retrieves the value associated with the key, updates the frequency of the key, and adjusts the frequencyList.

put(K key, V value): Adds a new key-value pair to the cache or updates an existing key. If the cache is at capacity, it evicts the least frequently used key. It then updates the frequency of the key.

Eviction Policy: When the cache reaches its capacity, the least frequently used key is removed. If multiple keys have the same frequency, the least recently added/used key among them is evicted (due to LinkedHashSet maintaining insertion order).

Main Method: Demonstrates the usage of the LFUCache. It adds elements to the cache, accesses them to change their frequencies, and adds more elements to trigger evictions.

This implementation ensures that the least frequently used items are evicted first and handles ties by evicting the least recently added/used items among those with the same frequency.

Utilizing Google Guava for Caching

If you need some ghost touch you can learn about google Guava which is used in its internal database at level db https://github.com/google/leveldb

After reading this article my assignment your you is to see leveldb source code and understand from bottom to top by how it works.

we can use Google Guava, a powerful open-source Java-based library. Google Guava provides a robust framework for caching, making it easier to implement and manage these algorithms in your applications. We will cover:

Setting Up Google Guava: Step-by-step instructions for integrating Guava into your project.

Implementing LRU and LFU: Practical examples and code snippets demonstrating how to use Guava for LRU and LFU caching.

Performance Considerations: Analysis of the performance benefits and trade-offs when using Guava for caching.

Google Guava provides a simple and efficient way to implement an LRU cache through its CacheBuilder class. Below is an example of how to implement an LRU cache using Google Guava.

Conclusion

By the end of this article, you will have a solid understanding how these caching databases work under the hood and LRU and LFU caching mechanisms, practical implementation skills, and the ability to leverage Google Guava for advanced caching solutions. Whether you are a novice programmer or an experienced developer, this in-depth review will enhance your knowledge and expertise in caching strategies, empowering you to optimize your applications effectively.

Subscribe to my newsletter

Read articles from Muhammad yasir directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by