Complete GitOps Journey: Automating Deployments from Code to EKS

Subroto Sharma

Table of contents

- What You'll Build

- Prerequisites

- Creating Your EKS Cluster

- Deploying Your Go Application

- Building and Publishing Your Docker Image

- Testing Your Deployment

- Setting Up GitHub Actions for Your CI/CD Pipeline

- Setting Up Argo CD for GitOps

- Testing Your Automated Pipeline

- Cleanup (When You're Done)

- What You've Accomplished

- Happy automating!

GITHUB REPO: https://github.com/subrotosharma/end-to-end-go-project

Introduction

Ready to transform your development workflow? In this guide, I'll walk you through creating a powerful end-to-end DevOps pipeline specifically designed for cloud-native applications. We'll start with a practical Go application, containerize it with Docker, deploy it to Amazon EKS, and implement a complete GitOps workflow.

My approach focuses on real-world implementation rather than just theory. By the end, you'll have a fully automated pipeline that takes your code from commit to production with minimal manual intervention.

What You'll Build

In this hands-on tutorial, I'll guide you through building this complete workflow:

Development: You'll write a Go application and prepare it for deployment

Containerization: Package the app using Docker with production-ready practices

Kubernetes: Deploy to Amazon EKS and manage with Kubernetes tools

GitOps: Implement continuous delivery with Argo CD and Helm

By implementing this pipeline, you'll experience how modern DevOps practices can streamline your development process, improve reliability, and speed up your deployment cycles.

Prerequisites

Before we begin, you'll need to set up these tools on your local machine:

1. kubectl

Install kubectl to interact with your Kubernetes cluster:

# On macOS with Homebrew
brew install kubectl

2. eksctl

The eksctl tool makes creating Amazon EKS clusters much simpler:

# On macOS with Homebrew
brew install eksctl

3. AWS CLI Setup

You'll need AWS credentials to create and manage AWS resources:

Install the AWS CLI from the official download page

Create a dedicated IAM user with these permissions:

Full access to Amazon EKS (typically granted through a custom policy that allows eks:*)

AmazonEC2FullAccess

AWSCloudFormationFullAccess

IAMFullAccess

Configure your credentials:

aws configure

Verify your setup works:

aws eks list-clusters

Note: Running an EKS cluster will incur AWS charges. For a free alternative, consider Minikube for local development.

Creating Your EKS Cluster

Let's provision our Kubernetes environment on AWS:

eksctl create cluster --name my-devops-cluster --region us-east-1

This process takes approximately 10 minutes as AWS creates:

A VPC with public and private subnets

EC2 instances for worker nodes

IAM roles and security groups

The EKS control plane

When complete, you'll see:

[✔] EKS cluster "my-devops-cluster" in "us-east-1" region is ready
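eksctl can also read the cluster definition from a ClusterConfig file (`eksctl create cluster -f cluster.yaml`), which is easier to keep in Git than a long command line. The Go sketch below renders a minimal config of that kind with `text/template`. It assumes one managed node group. The node group name, instance type and node count are hypothetical example values, not settings taken from this tutorial's cluster.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// clusterSpec holds the values substituted into the eksctl config file.
// NodeGroup, InstanceType and Nodes are hypothetical example values.
type clusterSpec struct {
	Name         string
	Region       string
	NodeGroup    string
	InstanceType string
	Nodes        int
}

// clusterTmpl is a minimal eksctl ClusterConfig with one managed node group.
const clusterTmpl = `apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: {{.Name}}
  region: {{.Region}}
managedNodeGroups:
  - name: {{.NodeGroup}}
    instanceType: {{.InstanceType}}
    desiredCapacity: {{.Nodes}}
`

// renderClusterConfig fills in the template and returns the YAML text.
func renderClusterConfig(s clusterSpec) (string, error) {
	t, err := template.New("cluster").Parse(clusterTmpl)
	if err != nil {
		return "", err
	}
	var b strings.Builder
	if err := t.Execute(&b, s); err != nil {
		return "", err
	}
	return b.String(), nil
}

func main() {
	cfg, err := renderClusterConfig(clusterSpec{
		Name:         "my-devops-cluster",
		Region:       "us-east-1",
		NodeGroup:    "workers",   // hypothetical
		InstanceType: "t3.medium", // hypothetical
		Nodes:        2,           // hypothetical
	})
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Redirect to cluster.yaml, then run: eksctl create cluster -f cluster.yaml
	fmt.Print(cfg)
}
```

Keeping the values in a struct means the same generator can produce configs for several environments.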

Deploying Your Go Application

Now let's deploy our sample Go application to Kubernetes:

1. Clone the sample repository:

git clone https://github.com/subrotosharma/end-to-end-go-project.git
cd end-to-end-go-project

2. Examine the Kubernetes manifests in k8s/manifests/:

deployment.yaml - Manages the application pods

service.yaml - Creates an internal network endpoint

ingress.yaml - Sets up external access

3. Apply the manifests:

kubectl apply -f k8s/manifests/

4. Check your deployment:

kubectl get pods,svc,ing

If you see ImagePullBackOff errors, your Docker image isn't available yet. Let's fix that next!
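Before building the image, it helps to picture what goes into it. The repository's actual application code is not reproduced here. The sketch below is a minimal Go web server of the kind this pipeline deploys. The `PORT` variable, the 8080 default and the `/healthz` path are all assumptions, so check them against the container port in deployment.yaml.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

// listenAddr returns ":"+PORT when the PORT variable is set and ":8080"
// otherwise. Both the variable and the default are assumptions; the port must
// match containerPort in deployment.yaml and targetPort in service.yaml.
func listenAddr(getenv func(string) string) string {
	if p := getenv("PORT"); p != "" {
		return ":" + p
	}
	return ":8080"
}

func main() {
	mux := http.NewServeMux()
	// Hypothetical health endpoint that Kubernetes liveness/readiness probes could call.
	mux.HandleFunc("/healthz", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "ok")
	})
	// Every other path returns a greeting.
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "Hello from Go on EKS")
	})

	addr := listenAddr(os.Getenv)
	log.Printf("listening on %s", addr)
	log.Fatal(http.ListenAndServe(addr, mux))
}
```

`listenAddr` takes the lookup function as a parameter, so it can be tested without touching real environment variables.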

Building and Publishing Your Docker Image

Let's containerize the application and make it available to Kubernetes:

Create a Docker Hub account if you don't have one

Build your Docker image:

docker buildx build --platform linux/amd64 \
  -t yourusername/go-app-devops:v1 --load .

Tip: The --platform flag builds the image for the target CPU architecture. This matters if you build on Apple Silicon (ARM) but deploy to x86 worker nodes.
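Docker's `--platform` value is an `os/arch` pair, the same pair Go exposes as `runtime.GOOS` and `runtime.GOARCH`. The sketch below prints your machine's platform and reports whether building for `linux/amd64` means a cross-architecture build. The helper names are hypothetical. Docker Desktop builds Linux images even on macOS and Windows, so only the architecture part is compared.

```go
package main

import (
	"fmt"
	"runtime"
	"strings"
)

// targetPlatform is the value passed to `docker buildx build --platform`.
const targetPlatform = "linux/amd64"

// hostPlatform formats GOOS/GOARCH the way Docker writes platforms (os/arch).
func hostPlatform(goos, goarch string) string {
	return goos + "/" + goarch
}

// needsCrossBuild reports whether the build machine's CPU architecture differs
// from the target's architecture (e.g. "amd64" in "linux/amd64").
func needsCrossBuild(hostArch, target string) bool {
	parts := strings.SplitN(target, "/", 3) // also handles "linux/arm/v7"
	if len(parts) < 2 {
		return true // malformed target: assume a cross-build to be safe
	}
	return parts[1] != hostArch
}

func main() {
	fmt.Println("host:", hostPlatform(runtime.GOOS, runtime.GOARCH))
	fmt.Println("target:", targetPlatform)
	fmt.Println("cross-build needed:", needsCrossBuild(runtime.GOARCH, targetPlatform))
}
```

On an Apple Silicon Mac this reports `darwin/arm64` and a cross-build. That is exactly the case the tip above warns about.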

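If docker buildx is missing or the build fails because the plugin isn't installed, install the buildx plugin manually.

First, create the plugin directory:

mkdir -p ~/.docker/cli-plugins

Next, download the docker-buildx binary for your OS and architecture from the docker/buildx releases page on GitHub. Save it as ~/.docker/cli-plugins/docker-buildx and make it executable with chmod +x.

Then check that the file is in place:

file ~/.docker/cli-plugins/docker-buildx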

Log in to Docker Hub and push your image:

docker login
docker push yourusername/go-app-devops:v1

Update the deployment manifest with your image:

# In deployment.yaml
image: yourusername/go-app-devops:v1

Redeploy the application:

kubectl delete -f k8s/manifests/deployment.yaml
kubectl apply -f k8s/manifests/deployment.yaml

Testing Your Deployment

To verify everything works:

1. Check pod status:

kubectl get pods

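2. Temporarily switch the service to NodePort for testing. Edit service.yaml as follows, then re-apply it with kubectl apply -f k8s/manifests/service.yaml:

# In service.yaml, under spec:
type: NodePort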

3. Get the assigned port:

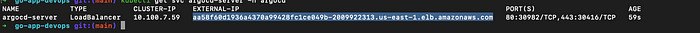

kubectl get svc

4. Find your worker node's public IP:

kubectl get nodes -o wide

5. Open your EC2 security group to allow inbound traffic on your NodePort

6. Access your application. In your browser, open the node's external IP followed by the NodePort, for example:

http://EC2-Public-IP:30114

Success! Your Go application is now running on Kubernetes in AWS.
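In the `kubectl get svc` output, the NodePort from step 3 appears in the PORT(S) column as `port:nodePort/protocol`, for example `80:30114/TCP`. The hypothetical helpers below turn that entry into the URL to open. They extract the node port, check that it falls in Kubernetes' default NodePort range (30000-32767), and join it with a node's external IP. The IP in `main` is a documentation placeholder.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
	"strings"
)

// nodePortFrom parses a PORT(S) entry such as "80:30114/TCP" and returns 30114.
func nodePortFrom(ports string) (int, error) {
	_, rest, ok := strings.Cut(ports, ":")
	if !ok {
		return 0, fmt.Errorf("no node port in %q (is the service a NodePort?)", ports)
	}
	num, _, _ := strings.Cut(rest, "/")
	p, err := strconv.Atoi(num)
	if err != nil {
		return 0, fmt.Errorf("bad node port in %q: %w", ports, err)
	}
	// Default kube-apiserver --service-node-port-range.
	if p < 30000 || p > 32767 {
		return 0, fmt.Errorf("port %d is outside the default NodePort range", p)
	}
	return p, nil
}

// appURL builds the address to open in the browser.
func appURL(nodeIP string, port int) string {
	return "http://" + net.JoinHostPort(nodeIP, strconv.Itoa(port))
}

func main() {
	p, err := nodePortFrom("80:30114/TCP")
	if err != nil {
		panic(err)
	}
	// 203.0.113.10 is a documentation placeholder; use your node's EXTERNAL-IP.
	fmt.Println(appURL("203.0.113.10", p))
}
```

Remember that the port must also be open in the worker nodes' security group (step 5), or the request will time out.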

What's Next?

In the next part, we'll automate this entire workflow by setting up:

GitHub Actions for CI/CD pipelines

Helm charts for Kubernetes configuration management

Argo CD for GitOps-style continuous delivery

NGINX Ingress Controller for proper external access

Automating Your DevOps Workflow: From CI/CD to GitOps with Kubernetes

In the first part of this guide, we built the foundation by setting up our infrastructure and deploying a Go application to Amazon EKS. Now let's take your DevOps pipeline to the next level by adding full automation through CI/CD, Helm, and GitOps practices.

Setting Up GitHub Actions for Your CI/CD Pipeline

Let's configure a seamless CI/CD pipeline using GitHub Actions that will automate all the manual work we did previously.

Creating Your Workflow File

In your repository, create a .github/workflows/ci.yml file with these three stages:

# CICD using GitHub actions

Setting Up GitHub Secrets

For this workflow to function properly, you'll need to add these secrets in your GitHub repository settings:

Go to your repository → Settings → Secrets and variables → Actions

Add the following secrets:

DOCKERHUB_USERNAME: Your Docker Hub username

DOCKERHUB_TOKEN: Your Docker Hub access token (create one in Docker Hub account settings)

TOKEN: A GitHub personal access token with repo scope

Now whenever you push to your main branch, GitHub Actions will:

Build your Go application as a Docker image

Tag it with a unique timestamp-based version

Push it to Docker Hub

Deploy: the final stage updates the image tag in the Helm chart's values.yaml and commits that change to the repository. Helm reads the tag from values.yaml when the chart is rendered. Once the change is synced to the cluster (Argo CD takes care of this, as set up below), your cluster runs the latest image.

Setting Up Argo CD for GitOps

Argo CD implements the GitOps pattern by continuously syncing your Git repository with your Kubernetes cluster.
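The core idea behind "continuously syncing" can be shown as a toy reconciliation step in Go. This is not Argo CD's implementation. It is a deliberately simplified model that reduces desired state (from Git) and live state (from the cluster) to maps from resource name to image. It then lists the actions that would make the cluster match Git. Resource names and images are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile compares desired state (from Git) with live state (from the
// cluster) and returns the actions that would bring the cluster in line.
// Both maps are resource name -> container image, a deliberate simplification.
func reconcile(desired, live map[string]string) []string {
	var actions []string
	for name, image := range desired {
		cur, ok := live[name]
		switch {
		case !ok:
			actions = append(actions, "create "+name+" "+image)
		case cur != image:
			actions = append(actions, "update "+name+" "+cur+" -> "+image)
		}
	}
	for name := range live {
		if _, ok := desired[name]; !ok {
			// In Argo CD, removing resources that left Git requires pruning to be enabled.
			actions = append(actions, "prune "+name)
		}
	}
	sort.Strings(actions) // map iteration order is random; sort for stable output
	return actions
}

func main() {
	desired := map[string]string{"go-app": "yourusername/go-app-devops:20240517093005"}
	live := map[string]string{"go-app": "yourusername/go-app-devops:v1"}
	for _, a := range reconcile(desired, live) {
		fmt.Println(a)
	}
}
```

Running this comparison in a loop, with Git as the source of truth, is what makes the cluster converge on whatever was last committed.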

Installing Argo CD

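kubectl create namespace argocd  # Create a dedicated namespace
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml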

Getting Your Login Credentials

# Get the initial admin password
kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d

The username is admin and the password is what you just decoded. Make sure to note this down.
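Kubernetes stores Secret values base64-encoded, which is why the password has to be decoded. The same decoding step in Go is sketched below. The encoded string is a made-up example, not a real Argo CD password, and the helper name is hypothetical.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"strings"
)

// decodeSecretValue decodes a value copied from a Secret's .data field.
// Surrounding whitespace (e.g. a trailing newline from copy-paste) is trimmed first.
func decodeSecretValue(encoded string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(strings.TrimSpace(encoded))
	if err != nil {
		return "", err
	}
	return string(raw), nil
}

func main() {
	// Made-up example value; substitute the output of the jsonpath query.
	pw, err := decodeSecretValue("ZXhhbXBsZS1wYXNzd29yZA==")
	if err != nil {
		panic(err)
	}
	fmt.Println(pw)
}
```

Base64 is an encoding, not encryption. Treat the initial password as sensitive and change it after your first login.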

Configuring Your Application in Argo CD

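Expose the argocd-server service through a LoadBalancer, for example with kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}'. Then open the Argo CD UI at the external address shown by kubectl get svc argocd-server -n argocd.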

Log in with your credentials

Click "New App" and configure:

Application Name: go-app

Project: default

Sync Policy: Automatic

Repository URL: Your GitHub repository URL

Path: helm

Cluster URL: https://kubernetes.default.svc (in-cluster)

Namespace: default

Once configured, Argo CD will continuously monitor your repository for changes and automatically apply them to your cluster. This means whenever GitHub Actions updates your Helm values with a new image tag, Argo CD will detect that change and deploy the new version.
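The same settings can also live in Git as a declarative Application resource instead of being entered in the UI. The Go sketch below renders one with `text/template`, using the field names of Argo CD's `argoproj.io/v1alpha1` Application. The repository URL is a placeholder. Tracking `HEAD` is an assumption. `automated: {}` corresponds to automatic sync without prune or self-heal.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// appSettings mirrors the fields entered in the Argo CD "New App" form.
type appSettings struct {
	Name, Project, RepoURL, Revision, Path, Server, Namespace string
}

// appTmpl is an Argo CD Application with automated sync (no prune/self-heal).
const appTmpl = `apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: {{.Name}}
  namespace: argocd
spec:
  project: {{.Project}}
  source:
    repoURL: {{.RepoURL}}
    targetRevision: {{.Revision}}
    path: {{.Path}}
  destination:
    server: {{.Server}}
    namespace: {{.Namespace}}
  syncPolicy:
    automated: {}
`

// renderApplication fills in the template and returns the manifest text.
func renderApplication(s appSettings) (string, error) {
	t, err := template.New("app").Parse(appTmpl)
	if err != nil {
		return "", err
	}
	var b strings.Builder
	if err := t.Execute(&b, s); err != nil {
		return "", err
	}
	return b.String(), nil
}

func main() {
	out, err := renderApplication(appSettings{
		Name:      "go-app",
		Project:   "default",
		RepoURL:   "https://github.com/yourusername/end-to-end-go-project.git", // placeholder
		Revision:  "HEAD",                                                     // assumption: default branch
		Path:      "helm",
		Server:    "https://kubernetes.default.svc",
		Namespace: "default",
	})
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Redirect to application.yaml, then run: kubectl apply -n argocd -f application.yaml
	fmt.Print(out)
}
```

Keeping the Application in Git means the Argo CD configuration itself is versioned and reviewable, in line with the GitOps approach.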

Setting Up Ingress for External Access

Let's configure proper external access to your application through NGINX Ingress:

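# Install NGINX Ingress Controller
helm upgrade --install ingress-nginx ingress-nginx --repo https://kubernetes.github.io/ingress-nginx --namespace ingress-nginx --create-namespace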

The controller will provision an AWS Load Balancer automatically. Now configure your domain:

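Verify the Ingress address:

kubectl get ing

On AWS, the ADDRESS column shows the load balancer's DNS name rather than an IP. Resolve it to an IP (for example with nslookup). Then add a line to your /etc/hosts file, using the host name defined in ingress.yaml. Load balancer IPs can change, so this is only suitable for testing.

<load-balancer-ip> your-app.local

Access your application at http://your-app.local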
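The /etc/hosts entry matters because an NGINX Ingress routes on the HTTP Host header. A request sent to the load balancer's own DNS name carries the wrong host, matches no rule, and typically gets a 404 from the controller's default backend. The toy Go router below illustrates that behavior. It assumes one exact-match host per backend, and the backend name is hypothetical. It is not how ingress-nginx is implemented.

```go
package main

import (
	"fmt"
	"log"
	"net"
	"net/http"
	"strings"
)

// rules maps an Ingress host to a backend Service (hypothetical names).
var rules = map[string]string{
	"your-app.local": "go-app:80",
}

// backendFor returns the backend for a Host header, ignoring any port
// and letter case.
func backendFor(hostHeader string) (string, bool) {
	host := hostHeader
	if h, _, err := net.SplitHostPort(hostHeader); err == nil {
		host = h
	}
	b, ok := rules[strings.ToLower(host)]
	return b, ok
}

func main() {
	// Unknown hosts get a 404, mimicking a default backend.
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		backend, ok := backendFor(r.Host)
		if !ok {
			http.NotFound(w, r)
			return
		}
		fmt.Fprintf(w, "would proxy %s%s to %s\n", r.Host, r.URL.Path, backend)
	})
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

This is also why the host in ingress.yaml, the /etc/hosts entry, and the URL you type must all use the same name.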

In a production environment, you would:

Configure a real domain with your DNS provider

Set up TLS certificates for HTTPS

Configure additional security headers

Testing Your Automated Pipeline

Now let's see your entire pipeline in action:

Make a code change to your application

Commit and push to your GitHub repository

Watch GitHub Actions build and push a new Docker image

See the Helm values update with the new tag

Observe Argo CD detecting the change and updating your cluster

Access your updated application at your ingress URL

All of this happens automatically, without any manual intervention!

Cleanup (When You're Done)

Once you've explored and learned from this setup, remember to clean up to avoid unnecessary AWS charges:

eksctl delete cluster --name my-devops-cluster --region us-east-1

What You've Accomplished

Congratulations! You've built a professional-grade DevOps pipeline that:

✅ Automatically builds, tests, and deploys your application

✅ Implements GitOps principles for version control and auditing

✅ Provides continuous delivery with minimal manual steps

✅ Leverages container orchestration for resilience and scalability

✅ Follows security best practices for credentials management

This workflow is similar to what many tech companies use in production environments. You now have hands-on experience with tools and techniques that are highly valuable in modern DevOps roles.

Happy automating!


Written by

Subroto Sharma


I'm a passionate and results-driven DevOps Engineer with hands-on experience in automating infrastructure, optimizing CI/CD pipelines, and enhancing software delivery through modern DevOps and DevSecOps practices. My expertise lies in bridging the gap between development and operations to streamline workflows, increase deployment velocity, and ensure application security at every stage of the software lifecycle. I specialize in containerization with Docker and Kubernetes, infrastructure-as-code using Terraform, and managing scalable cloud environments—primarily on AWS. I’ve worked extensively with tools like Jenkins, GitHub Actions, SonarQube, Trivy, and various monitoring/logging stacks to build secure, efficient, and resilient systems. Driven by automation and a continuous improvement mindset, I aim to deliver value faster and more reliably by integrating cutting-edge tools and practices into development pipelines.