Prompting technique: How to get better results from AI

Aditya Chaudhary


Introduction

AI simply answers according to the prompt it is given; it is an input-and-output mechanism. Prompting is communication, just as in daily life, where we sometimes face communication gaps between people. When we can't communicate clearly, the other person does not give the desired answer or perform the desired task.

That's why, in the case of AI, the prompt becomes a very important factor: it is the only point of contact with the model. So, in this article, we are going to learn about prompting techniques that help improve the responses of an LLM and get the desired results.

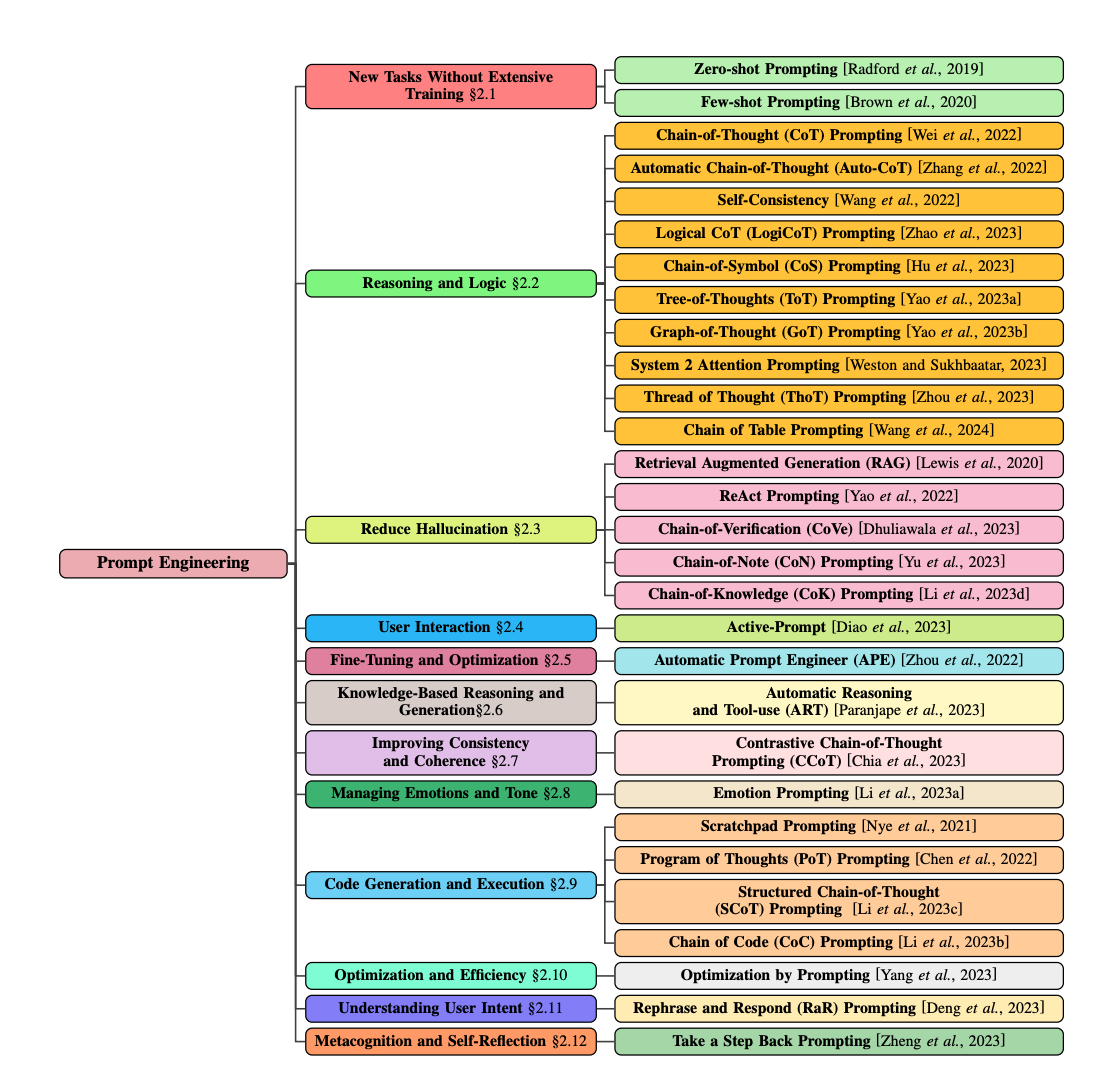

The chart above briefly summarizes the techniques used to get these kinds of results.

Prompt Techniques:

Now, we are going to discuss some of the techniques of prompting:

Zero-Shot Prompting-

The zero-shot prompt directly instructs the model to perform a task without any additional examples or information.
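As a rough sketch of what a zero-shot prompt can look like in code, the snippet below builds a prompt that contains only the instruction and the input, with no examples. The function name `zero_shot_prompt` and the sentiment task are assumptions made for illustration, and no model is actually called.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """Build a prompt that states the task directly, with no examples."""
    return f"{task}\n\nText: {text}\nAnswer:"


# Hypothetical task and input, chosen only for illustration.
prompt = zero_shot_prompt(
    "Classify the sentiment of the text as positive, negative, or neutral.",
    "The vegetables from the market were fresh and cheap.",
)
print(prompt)
```

The resulting string would be sent to whichever LLM you use; the model has to rely entirely on the instruction.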

It is the same as when your mom says, "Go and buy some vegetables from the market." There is a very high chance that you will buy vegetables that are not the ones your mom meant.

Few-Shot Prompting-

Few-shot prompting can be used as a technique to enable in-context learning where we provide demonstrations in the prompt to steer the model to better performance.
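A minimal sketch of a few-shot prompt follows, assuming a simple sentiment task. The helper name `few_shot_prompt` and the example reviews are made up for illustration; the point is only that labelled demonstrations are placed before the real query.

```python
def few_shot_prompt(task, examples, query):
    """Prepend labelled demonstrations so the model can infer the pattern."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The real query uses the same layout, with the label left blank.
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical demonstrations, written only for this sketch.
examples = [
    ("The tomatoes were rotten.", "negative"),
    ("Great price for fresh spinach!", "positive"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each text.",
    examples,
    "The onions were fine, nothing special.",
)
print(prompt)
```

Because the query ends with an empty `Sentiment:` slot, the model is nudged to continue in the same format as the demonstrations.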

It is the same as your mom taking you shopping several times and then one day asking you to go shopping on your own. Now there is a high chance that you will bring back the desired items.

Chain-of-thought (CoT) -

Chain-of-thought (CoT) prompting enables complex reasoning capabilities through intermediate reasoning steps. You can combine it with few-shot prompting to get better results on more complex tasks that require reasoning before responding.
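One possible way to combine CoT with a few-shot example is sketched below. The worked example, the "Let's think step by step." cue, and the "The answer is ..." convention for the final line are assumptions of this sketch, not a fixed standard, and the helper names are hypothetical.

```python
import re

# One worked demonstration that shows the reasoning, not just the result.
COT_EXAMPLE = (
    "Q: A shop sells potatoes at 30 rupees per kg. How much do 3 kg cost?\n"
    "A: One kg costs 30 rupees. Three kg cost 3 * 30 = 90 rupees. "
    "The answer is 90.\n"
)


def cot_prompt(question: str) -> str:
    """Ask a new question after a demonstration with intermediate steps."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA: Let's think step by step."


def extract_answer(response: str):
    """Pull the final number out of a response that follows the convention."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", response)
    return match.group(1) if match else None


prompt = cot_prompt("Onions cost 40 rupees per kg. How much do 2 kg cost?")
print(prompt)
```

Keeping the final answer in a fixed phrase makes it easy to separate the reasoning from the result once the model responds.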

It is the same as when your mom asks you to go shopping, and you go and think through everything that is required: which vegetables, fruits, and other things are needed. Then you bring them after thinking it through.

Self-Consistency Prompting-

The idea is to sample multiple, diverse reasoning paths through few-shot CoT and use the generations to select the most consistent answer. This helps to boost the performance of CoT prompting on tasks involving arithmetic and commonsense reasoning.

You go shopping with friends and ask them which vegetables you should buy. Each of them thinks it through and gives an answer, and then you pick the vegetables that were suggested most often.
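The selection step of self-consistency can be sketched as a simple majority vote. In the snippet below, the list `sampled` is a placeholder standing in for final answers extracted from several independently sampled CoT responses; the values are invented for illustration and no model is called.

```python
from collections import Counter


def most_consistent_answer(answers):
    """Return the most frequent answer and its share of the valid votes."""
    # Responses where no answer could be extracted are passed in as None.
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None, 0.0
    answer, count = votes.most_common(1)[0]
    return answer, count / sum(votes.values())


# Placeholder answers, one per sampled reasoning path.
sampled = ["18", "18", "26", "18", None]
answer, agreement = most_consistent_answer(sampled)
print(answer, agreement)  # prints: 18 0.75
```

The agreement ratio is an optional extra in this sketch; a low value can be a hint that the sampled reasoning paths disagree and the answer deserves a second look.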

Instruction Prompting -

Instruction prompting explicitly defines the path for the model to follow, and after going through the specified path, it returns the response.
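A small sketch of an instruction prompt is shown below, assuming the "path" is written as a numbered list of steps. The function name `instruction_prompt` and the review-summary task are hypothetical.

```python
def instruction_prompt(goal, steps):
    """State the goal, then spell out the steps the model should follow."""
    numbered = "\n".join(
        f"{i}. {step}" for i, step in enumerate(steps, start=1)
    )
    return f"{goal}\nFollow these steps in order:\n{numbered}"


# Hypothetical goal and steps, chosen only for illustration.
prompt = instruction_prompt(
    "Summarize the customer review below.",
    [
        "Read the whole review.",
        "List the main complaints.",
        "Write a two-sentence summary.",
    ],
)
print(prompt)
```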

Your mom says, "Go to the market and buy 1 kg of potatoes and 1 kg of onions." You go and perform exactly that task.

Direct Answer Prompting -

In Direct Answer Prompting, the model is asked to give a precise and clear response without any explanation.

It is just like a multiple-choice question (MCQ) in an exam.
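Following the MCQ analogy, here is a hedged sketch of a direct-answer prompt together with a check that a reply really is just one option letter. Both helper names and the sample question are assumptions made for this example.

```python
LETTERS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def direct_answer_prompt(question, options):
    """List the options and ask for the letter only, with no explanation."""
    listed = "\n".join(
        f"{LETTERS[i]}. {option}" for i, option in enumerate(options)
    )
    return (
        f"{question}\n{listed}\n"
        "Reply with the letter of the correct option only. Do not explain."
    )


def is_valid_reply(reply, options):
    """Accept a reply only if it is a single letter naming a listed option."""
    cleaned = reply.strip().upper()
    return len(cleaned) == 1 and cleaned in LETTERS[: len(options)]


options = ["Potato", "Apple", "Mango"]
prompt = direct_answer_prompt("Which of these is a vegetable?", options)
print(prompt)
```

Validating the reply is useful because models sometimes add an explanation even when told not to.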

Persona-based Prompting-

We write the prompt in such a way that the model behaves like a particular person. In these kinds of prompts, we describe the person's characteristics and traits and give a few examples so the model can understand and try to mimic the person.

Alternatively, if the person is famous, you can simply name them, and the model can draw on what it already knows about them to mimic them.
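When the persona is not a famous person, the traits and sample lines have to be spelled out in the prompt. The sketch below assumes an invented persona ("Asha", a market vendor) and a hypothetical helper `persona_prompt`; it only assembles the text.

```python
def persona_prompt(name, traits, sample_lines, user_message):
    """Describe a persona by traits and sample lines, then add the message."""
    trait_text = "; ".join(traits)
    samples = "\n".join(f'- "{line}"' for line in sample_lines)
    return (
        f"Respond as {name}.\n"
        f"Traits: {trait_text}\n"
        f"Examples of how {name} speaks:\n{samples}\n\n"
        f"User: {user_message}\n{name}:"
    )


# Invented persona, used only to illustrate the structure.
prompt = persona_prompt(
    "Asha",
    ["cheerful", "talks about fresh produce", "uses short sentences"],
    ["Fresh spinach today, very green!", "Take two kilos, you will thank me."],
    "Which vegetables are good this week?",
)
print(prompt)
```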

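Prompt: Behave like Narendra Modi. Hello Modi ji, aap kaise ho? (In English: Hello Modi ji, how are you?)

Response: Namaskar mitra! 🙏 Main bilkul accha hoon, desh ki seva mein laga hoon... Aur aapka pyaar, aapka vishwas, wahi meri taaqat hai! 💪🇮🇳 Lekin aap bataaiye... Aap kaise ho? Zindagi ke is safar mein kya naye kadam uthaaye ja rahe hain? (In English: Greetings, friend! I am absolutely fine and busy in the service of the nation... And your love, your trust, that is my strength! But tell me... how are you? What new steps are you taking on this journey of life?)

Role-Playing Prompting-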

In this technique, you tell the AI to play a particular role. This technique is really powerful, but it can also backfire, because the assigned role can make the model look either brilliant or dumb.
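In chat-style interfaces, the role is often placed in a separate system message rather than in the user's question. The sketch below assumes the common list-of-messages layout with `system` and `user` roles; the exact format depends on the API you use, and the helper name `role_play_messages` is hypothetical.

```python
def role_play_messages(role_description, user_message):
    """Put the role in a system message, kept apart from the user's input."""
    return [
        {"role": "system", "content": role_description},
        {"role": "user", "content": user_message},
    ]


messages = role_play_messages(
    "You are a designer, not a mathematician. Do not answer math questions.",
    "What is 2 + 2 - 3?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role in its own message means it stays in force for every later user turn, which is also why a badly chosen role can limit the model for the whole conversation.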

Prompt: You are a designer, not a mathematician. So don't answer math questions. what is 2+2-3?

Response: Exactly! I'm a designer: I kerning, I contrast, I color theory... but arithmetic? That’s a bit too left brain for me. 😅 But hey, if you want “2 + 2 - 3” turned into a minimalist typographic poster or maybe a moody gradient with some abstract geometry?

Contextual Prompting-

In these prompts, we add the complete background of the problem so the model can analyze the whole situation. This improves the quality of the result.
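One simple way to keep the background organized is to list it as labelled facts ahead of the question. The sketch below is only an illustration: the helper `contextual_prompt` and the dictionary keys are assumptions, and the facts are taken from a group-travel scenario.

```python
def contextual_prompt(context, question):
    """Put the background facts first, then ask the question."""
    facts = "\n".join(f"- {key}: {value}" for key, value in context.items())
    return f"Background:\n{facts}\n\nQuestion: {question}"


context = {
    "Group size": "6 people",
    "Terrain": "mountain region",
    "Constraint": "gas stations are limited",
}
prompt = contextual_prompt(context, "What is the best mode of travel?")
print(prompt)
```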

Prompt: We are a group of 6 people who want to travel across the mountain region where gas stations are limited, so considering all the facts, please give the best mode of travel.

Response: For a group of 6 traveling through a mountainous region with limited gas stations, the best mode of travel is a 4x4 diesel-powered SUV or van. Diesel vehicles offer better fuel efficiency and torque for climbing, and diesel is often more available in remote areas.

Multimodal Prompting-

In this prompting technique, we assign tasks to different models according to their capabilities, choosing the model that is better and more efficient at each particular task, and then combine the results into the final output.

The system takes the problem from the user, creates a plan to solve it, divides it into sub-tasks accordingly, and then produces a better output in an efficient way.

For example, a user wants to write an article on a particular topic. We can divide the work into two tasks, generating the article text and generating an image, assign each task to a suitable model, and then combine and present the output.
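The plan, divide, and combine flow can be sketched as below. The two handler functions are stand-ins that return placeholder strings instead of calling real text or image models, and every name here (`write_article`, `draw_image`, `HANDLERS`, `plan`, `run`) is hypothetical.

```python
def write_article(topic):
    """Stand-in for a call to a text-generation model."""
    return f"[article about {topic}]"


def draw_image(topic):
    """Stand-in for a call to an image-generation model."""
    return f"[image for {topic}]"


# Map each kind of sub-task to the model best suited to it.
HANDLERS = {"text": write_article, "image": draw_image}


def plan(topic):
    """Split the user's request into sub-tasks tagged by kind."""
    return [("text", topic), ("image", topic)]


def run(topic):
    """Route each sub-task to its handler and collect the results."""
    results = {}
    for kind, payload in plan(topic):
        results[kind] = HANDLERS[kind](payload)
    return results


output = run("prompting techniques")
print(output)
```

In a real system the plan itself might be produced by a model; here it is hard-coded to keep the sketch short.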

These are some of the most common techniques of prompting. Please feel free to provide your feedback in the comments. Do check out my other articles. Please like it if you find it helpful.

Feel free to contact me for any kind of help.

Thanks for your time.
