Integrating AI Magic into Spring Boot: Harnessing OpenAI and TensorFlow for Smarter Applications

Chant Khialian

Table of contents

- Why Bob Is Bringing AI Into Spring Boot

- Architecture Overview

- Integrating OpenAI API in Spring Boot

- Step 1: Add OpenAI Client (via WebClient or SDK)

- Step 2: Call GPT from a Service

- Add TensorFlow Dependency

- Example: Sentiment Classification

- Combining AI Sources (OpenAI + TF)

- Best Practices

- Security Tip

- Use Case Ideas

- Find us

Why Bob Is Bringing AI Into Spring Boot

Bob’s building a customer support system that can summarize chats and classify the sentiment of customer messages.

Instead of building models from scratch, Bob uses:

- OpenAI API for LLMs (GPT-like magic)

- TensorFlow Java for local ML inference

Architecture Overview

[Client]

⬇️

[Spring Boot API]

⬇️⬇️

[OpenAI API] ← LLM tasks (chat, summarization)

[TensorFlow] ← In-house models (sentiment, image tags)

Bob’s API acts as a smart gateway, combining cloud-based AI with local model inference.
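
The routing in the diagram can be sketched in plain Java. This is only an illustration: `AiGateway`, `Task`, and `Backend` are hypothetical names that do not appear in Bob's project, and the task list is taken from the labels in the diagram.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical sketch of the gateway routing shown in the diagram.
final class AiGateway {

    // Task names mirror the diagram labels; they are illustrative only.
    enum Task { CHAT, SUMMARIZATION, SENTIMENT, IMAGE_TAGS }

    // The two kinds of backend the gateway can delegate to.
    enum Backend { OPENAI_API, TENSORFLOW_LOCAL }

    private static final Map<Task, Backend> ROUTES = new EnumMap<>(Task.class);

    static {
        // LLM tasks go to the cloud API.
        ROUTES.put(Task.CHAT, Backend.OPENAI_API);
        ROUTES.put(Task.SUMMARIZATION, Backend.OPENAI_API);
        // In-house models run locally.
        ROUTES.put(Task.SENTIMENT, Backend.TENSORFLOW_LOCAL);
        ROUTES.put(Task.IMAGE_TAGS, Backend.TENSORFLOW_LOCAL);
    }

    // Returns the backend responsible for the given task.
    static Backend route(Task task) {
        return ROUTES.get(task);
    }

    public static void main(String[] args) {
        for (Task task : Task.values()) {
            System.out.println(task + " -> " + route(task));
        }
    }
}
```

Keeping the routing table in one place means the controllers do not need to know which backend serves which task.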

Integrating OpenAI API in Spring Boot

Step 1: Add OpenAI Client (via WebClient or SDK)

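    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.web.reactive.function.client.WebClient;

    @Configuration
    public class OpenAIConfig {

        // WebClient preconfigured with the OpenAI base URL and bearer token.
        @Bean
        public WebClient openAIClient() {
            return WebClient.builder()
                    .baseUrl("https://api.openai.com/v1")
                    // The API key comes from the environment and is never hard-coded.
                    .defaultHeader("Authorization", "Bearer " + System.getenv("OPENAI_API_KEY"))
                    .build();
        }
    }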
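
One caveat with this configuration: `System.getenv` returns `null` when the variable is not set, and Java string concatenation then produces the header value `Bearer null`. A minimal sketch of a fail-fast check follows. `ApiKeys` and `bearerHeader` are hypothetical helper names, not part of Spring or the OpenAI API.

```java
import java.util.Map;

// Hypothetical helper that refuses to build an Authorization header without a key.
final class ApiKeys {
    private ApiKeys() {}

    // Builds the header value from an environment map; throws if the key is absent or blank.
    static String bearerHeader(Map<String, String> env) {
        String key = env.get("OPENAI_API_KEY");
        if (key == null || key.isBlank()) {
            throw new IllegalStateException("OPENAI_API_KEY is not set");
        }
        return "Bearer " + key.trim();
    }

    public static void main(String[] args) {
        try {
            bearerHeader(System.getenv());
            System.out.println("API key found");
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

In `OpenAIConfig`, the header line could then call `ApiKeys.bearerHeader(System.getenv())`, so a missing key stops the application at startup instead of surfacing later as a 401 from OpenAI.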

Step 2: Call GPT from a Service

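    import java.util.List;
    import java.util.Map;

    import com.fasterxml.jackson.databind.JsonNode;
    import org.springframework.stereotype.Service;
    import org.springframework.web.reactive.function.client.WebClient;
    import reactor.core.publisher.Mono;

    @Service
    public class GPTService {

        private final WebClient openAIClient;

        // Constructor injection of the WebClient bean defined in OpenAIConfig.
        public GPTService(WebClient openAIClient) {
            this.openAIClient = openAIClient;
        }

        // Sends a single user message and emits the assistant's reply text.
        public Mono<String> getChatResponse(String prompt) {
            return openAIClient.post()
                    .uri("/chat/completions")
                    .bodyValue(Map.of(
                            "model", "gpt-3.5-turbo",
                            "messages", List.of(Map.of("role", "user", "content", prompt))
                    ))
                    .retrieve()
                    .bodyToMono(JsonNode.class)
                    // Extract the reply instead of returning the whole JSON payload.
                    .map(json -> json.at("/choices/0/message/content").asText());
        }
    }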
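
For readers who want to see what this call looks like without Spring, here is a rough equivalent of the request using the JDK's `java.net.http` API. It only builds the request and does not send it. `ChatRequests`, `body`, `build`, and `escapeJson` are hypothetical names, and the hand-rolled JSON escaping is a simplification; a real project would normally use a JSON library such as Jackson.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch: builds the chat completion request with the JDK only.
final class ChatRequests {
    private ChatRequests() {}

    // Minimal JSON string escaping: backslash, double quote, and control characters.
    static String escapeJson(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '\\': sb.append("\\\\"); break;
                case '"': sb.append("\\\""); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Same payload as the Map.of(...) body: one model name and one user message.
    static String body(String prompt) {
        return "{\"model\":\"gpt-3.5-turbo\",\"messages\":[{\"role\":\"user\",\"content\":\""
                + escapeJson(prompt) + "\"}]}";
    }

    // Builds (but does not send) the POST request to the chat completions endpoint.
    static HttpRequest build(String apiKey, String prompt) {
        return HttpRequest.newBuilder()
                .uri(URI.create("https://api.openai.com/v1/chat/completions"))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body(prompt)))
                .build();
    }
}
```

Sending it would be a matter of `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`. The reply text sits under `choices[0].message.content` in the JSON response.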

Supports:

Integrating TensorFlow Java for Local ML Inference

Add TensorFlow Dependency

    <dependency>
        <groupId>org.tensorflow</groupId>
        <artifactId>tensorflow-core-platform</artifactId>
        <version>0.4.0</version>
    </dependency>

Example: Sentiment Classification

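    import org.springframework.stereotype.Service;
    import org.tensorflow.SavedModelBundle;
    import org.tensorflow.Tensor;
    import org.tensorflow.types.TString;

    @Service
    public class SentimentService {

        // Loaded once at startup; "serve" is the standard SavedModel serving tag.
        private final SavedModelBundle model;

        public SentimentService() {
            model = SavedModelBundle.load("models/sentiment", "serve");
        }

        public String predict(String inputText) {
            // "input_text" and "output_label" must match the operation names in the exported graph.
            try (TString input = TString.scalarOf(inputText);
                 Tensor result = model.session().runner()
                         .feed("input_text", input)
                         .fetch("output_label")
                         .run().get(0)) {
                // TensorFlow Java 0.4.0 has no generic Tensor<T>; string tensors are TString.
                return ((TString) result).getObject();
            }
        }
    }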

Use cases:

Combining AI Sources (OpenAI + TF)

Bob builds an endpoint that:

- Uses GPT to summarize a chat

- Then uses TensorFlow to classify sentiment

- Finally stores it in a database

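    @PostMapping("/analyze")
    public ResponseEntity<ChatAnalysis> analyze(@RequestBody String message) {
        // block() is fine on a Spring MVC request thread; it throws on a WebFlux event-loop thread.
        String summary = gptService.getChatResponse("Summarize: " + message).block();
        String sentiment = sentimentService.predict(message);
        // Storing the analysis in a database is not shown in this snippet.
        return ResponseEntity.ok(new ChatAnalysis(summary, sentiment));
    }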
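
The `ChatAnalysis` type is not shown in the post. A minimal sketch, assuming it is a simple two-field value type, is a Java record. Since the summary and the sentiment are both computed from the same message and do not depend on each other, the two calls could also run concurrently. The sketch below illustrates that idea with `CompletableFuture` and stand-in functions in place of the real `GPTService` and `SentimentService`; `Analyzer` and its constructor parameters are hypothetical names.

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

// Assumed shape of the response type returned by /analyze.
record ChatAnalysis(String summary, String sentiment) {}

// Hypothetical sketch: runs summarization and sentiment classification in parallel.
final class Analyzer {
    private final Function<String, String> summarizer;
    private final Function<String, String> classifier;

    Analyzer(Function<String, String> summarizer, Function<String, String> classifier) {
        this.summarizer = summarizer;
        this.classifier = classifier;
    }

    ChatAnalysis analyze(String message) {
        // Both tasks start immediately on the common pool.
        CompletableFuture<String> summary =
                CompletableFuture.supplyAsync(() -> summarizer.apply("Summarize: " + message));
        CompletableFuture<String> sentiment =
                CompletableFuture.supplyAsync(() -> classifier.apply(message));
        // Combine the two results once both have completed.
        return summary.thenCombine(sentiment, ChatAnalysis::new).join();
    }

    public static void main(String[] args) {
        // Stand-ins for the GPT and TensorFlow calls.
        Analyzer analyzer = new Analyzer(p -> "summary of: " + p, m -> "neutral");
        System.out.println(analyzer.analyze("My order arrived late."));
    }
}
```

Whether the parallel version is worth it depends on how long local inference takes compared with the round trip to OpenAI; the sequential endpoint is simpler and may be enough.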

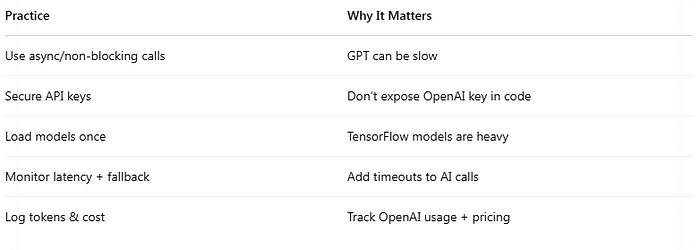

Best Practices

Security Tip

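Proxy GPT calls through the server instead of calling OpenAI directly from the frontend. Among other things, this keeps the API key on the server, where OpenAIConfig reads it from the OPENAI_API_KEY environment variable.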

Use Case Ideas

Find us

linkedin Shant Khayalian

Facebook Balian’s

X-platform Balian’s

web Balian’s

Youtube Balian’s

