Strategic Step Back Prompting: Enhancing LLM Outputs with Smarter Queries

Yogyashri Patil

What is Step Back Prompting?

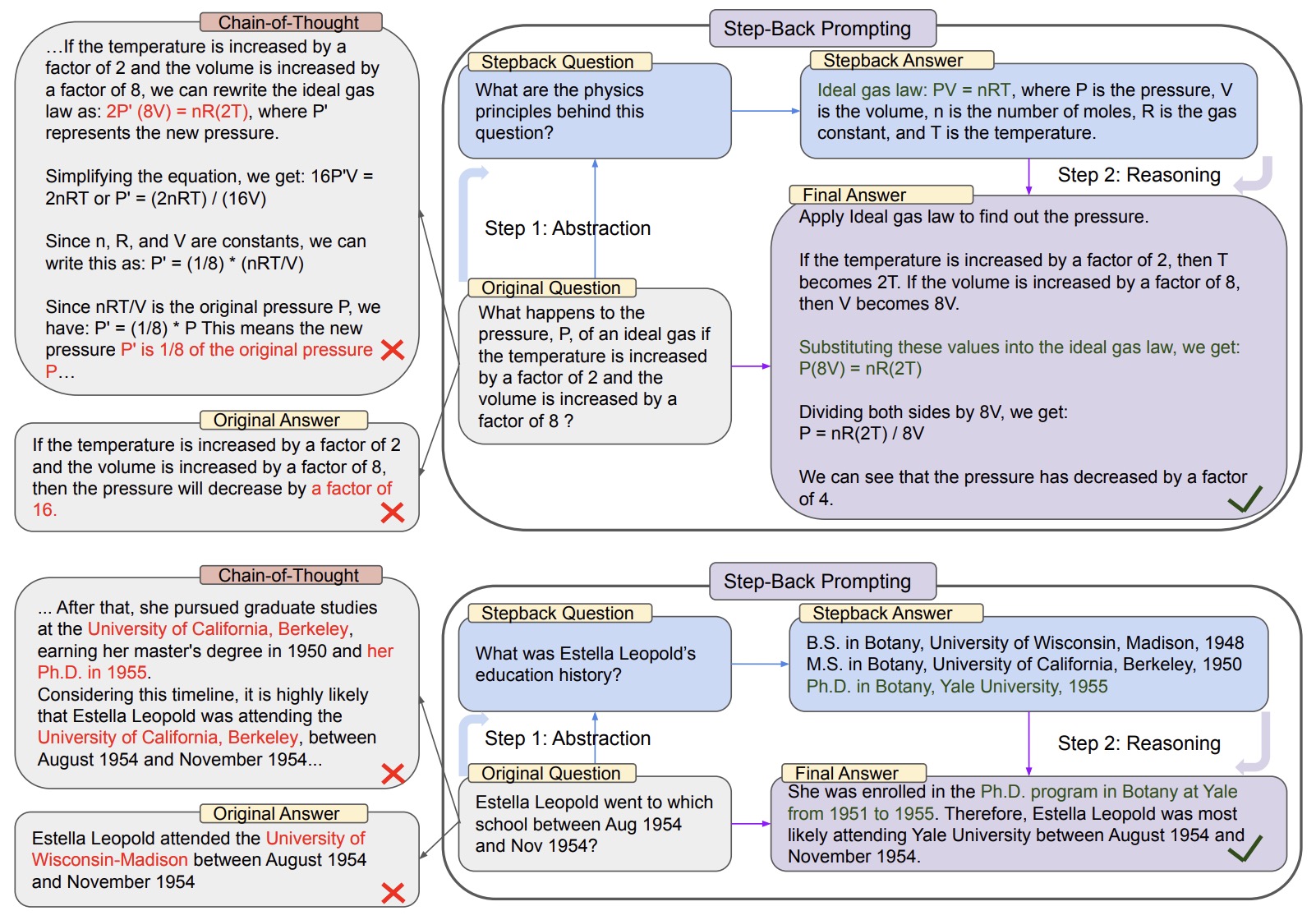

Step-Back Prompting in Retrieval-Augmented Generation (RAG) involves rephrasing a user's specific query into a broader, more general question before running the search. By taking a moment to "step back," the system can better identify the user's underlying intent, retrieve more comprehensive and contextually relevant knowledge chunks, and then generate an answer that is precise and well supported. In practice, retrieval is often run for both the step-back question and the original query, so the model sees the general background as well as the specific details. This can reduce ambiguity and help limit hallucinations, producing outputs that are more accurate and better grounded.
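The retrieval side of this idea can be sketched in a few lines. This is a minimal, self-contained sketch under stated assumptions: a tiny in-memory corpus stands in for a document store, word overlap stands in for embedding similarity, and the step-back question is written by hand where an LLM would normally generate it. The helper names `retrieve` and `step_back_retrieve` are hypothetical.

```python
import re

# Toy in-memory "knowledge base"; a real system would use a vector store
CORPUS = [
    "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "Chlorophyll absorbs mostly blue and red light and reflects green light.",
    "The Calvin cycle fixes carbon dioxide into sugars using ATP and NADPH.",
    "Mitochondria release energy from glucose through cellular respiration.",
]


def tokenize(text):
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, corpus, k=2):
    """Return the k chunks with the largest word overlap with the query."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda chunk: len(q & tokenize(chunk)), reverse=True)
    return ranked[:k]


def step_back_retrieve(original_query, step_back_query, corpus, k=2):
    """Retrieve for both queries and merge the results, dropping duplicates."""
    merged = []
    for chunk in retrieve(step_back_query, corpus, k) + retrieve(original_query, corpus, k):
        if chunk not in merged:
            merged.append(chunk)
    return merged


if __name__ == "__main__":
    original = "Why do plant leaves look green?"
    # In a real pipeline, an LLM would generate this step-back question
    step_back = "What is photosynthesis and how does it use light energy?"
    for chunk in step_back_retrieve(original, step_back, CORPUS, k=1):
        print("-", chunk)
```

With `k=1`, the original question retrieves only the chlorophyll chunk, while the step-back question retrieves the general photosynthesis chunk; merging the two gives the model both the underlying principle and the specific detail.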

Step-Back Prompting is a strategy that guides a language model (such as GPT or Gemini) to first identify the high-level concept or principle behind a task before attempting to solve it.

The approach can be likened to saying:

"Hold on. Don’t rush to solve this right away. First, identify what type of problem we’re dealing with."

Rather than immediately presenting the model with a complex or detailed query, Step-Back Prompting adds a preliminary step: asking the model to rephrase, summarize, or generalize the problem into a broader context. Because the final answer is then conditioned on the relevant concept or principle, the model is more likely to produce accurate, contextually relevant, and well-grounded solutions.
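One common way to make the abstraction step more consistent is to include a few specific-to-general examples in the prompt (few-shot prompting). The sketch below only builds the prompt string, so it runs without an API key; the exemplars and the `build_step_back_prompt` helper are hypothetical illustrations, not taken from any particular library.

```python
# Hypothetical specific -> general exemplars; replace them with examples from your own domain
EXEMPLARS = [
    (
        "What is the derivative of x**3 * sin(x)?",
        "What rules are used to differentiate a product of two functions?",
    ),
    (
        "Why does appending to b also change a after b = a, when a is a Python list?",
        "How do assignment and copying work for mutable objects in Python?",
    ),
]


def build_step_back_prompt(question, exemplars=EXEMPLARS):
    """Build a few-shot prompt that asks the model for an abstraction, not an answer."""
    lines = [
        "Rewrite the specific question as a broader step-back question "
        "about the underlying concept or principle. Do not answer it.",
        "",
    ]
    # Each exemplar shows the model one specific -> general rewrite
    for specific, general in exemplars:
        lines += [f"Specific question: {specific}", f"Step-back question: {general}", ""]
    # The new question goes last, leaving the step-back slot for the model to fill
    lines += [f"Specific question: {question}", "Step-back question:"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_step_back_prompt("Why does ice float on water?"))
```

Ending the prompt with an empty `Step-back question:` slot nudges the model to return only the broader question, which keeps the first stage's output easy to pass into the second stage.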

Why Use Step-Back Prompting?

Handling Ambiguous User Queries

Reducing Errors in Complex Problem-Solving

Preventing Hallucinated Responses

Enhancing Creative Problem-Solving

Facilitating Better Knowledge Retrieval in RAG Systems

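How Does Step-Back Prompting Work?

The workflow has two stages. First, the model rewrites the user's specific query as a broader step-back question. Second, it answers the original query, using the broader question as context.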

Code Implementation

1. Import Required Libraries

```python
import os

from openai import OpenAI  # OpenAI Python SDK, version 1.0 or later

# Create an API client; the key is read from the OPENAI_API_KEY environment variable
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
```

2. Define Helper Functions

```python
# Function to reframe the specific query as a broader, more general question
def step_back_prompt(query):
    step_back_instructions = (
        "Reframe the following specific question into a broader, more general question. "
        "Think about what type of problem it is trying to solve:\n\n"
        f"Specific question: {query}\n\n"
        "Broad, general question:"
    )
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": step_back_instructions}],
        temperature=0.7,
        max_tokens=100,
    )
    return response.choices[0].message.content.strip()


# Function to answer the original query, using the broader question as context
def generate_answer(broad_question, original_query):
    reasoning_instructions = (
        "Use the broader question to provide context and then answer the original query.\n\n"
        f"Broad question: {broad_question}\n"
        f"Original query: {original_query}\n\n"
        "Final answer:"
    )
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": reasoning_instructions}],
        temperature=0.7,
        max_tokens=300,
    )
    return response.choices[0].message.content.strip()
```

3. Implement Step-Back Prompting

```python
def step_back_prompting_workflow(user_query):
    # Step 1: Generate a broader question
    print("Step 1: Reframing the Query")
    broad_question = step_back_prompt(user_query)
    print(f"Broader Question: {broad_question}\n")

    # Step 2: Generate the final answer
    print("Step 2: Generating the Final Answer")
    final_answer = generate_answer(broad_question, user_query)
    print(f"Final Answer: {final_answer}")

    return final_answer
```
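Because this workflow makes live API calls, it can be handy to pass the model call in as a parameter so the two-stage flow can be exercised offline or in tests. A minimal sketch, assuming a hypothetical `complete` callable (prompt in, text out) and a hypothetical `fake_complete` stub; returning a dictionary also keeps the intermediate broad question available for logging or evaluation.

```python
from typing import Callable, Dict

# Hypothetical type: any function that sends a prompt to a model and returns its text
Complete = Callable[[str], str]


def run_step_back(user_query: str, complete: Complete) -> Dict[str, str]:
    """Two-stage step-back flow with the model call passed in as a parameter."""
    # Stage 1: ask for a broader, more general version of the question
    broad_question = complete(
        "Reframe the following specific question into a broader, more general question. "
        "Think about what type of problem it is trying to solve:\n\n"
        f"Specific question: {user_query}\n\n"
        "Broad, general question:"
    ).strip()
    # Stage 2: answer the original question with the broader one as context
    final_answer = complete(
        "Use the broader question to provide context and then answer the original query.\n\n"
        f"Broad question: {broad_question}\n"
        f"Original query: {user_query}\n\n"
        "Final answer:"
    ).strip()
    # Return both so the intermediate step can be logged or evaluated
    return {"broad_question": broad_question, "final_answer": final_answer}


def fake_complete(prompt: str) -> str:
    """Offline stub standing in for a real model call (for testing only)."""
    if prompt.endswith("Broad, general question:"):
        return "  What kinds of AI progress do technology companies report?  "
    return "Stub answer based on the broader question."


if __name__ == "__main__":
    query = "What are the key AI advancements made by Tesla in March 2025?"
    print(run_step_back(query, fake_complete))
```

In real use, `complete` would wrap a chat-completion request to the model, such as the calls made in the helper functions above.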

4. Example Usage

```python
if __name__ == "__main__":
    # User's specific query
    user_query = "What are the key AI advancements made by Tesla in March 2025?"

    # Execute the Step-Back Prompting workflow
    print("User Query:", user_query)
    print("=" * 50)
    step_back_prompting_workflow(user_query)
```
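The example query asks about a specific month, so without retrieval the final answer can only be as good as what the model already knows about that period. In the RAG setting described at the start of this article, chunks retrieved for the broad and original questions would be placed into the final prompt. A minimal sketch of that prompt assembly; `build_grounded_prompt` is a hypothetical helper, and the chunk strings below are placeholders, not real retrieved documents.

```python
def build_grounded_prompt(broad_question, original_query, chunks):
    """Assemble a final-answer prompt that includes retrieved context chunks."""
    # Number the chunks so the model (and a reader) can refer to them
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the original query using the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Broad question: {broad_question}\n"
        f"Original query: {original_query}\n\n"
        "Final answer:"
    )


if __name__ == "__main__":
    # Placeholder chunks; in a real pipeline these come from retrieval
    chunks = [
        "<chunk retrieved for the broad question>",
        "<chunk retrieved for the original query>",
    ]
    print(build_grounded_prompt(
        "What kinds of AI progress do technology companies report?",
        "What are the key AI advancements made by Tesla in March 2025?",
        chunks,
    ))
```

The instruction to say so when the context lacks the answer gives the model an explicit alternative to guessing, which matters most for time-sensitive questions like this one.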

Running the script prints the original query, the broader step-back question, and the final answer, which makes it easy to see how the abstraction step shapes the response.

Conclusion

Step-Back Prompting is a practical approach to handling complex questions that emphasizes abstraction and context. By guiding the model to first generalize the problem and then answer the specific question, it can produce more thoughtful, accurate, and insightful responses, especially for nuanced or multi-layered queries.
