How to Build a GPT-4o AI Agent from Scratch in 2025

Olamide David Oluwamusiwa

Forget hiring a team to qualify leads or respond to repetitive support queries. What if you could build your own digital employee, a GPT-4o-powered AI agent that works 24/7, scales instantly, and actually learns over time?

This isn’t just another AI tutorial. It’s a battle-tested blueprint for building intelligent agents, grounded in OpenAI’s newly released official guide: A Practical Guide to Building Agents. With their latest best practices as our foundation, we’ll walk you through how to create agents that are modular, measurable, and production-ready.

This tutorial follows OpenAI’s blueprint throughout, so the practices shown here track OpenAI’s current guidance.

In this hands-on guide, you’ll learn how to build a real-world GPT-4o AI agent using LangChain, memory, and tool usage, from setup to user interface and all the way through to deployment with Docker. Whether you’re a developer, product manager, or curious founder, this tutorial breaks everything down into clear, actionable steps, even if you’re just getting started.

What is a GPT-4o AI Agent?

A GPT-4o AI agent is more than a chatbot. It’s an intelligent system powered by OpenAI’s GPT-4o model that can read, listen, see, and respond. It understands natural language, takes action, and uses tools like search engines, databases, or APIs to get work done.

What makes it different?

GPT-4o is multimodal: it supports text, audio, and vision inputs natively. Paired with an agent framework like LangChain, it can:

Answer technical support questions

Book meetings or qualify leads

Extract structured data from unstructured conversations

Make decisions based on logic and real-time inputs

Image by Sora

What is an LLM (Large Language Model)?

An LLM, or Large Language Model, is a type of artificial intelligence trained to understand and generate human language. But it’s much more than just autocomplete: it’s a neural network with billions of parameters designed to recognize patterns in language.

Here’s how it works, in simple terms: It reads a massive amount of content (websites, books, code, documentation) and learns how language works. Then, when you ask a question, it doesn’t “know” the answer. It predicts the most likely next word using probability.

Example:

Input: “How do I reset my…”

LLM prediction: “password” — because it has seen that phrase thousands of times in training.
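To make "predicts the most likely next word using probability" concrete, here is a toy sketch. It is a simple word-pair counter over a made-up four-sentence corpus, not how GPT-4o works internally; the corpus and function names are assumptions for illustration only.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real LLM trains on vastly more text.
corpus = [
    "how do i reset my password",
    "how do i reset my password quickly",
    "how do i reset my router",
    "how do i change my password",
]

# Count which word follows each word (a bigram model).
follow_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        follow_counts[current][nxt] += 1


def next_word_probabilities(word):
    """Return {candidate: probability} for the word that follows `word`."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {candidate: n / total for candidate, n in counts.items()}


def predict_next(word):
    """Pick the single most likely next word."""
    probs = next_word_probabilities(word)
    return max(probs, key=probs.get)


probs = next_word_probabilities("my")
prediction = predict_next("my")
print(probs)
print(prediction)
```

In this corpus "password" follows "my" three times out of four, so it wins. An LLM does the same kind of ranking, but over tokens and with a neural network that looks at the whole context rather than just the previous word.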

Modern LLMs like GPT-4o go further:

Understand context, not just keywords

Maintain multi-turn conversations

Support text, vision, and voice natively

LLMs are the brains of AI agents. But to act like real assistants, they need a framework like LangChain that gives them memory, tools, and decision logic.
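A quick way to see why memory matters: the model itself keeps nothing between calls, so "memory" usually means replaying earlier turns into the next prompt. The sketch below is a hand-rolled stand-in for that idea, not LangChain's implementation; `BufferMemory` and its methods are hypothetical names.

```python
class BufferMemory:
    """Keeps the conversation as a list of (speaker, text) turns."""

    def __init__(self, max_turns=6):
        self.max_turns = max_turns
        self.turns = []

    def add(self, speaker, text):
        self.turns.append((speaker, text))
        # Drop the oldest turns so the prompt does not grow without bound.
        self.turns = self.turns[-self.max_turns:]

    def as_prompt(self, new_message):
        """Build the text that would be sent to the model for the next turn."""
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        return f"{history}\nUser: {new_message}\nAssistant:"


memory = BufferMemory(max_turns=2)
memory.add("User", "My name is Ada.")
memory.add("Assistant", "Nice to meet you, Ada.")
prompt = memory.as_prompt("What is my name?")
print(prompt)
```

Because the earlier turns are part of the prompt, the model can answer the follow-up question. The `max_turns` cap is one simple way to keep prompts short; summarizing old turns is another.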

Real-World Examples of LLM Agents in Action

Let’s look at how top companies use agents like the one you’re about to build:

Klarna

Result: AI assistant doing work equivalent to about 700 full-time support agents

Impact: 2.3 million customer conversations in its first month

Success Rate: 65% of requests handled without human help

(Source: OpenAI x Klarna)

Intercom

Product: Fin (LLM-powered support agent)

Impact: Resolved over 50% of customer questions instantly

(Source: Intercom Blog)

These aren’t experiments. They’re production-grade AI agents delivering real business value.

Image by GPT-4o

What You Need (Prerequisites)

“This is a bit technical, but trust me: if you follow the steps, you’ll get it.”

Python 3.10+: Install from python.org

VS Code or Terminal: To write and run your code (Download VS Code)

OpenAI API Key: Get yours from platform.openai.com

Basic Python Knowledge: Know how to run .py files, use functions, and install packages (Learn here)

(Optional) Ngrok: For exposing your local app online via ngrok.com

Setting Up GPT-4o + LangChain (Step-by-Step)

Step 1: Create your virtual environment

python -m venv gpt4o_env

source gpt4o_env/bin/activate # On Windows: gpt4o_env\Scripts\activate

Step 2: Install required packages

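pip install openai "langchain<1.0" langchain-openai python-dotenv # the legacy initialize_agent API used below lives in pre-1.0 LangChain releases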

Step 3: Add your API key to .env

OPENAI_API_KEY=your_api_key_here

Step 4: Initialize your GPT-4o LLM with LangChain

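# gpt-4o is a chat model, so use the chat wrapper from the langchain-openai package
# (pip install langchain-openai) rather than the completion-style OpenAI class.
import os

from dotenv import load_dotenv
from langchain_openai import ChatOpenAI

load_dotenv()

llm = ChatOpenAI(model="gpt-4o", api_key=os.getenv("OPENAI_API_KEY"))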
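A missing or placeholder API key is the most common reason this step fails, and the error often only surfaces on the first model call. As a small optional safeguard, you could check the variable up front. `require_env` is a hypothetical helper, not part of LangChain or python-dotenv, and `DEMO_KEY` is a made-up variable used only so the snippet runs without a real key.

```python
import os


def require_env(name):
    """Return the environment variable `name`, or raise a clear error."""
    value = os.getenv(name, "").strip()
    if not value or value == "your_api_key_here":
        raise RuntimeError(
            f"{name} is missing or still set to the placeholder; check your .env file."
        )
    return value


# Demo with a made-up variable set in-process.
os.environ["DEMO_KEY"] = "sk-demo"
print(require_env("DEMO_KEY"))
```

In the tutorial code you would call `require_env("OPENAI_API_KEY")` right after `load_dotenv()` and pass the result to the model wrapper.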

How Agent Frameworks Work

LangChain acts as the orchestrator between your LLM and real-world tools.

An agent does 3 key things:

Understands the user’s request

Picks the right tool to use (e.g., search, calculator)

Responds based on results

Example:

from langchain.agents import initialize_agent, Tool

# The "Search" tool is a stub that only echoes the query; swap in a real search call later.
tools = [
    Tool(name="Search", func=lambda x: "Searching: " + x, description="General knowledge queries")
]

# Reuses the llm object created in Step 4.
agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

print(agent.run("What's trending in AI today?"))
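The three steps listed above can also be sketched without any framework, which helps show what LangChain is doing for you. This is a simplified illustration under stated assumptions: the keyword check in `pick_tool` stands in for the decision the LLM makes in a real agent, and all function names here are hypothetical.

```python
def search_tool(query):
    # Stand-in for a real search API call.
    return f"Searching: {query}"


def calculator_tool(expression):
    # Handles only "a + b" style input to keep the sketch small.
    left, right = expression.split("+")
    return str(float(left) + float(right))


TOOLS = {"search": search_tool, "calculator": calculator_tool}


def pick_tool(request):
    """Step 2: choose a tool. A real agent asks the LLM to make this choice."""
    return "calculator" if "+" in request else "search"


def run_agent(request):
    request = request.strip()            # Step 1: take in the user's request
    tool_name = pick_tool(request)       # Step 2: pick the right tool
    result = TOOLS[tool_name](request)   # Run the chosen tool
    return f"[{tool_name}] {result}"     # Step 3: respond based on the result


print(run_agent("2 + 3"))
print(run_agent("What's trending in AI today?"))
```

The tool descriptions you pass to LangChain play the role of `pick_tool` here: the model reads them and decides which tool fits the request.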

Building Real-World AI Agents (Backed by OpenAI’s Best Practices)

You’re not just learning how to make an agent that talks; you’re learning how to build a digital teammate that thinks, acts, and solves problems in the real world.

OpenAI recently published their official guide titled A Practical Guide to Building Agents, and it outlines what separates a basic chatbot from a production-grade agent.

Here’s what you should know (and how this tutorial follows that path):

1. Start with a Focused Use Case

“Pick a high-leverage task your agent can reliably own.” (OpenAI)

Real-world agents thrive when they’re designed to solve specific, repeatable problems. In this guide, we focus on lead qualification, support automation, and task execution: clear, measurable, high-leverage tasks.

2. Design with Modular Architecture

“Successful agents separate thinking, memory, and action.” (OpenAI)

We’ve implemented this with:

GPT-4o for perception (understanding inputs)

LangChain agents for planning

Tools for action (e.g., calculate_discount)

This mirrors OpenAI’s blueprint for scalable, production-level agents.

3. Build for Safety and Control

“Start with limited tools and clearly define boundaries.” (OpenAI)

We restrict agent behavior to approved tools, use logging, and provide fallback strategies, all of which are core to deploying safe, reliable agents.
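One way to picture "approved tools, logging, and fallback" is a small dispatcher that sits between the agent and its tools. This is a minimal sketch, not something taken from OpenAI's guide or LangChain: `APPROVED_TOOLS`, `call_tool`, and the fallback wording are assumptions for illustration.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent")


def calculate_discount(price):
    return f"The discounted price is ${float(price) * 0.9:.2f}"


# Only tools listed here can ever be executed.
APPROVED_TOOLS = {"DiscountCalculator": calculate_discount}
FALLBACK_MESSAGE = "I can't help with that yet. Routing you to a human teammate."


def call_tool(tool_name, tool_input):
    """Run a tool only if it is on the approved list; otherwise fall back."""
    tool = APPROVED_TOOLS.get(tool_name)
    if tool is None:
        logger.warning("Blocked unapproved tool: %s", tool_name)
        return FALLBACK_MESSAGE
    try:
        result = tool(tool_input)
    except Exception:
        # Log the full traceback, but give the user a safe reply.
        logger.exception("Tool %s failed on input %r", tool_name, tool_input)
        return FALLBACK_MESSAGE
    logger.info("Tool %s -> %s", tool_name, result)
    return result


print(call_tool("DiscountCalculator", "200"))
print(call_tool("DeleteDatabase", "users"))
```

Starting with a single approved tool and widening the list only after reviewing the logs keeps the agent's boundaries explicit.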

4. Monitor and Improve Over Time

“Agents improve with iteration: track what they do, and refine.”

Once your agent is live, track how it behaves, collect user feedback, and improve prompts and tools over time.

This makes your agent not only functional but dependable.
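Tracking behavior is easier once you settle on a few numbers to watch. The sketch below summarizes a handful of logged interactions; the records are invented sample data and the field names (`tool`, `escalated`, `rating`) are assumptions about what your own logs might contain.

```python
from collections import Counter

# Hypothetical log records; in practice these come from your own logging.
interactions = [
    {"tool": "DiscountCalculator", "escalated": False, "rating": 5},
    {"tool": "DiscountCalculator", "escalated": False, "rating": 4},
    {"tool": "Search", "escalated": True, "rating": 2},
    {"tool": None, "escalated": False, "rating": 3},
]


def summarize(records):
    """Compute a few health metrics from logged interactions."""
    total = len(records)
    escalated = sum(1 for r in records if r["escalated"])
    return {
        "total": total,
        "self_served_rate": (total - escalated) / total,
        "average_rating": sum(r["rating"] for r in records) / total,
        "tool_usage": Counter(r["tool"] for r in records if r["tool"]),
    }


summary = summarize(interactions)
print(summary)
```

A falling self-served rate or a tool that is never used are both signals that a prompt or tool description needs another pass.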

This guide incorporates all four pillars from OpenAI’s framework, so what you’re building here is more than just functional. It’s future-ready.

Full Working Example: GPT-4o Agent with Memory + Tools

Here’s how to build a real agent with memory and a custom tool:

# File: gpt4o_agent.py
# Requires: pip install "langchain<1.0" langchain-openai python-dotenv

import os

from dotenv import load_dotenv
from langchain_openai import ChatOpenAI
from langchain.agents import initialize_agent, Tool
from langchain.agents.agent_types import AgentType
from langchain.memory import ConversationBufferMemory

# Load API key from .env
load_dotenv()
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

# Initialize GPT-4o (a chat model, so it needs ChatOpenAI rather than the completion-style OpenAI class)
llm = ChatOpenAI(model="gpt-4o", temperature=0.3, api_key=OPENAI_API_KEY)

# Custom tool: the agent passes the price in as a string
def calculate_discount(product_price):
    price = float(product_price)
    discount_price = price * 0.9
    return f"The discounted price is ${discount_price:.2f}"

# Tool configuration
tools = [
    Tool(
        name="DiscountCalculator",
        func=calculate_discount,
        description="Calculates 10% discount on a product price"
    )
]

# Memory for chat history
memory = ConversationBufferMemory(memory_key="chat_history")

# Initialize LangChain agent
agent = initialize_agent(
    tools=tools,
    llm=llm,
    agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION,
    verbose=True,
    memory=memory
)

# CLI loop
print("GPT-4o AI Agent (type 'exit' to quit)")

while True:
    user_input = input("\nYou: ")
    if user_input.lower() == "exit":
        break
    response = agent.run(user_input)
    print("\nAgent:", response)

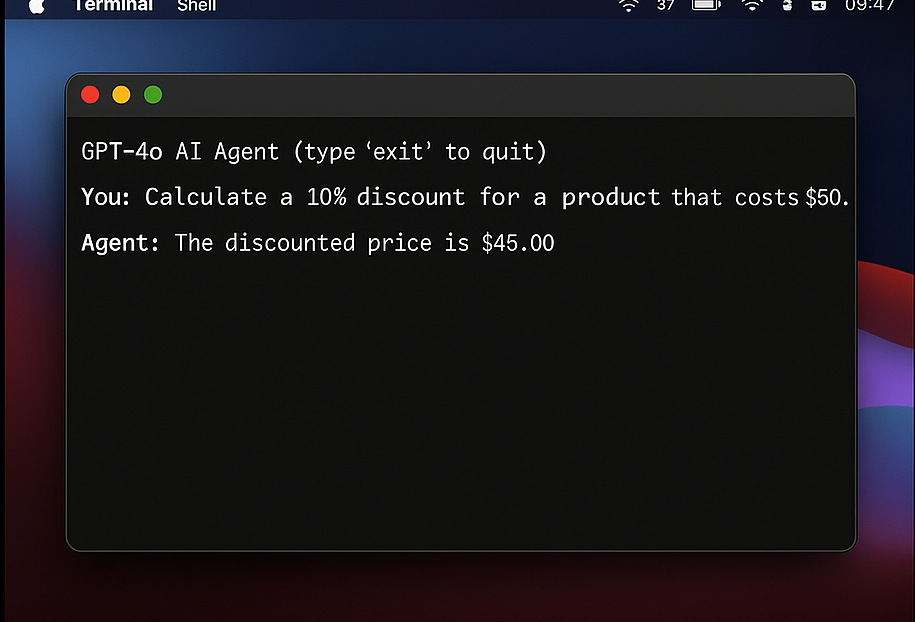

After running the code, You should see something this:
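One thing to watch in practice: the tool receives whatever string the model decides to pass, which may look like "$100" or "price: 80" rather than a bare number, and `float()` would raise on those. Below is a more forgiving variant of the discount tool. It is a sketch, not the original author's code; `parse_price` is a hypothetical helper and the error wording is an assumption.

```python
import re


def parse_price(raw):
    """Extract the first number from text such as '$1,200.50' or 'price: 80'."""
    match = re.search(r"\d+(?:\.\d+)?", str(raw).replace(",", ""))
    if match is None:
        raise ValueError(f"No price found in {raw!r}")
    return float(match.group())


def calculate_discount(product_price):
    """Apply a 10% discount, returning a helpful message on bad input."""
    try:
        price = parse_price(product_price)
    except ValueError:
        return "Please provide the product price as a number, for example 49.99."
    return f"The discounted price is ${price * 0.9:.2f}"


print(calculate_discount("$80"))
print(calculate_discount("no idea"))
```

Returning a message instead of raising lets the agent relay the problem to the user and ask again, rather than crashing the chat loop.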

Use Case: Lead Generation Agent (With Real Logic)

Here’s how to turn the agent into a digital sales assistant:

def qualify_lead():
    print("👋 Hi! I'd like to ask you a few questions to understand your project.")
    name = input("What's your name? ")
    email = input("Your email? ")
    budget = input("What's your budget? ")
    timeline = input("When do you want to start? ")
    decision = input("Are you the decision-maker? (Yes/No) ")
    return {
        "name": name,
        "email": email,
        "budget": budget,
        "timeline": timeline,
        "decision_maker": decision
    }

lead = qualify_lead()

print("📬 Lead Captured:", lead)
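Capturing answers is only half of qualification; the other half is deciding what to do with them. Here is one possible scoring step that works on the dictionary returned above. The rules, the `min_budget` threshold, and the "hot/warm/cold" labels are all hypothetical, so adjust them to your own sales process.

```python
import re


def parse_budget(text):
    """Turn input like '$5,000' or '5k' into a number; return None if unclear."""
    cleaned = text.lower().replace(",", "").replace("$", "").strip()
    match = re.fullmatch(r"(\d+(?:\.\d+)?)\s*(k?)", cleaned)
    if match is None:
        return None
    amount = float(match.group(1))
    return amount * 1000 if match.group(2) else amount


def is_valid_email(text):
    # Loose shape check only; it does not prove the mailbox exists.
    return re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", text.strip()) is not None


def score_lead(lead, min_budget=1000):
    """Label a lead 'hot', 'warm', or 'cold' using simple example rules."""
    budget = parse_budget(lead["budget"])
    has_budget = budget is not None and budget >= min_budget
    decides = lead["decision_maker"].strip().lower() in {"yes", "y"}
    if not is_valid_email(lead["email"]):
        return "cold"
    if has_budget and decides:
        return "hot"
    if has_budget or decides:
        return "warm"
    return "cold"


sample_lead = {
    "name": "Ada",
    "email": "ada@example.com",
    "budget": "$5k",
    "timeline": "next month",
    "decision_maker": "Yes",
}
print(score_lead(sample_lead))
```

Keeping the scoring in plain Python rather than in the prompt makes the rules easy to test and change, which fits the advice about using tools for logic.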

To send it to Zapier:

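import requests  # pip install requests

def send_to_zapier(data):
    # Replace with the webhook URL from your own Zap
    zap_url = "https://hooks.zapier.com/hooks/catch/your-zap-id"
    response = requests.post(zap_url, json=data, timeout=10)
    response.raise_for_status()  # surface failed deliveries instead of ignoring them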

Avoiding Common Mistakes

Overloading the LLM: Use tools for math, logic, and lookups

No Fallback: Add a manual escalation option

Long Prompts: Keep prompts simple and consistent

No Logging: Log every request/response to monitor performance
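The last two items, fallback and logging, can be handled by one thin wrapper around the agent call. The sketch below is one way to do it under stated assumptions: `answer_with_logging`, the JSON Lines log format, and the "human" keyword trigger are illustrative choices, and the two demo agents are stand-ins so the snippet runs without an API key.

```python
import json
import os
import tempfile
import time

ESCALATION_REPLY = "Connecting you with a human teammate."


def answer_with_logging(agent_fn, user_input, log_path="agent_log.jsonl"):
    """Call the agent, escalate on errors or on request, and log the exchange."""
    started = time.time()
    wants_human = "human" in user_input.lower()
    try:
        response = ESCALATION_REPLY if wants_human else agent_fn(user_input)
        error = None
    except Exception as exc:  # any agent failure becomes an escalation
        response, error = ESCALATION_REPLY, repr(exc)
    record = {
        "timestamp": started,
        "request": user_input,
        "response": response,
        "escalated": response == ESCALATION_REPLY,
        "error": error,
        "latency_seconds": round(time.time() - started, 3),
    }
    # One JSON object per line keeps the log easy to append to and parse.
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return response


def flaky_agent(text):
    # Stand-in for an agent call that fails.
    raise TimeoutError("model timed out")


demo_log = os.path.join(tempfile.mkdtemp(), "agent_log.jsonl")
reply_ok = answer_with_logging(lambda text: f"Echo: {text}", "Hi", demo_log)
reply_failed = answer_with_logging(flaky_agent, "Hi again", demo_log)

with open(demo_log, encoding="utf-8") as log_file:
    logged = [json.loads(line) for line in log_file]
print(reply_ok, "|", reply_failed, "|", len(logged), "records")
```

With the agent from this tutorial, the call inside the chat loop would become `answer_with_logging(agent.run, user_input)`.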

Bonus: Get the Full Tutorial as a Download

For following this far, here’s a bonus for you: the complete source code in PDF format, from backend agent setup to UI and Docker deployment.

Use it to enhance your project, wrap your agent in a user-friendly UI, or launch it live using Docker.

Conclusion: Where This Is Going

We’re entering a phase where every product, platform, or service will have its own AI agent. Whether it’s a SaaS dashboard or a startup support bot, the tools you’ve seen today (GPT-4o, LangChain, and agent frameworks) are the building blocks of tomorrow’s digital workforce.

The best time to learn how to build these agents? Right now.

References

OpenAI API Documentation

Learn more about how to use the OpenAI API for text generation:

https://platform.openai.com/docs/

LangChain Documentation

Official guide to orchestrating RAG pipelines with LangChain:

https://www.langchain.com/

Klarna x OpenAI Case Study

See how Klarna uses GPT-powered agents to handle millions of support tickets:

https://openai.com/customer-stories/klarna

Intercom Fin AI Agent

Discover how Intercom’s Fin uses LLMs to solve over 50% of support requests instantly:

https://www.intercom.com/blog/fin-launch/

Ngrok Official Documentation

Step-by-step guide to exposing your local apps securely using Ngrok:

https://ngrok.com/docs

Python Official Downloads Page

Download the latest version of Python for your operating system:

https://www.python.org/downloads/

W3Schools Python Tutorial

A beginner-friendly introduction to Python programming:

https://www.w3schools.com/python/
