How to Build a Simple Math Assistant Using LangChain's Tool Calling and OpenAI

Indranil Maiti


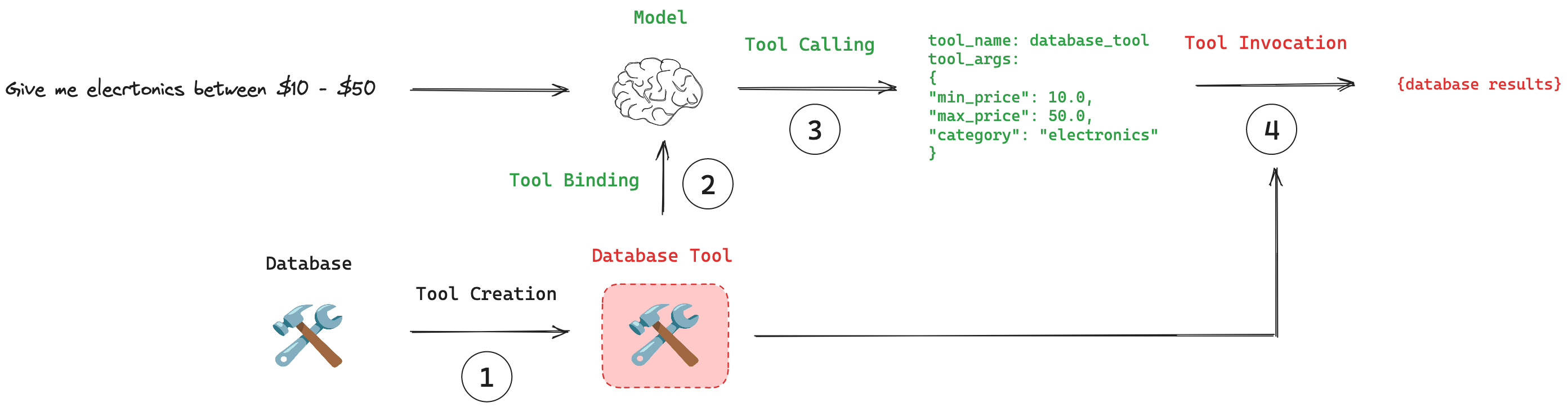

Whether you want to create a simple AI agent or a complex RAG system, tool calling is an essential step. For example, if you are building a math assistant chatbot that answers users' math questions, the chatbot has to call different operations (e.g., addition, subtraction, multiplication) depending on the query. Similarly, if you are building a complex RAG system where a database operation is needed to answer a query, your system needs to call a tool.

Therefore, learning how to resolve queries by calling external tools is an important skill.

Today, we will build a simple math assistant and see how the model can call an external tool. We will use LangChain, an open-source library, for this.

I built this live on my YouTube channel; you can check it out here.

Prerequisites

You need your own OpenAI API key, stored as OPENAI_API_KEY.

Installation

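Install LangChain first, along with the OpenAI integration package (init_chat_model needs langchain-openai for the "openai" provider) and python-dotenv (used below to load the .env file):

pip install langchain langchain-openai python-dotenv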

We will use OpenAI as the model provider, so we start the chat model with init_chat_model from langchain.chat_models. Before that, don't forget to set up your .env file (containing OPENAI_API_KEY) and load it.
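If the key is not loaded, the error only shows up later, when the model is created or called, and can be easy to misread. A minimal fail-fast check using only the standard library is sketched below; `require_env` is a hypothetical helper name, not part of LangChain or python-dotenv.

```python
import os


def require_env(name: str) -> str:
    """Return environment variable `name`, or raise a clear error if it is unset or empty.

    Hypothetical helper for illustration; call it after load_dotenv().
    """
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"{name} is not set. Add it to your .env file and call load_dotenv() first.")
    return value


# Usage (after load_dotenv()):
# openai_api_key = require_env("OPENAI_API_KEY")
```

Failing at startup keeps a missing key from surfacing as a confusing error deep inside the first model call.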

Start the LLM: OpenAI's gpt-4o-mini

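import os

from dotenv import load_dotenv
from langchain.chat_models import init_chat_model

# Load variables from .env (including OPENAI_API_KEY) into the environment
load_dotenv()
openai_api_key = os.getenv("OPENAI_API_KEY")

# temperature=0.0 makes the model's answers and tool choices more repeatable
llm = init_chat_model("gpt-4o-mini", model_provider="openai", temperature=0.0, openai_api_key=openai_api_key)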

Write the tools

Once the model is set up, let's define the tools. For demonstration purposes, we will create only four functions, using the @tool decorator from langchain_core. The @tool decorator is the simplest way to define a custom tool. By default it uses the function name as the tool name, but this can be overridden by passing a string as the first argument. The decorator also uses the function's docstring as the tool's description, so a docstring MUST be provided, and it builds the tool's argument schema from the type hints.

from typing import Union

from langchain_core.tools import tool

@tool
def add(a: int, b: int) -> int:
    """Adds a and b."""
    return a + b

@tool
def multiply(a: int, b: int) -> int:
    """Multiplies a and b."""
    return a * b

@tool
def subtract(a: int, b: int) -> int:
    """Subtracts b from a."""
    return a - b

@tool
def divide(a: int, b: int) -> Union[float, str]:
    """Divides a by b."""
    if b == 0:
        # Return a message the model can read instead of raising an exception
        return "Error: Cannot divide by zero"
    return a / b

There are several other ways to define tools; you can find them all in the LangChain documentation.

Add the tools to LLM

The next step is to tell our model about the tools we have created so that it can use them whenever necessary. LangChain provides a standardized interface for connecting tools to models: the .bind_tools() method specifies which tools are available for a model to call. We take the llm we created with OpenAI in the first step and bind the tools to it using .bind_tools().

# Creating the tool list

tools = [add, multiply, subtract, divide]

# Binding the tools to the llm

llm_with_tools = llm.bind_tools(tools)

Now we set up the prompts for the LLM: a system prompt and a user prompt. We use HumanMessage and SystemMessage from LangChain and create a list containing the system prompt and the user query.

from langchain_core.messages import HumanMessage, SystemMessage

messages = [
    SystemMessage(
        content="You are a helpful assistant! You resolve user queries by calling tools. If it is not a math problem, reply: Ask me a valid question, this is not a math problem."
    ),
    HumanMessage(content="what is 2*3"),
]

Let's ask the model the question. We send the messages to llm_with_tools with .invoke(), capture the response, and append it to the messages list.

ai_msg = llm_with_tools.invoke(messages)

messages.append(ai_msg)

Here is the beauty of LangChain: when the model makes a tool call, the returned ai_msg also records which tools were called and with which arguments. We can see this through ai_msg.tool_calls. Here, the model called the multiplication operation:

[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_ZAjJMNx5XRjZiEjWhB2y2wCI', 'type': 'tool_call'}]

Now we use this information to get the return value from the specific function the model chose. Think about the flow at this point: you asked the model a question, the model chose a function, the function produces a result, and we need to send that result back to the model so that it can give the final output.

Get the Tool Output

for tool_call in ai_msg.tool_calls:
    # Pick the tool object that matches the name the model requested
    if tool_call["name"].lower() == "add":
        selected_tool = add
    elif tool_call["name"].lower() == "multiply":
        selected_tool = multiply
    elif tool_call["name"].lower() == "subtract":
        selected_tool = subtract
    elif tool_call["name"].lower() == "divide":
        selected_tool = divide
    # Passing the whole tool call (not just its args) returns a ToolMessage
    tool_msg = selected_tool.invoke(tool_call)
    print(tool_msg)
    # Add the tool result to the conversation history
    messages.append(tool_msg)

In the for loop, we first check which function was called and then use selected_tool.invoke(tool_call) to get the output from the tool. Here is what tool_msg looks like:

#Output

content='6' name='multiply' tool_call_id='call_N69jTaXjCoVMZjudHn8mBBGd'

Clearly, the multiply tool has been called. Now we must give this result back to the model so that it can produce the final output. Inside the loop, we already appended the result to the messages list with messages.append(tool_msg). Finally, we pass the whole message list to the model and get the output.

ai_message = llm_with_tools.invoke(messages)

ai_message

Final output

AIMessage(content='The result of \\( 2 \\times 3 \\) is 6.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 18, 'prompt_tokens': 136, 'total_tokens': 154, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_0392822090', 'id': 'chatcmpl-BRfRlVmBhg7sRxqJyJOdo1CMiStGg', 'finish_reason': 'stop', 'logprobs': None}, id='run-7b2cb652-bd9e-4155-b538-a5a1dc6fbe94-0', usage_metadata={'input_tokens': 136, 'output_tokens': 18, 'total_tokens': 154, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})

Full Code:

#https://python.langchain.com/docs/how_to/tool_results_pass_to_model/
#https://python.langchain.com/docs/how_to/tool_calling/

import os

from dotenv import load_dotenv
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_core.tools import tool
from langchain.chat_models import init_chat_model

# Load OPENAI_API_KEY from the .env file
load_dotenv()
openai_api_key = os.getenv("OPENAI_API_KEY")

llm = init_chat_model("gpt-4o-mini", model_provider="openai", temperature=0.0, openai_api_key=openai_api_key)

@tool
def add(a: int, b: int) -> int:
    """Adds a and b."""
    return a + b

@tool
def multiply(a: int, b: int) -> int:
    """Multiplies a and b."""
    return a * b

# Creating the tool list
tools = [add, multiply]

# Binding the tools to the llm
llm_with_tools = llm.bind_tools(tools)

messages = [
    SystemMessage(
        content="You are a helpful assistant! You resolve user queries by calling tools. If it is not a math problem, reply: Ask me a valid question, this is not a math problem."
    ),
    HumanMessage(content="what is 2*3"),
]

ai_msg = llm_with_tools.invoke(messages)
messages.append(ai_msg)
print(ai_msg.tool_calls)

for tool_call in ai_msg.tool_calls:
    if tool_call["name"].lower() == "add":
        selected_tool = add
    elif tool_call["name"].lower() == "multiply":
        selected_tool = multiply
    # Run the tool; the result comes back as a ToolMessage
    tool_msg = selected_tool.invoke(tool_call)
    print(tool_msg)
    messages.append(tool_msg)

# Send the tool results back to the model for the final answer
ai_message = llm_with_tools.invoke(messages)
print(ai_message.content)

If you want to build a chatbot where users can ask the model questions repeatedly, here is the full version:

import os
from typing import Union

from dotenv import load_dotenv
from langchain.chat_models import init_chat_model
from langchain_core.messages import HumanMessage, SystemMessage, ToolMessage
from langchain_core.tools import tool

# Load OPENAI_API_KEY from the .env file
load_dotenv()
openai_api_key = os.getenv("OPENAI_API_KEY")

@tool
def add(a: int, b: int) -> int:
    """Adds a and b."""
    return a + b

@tool
def multiply(a: int, b: int) -> int:
    """Multiplies a and b."""
    return a * b

@tool
def subtract(a: int, b: int) -> int:
    """Subtracts b from a."""
    return a - b

@tool
def divide(a: int, b: int) -> Union[float, str]:
    """Divides a by b."""
    if b == 0:
        return "Error: Cannot divide by zero"
    return a / b

def create_math_chat():
    # Initialize the language model
    llm = init_chat_model(
        "gpt-4o-mini",
        model_provider="openai",
        temperature=0.5,
        openai_api_key=openai_api_key,
    )

    # Create the list of tools and a name -> tool lookup
    tools = [add, multiply, subtract, divide]
    tools_by_name = {t.name: t for t in tools}

    # Bind the tools to the LLM
    llm_with_tools = llm.bind_tools(tools)

    # Initialize the message history with a system message
    messages = [
        SystemMessage(
            content="You are a helpful math assistant. You can solve simple math problems by calling tools. For mathematical operations, use the appropriate tool. If the user's query is not a math problem, politely respond that you're specialized in math operations."
        )
    ]

    print("Math Chat started! Type 'exit' to end the chat.")
    print("Example queries: 'what is 5*7?', 'can you add 123 and 456?'")

    # Chat loop
    while True:
        # Get user input
        user_input = input("\nYou: ")

        # Check if user wants to exit
        if user_input.lower() in ["exit", "quit", "bye"]:
            print("Assistant: Thank you for chatting! Goodbye.")
            break

        # Add user message to history
        messages.append(HumanMessage(content=user_input))

        # Get AI response
        ai_msg = llm_with_tools.invoke(messages)
        messages.append(ai_msg)

        # Display AI's initial response (often empty when the model calls a tool)
        print(f"\nAssistant: {ai_msg.content}")

        # Keep running tools until the model replies without requesting any
        while ai_msg.tool_calls:
            print("(Using tools to calculate...)")
            for tool_call in ai_msg.tool_calls:
                # Select the appropriate tool
                tool_name = tool_call["name"].lower()
                selected_tool = tools_by_name.get(tool_name)
                if selected_tool is None:
                    # OpenAI expects a tool message for every tool call id, so report unknown tools instead of skipping them
                    tool_msg = ToolMessage(content=f"Error: unknown tool '{tool_name}'", tool_call_id=tool_call["id"])
                else:
                    # Invoke the tool; passing the whole tool call returns a ToolMessage
                    tool_msg = selected_tool.invoke(tool_call)
                messages.append(tool_msg)

                # Print tool result for debugging (optional)
                print(f"(Tool '{tool_name}' result: {tool_msg.content})")

            # Get the next AI response now that the tool results are in the history
            ai_msg = llm_with_tools.invoke(messages)
            messages.append(ai_msg)

            # Display the response
            print(f"\nAssistant: {ai_msg.content}")

if __name__ == "__main__":
    create_math_chat()

Sample output:

Math Chat started! Type 'exit' to end the chat.

Example queries: 'what is 5*7?', 'can you add 123 and 456?'

Assistant:

(Using tools to calculate...)

(Tool 'add' result: 5)

Assistant: The addition of 2 and 3 is 5.

Assistant:

(Using tools to calculate...)

(Tool 'multiply' result: 100000)

Assistant: The multiplication of 200 and 500 is 100,000.

Assistant: Thank you for chatting! Goodbye.

Indranil, signing off….

Watch it live here.

Don’t forget to share your thoughts and remarks on this, and tell me what you are building. I read every comment very carefully.


Written by

Indranil Maiti


I am a MERN stack developer and an aspiring AI application engineer. Let's grab a cup of coffee and chat.