Optimizing AWS Lambda Performance: Cold Start Mitigation Techniques

Maitry Patel

When you first start building with AWS Lambda, everything feels fast and magical. Code runs without servers, scales automatically, and costs less when idle. What’s not to love? But then comes the hiccup that almost every serverless developer faces sooner or later: the cold start.

It’s that subtle, annoying delay that creeps in when your function hasn’t been used for a while, and in high-performance applications, even a few hundred milliseconds can feel like forever.

In this blog, we will break down what cold starts are, why they happen, and most importantly, how to outsmart them using some smart, practical optimization techniques.

What is a Cold Start?

A cold start occurs when AWS needs to create a new execution environment for your Lambda function:

AWS downloads your function code.

Initializes the runtime.

Runs any initialization code outside your handler.

This entire setup can introduce extra latency, especially in:

VPC-connected Lambdas

Large deployment packages

Rarely invoked functions

Conversely, a warm start reuses an existing environment—no noticeable delay.
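To make the difference visible, here is a minimal sketch that tags each invocation as cold or warm. It relies on the fact that module-level code runs once per execution environment; the variable names and the shape of the returned dictionary are illustrative assumptions, not part of any AWS API.

```python
import time

# Module-level code runs once per execution environment, i.e. during a cold start.
_ENVIRONMENT_STARTED_AT = time.time()
_is_cold_start = True


def handler(event, context):
    """Report whether this invocation had to create a new execution environment."""
    global _is_cold_start
    was_cold = _is_cold_start
    _is_cold_start = False  # every later call in this environment is a warm start
    return {
        "cold_start": was_cold,
        "environment_age_seconds": round(time.time() - _ENVIRONMENT_STARTED_AT, 3),
    }
```

Calling `handler({}, None)` twice in the same local process mimics one cold start followed by one warm start: the first result has `cold_start` set to `True`, the second `False`.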

What Causes a Cold Start?

| Factor | Impact on cold start time |
| --- | --- |
| Language runtime | Some runtimes (Java, .NET) start more slowly than others (Node.js, Python) |
| Package size | Larger deployment package = longer cold start |
| VPC networking | Functions inside a VPC can take longer because of ENI (Elastic Network Interface) setup |
| Initialization complexity | Heavy code outside the handler increases cold start time |
| Memory configuration | Higher memory = more CPU = faster cold start |

Techniques to Mitigate Cold Starts

1. Choose a Faster Runtime

If possible, pick a runtime that starts quickly:

Best: Node.js, Python, Go

Slower: Java, .NET (but can be optimized)

Pro tip: For ultra-low latency, Go and Rust are excellent.

2. Minimize Package Size

Large functions take longer to download and initialize.

Use Lambda Layers to separate common libraries.

Remove unnecessary files (e.g., README, test data).

Use tools like webpack, esbuild, or the Serverless Framework to bundle and tree-shake your code.

Pro tip: Keep deployment packages < 10 MB when possible.
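Before trimming a package, it helps to know where the bytes are. The following is a rough sketch that walks a local build directory and lists its largest files against the 10 MB target mentioned above. The function names are hypothetical, and it measures unpacked size on disk, not the size of the zipped upload.

```python
import os

SIZE_BUDGET_BYTES = 10 * 1024 * 1024  # the 10 MB target from the tip above


def largest_files(build_dir, top_n=5):
    """Return (total_bytes, [(size, relative_path), ...]) for a build directory."""
    sizes = []
    for root, _dirs, files in os.walk(build_dir):
        for name in files:
            path = os.path.join(root, name)
            sizes.append((os.path.getsize(path), os.path.relpath(path, build_dir)))
    sizes.sort(reverse=True)  # biggest files first
    total = sum(size for size, _ in sizes)
    return total, sizes[:top_n]


def report(build_dir):
    """Print the total size and the biggest contributors."""
    total, biggest = largest_files(build_dir)
    status = "within" if total <= SIZE_BUDGET_BYTES else "over"
    print(f"{total / 1_048_576:.2f} MB unpacked ({status} the 10 MB budget)")
    for size, rel_path in biggest:
        print(f"{size / 1024:10.1f} KB  {rel_path}")
```

Running `report("build")` on your own output folder (the folder name is an assumption) usually points straight at the dependency or data file worth removing or moving into a layer.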

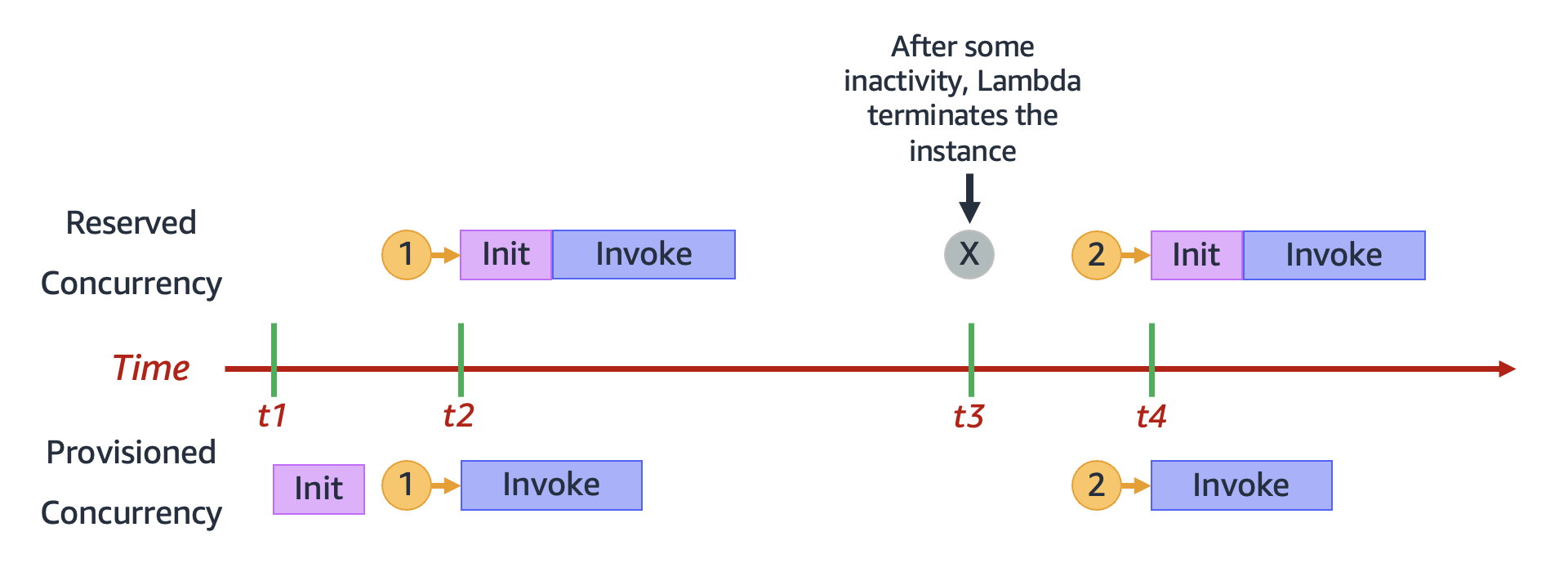

3. Use Provisioned Concurrency

Provisioned Concurrency keeps a specified number of Lambda instances always warm and ready.

How it works:

Pre-initializes Lambda environments.

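Avoids cold starts for requests served by the pre-initialized environments; traffic beyond the provisioned level falls back to on-demand environments, which can still cold start.

Can scale on a schedule or with utilization when paired with Application Auto Scaling.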

When to use:

Customer-facing APIs

Real-time processing (chat apps, trading apps)

Critical SLAs (Service Level Agreements)

Note: Provisioned Concurrency costs extra but is cheaper than losing customers to latency.
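As a sketch of how this can be set up from code, the snippet below builds the arguments for Lambda's `PutProvisionedConcurrencyConfig` API and sends them with boto3's `put_provisioned_concurrency_config`. The helper names, and any function name or alias you pass in, are assumptions for illustration. Provisioned Concurrency is configured on a published version or alias, so the helper rejects `$LATEST`.

```python
def provisioned_concurrency_request(function_name, alias, instances):
    """Build arguments for Lambda's PutProvisionedConcurrencyConfig API."""
    if instances < 1:
        raise ValueError("instances must be at least 1")
    if alias == "$LATEST":
        # Provisioned concurrency applies to a published version or alias.
        raise ValueError("use a published version or alias, not $LATEST")
    return {
        "FunctionName": function_name,
        "Qualifier": alias,
        "ProvisionedConcurrentExecutions": instances,
    }


def apply_provisioned_concurrency(function_name, alias, instances):
    """Send the request with boto3 (needs AWS credentials and permissions)."""
    import boto3  # imported lazily so the builder above works without the SDK

    client = boto3.client("lambda")
    return client.put_provisioned_concurrency_config(
        **provisioned_concurrency_request(function_name, alias, instances)
    )
```

For example, `apply_provisioned_concurrency("checkout-api", "live", 5)` would ask Lambda to keep five environments initialized for a hypothetical `live` alias. Keeping the argument builder separate makes the validation easy to test without touching AWS.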

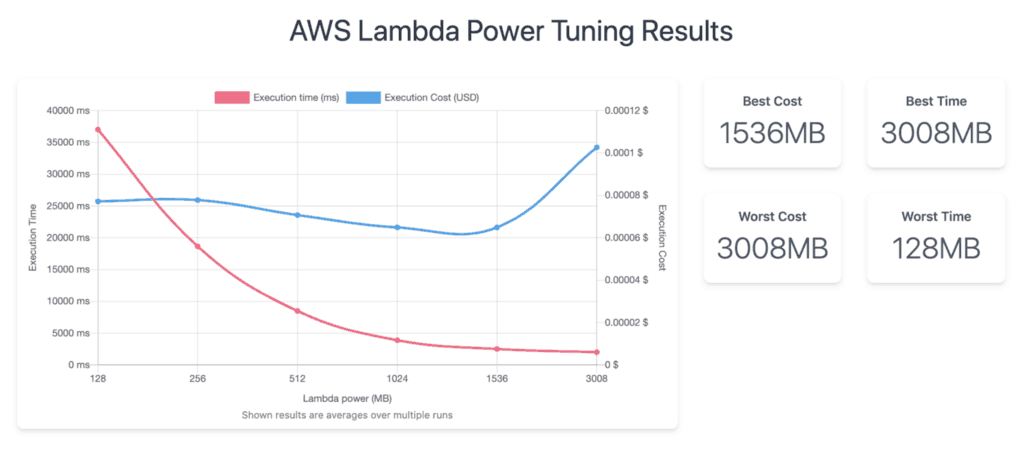

4. Tune Memory and CPU

AWS Lambda automatically allocates CPU power proportional to memory.

More memory = faster execution AND faster cold starts.

Experiment by increasing memory even if your function doesn’t use much RAM—because you get more CPU!
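Because Lambda bills compute in GB-seconds (memory multiplied by duration), a faster run at a higher memory setting does not have to cost more. The sketch below compares a few settings; the measurements are made-up numbers for illustration, and per-request charges are ignored.

```python
def gb_seconds(memory_mb, duration_ms):
    """Billable compute for one invocation, in GB-seconds."""
    return (memory_mb / 1024) * (duration_ms / 1000)


# Hypothetical measurements: (memory in MB, average duration in ms).
measurements = [(512, 800), (1024, 400), (2048, 250)]

for memory_mb, duration_ms in measurements:
    cost = gb_seconds(memory_mb, duration_ms)
    print(f"{memory_mb:>5} MB  {duration_ms:>4} ms  {cost:.3f} GB-s")
```

In this invented data set, moving from 512 MB to 1024 MB halves the latency for the same 0.4 GB-seconds, while 2048 MB is faster still but uses 0.5 GB-seconds per invocation. Your own numbers will differ, which is why measuring at several settings is worth the effort.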

5. Keep Initialization Code Lightweight

Defer heavy work that not every invocation needs: create it lazily on first use instead of at import time in the global scope.

    # BAD: Heavy work outside handler
    db_connection = create_heavy_db_connection()

    def handler(event, context):
        result = db_connection.query(...)
        return result

    # GOOD: Initialize inside handler or lazy load
    db_connection = None

    def handler(event, context):
        global db_connection
        if db_connection is None:
            db_connection = create_heavy_db_connection()
        result = db_connection.query(...)
        return result

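This way, the connection is created once, on the first invocation that actually needs it, and then reused by later warm invocations. Cold starts that never touch the database skip the cost entirely.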
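The same lazy-loading idea can be written without a global flag by caching a factory function. This is a sketch using `functools.lru_cache` from the standard library; `FakeConnection` is a stand-in invented here so the example runs anywhere, and the `needs_db` event key is an assumption.

```python
import functools


class FakeConnection:
    """Stand-in for an expensive client; counts how often it is created."""

    created = 0

    def __init__(self):
        FakeConnection.created += 1

    def query(self, sql):
        return f"rows for: {sql}"


@functools.lru_cache(maxsize=None)
def get_connection():
    # Runs on the first call only; later calls return the cached object.
    return FakeConnection()


def handler(event, context):
    if event.get("needs_db"):
        return get_connection().query("SELECT 1")
    return "no database needed"
```

Invocations that never set `needs_db` never create a connection, and repeated database calls in the same environment share a single one.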

6. Optimize VPC Configuration

Use VPC Endpoints and PrivateLink for faster networking.

Prefer AWS-managed networking where possible.

Reduce dependency on VPC unless necessary (e.g., databases).

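In 2019, AWS announced improved VPC networking for Lambda, in which network interfaces are created when the function is configured rather than at invocation time. This cut VPC cold start times significantly, but VPC-attached functions still carry extra networking setup, so stay out of a VPC unless you need one.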
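As one example of the VPC endpoint suggestion, the sketch below builds the arguments for EC2's `CreateVpcEndpoint` API to add an S3 gateway endpoint, so a VPC-attached function can reach S3 over a private route. The helper names are hypothetical, and the VPC ID, region, and route table IDs are values you would supply from your own account.

```python
def s3_gateway_endpoint_request(vpc_id, region, route_table_ids):
    """Build arguments for EC2's CreateVpcEndpoint API (S3 gateway endpoint)."""
    return {
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "VpcEndpointType": "Gateway",
        "RouteTableIds": list(route_table_ids),
    }


def create_s3_gateway_endpoint(vpc_id, region, route_table_ids):
    """Create the endpoint with boto3 (needs AWS credentials and permissions)."""
    import boto3  # imported lazily so the builder above works without the SDK

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.create_vpc_endpoint(
        **s3_gateway_endpoint_request(vpc_id, region, route_table_ids)
    )
```

This mainly shortens the network path for calls the function makes after it has started, so treat it as a complement to the other techniques rather than a direct cold start fix.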

| Optimization | Benefit |
| --- | --- |
| Provisioned Concurrency | Avoids cold starts for requests served by provisioned instances |
| Smaller package size | Reduces startup time |
| Faster runtime | Speeds initialization |
| Higher memory allocation | Boosts CPU and reduces delay |
| SnapStart for Java | Resumes from a snapshot of the initialized environment, cutting Java startup time |
| Efficient VPC usage | Cuts network initialization time |

Wrapping Up: Giving Cold Starts the Boot

Cold starts don’t have to be a headache. With a few tricks up your sleeve, like provisioned concurrency, trimming down your code, and managing those initialization tasks, you can keep things running smoothly and fast.

By keeping an eye on your functions with tools like Amazon CloudWatch, you’ll always stay one step ahead. Lambda is a powerful tool, and with these optimizations, you’ll make sure it’s running at its best, letting you focus on building great experiences without the lag.
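One practical way to watch cold starts: for on-demand invocations, the `REPORT` line that Lambda writes to CloudWatch Logs includes an `Init Duration` field when the invocation involved a cold start. The sketch below counts those lines in log text you have already exported; the sample lines are illustrative and the function name is an assumption.

```python
import re

INIT_DURATION = re.compile(r"Init Duration: ([\d.]+) ms")


def cold_start_stats(log_lines):
    """Summarize cold starts from Lambda REPORT log lines."""
    reports = [line for line in log_lines if line.startswith("REPORT")]
    init_ms = []
    for line in reports:
        match = INIT_DURATION.search(line)
        if match:  # only cold starts carry an Init Duration field
            init_ms.append(float(match.group(1)))
    return {
        "invocations": len(reports),
        "cold_starts": len(init_ms),
        "max_init_ms": max(init_ms, default=0.0),
    }


# Illustrative log lines, shortened request IDs.
sample_lines = [
    "START RequestId: aaa Version: $LATEST",
    "REPORT RequestId: aaa\tDuration: 35.20 ms\tBilled Duration: 36 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 70 MB\tInit Duration: 412.50 ms",
    "REPORT RequestId: bbb\tDuration: 4.10 ms\tBilled Duration: 5 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 70 MB",
]

print(cold_start_stats(sample_lines))
```

Tracking the share of cold starts and the worst init time before and after a change gives you a simple way to check whether an optimization actually helped.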

“When your Lambda functions go from cold start to full speed ahead!”

“Ready to see your Lambda functions race at full speed? What optimization techniques have you tried, and which ones worked best for you?”
