Securing Infrastructure by Hardening IaC Configurations

Siri Varma Vegiraju

Infrastructure as Code (IaC) has become the de facto standard for managing infrastructure resources in many organizations. According to MarketsandMarkets, a B2B research firm, the IaC market is projected to reach USD 2.3 billion by 2027.

What Is Infrastructure as Code?

Before IaC, a developer would provision a resource such as a virtual machine through the cloud provider's GUI, clicking through different configurations and settings. That is easy for a single instance, but modern workloads span thousands of VMs and hundreds of storage resources, and that is for just one region. To achieve high availability, the same stamp needs to be created in multiple regions and availability zones. Organizations first automated this process with scripts, but scripts brought their own challenges, such as versioning and, most importantly, the redundancy of each team repeatedly writing scripts from scratch.

Infrastructure as Code emerged as a solution to these problems. The term was first introduced in 2009 by Puppet, which argued that new approaches were required to scale infrastructure and adapt to increasing application system complexity.

Example of an IaC template:

JSON

    {
      "type": "Microsoft.Storage/storageAccounts",
      "apiVersion": "2022-09-01",
      "name": "[parameters('storageAccountName')]",
      "location": "[resourceGroup().location]",
      "sku": {
        "name": "Standard_LRS"
      },
      "kind": "StorageV2",
      "identity": {
        "type": "SystemAssigned"
      },
      "properties": {
        "allowBlobPublicAccess": true
      },
      "resources": []
    }

What we see above is an ARM (Azure Resource Manager) template that provisions a storage account in Azure. GCP and AWS have their own template formats. With multi-cloud gaining a lot of traction, vendor-agnostic tools like Terraform are widely used.

We solved the scale and complexity problem, but the security problem remains. According to Gartner, by 2025, 99% of cloud security failures will be due to customer misconfigurations.

Security Risks With IaC

Increased Attack Surface

Because templates are shared to increase reusability, a small bug in one affects every deployment that uses it.

For example, in the JSON above, allowBlobPublicAccess is set to true, so any deployment that uses the template will allow public access, which can be a security risk.
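To make the risk concrete, here is a minimal Python sketch that scans a parsed template for storage accounts with public blob access enabled. The function name and the wrapper template with a top-level "resources" array are assumptions made for illustration; only the resource fields come from the example above.

```python
import json
from typing import Any


def find_public_storage_accounts(template: dict[str, Any]) -> list[str]:
    """Return the names of storage accounts that allow public blob access."""
    flagged = []
    for resource in template.get("resources", []):
        if resource.get("type") != "Microsoft.Storage/storageAccounts":
            continue
        properties = resource.get("properties", {})
        # Only an explicit boolean true is flagged in this sketch.
        if properties.get("allowBlobPublicAccess") is True:
            flagged.append(resource.get("name", "<unnamed>"))
    return flagged


# Hypothetical shared template wrapping the resource shown earlier.
shared_template = json.loads("""
{
  "resources": [
    {
      "type": "Microsoft.Storage/storageAccounts",
      "name": "[parameters('storageAccountName')]",
      "properties": {"allowBlobPublicAccess": true}
    }
  ]
}
""")

print(find_public_storage_accounts(shared_template))
```

Every team that reuses the shared template inherits the same finding, which is why a single flag in a shared file widens the attack surface.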

Excessive Privileges

Deploying infrastructure resources requires an identity with high privileges. If this identity is compromised, threat actors could gain privileged access to the environment.
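One way to reason about this risk is to review which broad roles the deployment identity holds and at what scope. The sketch below is illustrative only: RoleAssignment is a hypothetical, simplified record, and treating these three built-in Azure roles as "broad" at subscription scope is an assumed policy, not a rule from any particular tool.

```python
from dataclasses import dataclass

# Built-in Azure roles that grant broad control; flagging them is a policy choice.
BROAD_ROLES = {"Owner", "Contributor", "User Access Administrator"}


@dataclass(frozen=True)
class RoleAssignment:
    """Hypothetical, simplified view of a role assignment."""
    principal: str
    role: str
    scope: str  # e.g. "/subscriptions/<id>/resourceGroups/<name>"


def is_subscription_wide(scope: str) -> bool:
    """True when the scope is a whole subscription rather than something narrower."""
    parts = scope.strip("/").split("/")
    return len(parts) == 2 and parts[0].lower() == "subscriptions"


def overly_privileged(assignments: list[RoleAssignment]) -> list[RoleAssignment]:
    """Return assignments that pair a broad role with a subscription-wide scope."""
    return [
        a for a in assignments
        if a.role in BROAD_ROLES and is_subscription_wide(a.scope)
    ]


assignments = [
    RoleAssignment("deploy-pipeline", "Owner", "/subscriptions/0000"),
    RoleAssignment("deploy-pipeline", "Contributor", "/subscriptions/0000/resourceGroups/app-rg"),
]
for finding in overly_privileged(assignments):
    print(f"Review: {finding.principal} has {finding.role} on {finding.scope}")
```

Narrowing the scope to a resource group, as in the second assignment, limits what a compromised identity can reach.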

So how can we help organizations keep their infrastructure secure?

Infrastructure as Code Security

The most basic way to identify misconfigurations is static code analysis. Let's consider an example.

Imagine there is a baseline that states that storage resources should not have public access:

| Control Domain | ASB Control Title | Guidance | Responsibility |
| --- | --- | --- | --- |
| Network Security | Secure cloud services with network controls | Disable public network access by either using Azure Storage service-level IP ACL filtering or a toggling switch for public network access. | Customer |

The baseline can be converted to code.

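Python

    from typing import Any

    # Assumption: the base class and category enum follow Checkov's custom-check API.
    from checkov.common.models.enums import CheckCategories
    from checkov.terraform.checks.resource.base_resource_value_check import BaseResourceValueCheck


    class StorageAccountDisablePublicAccess(BaseResourceValueCheck):
        def __init__(self) -> None:
            name = "Ensure that Storage accounts disallow public access"
            id = "DISABLE_PUBLIC_ACCESS"
            supported_resources = ("azurestorageaccount",)
            # Assumed category for this check
            categories = (CheckCategories.NETWORKING,)
            super().__init__(
                name=name,
                id=id,
                categories=categories,
                supported_resources=supported_resources,
            )

        def get_inspected_key(self) -> str:
            # Property whose value the check inspects
            return "allowBlobPublicAccess"

        def get_expected_values(self) -> list[Any]:
            # Only an explicit False is compliant
            return [False]


    check = StorageAccountDisablePublicAccess()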

Now suppose your organization uses Terraform to manage this resource:

    resource "azapi_resource" "symbolicname" {
      type     = "Microsoft.Storage/storageAccounts@2023-01-01"
      name     = "string"
      location = "string"

      identity {
        type         = "string"
        identity_ids = []
      }

      body = jsonencode({
        properties = {
          allowBlobPublicAccess = true
        }
      })
    }

Static Analysis

The Terraform resource definition is assessed against the baseline to ensure compliance, and this check can be integrated into the build so that insecure configurations are not deployed to production. This is a shift-left approach: teams are notified of misconfigurations during development rather than after deployment, which allows risks to be mitigated before changes are deployed.
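The build-check idea can be sketched in plain Python without any scanning framework. Everything here is an assumption made for illustration: the Baseline and Finding types, the resource dictionary shape, and the decision to treat a missing key as non-compliant. A real scanner would parse the Terraform files itself.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Baseline:
    check_id: str
    resource_type: str
    inspected_key: str
    expected_values: tuple


@dataclass(frozen=True)
class Finding:
    check_id: str
    resource_name: str
    actual: Any


def matches(actual: Any, expected_values: tuple) -> bool:
    """Strict match: the string "false" or the number 0 does not count as False."""
    return any(type(actual) is type(e) and actual == e for e in expected_values)


def evaluate(baseline: Baseline, resources: list[dict[str, Any]]) -> list[Finding]:
    """Compare each matching resource against the baseline."""
    findings = []
    for resource in resources:
        if resource.get("type") != baseline.resource_type:
            continue
        # A missing key yields None, which is treated as non-compliant here.
        actual = resource.get("properties", {}).get(baseline.inspected_key)
        if not matches(actual, baseline.expected_values):
            findings.append(Finding(baseline.check_id, resource.get("name", "<unnamed>"), actual))
    return findings


def build_exit_code(findings: list[Finding]) -> int:
    """A non-zero exit code fails the build step."""
    return 1 if findings else 0


baseline = Baseline(
    check_id="DISABLE_PUBLIC_ACCESS",
    resource_type="Microsoft.Storage/storageAccounts",
    inspected_key="allowBlobPublicAccess",
    expected_values=(False,),
)
# Hypothetical parsed form of the Terraform resource above.
parsed_resources = [
    {
        "type": "Microsoft.Storage/storageAccounts",
        "name": "symbolicname",
        "properties": {"allowBlobPublicAccess": True},
    }
]
findings = evaluate(baseline, parsed_resources)
print(findings, build_exit_code(findings))
```

Returning an exit code rather than raising keeps the sketch easy to wire into a pipeline step, where a non-zero status blocks the deployment.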

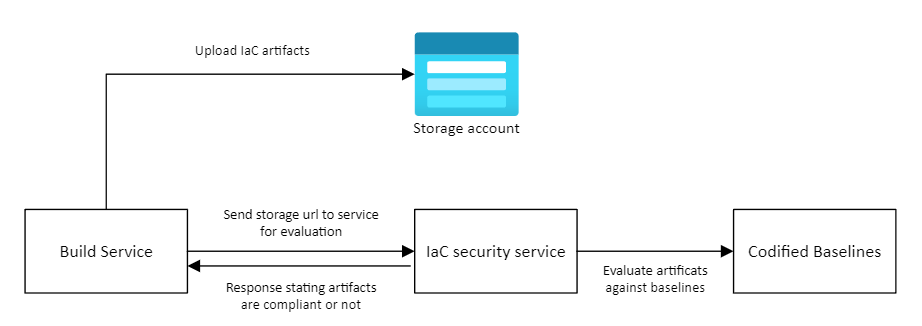

The above diagram describes a more sophisticated approach in which a dedicated "IaC Security Service" performs the evaluation. The build process uploads its artifacts to a storage account and asks the security service to check them for misconfigurations. The service then evaluates the artifacts against the baselines and tells the build whether the configuration is compliant.

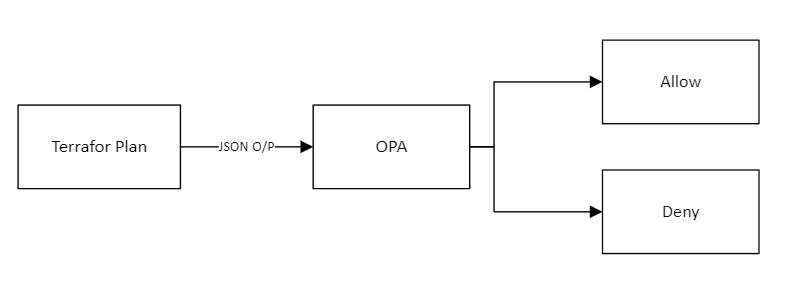
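The interaction between the build and the security service can be simulated in memory. This is only a sketch of the flow described above: ArtifactStore, IacSecurityService, and run_build_check are hypothetical names, the dictionary stands in for a real storage account, and the baseline is reduced to a key and its required value.

```python
import json
import uuid
from typing import Any


class ArtifactStore:
    """In-memory stand-in for the storage account that holds build artifacts."""

    def __init__(self) -> None:
        self._blobs: dict[str, str] = {}

    def upload(self, content: str) -> str:
        artifact_id = str(uuid.uuid4())
        self._blobs[artifact_id] = content
        return artifact_id

    def download(self, artifact_id: str) -> str:
        return self._blobs[artifact_id]


class IacSecurityService:
    """Evaluates uploaded artifacts against baselines of the form {key: required value}."""

    def __init__(self, store: ArtifactStore, baselines: dict[str, Any]) -> None:
        self._store = store
        self._baselines = baselines

    def evaluate(self, artifact_id: str) -> dict[str, Any]:
        resources = json.loads(self._store.download(artifact_id))
        violations = [
            f"{res.get('name', '<unnamed>')}: {key} must be {expected!r}"
            for res in resources
            for key, expected in self._baselines.items()
            if res.get("properties", {}).get(key) != expected
        ]
        return {"compliant": not violations, "violations": violations}


def run_build_check(store: ArtifactStore, service: IacSecurityService, artifact_json: str) -> bool:
    """Build step: upload the artifact, request an evaluation, report the verdict."""
    artifact_id = store.upload(artifact_json)
    verdict = service.evaluate(artifact_id)
    for violation in verdict["violations"]:
        print("BLOCKED:", violation)
    return verdict["compliant"]


store = ArtifactStore()
service = IacSecurityService(store, {"allowBlobPublicAccess": False})
artifact = json.dumps([{"name": "symbolicname", "properties": {"allowBlobPublicAccess": True}}])
print(run_build_check(store, service, artifact))
```

Keeping the evaluation behind a service means baselines can be updated in one place without changing every build pipeline.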

What we have discussed so far is static analysis. Open Policy Agent (OPA) allows policy decisions to be made at run time.

Dynamic Analysis

OPA lets you define policies against which your input is evaluated. The result of the evaluation is an allow or a deny.

The Rego policy that OPA will use:

Rego

    package storage_account_public_access

    # Deny if public network access is enabled
    deny[msg] {
        input.resource.type == "azurerm_storage_account"
        input.resource.config.public_network_access_enabled == true
        msg := "Public network access to the storage account must be disabled."
    }

The JSON output from the plan is sent to OPA, which checks whether public_network_access_enabled is set to true. If it is, the action is denied.
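The policy above expects input shaped as input.resource.type and input.resource.config, so the plan JSON has to be reshaped before evaluation. The sketch below assumes the plan was exported with terraform show -json and reads its resource_changes entries; the helper names are hypothetical, and the deny function only mirrors the Rego rule locally for illustration. In practice each input document would be evaluated by OPA itself.

```python
from typing import Any, Iterator


def opa_inputs_from_plan(plan: dict[str, Any]) -> Iterator[dict[str, Any]]:
    """Yield one input document per planned resource, in the shape the policy reads."""
    for change in plan.get("resource_changes", []):
        # "after" is null for resources that are being destroyed.
        after = (change.get("change") or {}).get("after") or {}
        yield {"resource": {"type": change.get("type"), "config": after}}


def deny(opa_input: dict[str, Any]) -> list[str]:
    """Python mirror of the Rego deny rule, for illustration only."""
    resource = opa_input.get("resource", {})
    if (
        resource.get("type") == "azurerm_storage_account"
        and resource.get("config", {}).get("public_network_access_enabled") is True
    ):
        return ["Public network access to the storage account must be disabled."]
    return []


# Trimmed, hypothetical plan document.
plan = {
    "resource_changes": [
        {
            "address": "azurerm_storage_account.example",
            "type": "azurerm_storage_account",
            "change": {"after": {"public_network_access_enabled": True}},
        },
        {
            "address": "azurerm_resource_group.example",
            "type": "azurerm_resource_group",
            "change": {"after": {"name": "rg"}},
        },
    ]
}

messages = [msg for doc in opa_inputs_from_plan(plan) for msg in deny(doc)]
print(messages)
```

An empty list of messages corresponds to an allow; any message corresponds to a deny.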

Cloud Security Posture Management

While code scanning helps to a certain extent, infrastructure resources can still be deployed through the GUI, scripts (without using IaC), and other avenues. For these scenarios, we need tools that continuously scan the organization's cloud environment and alert teams about misconfigurations. According to a recent survey, using a CSPM tool can reduce security incidents due to misconfigurations by 80%.

Providers like AWS and Microsoft offer services that monitor cloud environments and prioritize risk based on attack surface. With multi-cloud workloads growing, customers are looking for provider-agnostic tools. Prisma Cloud and Tenable have offerings in this space.

When selecting a solution in this space, it's preferable to choose one with an agentless offering. An agentless solution scans the infrastructure through the cloud provider's API, rather than deploying an agent on the resources.
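As a rough illustration of the agentless model, the scanner below only needs a callable that lists resources, standing in for the cloud provider's inventory API. Nothing is installed on the resources themselves. The function names, the resource dictionary shape, and the fake inventory are all assumptions for this sketch.

```python
from typing import Any, Callable, Iterable

Resource = dict[str, Any]


def scan_environment(
    list_resources: Callable[[], Iterable[Resource]],
    rules: dict[str, Callable[[Resource], bool]],
) -> list[str]:
    """Pull the current inventory through the provider API and apply every rule."""
    alerts = []
    for resource in list_resources():
        for rule_name, is_violation in rules.items():
            if is_violation(resource):
                alerts.append(f"{resource['id']}: {rule_name}")
    return alerts


def fake_inventory() -> list[Resource]:
    """Hypothetical stand-in for a provider inventory call."""
    return [
        {"id": "storage-a", "kind": "storage", "public_access": True},
        {"id": "storage-b", "kind": "storage", "public_access": False},
    ]


rules = {
    "storage must not allow public access": lambda r: (
        r.get("kind") == "storage" and r.get("public_access") is True
    ),
}
print(scan_environment(fake_inventory, rules))
```

Because the scan reads deployed state rather than templates, it also catches resources created through the GUI or ad hoc scripts. Running it on a schedule gives the continuous monitoring described above.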

Benefits of Agentless CSPM

Lower overhead: Because there are no agents, agentless solutions don’t introduce extra compute or memory usage on cloud resources, reducing operational complexity.

Higher coverage: These solutions can scan a higher number of infrastructures and services without being restricted by the limitations or the scope of agents.

Other features to look out for in CSPM are:

Automated remediation: Some tools go beyond just detection and offer automated or semi-automated remediation workflows, reducing manual toil for the teams.

Customization and scalability: No single solution can address all of an organization's needs. Therefore, selecting a platform that allows for custom policy creation to extend its functionality can be beneficial.
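These two features can be pictured together: a custom policy is a named predicate, and remediation is an optional action attached to it. The Policy type and enforce function below are hypothetical and operate on in-memory dictionaries. A real tool would call the cloud provider's API to apply the fix.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

Resource = dict[str, Any]


@dataclass
class Policy:
    """A custom policy: a predicate plus an optional automated fix."""
    name: str
    is_violation: Callable[[Resource], bool]
    remediate: Optional[Callable[[Resource], None]] = None


def enforce(policies: list[Policy], resources: list[Resource], auto_remediate: bool = False) -> list[str]:
    """Report violations, applying the fix when allowed and available."""
    report = []
    for resource in resources:
        for policy in policies:
            if not policy.is_violation(resource):
                continue
            if auto_remediate and policy.remediate is not None:
                policy.remediate(resource)
                report.append(f"{resource['id']}: {policy.name} (remediated)")
            else:
                report.append(f"{resource['id']}: {policy.name} (needs review)")
    return report


def disable_public_access(resource: Resource) -> None:
    # Stand-in for an API call that updates the deployed resource.
    resource["public_access"] = False


no_public_storage = Policy(
    name="storage must not allow public access",
    is_violation=lambda r: r.get("public_access") is True,
    remediate=disable_public_access,
)

inventory = [{"id": "storage-a", "public_access": True}]
print(enforce([no_public_storage], inventory, auto_remediate=True))
```

Leaving auto_remediate off by default reflects the semi-automated workflow: the tool reports the violation and a person decides whether to apply the fix.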

Conclusion

The increasing adoption of cloud services has expanded the threat surface for organizations. Now, more than ever, it is crucial to invest in safeguards that prevent insecure configurations in your infrastructure, protecting your customers and your organization from cybersecurity threats.

Written by

Siri Varma Vegiraju

Siri Varma Vegiraju is a seasoned expert in healthcare, cloud computing, and security. Currently, he focuses on securing Azure Cloud workloads, leveraging his extensive experience in distributed systems and real-time streaming solutions. Prior to his current role, Siri contributed significantly to cloud observability platforms and multi-cloud environments. He has demonstrated his expertise through notable achievements in various competitive events and as a judge and technical reviewer for leading publications. Siri frequently speaks at industry conferences on topics related to Cloud and Security and holds a Masters Degree from University of Texas, Arlington with a specialization in Computer Science.