MLOps Uncovered: A Complete Guide to Open Source Tools and Techniques for Scalable AI Workflows

Pronod Bharatiya

Table of contents

- Introduction

- 1. The Foundation of Machine Learning: A Deep Dive into Data Management in MLOps

- 2. Streamlining Model Versioning & Delivery with MLOps Excellence

- 3. Model Development, Experimentation and Monitoring

- The Canonical Data Science Stack: Evolving into a Unified MLOps Framework

- Summary

- Conclusion

Introduction

Machine Learning Operations (MLOps) has emerged as a critical foundation for the reliable, reproducible, and scalable deployment of machine learning systems. As artificial intelligence becomes deeply integrated into products, services, and strategic decision-making, the need for robust operational practices around ML has never been greater.

MLOps brings together principles from software engineering, data engineering, and DevOps to form a unified framework for managing the full lifecycle of machine learning models—from initial experimentation to deployment and ongoing monitoring.

(Image Credit: What is MLOps?)

In this comprehensive article, we explore the three fundamental landscapes that define MLOps:

Data Management

Model Versioning & Delivery

Model Development & Experimentation

Each section unpacks the core objectives and methodologies behind these areas, and highlights leading open-source tools and advanced capabilities that practitioners can apply in real-world scenarios.

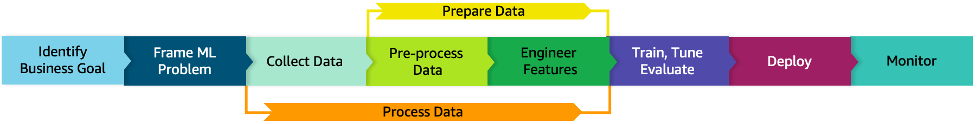

MLOps in Machine Learning Lifecycle

(Image Credit: What is MLOps?)

Whether you're building your first ML pipeline or scaling an enterprise-grade AI infrastructure, understanding these MLOps landscapes is essential for success in today’s data-driven world.

This article delves into the critical role of Machine Learning Operations (MLOps) in deploying reliable and scalable machine learning systems. It outlines the integral landscapes of MLOps, focusing on data management, model versioning and delivery, and model development and experimentation. The piece highlights the importance of data management, exploring its core components like data versioning and feature stores, and emphasizes model versioning practices crucial for production environments. Additionally, it examines model development and monitoring, showcasing open-source tools like MLflow, DVC, Pachyderm, and Kubernetes-native solutions for a seamless MLOps experience. The discussion extends to the Canonical Data Science Stack, proposing enhancements to transform it into a robust MLOps framework. Overall, the article serves as a comprehensive guide for integrating MLOps principles into modern AI workflows to ensure efficiency, scalability, and success.

1. The Foundation of Machine Learning: A Deep Dive into Data Management in MLOps

In the evolving world of machine learning (ML), data is more than just a starting point—it's the backbone of the entire model lifecycle. Without a strong approach to data management, even the most sophisticated algorithms can falter. That’s where MLOps (Machine Learning Operations) steps in, embedding best practices and infrastructure to ensure data is not just available, but accurate, traceable, and ready for production at scale.

Why Data Management Matters in MLOps

At its core, data management in MLOps is about ensuring that the right data is available at the right time in the right format. It involves the careful handling of data throughout its lifecycle—from ingestion and storage to transformation and feature engineering. A well-structured data management strategy enables teams to maintain consistency, ensure quality, and foster collaboration across ML workflows.

This practice supports several critical goals:

Consistency: Data used for training and inference must be computed the same way to prevent training-serving skew and ensure reliable performance.

Version Control: Historical data needs to be accessible to recreate models, debug issues, or audit decisions.

Scalability: As data volumes grow, systems must be capable of handling storage, processing, and retrieval efficiently.

Core Components of MLOps Data Management

Let’s explore the key pillars that make up a modern data management approach in MLOps:

1. Data Versioning

Data versioning is the practice of tracking and managing changes to datasets over time. Just like code versioning in software development, it ensures that ML teams can reproduce results, trace back model behavior, and maintain full transparency throughout the lifecycle. This becomes crucial when debugging models or meeting regulatory compliance requirements.

For example, being able to tie a production model’s prediction to a specific version of a dataset allows teams to investigate anomalies and retrain models with confidence.
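To make the idea concrete, here is a minimal sketch of tying a model to a dataset version through a content hash. It is not how DVC or any specific tool works internally; the `dataset_version` helper, the sample rows, and the `model_metadata` layout are all hypothetical and exist only for illustration.

```python
import hashlib
import json


def dataset_version(rows):
    """Return a short, deterministic content hash for a list of row dicts."""
    # Serialise with sorted keys so logically equal rows hash identically.
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


# Hypothetical data: two iterations of the same training set.
train_v1 = [{"customer_id": 1, "total_purchases": 3}]
train_v2 = train_v1 + [{"customer_id": 2, "total_purchases": 7}]

# Record which dataset version each (hypothetical) model was trained on.
model_metadata = {
    "model_a": {"dataset_version": dataset_version(train_v1)},
    "model_b": {"dataset_version": dataset_version(train_v2)},
}
print(model_metadata)
```

Because the hash depends only on content, any change to the data yields a new version identifier, while re-ordering the keys within a row does not.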

2. Feature Stores

Feature stores are centralized platforms for storing, managing, and serving ML features. They serve as the connective tissue between data engineering and model training, ensuring features are consistently computed and available in both offline (batch training) and online (real-time inference) environments.

Key benefits of feature stores include:

Reusability of features across different models or teams

Reduced duplication and error-prone feature engineering efforts

Real-time feature serving for low-latency model predictions
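The offline/online split described above can be sketched with a toy in-memory store. This is only an illustration under simplifying assumptions (records are ingested in time order, everything fits in memory); `TinyFeatureStore` and `total_purchases` are hypothetical names, not part of any feature store product.

```python
from dataclasses import dataclass, field


def total_purchases(orders):
    """Single feature definition shared by training and serving."""
    return sum(order["amount"] for order in orders)


@dataclass
class TinyFeatureStore:
    offline: dict = field(default_factory=dict)  # full history, for training
    online: dict = field(default_factory=dict)   # latest value, for inference

    def ingest(self, customer_id, orders, as_of):
        # Compute the feature once and write it to both stores.
        value = total_purchases(orders)
        self.offline.setdefault(customer_id, []).append((as_of, value))
        self.online[customer_id] = value

    def get_online(self, customer_id):
        return self.online[customer_id]

    def get_historical(self, customer_id, as_of):
        # Latest value recorded at or before `as_of` (assumes ordered ingestion).
        rows = [v for ts, v in self.offline[customer_id] if ts <= as_of]
        return rows[-1]


store = TinyFeatureStore()
store.ingest(123, [{"amount": 10.0}], as_of=1)
store.ingest(123, [{"amount": 10.0}, {"amount": 5.0}], as_of=2)
print(store.get_online(123), store.get_historical(123, as_of=1))
```

The point of the design is that both stores are fed by the same feature function, so training and inference cannot silently diverge.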

3. ETL Pipelines (Extract, Transform, Load)

ETL pipelines are automated workflows that extract raw data from various sources, transform it into meaningful features, and load it into storage systems or feature stores. These pipelines are essential for maintaining the freshness, quality, and performance of data used in ML applications.

In MLOps, ETL processes must be robust, auditable, and scalable. This includes handling schema changes, ensuring data quality, and monitoring pipeline performance.
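As a rough sketch of these requirements, the following standard-library example extracts rows from CSV text, rejects a schema change outright, counts rows dropped for quality reasons, and loads per-customer totals. The column names, the sample data, and the dictionary used as a sink are assumptions made for the example.

```python
import csv
import io

EXPECTED_COLUMNS = {"customer_id", "amount"}  # hypothetical schema

RAW_CSV = """customer_id,amount
1,10.5
2,
1,4.5
"""


def extract(text):
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Validate the schema, drop rows with missing amounts, aggregate per customer."""
    totals = {}
    rejected = 0
    for row in rows:
        if set(row) != EXPECTED_COLUMNS:
            raise ValueError(f"schema changed: {sorted(row)}")
        if not row["amount"]:
            rejected += 1  # counted so the pipeline run stays auditable
            continue
        key = int(row["customer_id"])
        totals[key] = totals.get(key, 0.0) + float(row["amount"])
    return totals, rejected


def load(totals, sink):
    sink.update(totals)


feature_table = {}
totals, rejected = transform(extract(RAW_CSV))
load(totals, feature_table)
print(feature_table, "rejected rows:", rejected)
```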

4. Data Lineage & Reproducibility

Data lineage refers to the ability to track the origin, transformations, and usage of data throughout its lifecycle. This is a cornerstone of reproducibility—an essential aspect of trustworthy AI. Lineage tools map out data flows and dependencies, offering visibility into how datasets are created and how changes ripple through the system.

With strong lineage tracking, organizations can confidently say which data fed into a model, how it was processed, and whether it meets compliance standards.
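One simple way to picture lineage is as a dependency graph that can be walked in both directions. The sketch below uses hypothetical artifact and step names and a plain dictionary; real lineage tools record far more metadata.

```python
# Each derived artifact records its direct inputs and the step that produced it.
lineage = {
    "raw_events": {"inputs": [], "step": "ingest"},
    "clean_events": {"inputs": ["raw_events"], "step": "deduplicate"},
    "features": {"inputs": ["clean_events"], "step": "aggregate"},
    "model_a": {"inputs": ["features"], "step": "train"},
}


def upstream(artifact, graph):
    """Return every artifact that `artifact` depends on, nearest first."""
    seen = []
    queue = list(graph[artifact]["inputs"])
    while queue:
        node = queue.pop(0)
        if node not in seen:
            seen.append(node)
            queue.extend(graph[node]["inputs"])
    return seen


def downstream(artifact, graph):
    """Return every artifact affected if `artifact` changes."""
    return [name for name in graph if artifact in upstream(name, graph)]


print(upstream("model_a", lineage))
print(downstream("raw_events", lineage))
```

`upstream` answers "which data fed into this model?", while `downstream` answers "what must be rebuilt if this dataset changes?".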

Data Management Is Strategic, Not Just Operational

Effective data management is no longer a back-office concern—it’s a strategic enabler for successful ML initiatives. In the context of MLOps, it underpins model reliability, accelerates experimentation, and supports governance and auditability. By investing in robust data versioning, feature stores, ETL pipelines, and lineage tracking, organizations set the stage for scalable and sustainable machine learning systems.

Key Open-Source Tools and Use Cases for Data Management in MLOps

Effective data management is the backbone of successful Machine Learning Operations (MLOps). As machine learning models rely heavily on high-quality, consistent, and reproducible data, choosing the right open-source tools is crucial for building scalable, maintainable ML pipelines. Here, we’ll explore some of the most impactful open-source tools in the MLOps landscape and their specific use cases, focusing on areas like data versioning, orchestration, feature management, and reproducibility.

1. DVC (Data Version Control)

DVC brings version control to datasets and machine learning models, offering Git-like functionality for large files and data workflows. It enables teams to track, version, and share datasets and ML experiments efficiently.

Key Features:

Seamless integration with Git for code-data synchronization

Lightweight .dvc files to represent the data state

Support for remote storage back-ends (S3, Azure Blob, Google Cloud Storage, and more)

Imagine you're training an ML model using a dataset that frequently evolves. DVC helps you version each dataset iteration, track data lineage, and link changes with your code base for full reproducibility.

Example:

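git init  # skip if the project is already a Git repository
dvc init
dvc add data/train.csv
git add data/train.csv.dvc data/.gitignore
git commit -m "Track training data with DVC"
dvc remote add -d myremote s3://mybucket/path
dvc push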

2. Pachyderm

Pachyderm is a powerful, Kubernetes-native platform focused on data versioning and lineage. It allows building scalable, reproducible pipelines that automatically trigger when data changes, ensuring every step in the process is traceable.

Key Features:

Git-like semantics for versioning data

Native support for parallel data processing

Full lineage tracking for data science workflows

Pachyderm is ideal for building data pipelines where reproducibility is critical, like in regulated industries or collaborative research environments. For example, if raw data is updated, downstream transformations and models can automatically re-run using Pachyderm pipelines.

Pipeline Configuration Example:

{

"pipeline": {"name": "preprocess"},

"transform": {"cmd": ["python3", "preprocess.py"], "image": "python:3.8"},

"input": {"pfs": {"repo": "raw_data", "branch": "master", "glob": "/"}}

}

3. Feast (Feature Store)

Feast is a feature store built for both real-time and batch ML pipelines. It centralizes feature storage and ensures consistency between training and inference, solving one of the most common pitfalls in production ML systems.

Key Features:

Central repository for storing and serving features

Supports both online (e.g., Redis) and offline (e.g., BigQuery, PostgreSQL) stores

Integrates easily with cloud providers like AWS, GCP, and Azure

Feast excels when multiple models need access to a common set of features, or when real-time predictions must be consistent with batch training features.

Example:

from feast import FeatureStore

store = FeatureStore(repo_path=".")

features = store.get_online_features(

features=["customer:total_purchases"],

entity_rows=[{"customer_id": 123}]

).to_dict()

4. Apache Airflow

Apache Airflow is an industry-standard orchestration tool used to programmatically author, schedule, and monitor workflows as Directed Acyclic Graphs (DAGs). Its Python-native nature makes it especially friendly for data engineering and ML teams.

Key Features:

DAG-based pipeline creation

Python-first design for maximum flexibility

Built-in web UI for visualization and logs

Commonly used to schedule and monitor data pipelines, such as nightly ETL jobs that prepare training data for ML models.

Example DAG:

from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator  # Airflow 2.x import path


def preprocess():
    print("Processing data")


# schedule_interval is the older argument name; newer Airflow releases use `schedule`.
dag = DAG('etl_pipeline', start_date=datetime(2023, 1, 1), schedule_interval='@daily')

process_task = PythonOperator(task_id='process', python_callable=preprocess, dag=dag)

5. Delta Lake

Delta Lake is an open-source storage layer that brings ACID transactions to big data workloads on Apache Spark. It ensures data reliability and consistency for large-scale data engineering and machine learning workflows.

Key Features:

Built on top of Apache Parquet

ACID compliance with support for schema evolution and enforcement

Time travel functionality to query past versions of data

Delta Lake is ideal for storing large-scale training data where version control, rollback capabilities, and schema integrity are essential.

Example:

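from delta.tables import DeltaTable

# Assumes `spark` is an existing SparkSession configured with Delta Lake.
deltaTable = DeltaTable.forPath(spark, "/delta/events")

# Increment eventCount for every row whose eventType is 'click'.
deltaTable.update(
    condition="eventType = 'click'",
    set={"eventCount": "eventCount + 1"},
)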

Additional Tools Enhancing the MLOps Data Pipeline

In addition to the primary tools above, several other open-source solutions contribute to robust data management by supporting validation, exploration, and versioning:

Validation Tools

Processing frameworks such as Apache Hadoop and Apache Spark can run automated data quality checks at scale. Jobs built on them identify duplicates, handle missing values, and enforce consistency, all of which is critical for production-grade ML workflows.

Exploration Tools

Jupyter Notebooks remain the go-to solution for interactive data exploration. They allow teams to visualize data, document insights, and iterate on models in a shareable, executable format.

Versioning Tools

Beyond DVC and Pachyderm, tools such as Docling, which converts documents into structured formats, are relevant to NLP pipelines, where document-level changes can significantly affect downstream models and therefore need to be tracked.

Data management is at the core of any successful MLOps strategy. The tools discussed above provide a foundation for building scalable, reproducible, and automated data workflows. Whether you're handling structured tabular data or complex unstructured documents, these open-source solutions can help streamline your pipeline from experimentation to deployment.

2. Streamlining Model Versioning & Delivery with MLOps Excellence

Managing machine learning models in production is crucial for the success of any AI project. In today’s fast-evolving machine learning (ML) landscape, production environments rarely operate with a single static model. Instead, they often host multiple model versions, each potentially optimized for different datasets, use cases, or performance metrics. Effectively managing these versions is critical to maintaining a robust, scalable, and traceable ML workflow.

The primary goal of model management in production is to ensure traceability, rollback capability, and reproducibility. Traceability allows teams to understand which model version was used for a specific prediction. Rollback capability ensures that if a new model under-performs or fails, a previously validated version can be reinstated without disruption. Reproducibility, on the other hand, supports consistent results across teams, environments, and time.

Beyond management, the delivery of models plays an equally important role. Efficient delivery processes automate the promotion of models across different stages—testing, staging, and production—enabling faster iteration cycles while minimizing human error.

To streamline this process, several foundational concepts come into play:

Model Registry

A model registry serves as a centralized system to store, version, and access trained models. It acts as the single source of truth, ensuring that models can be easily retrieved, compared, and deployed across teams and environments.

Versioning

Model versioning is essential for identifying, tracking, and managing the lifecycle of each model iteration. It allows data scientists and ML engineers to maintain a clear history of changes, enabling performance comparisons, reproducibility, and informed decision-making during deployment.
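The registry, versioning, and rollback ideas can be sketched together in a few lines. This is a toy in-memory illustration, not the API of MLflow or any other registry; the class, the model name, and the `s3://example-bucket/...` URIs are hypothetical.

```python
class ModelRegistry:
    """In-memory registry: versions are append-only, one version is in production."""

    def __init__(self):
        self._versions = {}    # name -> list of metadata dicts
        self._production = {}  # name -> history of promoted version numbers

    def register(self, name, artifact_uri, metrics):
        versions = self._versions.setdefault(name, [])
        version = len(versions) + 1
        versions.append({"version": version, "uri": artifact_uri, "metrics": metrics})
        return version

    def promote(self, name, version):
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise KeyError(f"{name} has no version {version}")
        self._production.setdefault(name, []).append(version)

    def rollback(self, name):
        history = self._production[name]
        if len(history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        history.pop()
        return history[-1]

    def production_version(self, name):
        return self._production[name][-1]


registry = ModelRegistry()
v1 = registry.register("churn", "s3://example-bucket/churn/1", {"auc": 0.81})
v2 = registry.register("churn", "s3://example-bucket/churn/2", {"auc": 0.84})
registry.promote("churn", v1)
registry.promote("churn", v2)
restored = registry.rollback("churn")  # v2 misbehaves, so reinstate v1
print(restored, registry.production_version("churn"))
```

Keeping the promotion history, rather than a single "current" pointer, is what makes rollback a cheap metadata operation.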

Validation

Before a model is deployed, it must pass through rigorous automatic validation steps. These include performance evaluation on test datasets, bias detection, and integration testing within the target system. Automated validation ensures only high-quality, reliable models reach production.
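An automated validation step can be as simple as a function that compares an evaluation report against thresholds and the current production model. The report layout, the threshold values, and the per-group accuracy check below are assumptions chosen for illustration, not a standard.

```python
def validation_gate(candidate, baseline, min_accuracy=0.80, max_group_gap=0.05):
    """Return (passed, reasons) for a candidate model's evaluation report."""
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append("accuracy below absolute threshold")
    if candidate["accuracy"] < baseline["accuracy"]:
        reasons.append("accuracy regressed against current production model")
    group_scores = candidate["accuracy_by_group"].values()
    if max(group_scores) - min(group_scores) > max_group_gap:
        reasons.append("accuracy gap between groups exceeds limit")
    return (not reasons, reasons)


# Hypothetical evaluation reports.
production = {"accuracy": 0.84}
good = {"accuracy": 0.86, "accuracy_by_group": {"a": 0.86, "b": 0.85}}
bad = {"accuracy": 0.78, "accuracy_by_group": {"a": 0.90, "b": 0.70}}

print(validation_gate(good, production))
print(validation_gate(bad, production))
```

Returning the reasons alongside the verdict keeps the gate auditable when a CI/CD pipeline blocks a release.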

CI/CD for Machine Learning

Borrowed from software engineering, Continuous Integration and Continuous Delivery (CI/CD) pipelines automate the process of building, testing, and deploying models. In an ML context, CI/CD pipelines handle everything from retraining and validation to deployment, ensuring that updates are seamless and consistent across environments.

By implementing these core principles, organizations can build scalable ML systems that are not only performant but also maintainable and resilient in real-world production settings. The next section looks at the main open-source tools currently available for this purpose.

Open-Source Tools for Machine Learning Model Versioning and Delivery

As machine learning (ML) solutions mature from experimental notebooks to robust, production-grade systems, the demand for scalable, maintainable, and transparent ML workflows grows significantly. A wide array of open-source tools has emerged to streamline this journey, from experiment tracking and model versioning to scalable serving and orchestration. In this post, we explore some of the most impactful tools, their key features, and real-world use cases that showcase their utility in modern ML pipelines.

1. MLflow: Lifecycle Management for ML Models

MLflow is a widely adopted open-source platform for managing the complete machine learning lifecycle. It offers modules for experiment tracking, model packaging, and deployment, making it an ideal starting point for teams aiming to maintain reproducibility and auditability.

Specifications:

Experiment Tracking: Log hyperparameters, metrics, and artifacts.

Model Registry: Manage lifecycle stages—Staging, Production, Archived.

Deployment Tools: REST API and CLI support for quick integrations.

It is mainly used to track multiple experiments with different hyperparameters and deploy the most performant model to production.

Code Example:

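import mlflow
import mlflow.sklearn

# Assumes `model` is an already-fitted scikit-learn estimator.
with mlflow.start_run():
    mlflow.log_param("alpha", 0.01)
    mlflow.log_metric("rmse", 1.23)
    mlflow.sklearn.log_model(model, "model")

# Replace <run_id> with the ID of the run that logged the model.
mlflow.register_model("runs:/<run_id>/model", "ModelA")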

2. MLflow Serving: Simple Model Serving

Once a model is logged and registered, MLflow Serving allows you to expose it as a REST endpoint with minimal configuration.

A typical use case is serving a registered model locally or in the cloud to enable real-time predictions.

Command-Line Example:

mlflow models serve -m runs:/<run_id>/model -p 5000

3. Seldon Core: Kubernetes-Native Model Deployment

Seldon Core is built specifically for deploying ML models in Kubernetes environments. It supports advanced deployment strategies like A/B testing and canary rollouts while also offering integrated explainability.

Specifications:

Protocol Support: REST and gRPC APIs

Explainability: Integration with Alibi

Advanced Deployments: Traffic splitting, version control, and drift detection

Seldon Core deploys multiple versions of a model with traffic routing and monitors for data drift.

YAML Example:

apiVersion: machinelearning.seldon.io/v1
kind: SeldonDeployment
metadata:
  name: iris-classifier
spec:
  predictors:
    - graph:
        name: classifier
        implementation: SKLEARN_SERVER
        modelUri: gs://mybucket/model
      name: default
      replicas: 2
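The traffic splitting behind canary rollouts and A/B tests can be illustrated independently of Seldon Core. The sketch below is not Seldon's routing logic; it simply shows one common approach, hashing a request ID into a bucket so that the same request always reaches the same version. The version names and the 90/10 split are hypothetical.

```python
import hashlib


def route(request_id, weights):
    """Pick a model version for a request, deterministically by request ID.

    `weights` maps version name -> percentage of traffic; values must sum to 100.
    """
    if sum(weights.values()) != 100:
        raise ValueError("traffic weights must sum to 100")
    # Hash the ID into a stable bucket in [0, 100).
    digest = hashlib.sha256(str(request_id).encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    threshold = 0
    for version, weight in weights.items():
        threshold += weight
        if bucket < threshold:
            return version


canary_weights = {"v1": 90, "v2": 10}  # hypothetical 90/10 canary split
counts = {"v1": 0, "v2": 0}
for request_id in range(10_000):
    counts[route(request_id, canary_weights)] += 1
print(counts)
```

Deterministic routing keeps a given caller on one version for the duration of the experiment, which makes the comparison between versions easier to interpret.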

4. Triton Inference Server: Optimized GPU Inference

Developed by NVIDIA, Triton Inference Server is designed for high-performance inference, supporting multiple frameworks like TensorRT, PyTorch, ONNX, and TensorFlow.

Key Features:

Multi-framework Support

Batching and Scheduling

Metrics for Performance Monitoring

Triton Inference Server serves multiple deep learning models with low latency on GPU-accelerated hardware.

Docker Example:

docker run --rm -p8000:8000 -v/path/to/models:/models nvcr.io/nvidia/tritonserver:21.08-py3 tritonserver --model-repository=/models

5. DAGsHub: Version Control for ML Collaboration

DAGsHub brings the best of Git, DVC (Data Version Control), and MLflow into one collaborative platform for machine learning projects.

Specifications:

Git-Based Workflow

Native Integration: MLflow and DVC are supported out of the box

Web UI for Collaboration and Reviews

DAGsHub enables cross-functional teams to track datasets, code, and models in one place for efficient collaboration.

6. Kubeflow: End-to-End ML Orchestration on Kubernetes

Kubeflow is an end-to-end platform for building, training, and deploying scalable ML workflows on Kubernetes. It integrates tightly with tools like TensorFlow, PyTorch, and Katib (for hyperparameter tuning).

Features:

Pipelines for reproducible workflows

Built-in notebook servers

Centralized experiment tracking

Kubeflow automates and orchestrates training, tuning, and deployment pipelines in a Kubernetes environment.

7. Metaflow: Human-Centric ML Workflow Management

Developed by Netflix, Metaflow focuses on enabling data scientists to build and manage real-world data science projects with ease, without needing deep DevOps expertise.

Highlights:

Python-native API

Versioned data and models

AWS-native integrations

Metaflow helps prototype ML workflows locally and scale them to the cloud with minimal refactoring.

8. Flyte: Scalable, Type-Safe Workflow Orchestration

Flyte is a cloud-native platform for orchestrating ML and data workflows. It emphasizes reproducibility and type safety, making it ideal for production-ready pipelines.

Core Features:

Declarative workflows using Python

Automatic versioning and caching

Kubernetes-based execution

Flyte executes ML workflows in a reproducible, scalable way with strong typing and scheduling features.

Comparison Table for Open-Source Tools for Model Versioning and Delivery

| Tool | Primary Function | Platform Support | Deployment Complexity | Kubernetes Native | Model Serving | Version Control | Best Suited For |
| --- | --- | --- | --- | --- | --- | --- | --- |
| MLflow | Experiment tracking, model registry, serving | On-premise, Cloud, Hybrid | Low | No | Basic REST/CLI | Integrated via DAGsHub | Lightweight ML lifecycle management |
| MLflow Serving | Model serving via REST API | On-premise, Cloud | Low | No | Yes (REST API) | N/A | Quick, local model serving |
| Seldon Core | Model serving on Kubernetes | Cloud, Hybrid | Medium | Yes | Advanced (REST, gRPC, A/B testing) | External (Git, DVC) | Enterprise-grade model serving |
| Triton Server | GPU-accelerated model serving | On-premise, Cloud | Medium | No (compatible) | High-performance (multi-framework) | External | High-throughput inference on GPUs |
| DAGsHub | Version control and collaboration | Cloud | Low | No | No | Yes (Git, DVC, MLflow) | Collaborative ML development |
| Kubeflow | End-to-end ML pipeline orchestration | Cloud, Hybrid | High | Yes | Yes (via KFServing/Seldon) | External | Complete ML workflow automation |
| Metaflow | Workflow orchestration and tracking | Cloud, On-premise | Medium | Optional | No | Yes (via S3/versioned data) | Data scientist-friendly workflows |
| Flyte | Workflow orchestration with type safety | Cloud, Hybrid | High | Yes | No (external integration) | Yes (containers and caching) | Scalable, production-ready workflows |

Diagrammatic Representation for the Open-Source Tools

(Image Credit: Generated with ChatGPT)

Choosing the right open-source tools for your ML stack depends heavily on your team’s goals, infrastructure maturity, and deployment strategy. While MLflow and Metaflow offer accessible entry points for experiment management, tools like Seldon, Triton, and Flyte shine in robust, cloud-native production environments. By thoughtfully combining these tools, teams can build ML pipelines that are not only scalable and efficient but also maintainable and collaborative.

3. Model Development, Experimentation and Monitoring

The Model Development, Experimentation, and Monitoring phase is at the heart of any successful machine learning lifecycle. It involves transforming raw ideas into high-performing, production-ready models through disciplined experimentation, rigorous evaluation, and scalable infrastructure. This phase is not just about coding models—it's about creating a robust ecosystem that supports continuous improvement, collaboration, and traceability.

Building for Performance and Reproducibility

To ensure models are both effective and maintainable, this stage integrates principles of software engineering, data science, and systems design. By embracing structured experimentation and reproducibility, teams can test hypotheses systematically, avoid common pitfalls, and accelerate model iteration cycles. Moreover, scalable training solutions ensure that models can handle growing data volumes and computational demands. Let's discuss the core concepts involved in this phase.

1. Experiment Management

Experiment management involves tracking the parameters, datasets, code versions, and outcomes of each model training run. Without it, comparing model performance over time or debugging unexpected results becomes chaotic. Tools such as MLflow, Weights & Biases, and Neptune.ai help teams log, visualize, and compare experiments, fostering transparency and collaboration across data science teams.
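Stripped of the UI and storage layers, experiment tracking comes down to recording a structured entry per run and querying those entries later. The sketch below is a toy stand-in for that idea, not the API of MLflow, Weights & Biases, or Neptune.ai; the `RunLog` class and the logged values are hypothetical.

```python
import json
import time
import uuid


class RunLog:
    """Append-only experiment log kept in memory as JSON lines."""

    def __init__(self):
        self.lines = []

    def log_run(self, params, metrics, code_version, dataset_version):
        record = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": params,
            "metrics": metrics,
            "code_version": code_version,
            "dataset_version": dataset_version,
        }
        self.lines.append(json.dumps(record, sort_keys=True))
        return record["run_id"]

    def best(self, metric, higher_is_better=True):
        runs = [json.loads(line) for line in self.lines]
        pick = max if higher_is_better else min
        return pick(runs, key=lambda run: run["metrics"][metric])


log = RunLog()
log.log_run({"alpha": 0.01}, {"rmse": 1.23}, code_version="abc123", dataset_version="v1")
log.log_run({"alpha": 0.1}, {"rmse": 1.10}, code_version="abc123", dataset_version="v1")
best_run = log.best("rmse", higher_is_better=False)
print(best_run["params"], best_run["metrics"])
```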

2. Hyperparameter Optimization

Hyperparameters can significantly influence a model’s accuracy and generalization capabilities. Rather than relying on manual tuning, this process can be automated using techniques like grid search, random search, Bayesian optimization, or evolutionary algorithms. Efficient hyperparameter tuning is essential for achieving optimal performance, especially in deep learning models with complex architectures.
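Random search, the simplest of the automated techniques listed above, fits in a few lines. In this sketch `validation_loss` is a toy stand-in for training and evaluating a real model, and the search range and trial count are arbitrary assumptions; learning rates are sampled on a log scale because plausible values span several orders of magnitude.

```python
import math
import random


def sample_log_uniform(rng, low, high):
    """Draw a value whose logarithm is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def validation_loss(lr):
    # Toy stand-in for "train a model and evaluate it": minimised at lr = 1e-3.
    return (math.log10(lr) + 3.0) ** 2


def random_search(n_trials, seed=0):
    rng = random.Random(seed)
    best_lr, best_loss = None, float("inf")
    for _ in range(n_trials):
        lr = sample_log_uniform(rng, 1e-5, 1e-1)
        loss = validation_loss(lr)
        if loss < best_loss:
            best_lr, best_loss = lr, loss
    return best_lr, best_loss


best_lr, best_loss = random_search(n_trials=200)
print(f"best lr={best_lr:.2e}, loss={best_loss:.4f}")
```

Bayesian and evolutionary methods replace the independent sampling step with one that uses earlier results to decide where to look next.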

3. Model Debugging & Explainability

As models grow in complexity, understanding their decisions becomes critical. Model debugging helps identify training issues such as data leakage, overfitting, or vanishing gradients. Meanwhile, explainability tools like SHAP, LIME, or integrated gradients offer insights into model predictions, ensuring that stakeholders can trust and interpret the results, especially in regulated industries like healthcare or finance.
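To show what a feature attribution actually computes, the sketch below evaluates exact Shapley values, the quantity that SHAP approximates, for a tiny hypothetical model. It is not the `shap` library: it enumerates every feature coalition, which is only feasible for a handful of features, and it assumes that "missing" features are replaced by a baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for `predict` at `x`, relative to `baseline`.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                gain = value(set(subset) | {i}) - value(set(subset))
                phi[i] += weight * gain
    return phi


def toy_model(features):
    # Hypothetical model with an interaction between features 1 and 2.
    return 2.0 * features[0] + features[1] * features[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The attributions sum to the difference between the prediction at `x` and the prediction at the baseline, and the interaction term is shared equally between the two features that produce it.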

4. Distributed and Scalable Training

Modern ML workloads often exceed the capacity of a single machine. Distributed training techniques enable teams to leverage multiple GPUs or even entire clusters to accelerate training times. Frameworks like TensorFlow, PyTorch with Horovod, and Ray Train make it easier to scale training workflows efficiently, supporting massive datasets and complex models without compromising speed or accuracy.
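The core of data-parallel training is that each worker computes a gradient on its own shard and the gradients are then averaged across workers. The single-process simulation below illustrates that step for a one-parameter model; the data, learning rate, and four "workers" are hypothetical, and a real framework would run the per-shard work in parallel and use a collective all-reduce operation.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the 1-D model y = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(values):
    """Stand-in for the all-reduce step: every worker receives the mean."""
    return sum(values) / len(values)


# Hypothetical data generated from y = 3x, split evenly across 4 "workers".
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i::4] for i in range(4)]

w = 0.0
for step in range(200):
    local_grads = [gradient(w, shard) for shard in shards]  # parallel in practice
    w -= 0.01 * all_reduce_mean(local_grads)
print(w)
```

With equally sized shards, the averaged gradient equals the full-batch gradient, so the distributed run follows the same trajectory as single-machine training.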

Thus, effective model development is not a one-off effort but a continuous, iterative process. By embedding best practices around experimentation, tuning, debugging, and scalability, organizations can ensure that their machine learning models are not only high-performing but also resilient, explainable, and ready for production deployment.

ML Lifecycle with data processing

(Image Credit: AWS Well-Architected Framework)

ML lifecycle with detailed phases and expanded components

(Image Credit: AWS Well-Architected Framework)

Open-Source Tools for Machine Learning Development, Experimentation, and Monitoring

The modern machine learning (ML) lifecycle—from model development to deployment and monitoring—is supported by a growing ecosystem of powerful open-source tools. Whether you're an ML engineer, data scientist, or MLOps practitioner, selecting the right tools can greatly enhance productivity, experimentation efficiency, reproducibility, and operational robustness.

In the section below, we discuss the most impactful open-source tools, categorized by their core use cases: development, hyperparameter tuning, orchestration, monitoring, and explainability.

1. MLflow: Lifecycle Management and Experiment Tracking

MLflow is a popular open-source platform for managing the complete ML lifecycle. Its Tracking component enables reproducible experiments by logging metrics, parameters, artifacts, and code versions.

Key Features:

Log and visualize experiments across multiple runs.

Compare model performance interactively via the MLflow UI.

Integrate with any ML library (e.g., Scikit-learn, TensorFlow, PyTorch).

It is mainly used to centralize experiment results for large teams or long-running training cycles.

2. Optuna: Hyperparameter Optimization Framework

Optuna is a lightweight yet powerful hyperparameter optimization library. Its default sampler, the Tree-structured Parzen Estimator (TPE), uses the results of earlier trials to explore the parameter space efficiently.

Highlights:

Pruning of unpromising trials to save compute.

Interactive visualizations (e.g., parallel coordinates, optimization history).

Supports both single-node and distributed execution.

Example:

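import optuna


def objective(trial):
    # suggest_float(..., log=True) replaces the deprecated suggest_loguniform.
    lr = trial.suggest_float('lr', 1e-5, 1e-1, log=True)
    model = SomeModel(lr=lr)  # SomeModel is a placeholder for your own model class
    acc = model.train_and_validate()
    return acc


study = optuna.create_study(direction='maximize')
study.optimize(objective, n_trials=100)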

It is mainly used to automate the tuning of model parameters for better accuracy with minimal manual intervention.

3. Ray Tune: Scalable Hyperparameter Tuning

Ray Tune brings distributed hyperparameter tuning to large-scale machine learning. It integrates well with deep learning frameworks and supports multiple search strategies like HyperOpt and ASHA (Asynchronous Successive Halving Algorithm).

Key Capabilities:

Distributed execution across CPUs and GPUs.

Native support for early stopping.

Fault-tolerant execution.

Example:

from ray import tune
from ray.tune.schedulers import ASHAScheduler


def train_model(config):
    acc = some_train(config)  # some_train is a placeholder for your training routine
    # Keyword-style reporting as in older Ray releases; newer ones take a metrics dict.
    tune.report(mean_accuracy=acc)


scheduler = ASHAScheduler(metric="mean_accuracy", mode="max")
tune.run(train_model, config={"lr": tune.loguniform(1e-4, 1e-1)}, scheduler=scheduler)

It is used for efficient tuning in cloud or high-performance computing environments.
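The early-stopping idea behind ASHA can be seen in its simpler, synchronous relative, successive halving: evaluate all configurations on a small budget, keep the best fraction, and repeat with a larger budget. The sketch below implements that synchronous variant, not ASHA itself and not Ray Tune's scheduler; `toy_accuracy` is a hypothetical learning curve standing in for real training.

```python
import math
import random


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Keep the top 1/eta of configs each round, multiplying the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        scored = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = scored[: max(1, len(scored) // eta)]
        budget *= eta
    return survivors[0]


def toy_accuracy(config, budget):
    # Hypothetical learning curve: approaches a ceiling set by the learning rate.
    ceiling = 1.0 - abs(math.log10(config["lr"]) + 2.0) / 4.0
    return ceiling * (1.0 - 0.5 ** budget)


rng = random.Random(0)
candidates = [{"lr": 10 ** rng.uniform(-4, -1)} for _ in range(16)]
best = successive_halving(candidates, toy_accuracy)
print(best)
```

Most of the compute goes to the few configurations that survive the early rounds, which is where the savings over a fixed-budget search come from.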

4. Weights & Biases (WandB): Experiment Tracking and Visualization

Weights & Biases offers a comprehensive suite for experiment tracking, model monitoring, and team collaboration.

Core Features:

Real-time logging of metrics, predictions, and system performance.

Interactive dashboards to monitor experiment runs.

Collaboration features for sharing results with teammates.

Example:

import wandb

wandb.init(project="my_project")

wandb.log({"accuracy": 0.92, "loss": 0.3})

It is used for scalable tracking and visualization for ML experiments in team-based settings.

5. Horovod: Distributed Deep Learning Framework

Horovod simplifies distributed training of deep learning models across GPUs and nodes. Developed by Uber, it supports TensorFlow, PyTorch, and MXNet.

Advanced Capabilities:

Elastic training: dynamically scale workers during training.

Optimized for NVIDIA GPUs using NCCL back-end.

MPI and Gloo back-end support.

Usage Example:

horovodrun -np 8 -H localhost:8 python train.py

It is used to accelerate training for large models by parallelizing across multiple GPUs or machines.

6. Kubeflow Pipelines: ML Workflow Orchestration on Kubernetes

Kubeflow Pipelines is an orchestration platform designed for deploying reproducible and portable ML workflows on Kubernetes.

Powerful Features:

Define complex pipelines with Python SDK.

Support for CI/CD workflows and recurring training jobs.

Seamless versioning and tracking of pipeline runs.

Example:

import kfp

client = kfp.Client()

client.create_run_from_pipeline_func(my_pipeline, arguments={...})

It is used to orchestrate and automate ML workflows in a Kubernetes-native environment.

7. ZenML: Reproducible ML Pipelines

ZenML is a pipeline-centric MLOps framework that emphasizes reproducibility, scalability, and flexibility across various stacks (e.g., cloud providers, orchestration engines).

Why Use ZenML:

Modular and extensible pipelines.

First-class support for experiment tracking and artifact management.

Integration with tools like MLflow, Kubeflow, and Seldon.

It is used to standardize and version every step of your ML development process.

8. Seldon: Model Deployment at Scale

Seldon Core provides tools to deploy, scale, and monitor ML models in Kubernetes environments.

Highlights:

Supports multiple ML runtimes (e.g., SKLearn, XGBoost, TensorFlow).

Traffic splitting, A/B testing, and canary deployments.

Native explainability and monitoring via Alibi and Prometheus.

It is used to deploy models as microservices with advanced routing and monitoring.

9. BentoML: Model Packaging and Serving

BentoML simplifies the packaging of trained models for production-ready APIs.

Features:

Define models as services with FastAPI or Flask.

Containerization and Docker integration.

Deploy to AWS Lambda, Kubernetes, or REST endpoints.

It is used for a seamless transition from model development to REST API deployment.

10. MLRun: End-to-End MLOps Platform

MLRun is a serverless framework that abstracts infrastructure for running ML workloads.

Capabilities:

Automated feature store integration.

Native support for data versioning and pipeline orchestration.

Compatible with multiple back-ends (Kubernetes, local, cloud).

It is used for scalable MLOps with less boilerplate and more automation.

11. Prometheus & Grafana: Monitoring Infrastructure and Models

Prometheus (metrics collection) and Grafana (visualization) are powerful open-source tools to monitor ML systems and infrastructure.

Together They Offer:

Real-time metrics collection from services and models.

Custom dashboards and alerting mechanisms.

Integration with Kubernetes and model inference endpoints.

It is used for production-grade monitoring of ML services and system performance.
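Prometheus collects metrics by scraping a plain-text page that each service exposes. The sketch below renders that text format by hand to show what a model endpoint would publish. The metric names, labels, and values are invented for illustration, `render_metrics` is a hypothetical helper, and a real service would normally rely on a Prometheus client library instead.

```python
from typing import Dict, List, Tuple

# (name, type, help text, [(labels, value), ...])
Metric = Tuple[str, str, str, List[Tuple[Dict[str, str], float]]]


def render_metrics(metrics: List[Metric]) -> str:
    """Render metrics in the Prometheus text exposition format.

    Label values are assumed to need no escaping (no quotes,
    backslashes, or newlines), which keeps the sketch short.
    """
    lines = []
    for name, mtype, help_text, samples in metrics:
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {mtype}")
        for labels, value in samples:
            label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
            suffix = f"{{{label_str}}}" if label_str else ""
            lines.append(f"{name}{suffix} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metrics for a model-serving process.
METRICS: List[Metric] = [
    ("model_predictions_total", "counter", "Predictions served.",
     [({"model": "churn", "version": "v2"}, 1027.0)]),
    ("model_latency_seconds", "gauge", "Latency of the last prediction.",
     [({}, 0.042)]),
]

text = render_metrics(METRICS)
```

Grafana then queries Prometheus for series such as `model_predictions_total` and plots them on a dashboard.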

12. Neptune.ai: Experiment Management and Collaboration

Neptune.ai is an experiment tracking tool focused on metadata management and collaboration.

Key Advantages:

Organize and compare thousands of runs.

Query experiments using a flexible UI or API.

Works well with Jupyter notebooks and CI/CD pipelines.

It is used to track and share experimental results across distributed teams.

13. Explainability Tools: SHAP, LIME, Integrated Gradients

Model interpretability is crucial, especially in high-stakes domains. Several open-source tools make black-box models more transparent:

SHAP (SHapley Additive exPlanations): Provides consistent feature attributions.

LIME (Local Interpretable Model-agnostic Explanations): Explains predictions by approximating models locally.

Integrated Gradients: Attribution method for deep learning models based on gradients.

It is used to build trust with stakeholders and comply with regulatory requirements in sensitive applications (e.g., healthcare, finance).
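The Shapley value that SHAP builds on can be computed exactly for a tiny model, which makes the definition easier to follow. This is a brute-force sketch and not the SHAP library's API: `shapley_values` is a hypothetical helper, "missing" features are replaced by a baseline value (one common convention, assumed here), and the cost grows exponentially with the number of features, which is why practical tools rely on approximations and model-specific algorithms.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attributions; features outside a coalition use the baseline."""
    n = len(x)

    def value(coalition: frozenset) -> float:
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def toy_model(v: Sequence[float]) -> float:
    # Two linear terms plus one interaction between features 0 and 2.
    return 2.0 * v[0] - 1.0 * v[1] + 0.5 * v[0] * v[2]


x = [1.0, 3.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

For this toy model the attributions come out as 3, -3, and 1: each linear term goes to its own feature, and the interaction term (worth 2 here) is split evenly between features 0 and 2. The attributions sum to the difference between the prediction and the baseline prediction.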

Comparison table for Open-Source Tools for Machine Learning Development, Experimentation, and Monitoring

| Tool Name | Deployment Complexity | Model Serving Capabilities | Version Control | Best Suited For | Platform Compatibility | Notes |
| --- | --- | --- | --- | --- | --- | --- |
| MLflow | Medium | Yes | Yes | Experiment tracking, model registry | On-premise, Cloud, Hybrid | Supports deployment to various targets including local, AWS SageMaker, Azure ML, and Kubernetes. |
| Optuna | Low | No | Limited | Hyperparameter optimization | On-premise, Cloud | Integrates with various ML frameworks; supports pruning and visualization. |
| Ray Tune | Medium | No | Limited | Distributed hyperparameter tuning | On-premise, Cloud | Scales tuning across multiple nodes; integrates with Ray ecosystem. |
| Weights & Biases | Low | No | Yes | Experiment tracking, collaboration | SaaS, On-premise | Offers SaaS, dedicated cloud, and customer-managed deployments. |
| Horovod | High | No | No | Distributed deep learning training | On-premise, Cloud | Optimized for TensorFlow, Keras, PyTorch, and MXNet; uses MPI for communication. |
| Kubeflow Pipelines | High | Via KServe (formerly KFServing) | Yes | ML workflow orchestration | On-premise, Cloud | Requires Kubernetes; integrates with components like KServe and Katib. |
| ZenML | Medium | Via integrations | Yes | Reproducible ML pipelines | On-premise, Cloud | Supports multiple orchestrators; integrates with MLflow, Seldon, and others. |
| Seldon Core | Medium | Yes | Limited | Model deployment on Kubernetes | On-premise, Cloud | Provides advanced deployment patterns like A/B testing and canary releases. |
| BentoML | Low | Yes | Yes | Packaging and deploying ML models | On-premise, Cloud | Creates Docker containers for models; supports various ML frameworks. |
| MLRun | High | Yes | Yes | End-to-end MLOps orchestration | On-premise, Cloud | Built on Kubernetes; integrates with Nuclio and other tools. |
| Prometheus | Medium | No | No | Monitoring metrics | On-premise, Cloud | Collects metrics; often used with Grafana for visualization. |
| Grafana | Low | No | No | Visualizing metrics and logs | On-premise, Cloud | Dashboards for various data sources; integrates with Prometheus. |
| Neptune.ai | Low | No | Yes | Experiment tracking and model registry | SaaS, On-premise | Offers both SaaS and self-hosted options; integrates with various ML frameworks. |
| SHAP | Low | No | No | Model interpretability | On-premise, Cloud | Provides Shapley value-based explanations; supports various model types. |
| LIME | Low | No | No | Local model explanations | On-premise, Cloud | Offers local surrogate models for interpretability. |
| Integrated Gradients | Low | No | No | Deep learning model explanations | On-premise, Cloud | Attribution method for neural networks; requires access to model gradients. |

Visual Architecture Diagram: Integrating Tools in a Modern MLOps Pipeline

Below is a conceptual architecture diagram illustrating how these tools can be integrated into a modern MLOps pipeline:

+--------------------------+   +--------------------------+   +--------------------------+
| Data Ingestion &         |   | Experimentation          |   | Model Training           |
| Preprocessing            |   | & Tracking               |   | & Optimization           |
| (e.g., Spark, Dask,      |   | (MLflow, W&B,            |   | (Horovod, Ray Tune,      |
| Kafka)                   |   | Neptune.ai)              |   | Optuna)                  |
+--------------------------+   +--------------------------+   +--------------------------+
             |                              |                              |
             v                              v                              v
+--------------------------+   +--------------------------+   +--------------------------+
| Pipeline Orchestration   |   | Model Deployment         |   | Monitoring &             |
| (Kubeflow, ZenML, MLRun) |   | & Serving                |   | Observability            |
|                          |   | (Seldon, BentoML)        |   | (Prometheus, Grafana)    |
+--------------------------+   +--------------------------+   +--------------------------+
             |                              |                              |
             v                              v                              v
+----------------------------------------------------------------------------------------+
|                                  Model Explainability                                  |
|                           (SHAP, LIME, Integrated Gradients)                           |
+----------------------------------------------------------------------------------------+

The open-source ML ecosystem is thriving and offers tools for every stage of the machine learning lifecycle. Whether you're optimizing hyperparameters, orchestrating training pipelines, or ensuring explainability, there's a tool tailored for the job. By combining the right stack, for example MLflow for tracking, Seldon for serving, and Prometheus for monitoring, you can build scalable, efficient, and trustworthy ML systems that hold up in both research and production environments.

The Canonical Data Science Stack: Evolving into a Unified MLOps Framework

Machine learning (ML) projects often begin in a Jupyter Notebook and end in a disjointed maze of scripts, tools, and manual processes. While this may suffice for experimentation, it rarely scales to production without significant re-engineering. Yet, a common set of tools—what Canonical refers to as the Canonical Data Science Stack—continues to serve as the foundation for many ML workflows.

With the right integrations and extensions, this familiar stack can do more than support model development—it can become the bedrock of a robust MLOps framework.

Here, we’ll explore the Canonical Data Science Stack’s core components, benefits, limitations, and how it can evolve to meet modern MLOps demands.

What Is the Canonical Data Science Stack?

According to Canonical’s official announcement, the Canonical Data Science Stack is a curated, containerized collection of best-in-class, open-source tools for end-to-end machine learning workflows. Built on Ubuntu and integrated with tools like Jupyter, MLflow, and TensorFlow, it supports everything from data preprocessing to model deployment.

Canonical emphasizes the goal of providing a turnkey environment that’s both developer-friendly and production-ready, particularly in multi-cloud and hybrid setups. This stack is designed to run on any infrastructure, including Kubernetes environments, enabling scalable machine learning without vendor lock-in.

Core Components of the Stack

The Canonical Data Science Stack includes several tightly integrated tools:

Jupyter Notebooks: Facilitates interactive coding, visualization, and narrative documentation—perfect for experimentation and sharing insights.

Pandas: A cornerstone for data manipulation, offering a powerful and intuitive API for wrangling tabular data.

NumPy/SciPy: The backbone for numerical and scientific computing in Python.

Scikit-learn: A mature library for classical ML, supporting preprocessing, modeling, and evaluation out of the box.

TensorFlow / PyTorch: The dominant frameworks for deep learning, offering extensive model-building capabilities and GPU acceleration.

MLflow: Manages the full ML lifecycle—tracking experiments, packaging models, and ensuring reproducibility.

This combination allows data scientists to quickly move from exploration to modeling, all within a cohesive Python-native environment.

Why It Works: Strengths of the Stack

The Canonical Stack provides several strategic advantages:

Ease of Use: Python-based tools with rich documentation and consistent APIs reduce learning curves and boost productivity.

Reproducibility: Integrated tools like MLflow and Git make it easier to track and reproduce experiments, enhancing collaboration and reliability.

Portability: Canonical’s packaging in charmed operators ensures the entire stack can be deployed on Kubernetes across any cloud or on-premises environment.

Community Support: Each tool benefits from active maintenance, broad adoption, and a wealth of online resources and tutorials.

Limitations: Where the Stack Falls Short

While the stack excels during the experimentation phase, it wasn’t initially built with enterprise-scale production in mind. Key gaps include:

Scalability: Tools like Pandas and Scikit-learn are not optimized for distributed processing or large datasets.

Feature Engineering & Lineage: No native support for maintaining consistent feature sets across training and inference phases.

Automation & CI/CD: Lacks built-in tools for orchestrating workflows, conducting automated tests, or integrating with DevOps pipelines.

Model Serving: Minimal native support for deploying models as APIs or services in real-time production environments.

Bridging the Gaps: Toward a Unified MLOps Framework

To evolve the Canonical Stack into a comprehensive MLOps solution, teams can integrate complementary tools:

Data Versioning: Tools like DVC or Pachyderm enable version control for datasets and pipelines.

Feature Stores: Solutions such as Feast manage features consistently across training and serving environments.

Workflow Orchestration: Use Apache Airflow or Prefect to manage data pipelines, schedules, and dependencies.

Model Deployment: Combine MLflow with serving tools like Seldon Core or KServe for scalable, containerized deployment.

Pipeline Management: Frameworks such as Kubeflow or Metaflow allow you to build and manage production-grade ML pipelines on Kubernetes.
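Data versioning is the least familiar item on this list for many teams, so a small sketch may help. Tools such as DVC record content hashes of data files in small metadata files that live in Git. The code below imitates only that core idea with the standard library: `file_digest`, `snapshot`, and `changed_files` are hypothetical helpers, SHA-256 is an arbitrary choice of hash, and the CSV contents are made up.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def snapshot(data_dir: Path) -> Dict[str, str]:
    """Map each file's relative path to its content hash."""
    return {p.relative_to(data_dir).as_posix(): file_digest(p)
            for p in sorted(data_dir.rglob("*")) if p.is_file()}


def changed_files(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Files that were added, removed, or modified between two snapshots."""
    return sorted(k for k in old.keys() | new.keys() if old.get(k) != new.get(k))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,label\n1,0\n2,1\n")
    (root / "test.csv").write_text("id,label\n3,0\n")
    v1 = snapshot(root)  # small enough to commit alongside the code

    (root / "train.csv").write_text("id,label\n1,0\n2,1\n4,1\n")
    v2 = snapshot(root)
    diff = changed_files(v1, v2)
```

Because a snapshot is just a small mapping of paths to hashes, it can be stored next to the code that consumed the data, which is what ties a model back to the exact dataset it was trained on.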

Canonical’s charm-based deployment model makes integrating these tools more seamless. By using Juju, DevOps and data science teams can orchestrate the entire stack as reusable, scalable services—bringing true modularity to ML operations.

A Practical Workflow: From Notebook to Production

Here’s how a typical ML project might evolve using this enhanced Canonical Stack:

Development: A data scientist builds and iterates on a model in Jupyter, using Pandas and Scikit-learn or PyTorch.

Versioning: Data and model code are versioned using Git and DVC.

Experiment Tracking: MLflow logs parameters, metrics, and artifacts, enabling robust experiment comparison.

Orchestration: Airflow schedules and manages the data processing and training pipelines.

Model Serving: The final model is deployed with Seldon Core or MLflow's REST API, accessible in production environments.

Monitoring: Performance is tracked via Prometheus and Grafana, providing observability and alerts.
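The stages above form a dependency graph, and running that graph in a valid order is the core job of an orchestrator. The sketch below shows that ordering with Python's standard `graphlib` module (Python 3.9+). It is not Airflow's API: the task names and the `run_pipeline` helper are hypothetical, and each task only records that it ran.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 deps: Dict[str, Set[str]]) -> List[str]:
    """Run every task after all of its upstream dependencies."""
    # static_order() raises graphlib.CycleError if the graph has a cycle.
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        tasks[name]()
    return order


log: List[str] = []


def make_task(name: str) -> Callable[[], None]:
    def task() -> None:
        log.append(name)  # a real task would do the actual work here
    return task


# Each key lists the tasks that must finish before it can start.
deps: Dict[str, Set[str]] = {
    "version_data": set(),
    "train_model": {"version_data"},
    "log_experiment": {"train_model"},
    "deploy_model": {"train_model"},
    "monitor_model": {"deploy_model"},
}
tasks = {name: make_task(name) for name in deps}
order = run_pipeline(tasks, deps)
```

A production orchestrator adds scheduling, retries, and distributed execution on top of this ordering, but the declared dependencies play the same role.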

Visual Architecture Diagram: Unified Canonical MLOps Lifecycle

Building Modern MLOps with Canonical

The Canonical Data Science Stack offers an elegant starting point for data science initiatives. Its familiar, open-source tools reduce friction in development and provide a consistent Python environment.

Thanks to Canonical’s Kubernetes-native packaging and open-source philosophy, teams can now extend this stack to serve modern MLOps use cases—supporting everything from versioning and orchestration to deployment and monitoring.

By embracing this hybrid approach, organizations gain the agility of rapid experimentation and the robustness of production-ready workflows—bridging the critical gap between data science and engineering.

See Canonical’s official announcement for full release and deployment details.

Summary

MLOps integrates DevOps principles into the machine learning lifecycle to ensure scalable, reproducible, and production-ready AI systems.

Data management is foundational in MLOps, focusing on data quality, consistency, and traceability.

Key elements of MLOps data management include data versioning, feature stores, ETL pipelines, and data lineage.

Data versioning tools like DVC enable consistent data reuse and reproducibility.

Feature stores (e.g., Feast) support centralized, real-time access to engineered features.

ETL pipelines built with tools like Apache Airflow (orchestration) and Delta Lake (storage) support scalable, automated data processing.

Data lineage ensures traceability, critical for audits and model interpretability.

Data management in MLOps is strategic, driving long-term value beyond operations.

Open-source tools like Pachyderm and Delta Lake enhance modular, scalable pipelines.

Model versioning and delivery are essential for tracking experiments and safely deploying models.

MLflow and Seldon Core enable seamless model tracking and Kubernetes-native deployment.

Triton and Flyte provide high-performance inference and workflow orchestration, respectively.

CI/CD practices in MLOps bring automation, consistency, and faster time-to-market for models.

Experimentation tools such as Optuna, Ray Tune, and Weights & Biases help with hyperparameter tuning and tracking.

Distributed training frameworks like Horovod boost scalability across compute environments.

Model monitoring is crucial for ensuring post-deployment performance and drift detection.

Monitoring stacks like Prometheus & Grafana offer metrics and visualization for infrastructure and models.

Explainability tools (e.g., SHAP, LIME) aid in debugging and building trust in models.

The Canonical Data Science Stack bundles tools such as Jupyter, MLflow, and TensorFlow or PyTorch, and can be extended with tools like Kubeflow to cover the complete MLOps lifecycle.

Gaps in the stack are being bridged with integrations and open standards to approach a fully unified, end-to-end MLOps platform.

Conclusion

The evolution of MLOps has transformed how machine learning workflows are managed, moving them from isolated scripts and models to robust, scalable pipelines built on open-source foundations. This article underscores that success in modern AI operations hinges on mastering core pillars: data management, model versioning, experiment tracking, deployment orchestration, and continuous monitoring. Tools like DVC, MLflow, Kubeflow, and Seldon form the backbone of this ecosystem, empowering teams to collaborate, iterate, and scale with confidence. By adopting a structured MLOps framework such as the Canonical Data Science Stack, organizations can reduce friction between development and production, enhance reproducibility, and unlock true business value from AI investments.

Written by

Pronod Bharatiya

As a passionate Machine Learning and Deep Learning enthusiast, I document my learning journey on Hashnode. My experience encompasses various projects, from exploring foundational algorithms to implementing advanced neural networks. I enjoy breaking down complex concepts into digestible insights, making them accessible for all. Join me as I share my thoughts, tutorials, and tips to navigate the exciting world of ML and DL. Connect with me on LinkedIn to explore collaboration opportunities!