Scaling your Apps: The Why, How, and When

Tanmay Bansal

As I’ve been diving deeper into backend systems and real-world web applications, I’ve come across terms like horizontal scaling, vertical scaling, and capacity estimation more times than I can count. These aren't just buzzwords—they're essential for building scalable, high-performance systems.

Today, let’s start exploring these concepts one by one, beginning with Vertical Scaling.

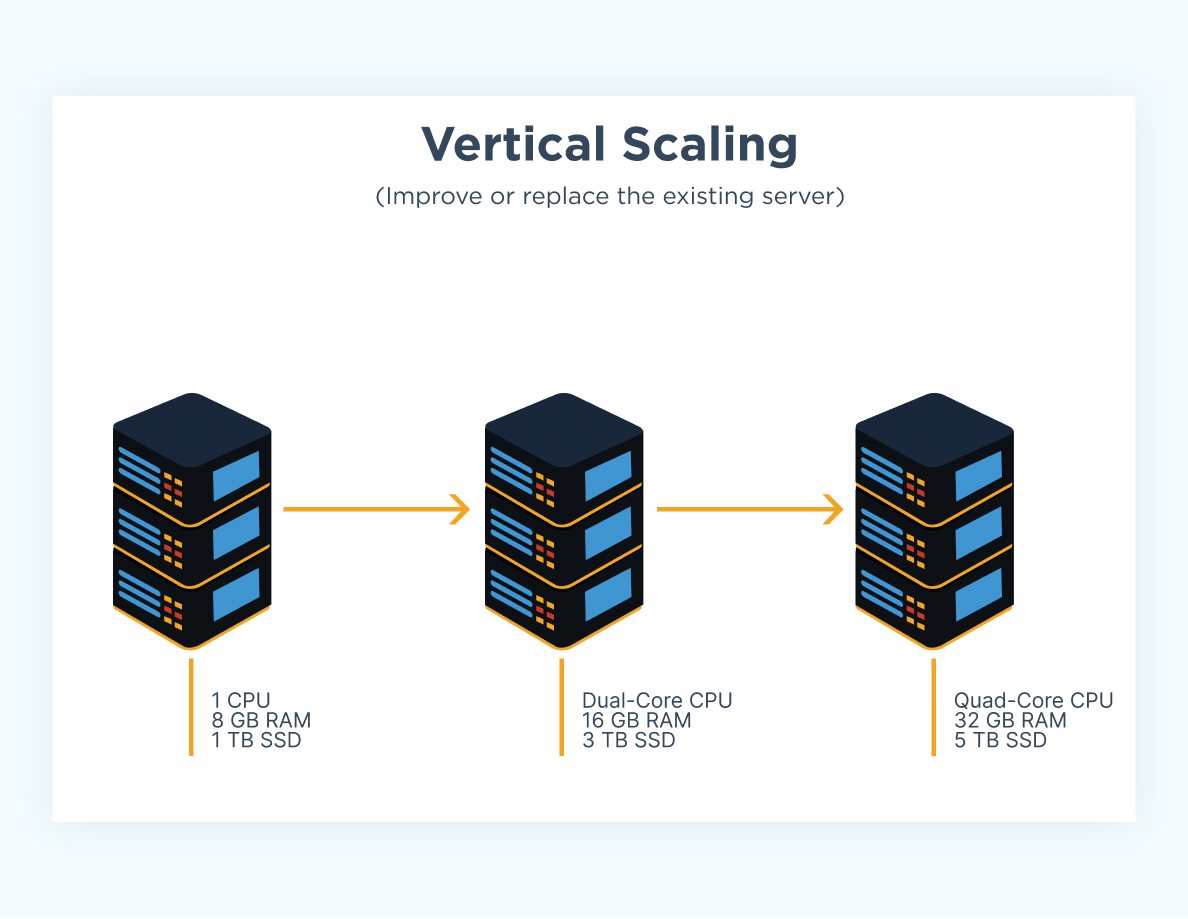

🏗️ What Is Vertical Scaling?

Vertical scaling (aka scaling up) means upgrading your existing server by adding:

🧠 More RAM

🧮 More CPU cores

⚡ Faster storage (SSD over HDD)

Image Source: Vertical vs Horizontal Scaling

🔍 Real Example in Deployment

Say you're deploying a Node.js app on a DigitalOcean droplet. You start with:

Basic Plan: 1 vCPU, 1 GB RAM

As traffic increases, you upgrade to:

Upgraded Plan: 4 vCPU, 8 GB RAM

No code changes needed — your server just has more horsepower to handle requests. This is vertical scaling in action: same code, better infrastructure.

⚙️ How Apps Should React to Vertical Scaling

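While you don’t scale vertically in code, your application should be designed to use the increased resources efficiently. Keep in mind that a single Node.js process runs your JavaScript on one core, so extra vCPUs pay off mainly when you run several processes (with pm2 or the cluster module, for instance). Some general habits that help: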

Use asynchronous I/O

Optimize database queries

Use memory and CPU wisely (avoid memory leaks, unbounded loops, etc.)

The most important point? With vertical scaling, your code doesn’t need to know the machine is upgraded — it just runs better.
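One way to let the same code benefit from a bigger machine is to read the available resources at startup instead of hardcoding them. Here is a minimal sketch of that idea. The helper `pickWorkerCount` and its leave-one-core-free rule are assumptions made up for this example, not a rule from any library.

```javascript
const os = require('os');

// Hypothetical helper: derive a worker count from the cores that are
// actually present, leaving one core free when there is more than one.
function pickWorkerCount(cpuCount = os.cpus().length) {
  return Math.max(1, cpuCount - 1);
}

// On a 1 vCPU droplet this picks 1 worker; on a 4 vCPU droplet it picks 3,
// with no code change between the two plans.
console.log(`cores: ${os.cpus().length}, workers: ${pickWorkerCount()}`);
console.log(`total RAM: ${(os.totalmem() / 1024 ** 3).toFixed(1)} GB`);
```

The result could then drive how many processes you start, so an upgrade is picked up on the next restart.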

✅ Pros of Vertical Scaling

+ Simple to implement

+ No change to application code

+ Great for monolithic apps

❌ Cons of Vertical Scaling

- Hardware limitations (you can’t scale forever)

- Downtime during upgrades

- Cost increases rapidly at higher tiers

👨‍🍳 Analogy: Your Burger Stall

You’re running a small burger stall. Orders start piling up. You:

Get a bigger grill 🍳

Hire more people 👨‍🍳

Add more tables 🪑

This is vertical scaling — making the existing setup more powerful.

But… what if there's no more space? That’s when horizontal scaling comes in — multiple burger stalls! 🍔🍔🍔 (We’ll get to that next.)

📈 When to Consider Vertical Scaling

Use monitoring tools like:

htop / top (Linux CPU usage)

pm2 (Node.js process manager)

New Relic / Datadog (application metrics)

Example with htop:

sudo apt install htop

htop

If CPU usage stays above 80–90% for sustained periods, or RAM is nearly exhausted, that is a clear sign the machine needs more resources.
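The same rule of thumb can be checked from inside a Node.js process. This is only a rough sketch: the thresholds `CPU_LIMIT` and `MEM_LIMIT` and the helper names are assumptions for illustration, and a single reading is not the same as sustained load, so in practice you would sample repeatedly or rely on a monitoring tool.

```javascript
const os = require('os');

// Assumed thresholds, loosely based on the 80-90% rule of thumb.
const CPU_LIMIT = 0.85;
const MEM_LIMIT = 0.9;

// Pure decision function: both inputs are fractions between 0 and 1.
function shouldScaleUp(cpuUsage, memUsage) {
  return cpuUsage > CPU_LIMIT || memUsage > MEM_LIMIT;
}

// Rough snapshot of the current machine. The 1-minute load average divided
// by the core count approximates CPU pressure on Linux and macOS
// (os.loadavg() returns zeros on Windows).
function snapshot() {
  const cores = os.cpus().length || 1;
  const cpuUsage = os.loadavg()[0] / cores;
  const memUsage = 1 - os.freemem() / os.totalmem();
  return { cpuUsage, memUsage };
}

const { cpuUsage, memUsage } = snapshot();
console.log({ cpuUsage, memUsage, scaleUp: shouldScaleUp(cpuUsage, memUsage) });
```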

Let me know if you’ve ever hit a performance wall and what your fix was—I’d love to hear your stories!

Capacity Estimation: Planning for Growth

Before scaling — vertically or horizontally — it’s essential to estimate how much capacity you’ll actually need.

Think of this as resource budgeting for your system. Without it, you’re either overspending or under-preparing.

🧠 What Is Capacity Estimation?

Capacity estimation helps answer:

🧾 How many requests can my current setup handle?

📈 What happens when traffic grows 10x?

🧯 When will my system crash under load?

It’s the process of calculating the maximum throughput of your system and identifying bottlenecks before they cause outages.

📐 Estimating Capacity Step-by-Step

Let’s go through a practical framework using a backend API as an example:

1. Measure Baseline Performance

Start with a single machine setup. Use tools like:

wrk (HTTP benchmarking)

ab (Apache Bench)

artillery, k6, or locust

wrk -t12 -c400 -d30s http://localhost:3000

Let’s say this outputs:

Requests/sec: 500

That means 1 server can handle 500 RPS (requests per second).

2. Factor in Peak Load

If your app gets ~100,000 daily users, and on average each makes 20 requests:

100,000 users × 20 reqs = 2,000,000 total requests/day

Spread across 24 hours:

2,000,000 ÷ 86,400 seconds ≈ 23 RPS average

But traffic is not uniform — you’ll likely have peak hours. Multiply by a safety factor (say 10x):

Peak RPS ≈ 230
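The arithmetic above is easy to wrap in two small functions so you can rerun it with your own numbers. This is just a sketch: the function names are mine, and the default peak factor of 10 is the same assumed safety factor used in this section, not a measured value.

```javascript
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400

// Average requests per second over a full day.
function averageRps(dailyUsers, requestsPerUser) {
  return (dailyUsers * requestsPerUser) / SECONDS_PER_DAY;
}

// Peak estimate: the average multiplied by an assumed peak factor.
function peakRps(dailyUsers, requestsPerUser, peakFactor = 10) {
  return averageRps(dailyUsers, requestsPerUser) * peakFactor;
}

console.log(averageRps(100000, 20).toFixed(1)); // 23.1
console.log(peakRps(100000, 20).toFixed(1));    // 231.5
```

The unrounded peak comes out slightly above 230 because the text rounds the average to 23 before multiplying.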

3. Decide Based on Throughput

If 1 server = 500 RPS, and you need 230 peak RPS, you’re good.

But if you're expecting a marketing campaign or viral event to push this to 2,000 RPS, you’ll need:

2000 ÷ 500 = 4 servers (horizontally scaled)

OR upgrade a single machine vertically if the cost and performance align.
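The server count can be computed the same way. In this sketch, `serversNeeded` is a name I made up, and `targetUtilization` is an extra assumed knob: running every server at 100% of its measured limit leaves no headroom, so you may prefer to plan for something lower.

```javascript
// Servers needed to serve peakRps when each server sustains perServerRps.
// targetUtilization is an assumed knob: 1 means run servers at their
// measured limit, 0.7 means plan to use only 70% of it.
function serversNeeded(peakRps, perServerRps, targetUtilization = 1) {
  if (perServerRps <= 0 || targetUtilization <= 0 || targetUtilization > 1) {
    throw new RangeError('perServerRps must be > 0 and targetUtilization in (0, 1]');
  }
  return Math.max(1, Math.ceil(peakRps / (perServerRps * targetUtilization)));
}

console.log(serversNeeded(230, 500));       // 1
console.log(serversNeeded(2000, 500));      // 4
console.log(serversNeeded(2000, 500, 0.7)); // 6
```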

4. Monitor and Revisit

Capacity estimation isn’t a one-time thing.

✅ Use:

Grafana + Prometheus for dashboards

Datadog, New Relic, Elastic APM

Alerts for CPU/RAM usage, request latency, DB slow queries

📉 Regularly revisit as your feature set, traffic patterns, or codebase evolve.

📦 Capacity Estimation for Databases

Don’t forget your database!

Measure average query time under load

Identify slow queries and speed them up with indexes (more on that in the next article!)

Use read replicas or DB sharding if DB is the bottleneck

🧮 Example Capacity Chart

| Component | Capacity (RPS) | Bottleneck? |
| --- | --- | --- |
| Node.js Server | 500 | ❌ |
| DB (Postgres) | 300 | ✅ |
| Redis Cache | 5,000 | ❌ |

In this example, even if the Node server can handle more, the DB becomes the limiting factor. This is where indexing or horizontal DB scaling comes in.
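Finding the bottleneck in a chart like this comes down to picking the smallest capacity. Here is a small sketch using the example numbers. It assumes every request passes through every component, which is a simplification (a cache hit, for instance, may never reach the database).

```javascript
// Capacities from the example chart, in requests per second.
const capacities = {
  'Node.js Server': 500,
  'DB (Postgres)': 300,
  'Redis Cache': 5000,
};

// The component with the smallest capacity caps the whole request path,
// assuming every request passes through every component.
function findBottleneck(components) {
  const entries = Object.entries(components);
  if (entries.length === 0) throw new Error('no components given');
  const [name, rps] = entries.reduce((min, cur) => (cur[1] < min[1] ? cur : min));
  return { name, rps };
}

console.log(findBottleneck(capacities)); // { name: 'DB (Postgres)', rps: 300 }
```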

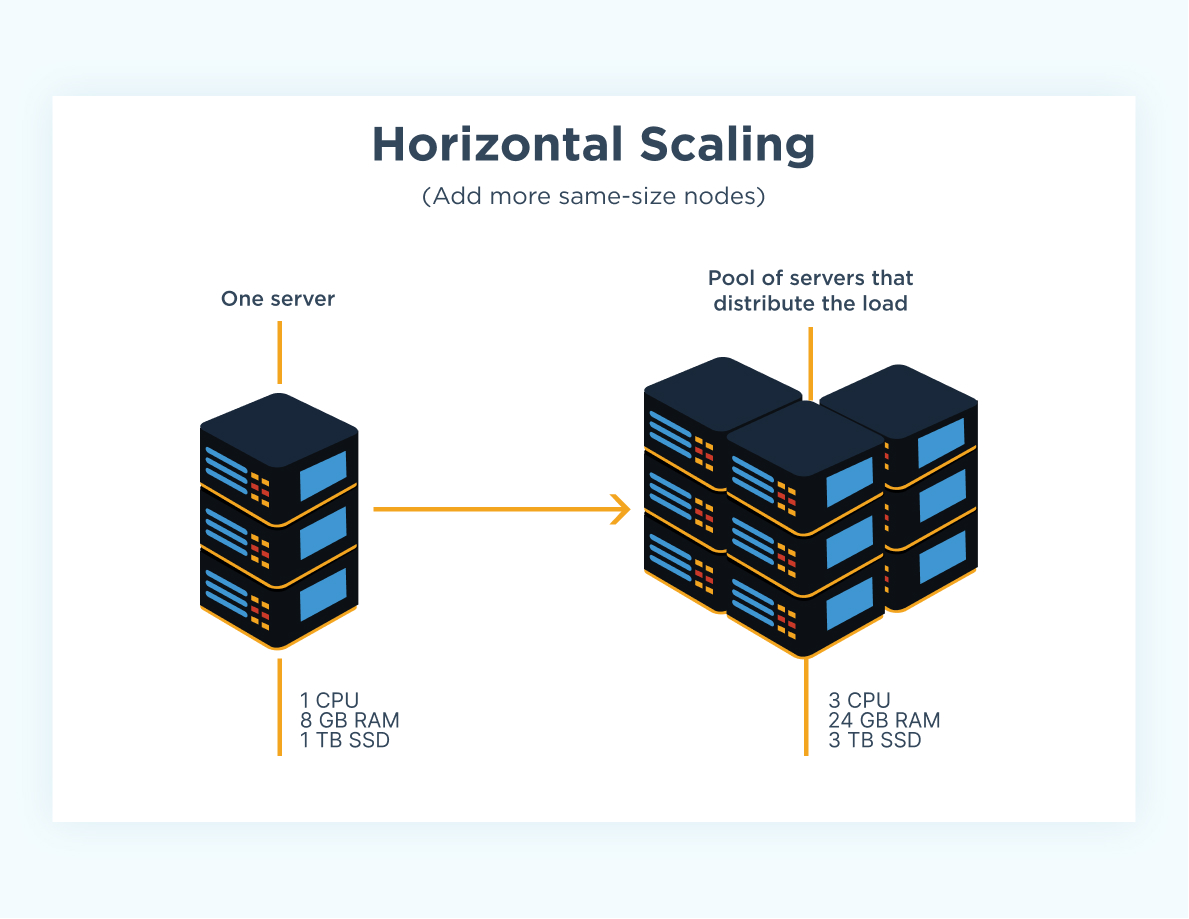

🌐 What Is Horizontal Scaling?

Horizontal scaling (aka scaling out) means running multiple instances of your application on different servers or machines.

Instead of upgrading the size of one machine, you add more machines to distribute the load.

Image Source: Vertical vs Horizontal Scaling

🚀 Real Example in Deployment

Let’s say you have a web server receiving a ton of traffic. One instance can’t handle it alone. Instead of just upgrading it (vertical), you:

Deploy 3 instances of the server

Put a load balancer (like NGINX or HAProxy) in front

Distribute incoming traffic across instances

Incoming Request ─▶ Load Balancer ─┬▶ Server A
                                   ├▶ Server B
                                   └▶ Server C

🔄 Load Balancer Code Example (NGINX)

Here’s a super simple nginx.conf snippet:

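# Required top-level block; the defaults are fine for a demo
events {}

http {
    # Pool of app instances; NGINX round-robins across them by default
    upstream backend {
        server 127.0.0.1:3000;
        server 127.0.0.1:3001;
        server 127.0.0.1:3002;
    }

    server {
        listen 80;

        location / {
            # Forward each request to one server from the pool
            proxy_pass http://backend;
        }
    }
}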
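With no other directive in the upstream block, NGINX hands requests to the listed servers in round-robin order. To make that idea concrete, here is a toy version in JavaScript. It is only an illustration of the rotation, not how NGINX is implemented, and `createRoundRobin` is a hypothetical name.

```javascript
// Returns a function that cycles through the given servers in order.
function createRoundRobin(servers) {
  if (servers.length === 0) throw new Error('at least one server is required');
  let next = 0;
  return function pick() {
    const server = servers[next];
    next = (next + 1) % servers.length;
    return server;
  };
}

const pick = createRoundRobin(['127.0.0.1:3000', '127.0.0.1:3001', '127.0.0.1:3002']);
for (let i = 0; i < 4; i++) {
  console.log(pick()); // ports 3000, 3001, 3002, then 3000 again
}
```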

👨‍💻 Node.js Cluster Example for Horizontal Scaling

Node.js also ships a built-in cluster module that runs several worker processes on one machine, typically one per CPU core:

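const cluster = require('cluster');
const os = require('os');
const http = require('http');

// cluster.isPrimary needs Node.js 16+; older versions call it cluster.isMaster
if (cluster.isPrimary) {
  // Primary process: fork one worker per CPU core
  const cpuCount = os.cpus().length;
  for (let i = 0; i < cpuCount; i++) {
    cluster.fork();
  }
} else {
  // Worker process: all workers share port 8000
  http.createServer((req, res) => {
    res.writeHead(200);
    res.end(`Handled by worker ${process.pid}\n`);
  }).listen(8000);
}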

This code runs multiple worker processes across available CPU cores, effectively simulating horizontal scaling on a single machine.

✅ Pros of Horizontal Scaling

+ High availability (one instance goes down, others stay up)

+ Can scale beyond hardware limits

+ Better fault tolerance
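The availability benefit depends on the load balancer noticing a dead instance and routing around it. Here is a minimal sketch of that behaviour. `createFailoverPicker` and the `isHealthy` callback are hypothetical; a real load balancer would back the callback with health checks.

```javascript
// Round-robin over servers, skipping any that are marked unhealthy.
// isHealthy is a hypothetical callback standing in for real health checks.
function createFailoverPicker(servers, isHealthy) {
  let next = 0;
  return function pick() {
    for (let tried = 0; tried < servers.length; tried++) {
      const server = servers[next];
      next = (next + 1) % servers.length;
      if (isHealthy(server)) return server;
    }
    return null; // every instance is down
  };
}

// Server B is down, so traffic alternates between A and C.
const down = new Set(['B']);
const pickServer = createFailoverPicker(['A', 'B', 'C'], (s) => !down.has(s));
console.log(pickServer(), pickServer(), pickServer()); // A C A
```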

❌ Cons of Horizontal Scaling

- Requires load balancers

- Adds complexity to deployment and state management

- Needs inter-service communication strategies
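The state management point is easiest to see with a counter. In this sketch each instance keeps a local count in memory and also writes to a shared store. A plain `Map` plays the role of an external store such as Redis, which is an assumption made only to keep the example self-contained; the `Instance` class is likewise hypothetical.

```javascript
// Each instance keeps its own in-memory counter: that state is per process.
class Instance {
  constructor(sharedStore) {
    this.localVisits = 0;
    this.sharedStore = sharedStore; // stand-in for an external store
  }

  handleRequest() {
    this.localVisits += 1;
    this.sharedStore.set('visits', (this.sharedStore.get('visits') || 0) + 1);
  }
}

const store = new Map();
const a = new Instance(store);
const b = new Instance(store);

// Pretend the load balancer sends two requests to A and one to B.
a.handleRequest();
a.handleRequest();
b.handleRequest();

console.log(a.localVisits, b.localVisits); // 2 1 (neither instance sees the total)
console.log(store.get('visits'));          // 3
```

Anything that must be consistent across instances (sessions, counters, rate limits) needs to live outside the individual process.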

👩‍🍳 Analogy: Expanding the Burger Empire

Remember your burger stall? You’ve maxed out your space. So, you:

Open another stall down the street 🍔

Then another in the next neighborhood 🍔

This is horizontal scaling — adding more stalls (servers) to meet demand.

You might even hire a delivery service (load balancer!) to route orders intelligently.

⚖️ When to Choose Horizontal Over Vertical

Vertical scaling hits hardware or cost limits

You need redundancy and high availability

You’re designing a distributed system

📌 Often, companies start with vertical scaling (it’s easier), and move to horizontal scaling as they grow.

🏁 Conclusion: Building For Tomorrow, Today!

Scaling is not just about brute-forcing hardware or adding more servers—it's about planning, measuring, and evolving as your system grows.

Whether you're vertically scaling a hobby project, horizontally scaling a startup backend, or capacity planning for a production-grade system—understanding these concepts helps you make smart, cost-effective, and reliable decisions.

🔁 Keep measuring. 📈 Keep optimizing. 🧠 Keep learning.

If you’ve found this article helpful, stay tuned for the next one where we’ll cover Indexing in Databases and how it plays a massive role in scaling efficiently!

Follow along, share your thoughts in the comments, and happy scaling! 🚀
