Session URL: How to Ensure User Privacy During Human-Computer Interaction?

Scraper0024

Scrapeless Scraping Browser now fully supports automation tasks through Session-based workflows. Whether initiated via the Playground or API, all program executions can be synchronously tracked in the Dashboard.

Open Live View to monitor runtime status in real time.

Share the Live URL for remote user interaction—such as login pages, form filling, or payment completion.

Review the entire execution process with Session Replay.

But you might wonder:

What exactly are these Session features? How do they benefit me? And how do I use them?

In this blog, we’ll explore the Session features of Scrapeless Scraping Browser in depth, covering:

The concept and purpose of Live View

What the Live URL is

How to use Live URL for direct user interaction

Why Session Replay is essential

Live View: Real-Time Program Monitoring

The Live View feature in Scrapeless Scraping Browser allows you to track and control browser sessions in real time. Specifically, it enables you to observe clicks, inputs, and all browser actions, monitor automation workflows, debug scripts manually, and take direct control of the session if needed.

Creating a Browser Session

First, you need to create a session. There are two ways to do this:

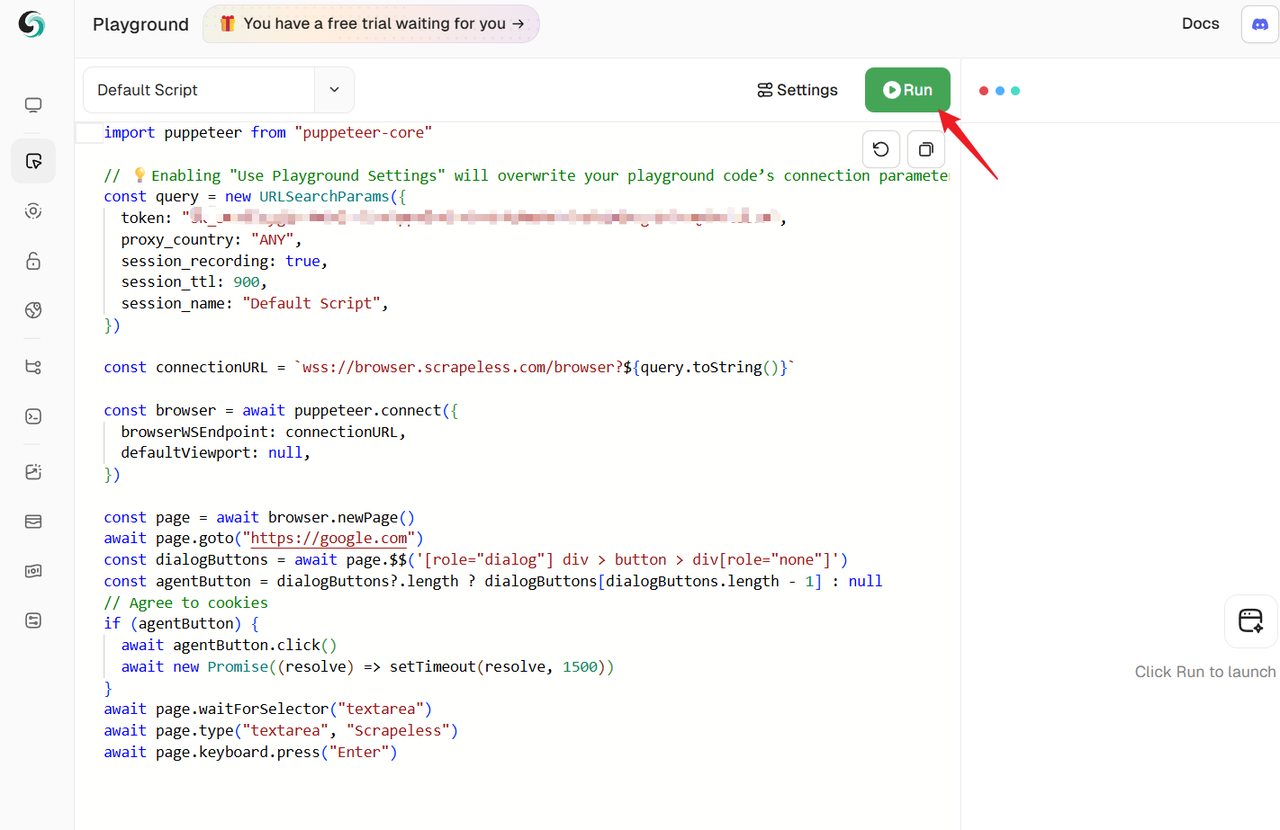

Method 1: Create a session via Playground

Method 2: Create a session via API

You can also create a session through our API; see the Scraping Browser API Documentation for details. Sessions created this way get the same management features as Playground sessions, including real-time viewing.

```javascript
const puppeteer = require('puppeteer-core');

const token = 'API Key'; // replace with your Scrapeless API key

// custom fingerprint
const fingerprint = {
  platform: 'Windows',
};

const query = new URLSearchParams({
  session_ttl: 180,
  session_name: 'test_scraping', // session name
  proxy_country: 'ANY',
  token: token,
  fingerprint: encodeURIComponent(JSON.stringify(fingerprint)),
});

const connectionURL = `wss://browser.scrapeless.com/browser?${query.toString()}`;

(async () => {
  const browser = await puppeteer.connect({ browserWSEndpoint: connectionURL });
  const page = await browser.newPage();

  // Visit three pages, pausing 3 seconds on each one.
  await page.goto('https://www.scrapeless.com');
  await new Promise(res => setTimeout(res, 3000));

  await page.goto('https://www.google.com');
  await new Promise(res => setTimeout(res, 3000));

  await page.goto('https://www.youtube.com');
  await new Promise(res => setTimeout(res, 3000));

  await browser.close();
})();
```
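For readers working in Python, the same connection URL can be assembled with the standard library. This is a minimal sketch that mirrors the query parameters of the JavaScript example above. The helper name `build_connection_url` is hypothetical, and encoding the fingerprint once before the query string is built simply copies what the JavaScript code does, on the assumption that the service expects it.

```python
import json
from urllib.parse import quote, urlencode

# Endpoint taken from the JavaScript example above.
BROWSER_ENDPOINT = "wss://browser.scrapeless.com/browser"


def build_connection_url(token, session_name, session_ttl=180,
                         proxy_country="ANY", fingerprint=None):
    """Build the WebSocket URL (hypothetical helper, not part of any SDK)."""
    params = {
        "session_ttl": session_ttl,
        "session_name": session_name,
        "proxy_country": proxy_country,
        "token": token,
    }
    if fingerprint is not None:
        # Compact JSON, percent-encoded once here; urlencode() below encodes
        # it again, mirroring encodeURIComponent + URLSearchParams in the JS.
        compact = json.dumps(fingerprint, separators=(",", ":"))
        params["fingerprint"] = quote(compact, safe="")
    return f"{BROWSER_ENDPOINT}?{urlencode(params)}"


url = build_connection_url("API Key", "test_scraping",
                           fingerprint={"platform": "Windows"})
print(url)
```

The resulting string can be passed as `browserWSEndpoint` to a Puppeteer-compatible client, as in the JavaScript version.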

View Live Sessions

In the Scrapeless session management interface, you can easily view live sessions:

Method 1: View Live Sessions Directly in the Dashboard

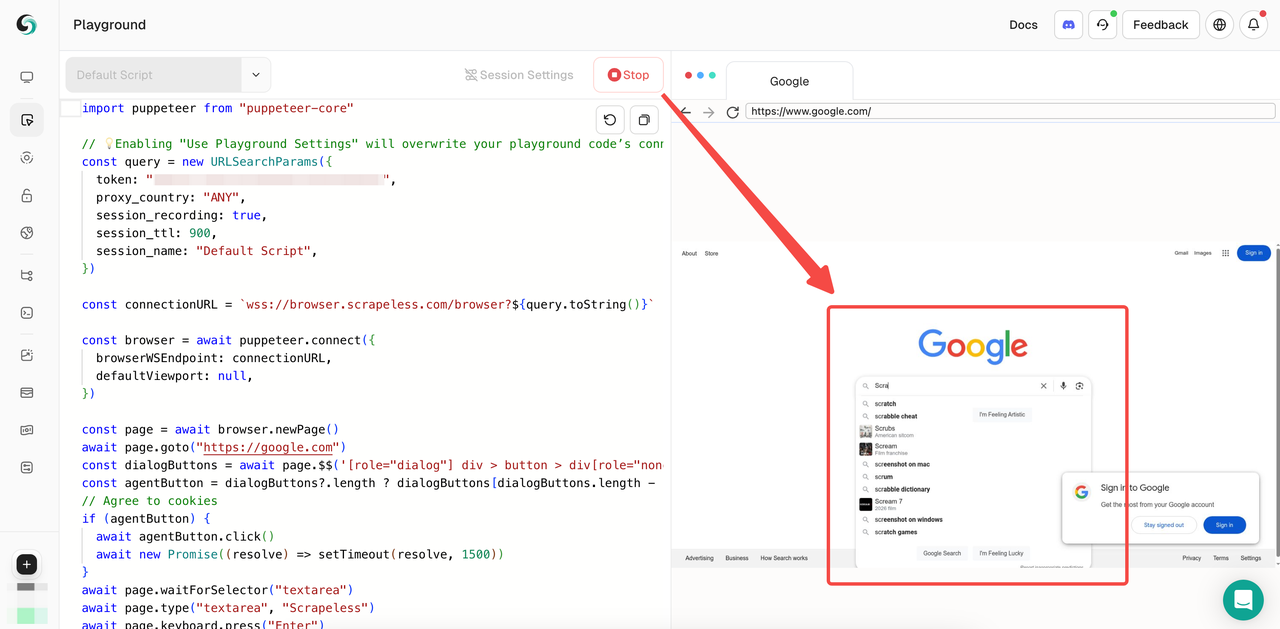

After creating a session in the Playground, you’ll see the live running session on the right side.

Alternatively, you can check the status of each session on the Live Sessions page.

Method 2: View Session via Live URL

A Live URL is generated for a running session, allowing you to watch the process live in a browser.

Live URLs are useful for:

Debugging & Monitoring: Watch everything in real time or share it with teammates.

Human Interaction: Control or input directly—let the user enter sensitive info like passwords securely.

You can copy the Live URL by clicking the "🔗" icon on the Live Sessions page. Both Playground and API-created sessions support Live URL.

- Get Live URL from the Dashboard

See our tutorial below:

- Get Live URL via API

You can also retrieve the Live URL through API calls. The sample code below fetches all running sessions via the session API, then uses the Live URL API to retrieve the live view for a specific session:

```python
import requests

API_CONFIG = {
    "host": "https://api.scrapeless.com",
    "headers": {
        "x-api-token": "API Key",  # replace with your Scrapeless API key
        "Content-Type": "application/json",
    },
}


def fetch_live_url(task_id):
    """Fetch and print the Live URL of one running session."""
    try:
        live_response = requests.get(
            f"{API_CONFIG['host']}/browser/{task_id}/live",
            headers=API_CONFIG["headers"],
        )
        if not live_response.ok:
            raise Exception(
                f"failed to fetch live url: {live_response.status_code} {live_response.reason}"
            )

        live_result = live_response.json()
        if live_result and live_result.get("data"):
            print(f"taskId: {task_id}")
            print(f"liveUrl: {live_result['data']}")
        else:
            print("no live url data available for this task")
    except Exception as error:
        print(f"error fetching live url for task {task_id}: {error}")


def fetch_browser_sessions():
    """List the running sessions and print the Live URL of the first one."""
    try:
        session_response = requests.get(
            f"{API_CONFIG['host']}/browser/running",
            headers=API_CONFIG["headers"],
        )
        if not session_response.ok:
            raise Exception(
                f"failed to fetch sessions: {session_response.status_code} {session_response.reason}"
            )

        session_result = session_response.json()
        sessions = session_result.get("data")
        if not sessions or not isinstance(sessions, list):
            print("no active browser sessions found")
            return

        task_id = sessions[0].get("taskId")
        if not task_id:
            print("task id not found in the session data")
            return

        fetch_live_url(task_id)
    except Exception as error:
        print(f"error fetching browser sessions: {error}")


fetch_browser_sessions()
```
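The sample above only looks at the first running session. As a small sketch of handling several sessions, the helper below collects every `taskId` from a response shaped like the one the sample reads (a `data` list of objects with a `taskId` field). The function name and the sample payload are illustrative assumptions, not output captured from the API.

```python
def running_task_ids(session_result):
    """Return the taskId of every session in a /browser/running-style payload."""
    sessions = session_result.get("data") if isinstance(session_result, dict) else None
    if not isinstance(sessions, list):
        return []
    # Skip malformed entries rather than failing on the first bad one.
    return [s["taskId"] for s in sessions if isinstance(s, dict) and s.get("taskId")]


# Made-up payload for illustration only.
sample = {"data": [{"taskId": "task-a"}, {"name": "no id"}, {"taskId": "task-b"}]}
print(running_task_ids(sample))
```

Each returned ID could then be passed to the Live URL request shown in the sample.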

- Get Live URL via CDP command

To obtain the Live URL while the code is running, use the CDP command Agent.liveURL:

```python
import asyncio

from pyppeteer import launcher


async def main():
    try:
        browser = await launcher.connect(
            browserWSEndpoint="wss://browser.scrapeless.com/browser?token=APIKey&session_ttl=180&proxy_country=ANY"
        )
        page = await browser.newPage()
        await page.goto('https://www.scrapeless.com')

        # Open a CDP session on the page and request the session's Live URL.
        client = await page.target.createCDPSession()
        result = await client.send('Agent.liveURL')
        print(result)
    except Exception as e:
        print(e)


asyncio.run(main())
```
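The script above prints the whole CDP result. The JavaScript example later in this post reads the link from a `liveURL` field, so a Python caller would presumably do the same. The sketch below is one way to pull that field out and sanity-check it before sharing; the helper name is hypothetical, the sample value is made up, and the check that the link is an http(s) URL is an assumption.

```python
from urllib.parse import urlparse


def extract_live_url(cdp_result):
    """Return the Live URL from an Agent.liveURL result, or raise ValueError."""
    live_url = cdp_result.get("liveURL") if isinstance(cdp_result, dict) else None
    if not isinstance(live_url, str) or not live_url:
        raise ValueError("no liveURL field in CDP result")
    parsed = urlparse(live_url)
    # Assumption: a shareable Live URL is an ordinary http(s) link.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"unexpected Live URL format: {live_url!r}")
    return live_url


# Made-up result for illustration; a real one comes from client.send('Agent.liveURL').
sample_result = {"liveURL": "https://example.com/live/abc123"}
print(extract_live_url(sample_result))
```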

A Highlight Worth Mentioning:

Live URL not only allows real-time monitoring, but also human-machine interaction.

For example, suppose you need the user to enter their login password.

“Oh no! Are you trying to steal my private info? No way!”

In fact, the user types the data into the live screen themselves, so they never have to hand the password over to you. This direct yet secure form of remote interaction is what Live URL enables.

Live URL: How It Enables Collaboration and User Interaction

Let’s take registering and logging into Scrapeless as an example and walk through how to interact directly with users.

Here’s the code you'll need:

```javascript
const puppeteer = require("puppeteer-core");

(async () => {
  const fingerprint = {
    // custom screen fingerprint
    screen: {
      width: 1920,
      height: 1080,
    },
    args: {
      // set the window size to the same value as the screen fingerprint
      "--window-size": "1920,1080",
    },
  };

  const query = new URLSearchParams({
    token: "APIKey",
    session_ttl: 600,
    proxy_country: "ANY",
    fingerprint: encodeURIComponent(JSON.stringify(fingerprint)),
  });

  const browserWsEndpoint = `wss://browser.scrapeless.com/browser?${query.toString()}`;

  try {
    const browser = await puppeteer.connect({
      browserWSEndpoint: browserWsEndpoint,
    });
    const page = await browser.newPage();
    await page.setViewport(null);
    await page.goto("https://app.scrapeless.com/passport/register", {
      timeout: 120000,
      waitUntil: "domcontentloaded",
    });

    const client = await page.createCDPSession();
    const result = await client.send("Agent.liveURL");

    // you can share the live URL with any user
    console.log(result.liveURL);

    // Keep the session open for 5 minutes so the user can register.
    // The selector never matches, so this call just waits until it times out;
    // the resulting timeout error is caught and logged below.
    await page.waitForSelector("#none-existing-selector", { timeout: 300_000 });
  } catch (e) {
    console.log(e);
  }
})();
```

Run the code above and share the Live URL with the user, for example: Scrapeless Registration URL.

All the earlier steps, such as:

Navigating to the website

Visiting Scrapeless homepage

Clicking login and entering the registration page

can be automated by creating a session with the code above. The critical step is the one only the user can perform: entering their email and password to complete the registration.

After you share the Live URL with the user, you can follow the program's execution remotely. The program runs automatically and navigates until it reaches the page that requires user interaction. The password the user enters is completely hidden, so the user does not need to worry about it leaking.
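The example script waits a fixed five minutes for the user. As an alternative sketch, the script could poll for a sign that the user has finished and stop waiting as soon as it appears. The `wait_until` helper below is hypothetical, and the idea that the page leaves the registration URL once the user is done is an assumption about the site's behavior; with pyppeteer, the predicate might be something like `lambda: "/passport/register" not in page.url`.

```python
import asyncio
import time


async def wait_until(predicate, timeout=300.0, interval=1.0):
    """Poll `predicate` until it returns True or `timeout` seconds elapse.

    Returns True if the predicate succeeded, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        await asyncio.sleep(interval)


# Stand-in for a real page: pretend the user finishes on the third check.
checks = {"count": 0}


def user_left_register_page():
    checks["count"] += 1
    return checks["count"] >= 3


finished = asyncio.run(wait_until(user_left_register_page, timeout=5, interval=0.01))
print(finished)
```

Returning a boolean instead of raising on timeout lets the calling script decide whether to retry, extend the wait, or close the session.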

To show the user's side of the process more clearly, refer to the following interaction steps. The entire interaction takes place inside the Live URL.

Session Replay: Replay Program Execution to Debug Everything

Session Replay is a video-like recreation of a user session built using the Recording Library. Replays are created from snapshots of the web application's DOM state (the HTML representation in the browser's memory). When you play the snapshots back, you see a record of the actions taken during the entire session, including all page loads, refreshes, and navigations that occurred.

Session Replay can help you troubleshoot every aspect of your program's operation. All page operations are recorded and saved as a video-like replay. If something goes wrong during a session, you can replay it to find the cause and adjust your program.
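To make the snapshot idea concrete, here is a toy model of snapshot-based replay: timestamped DOM snapshots are stored in order, and "seeking" to a moment means showing the latest snapshot taken at or before it. This is only an illustration of the concept with made-up class and method names; it does not describe how Scrapeless or its recording library works internally.

```python
from bisect import bisect_right


class SnapshotRecorder:
    """Toy recorder: stores (timestamp, html) snapshots in time order."""

    def __init__(self):
        self._times = []
        self._snapshots = []

    def record(self, timestamp, html):
        if self._times and timestamp < self._times[-1]:
            raise ValueError("snapshots must be recorded in time order")
        self._times.append(timestamp)
        self._snapshots.append(html)

    def state_at(self, timestamp):
        """Return the latest snapshot taken at or before `timestamp`, or None."""
        index = bisect_right(self._times, timestamp)
        return self._snapshots[index - 1] if index else None


recorder = SnapshotRecorder()
recorder.record(0.0, "<h1>Home</h1>")
recorder.record(3.0, "<h1>Search</h1>")
recorder.record(6.0, "<h1>Video</h1>")

# Seek to 4.5 seconds into the session.
print(recorder.state_at(4.5))
```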

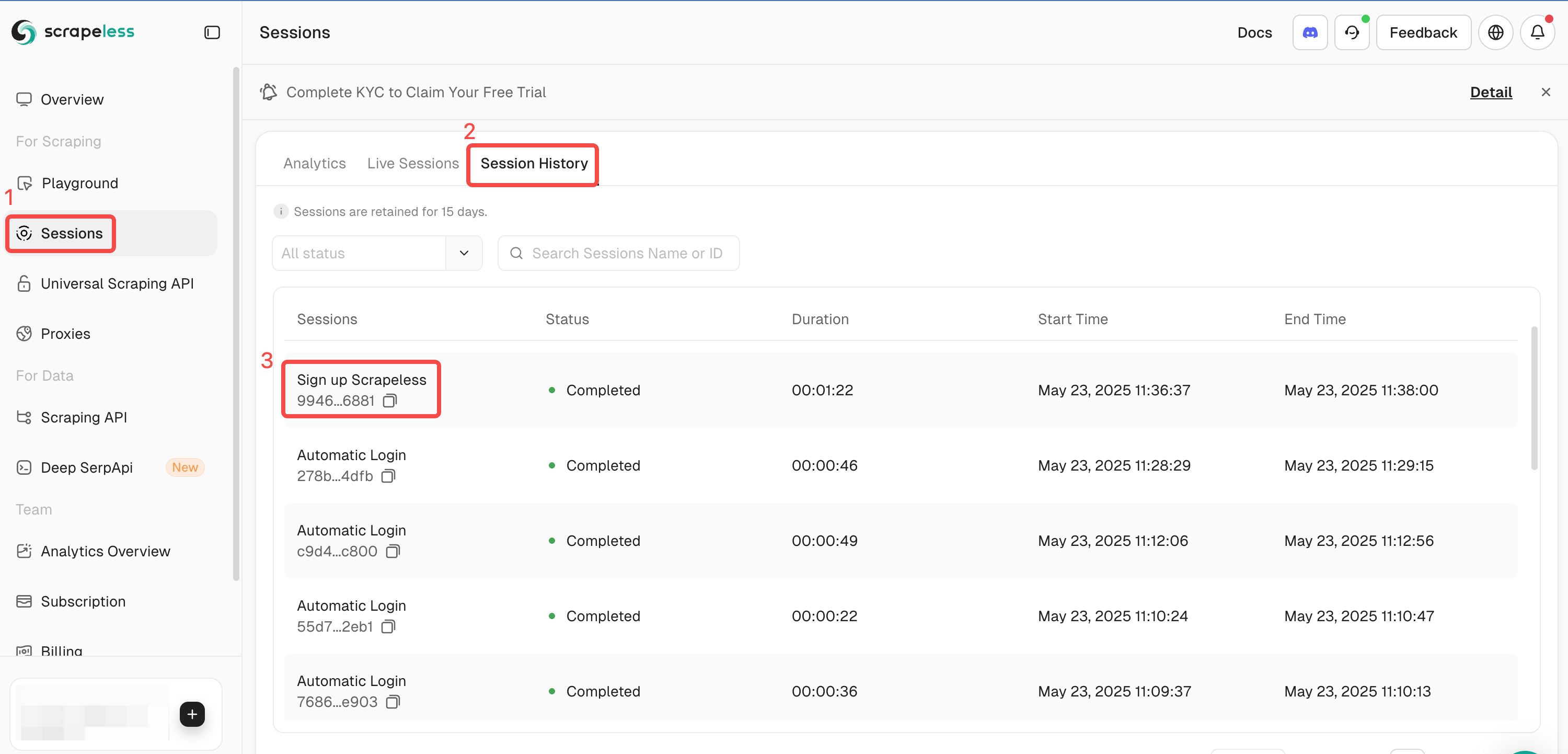

Go to Sessions

Click Session History

Locate the session

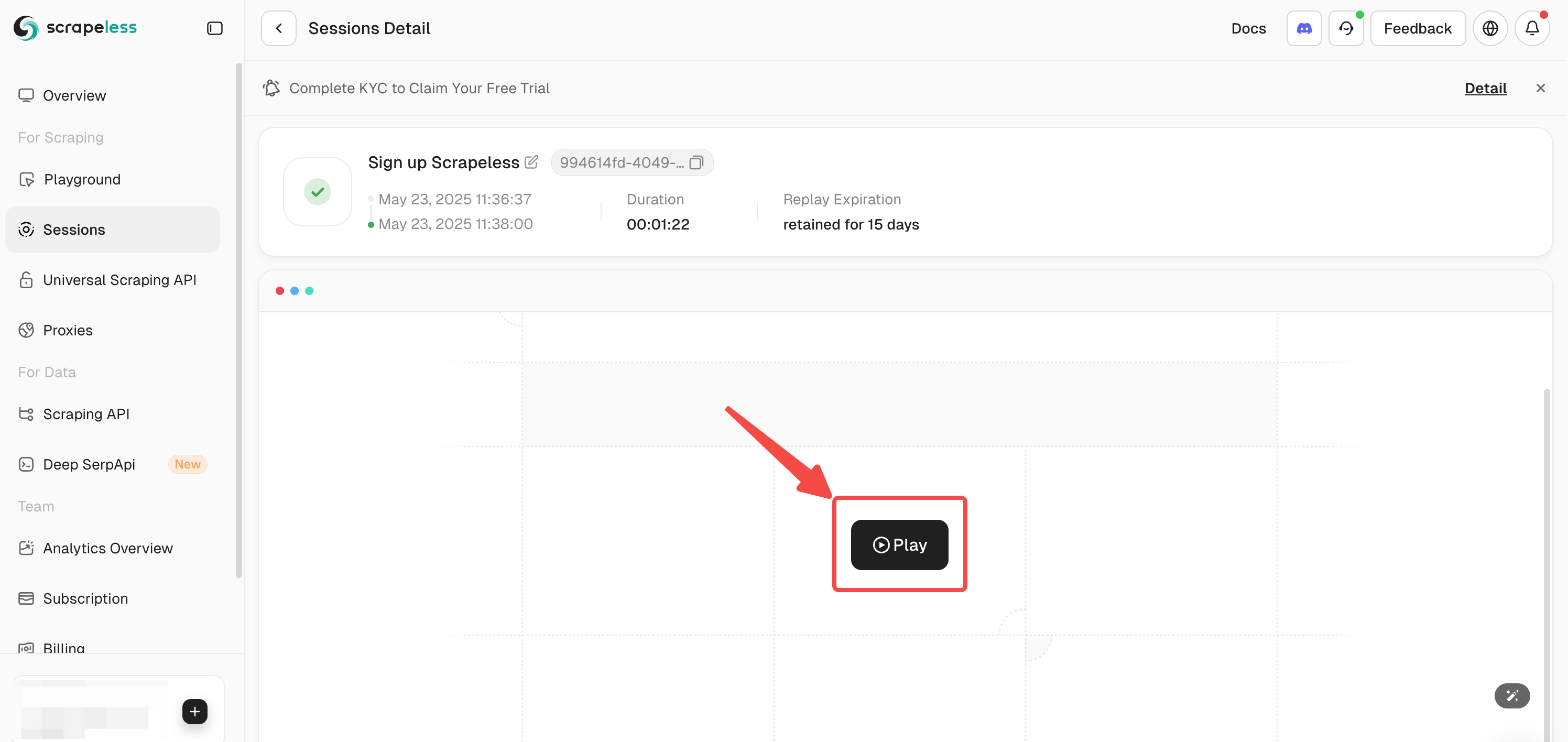

- In the session details, click the Play button to watch and review execution:

The Bottom Line

Scrapeless Scraping Browser lets you monitor in real time, interact remotely, and replay every step.

Live View: Watch browser activity like a live stream. See every click and input!

Live URL: Generate a shareable link where users can input their data directly. Fully private, completely secure.

Session Replay: Debug like a pro by replaying exactly what happened — no need to rerun the program.

Whether you’re a developer debugging, a PM demoing, or customer support guiding a user — Scrapeless Sessions have your back.

It’s time to make automation smart and human-friendly.
