A Complete Toolkit for Building Robust AI Applications in 2025

Lamri Abdellah Ramdane

So, you want to build an AI application. Not just a fragile demo, but a robust, production-ready powerhouse. In 2025, the game has changed. It’s no longer just about picking a model; it’s about mastering the entire lifecycle, from your local machine to the cloud and back again.

Building a serious AI app is like assembling a high-performance vehicle. You need the right parts for the engine, a state-of-the-art workshop for assembly, and a sophisticated dashboard to keep it running smoothly.

Here’s the complete, no-fluff toolkit you need to build AI that truly delivers in 2025.

Part 1: The Foundation — Your Model & Data Hub

Every great AI application starts with two things: a powerful model and clean data. This is your engine.

The Model Hub: Hugging Face

Hugging Face is often called the GitHub of AI. It hosts a sprawling ecosystem of pre-trained models, datasets, and tools. Instead of training from scratch, you can start from an existing model for natural language processing, computer vision, and more, then fine-tune it for your own task. For most projects, it is the natural starting line.
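To make "start from a pre-trained model" concrete, here is a minimal sketch built on the `pipeline` helper from the `transformers` library. The `sentiment-analysis` task and the helper names `classify` and `summarize_predictions` are choices made for this illustration, not something this toolkit prescribes. It assumes `transformers` and a backend such as PyTorch are installed, and the first call downloads a default model from the Hub, so it needs network access.

```python
from collections import Counter


def classify(texts):
    """Run a pre-trained sentiment model over a list of strings.

    Assumes `transformers` and a backend (e.g. PyTorch) are installed.
    The import is local so the rest of the module loads without them.
    """
    from transformers import pipeline

    # Downloads a default model for the task on first use.
    classifier = pipeline("sentiment-analysis")
    # Returns one {"label": ..., "score": ...} dict per input string.
    return classifier(texts)


def summarize_predictions(predictions):
    """Count how many inputs received each label (hypothetical helper)."""
    return dict(Counter(p["label"] for p in predictions))
```

Calling `summarize_predictions(classify(["I love this", "This is terrible"]))` would give a label-to-count mapping; the exact labels depend on which model the pipeline selects.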

The Data Workbench: Jupyter Notebooks

Before you can train anything, you need to understand your data. Jupyter Notebooks remain a go-to tool for data exploration, cleaning, and visualization. They give you an interactive, cell-by-cell environment for inspecting your data, fixing problems as you find them, and getting it ready for training.

Part 2: The Workshop — Your Local Dev & Experimentation Lab

This is where the magic happens. Before you burn through expensive cloud credits, you need a private, powerful local environment to experiment, fine-tune models, and build your application logic.

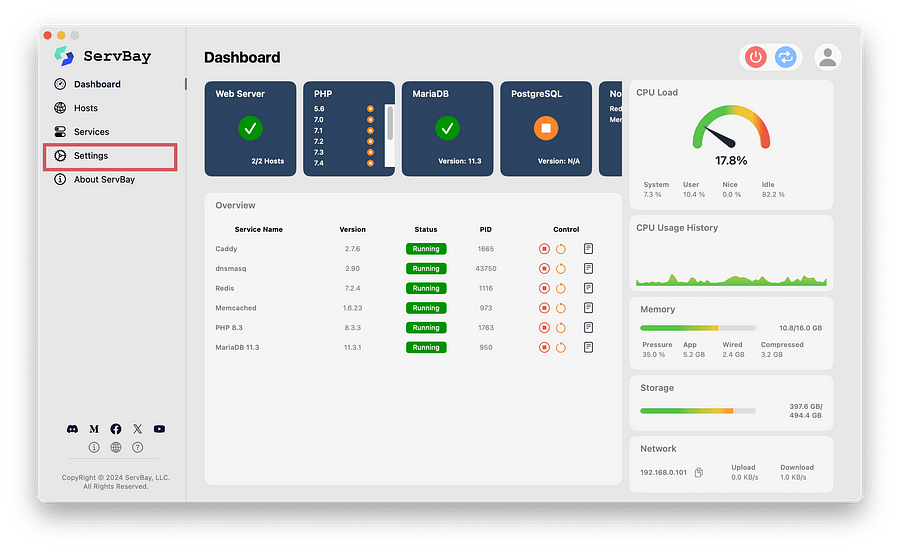

The AI-Ready Environment: ServBay

Local setup is often a major bottleneck in AI development. Juggling Python versions, CUDA drivers, and multiple AI frameworks can bring your progress to a grinding halt. This is where a dedicated local server environment like ServBay helps. With ServBay, you can spin up isolated environments with what you need: specific Python versions, a fast Caddy server for testing your APIs, and databases like PostgreSQL, which can serve as vector storage once the pgvector extension is enabled.

The Local LLM Runner: Ollama

Want to run models like Llama 3 or Phi-3 on your own machine for fast feedback and zero API cost? Ollama is the tool. It makes downloading, running, and managing LLMs locally simple. Combined with an environment managed by ServBay, it gives you a private, end-to-end AI sandbox for rapid prototyping. Once a model's weights have been downloaded, everything runs offline.
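As a rough sketch of what "instant feedback" looks like in code, the snippet below talks to Ollama's local REST API using only the Python standard library. It assumes Ollama is running on its default port (11434) and that the model has already been pulled, for example with `ollama pull llama3`. The function names `build_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build the HTTP request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", timeout=120):
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("Explain vector databases in one sentence.")` returns the model's reply as a string. Setting `"stream": False` keeps the example simple by asking for one JSON object instead of a stream of chunks.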

Part 3: The Factory — Your Training & Deployment Engine

Once you’ve prototyped locally, it’s time to scale up. This means training on larger datasets and deploying your application for the world to use.

The Training Powerhouse: PyTorch & TensorFlow

These are the two titans of deep learning. PyTorch is often favored in research for its flexibility and Python-native feel, while TensorFlow (with Keras) is often chosen for production-grade, scalable deployments. Your choice will depend on your project, but proficiency in at least one is non-negotiable for serious AI work.

The Deployment Standard: Docker & Kubernetes

To make your application run the same way everywhere, you need to containerize it. Docker packages your app and all its dependencies into a single image. Kubernetes then runs containers from that image at scale, handling load balancing, scaling, and automated rollouts and rollbacks. This duo is the de facto standard for deploying robust, resilient AI services.
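One practical detail when running a model service in containers: the orchestrator needs a way to tell whether a container is ready for traffic, and Kubernetes can do this by probing an HTTP endpoint. The sketch below shows one way such an endpoint might look using only the standard library. The `/healthz` path, port 8080, and the `MODEL_READY` flag are assumptions for this example, not requirements of Docker or Kubernetes.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical flag: set MODEL_READY["loaded"] = True once the model is in memory.
MODEL_READY = {"loaded": False}


def health_response(ready):
    """Return (status_code, body_bytes) for a readiness check."""
    status = 200 if ready else 503
    body = json.dumps({"ready": ready}).encode("utf-8")
    return status, body


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Only the health path is served; everything else is a 404.
        if self.path != "/healthz":
            self.send_error(404)
            return
        status, body = health_response(MODEL_READY["loaded"])
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Block and serve health checks; call this from the container's entrypoint."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Returning 503 until the model has loaded lets the orchestrator hold traffic back from a container that has started but cannot yet answer requests.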

Part 4: The Cockpit — Your MLOps & Monitoring Dashboards

Your app is live! But the job isn’t done. MLOps (Machine Learning Operations) is all about monitoring, managing, and improving your models in production.

The Experiment Tracker: Weights & Biases

When you’re running dozens of training experiments, you need to keep track of what works. Weights & Biases (W&B) is a tool for logging metrics, comparing model performance across runs, and collaborating with your team. It’s the lab notebook that keeps your research reproducible and your results clear.
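Here is a minimal sketch of what logging metrics can look like. The `log_training` helper is hypothetical; it only assumes it receives a run object with a `log(data, step=...)` method, which is what `wandb.init(...)` returns. The project name and loss values in the comments are placeholders.

```python
def log_training(run, losses, learning_rate):
    """Log one loss value per training step to a W&B-style run object.

    `run` is expected to expose `log(data, step=...)`, as the object
    returned by `wandb.init(...)` does. Returns the number of steps logged.
    """
    for step, loss in enumerate(losses):
        run.log({"loss": loss, "learning_rate": learning_rate}, step=step)
    return len(losses)


# Typical wiring, assuming `pip install wandb` and a configured account:
#
#   import wandb
#   run = wandb.init(project="demo-project", config={"learning_rate": 1e-3})
#   log_training(run, [0.9, 0.5, 0.3], 1e-3)
#   run.finish()
```

Passing the run in as an argument keeps the training code testable without a W&B account, since any object with a compatible `log` method will do.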

The Production Monitor: Grafana

How is your model performing in the real world? Is it fast enough? Are users getting errors? Grafana is an open-source observability platform for building dashboards that track your application’s health, performance, and resource usage in real time. It does not collect metrics itself; it reads them from a data source such as Prometheus, so your application has to expose them.
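To give a feel for the kind of numbers such a dashboard might chart, here is a small sketch that summarizes a window of requests and renders the result in the Prometheus text exposition format, which a Prometheus server can scrape and Grafana can then query. The metric names and the helper functions are invented for this example.

```python
import statistics


def summarize_requests(latencies_ms, error_count):
    """Summarize one window of requests.

    `latencies_ms` holds the latency of every request in the window;
    `error_count` is how many of those requests failed.
    """
    total = len(latencies_ms)
    return {
        "inference_window_requests": total,
        "inference_error_ratio": error_count / total if total else 0.0,
        "inference_latency_ms_median": statistics.median(latencies_ms) if total else 0.0,
    }


def to_prometheus_text(metrics):
    """Render a name-to-value mapping as Prometheus gauge lines."""
    lines = []
    for name, value in metrics.items():
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"
```

In a real service, you would more likely use an official Prometheus client library than format the text by hand; the point here is only to show what "fast enough" and "getting errors" turn into once they are numbers.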

Building a robust AI application in 2025 is a holistic process. By equipping yourself with this complete toolkit, you can move confidently from a simple idea to a powerful, scalable, and reliable product.
