How to Securely Expose Applications from a Private AKS Cluster Using Azure Application Gateway for Containers

George Ezejiofor


Introduction

Deploying applications in a private Azure Kubernetes Service (AKS) cluster is a widely adopted strategy to enhance security by isolating workloads and the Kubernetes API server from the public internet. However, one of the biggest challenges DevOps and platform engineers face is how to expose those private services securely to external consumers (internal teams, partner systems, or customers) without compromising network security, scalability, or operational agility.

Traditionally, this required complex combinations of internal load balancers, the Application Gateway Ingress Controller (AGIC), or service meshes. Azure now offers a more powerful solution: Application Gateway for Containers.

This new capability brings layer 7 load balancing natively to Kubernetes environments using the Kubernetes Gateway API, while offering real-time updates, mutual TLS (mTLS), traffic splitting, and seamless support for private networking. It significantly simplifies ingress management, especially for private AKS clusters where outbound and inbound traffic control is critical.

In this guide, we’ll walk you through how to securely expose your application from a private AKS cluster using Application Gateway for Containers, integrated with cert-manager for automated TLS certificate management. You’ll learn how to deploy the full setup, issue certificates from Let’s Encrypt, and test HTTPS access to your workloads — all within a locked-down, private Kubernetes environment.

🔍 What is Application Gateway for Containers?

🚀 Overview

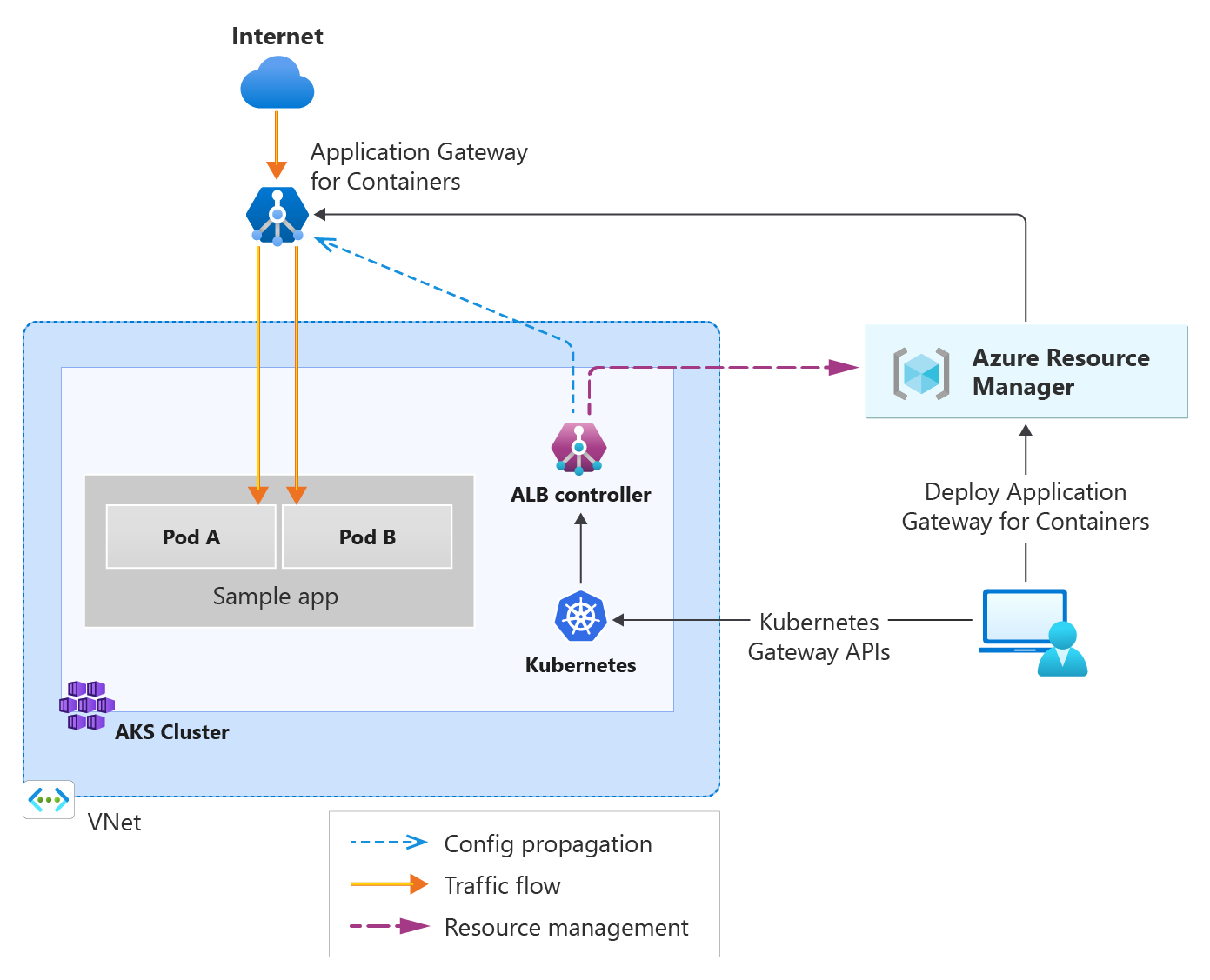

Azure Application Gateway for Containers is a Kubernetes-native, Layer 7 load balancer designed for secure and scalable ingress to AKS workloads. It extends Azure’s load balancing capabilities by enabling:

- Advanced routing
- TLS and mTLS termination
- Real-time updates
- Kubernetes Gateway API support

Unlike in-cluster ingress controllers, its proxies run outside the AKS data plane as Azure-managed infrastructure, configured by the ALB (Application Load Balancer) Controller running inside the cluster. This architecture is well suited to exposing private AKS apps to external users without sacrificing security or performance.

🔄 From AGIC to AGC

Application Gateway for Containers (AGC) is the evolution of the Application Gateway Ingress Controller (AGIC). While AGIC relied on ARM calls and had limitations in scale and update speed, AGC offers:

- Native support for the Gateway API
- Near real-time configuration updates
- Support for more than 1,400 pods
- Simplified operations with fewer moving parts

It removes the bottlenecks of AGIC by offloading most control logic to Azure-managed components while retaining Kubernetes-native interfaces.

🛠️ Core Components

AGC is composed of three main resources:

- Gateway: The parent Azure resource (the Application Gateway for Containers resource itself) that manages the control plane, translating Kubernetes Ingress/Gateway API resources into actual proxy configuration.
- Frontends: Define public/private entry points (each with its own FQDN) for client traffic. Frontends are referenced in Kubernetes Gateway/Ingress specs.
- Associations: Bind the Gateway to a delegated subnet in the AKS VNet, which the proxies use to reach backend pods. An association requires a /24 or larger subnet (at least 256 addresses).
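To see how these three components appear in Azure, they can be inspected with the Azure CLI. This is a sketch that assumes the `alb` CLI extension is installed and reuses the resource group and AGC name from the walkthrough later in this guide (`rg-terranetes-aks-prod`, `terranetes-aks-alb`); adjust both for your environment.

```bash
#!/usr/bin/env bash
# Sketch: list the Gateway (AGC resource), its Frontends, and its Associations.
# Assumes the 'alb' Azure CLI extension: az extension add --name alb
set -euo pipefail

RG="rg-terranetes-aks-prod"      # resource group holding the AGC resource
ALB_NAME="terranetes-aks-alb"    # name of the AGC (trafficControllers) resource

# 1. Gateway: the parent Application Gateway for Containers resource
az network alb show --resource-group "$RG" --name "$ALB_NAME" \
  --query "{name:name, state:provisioningState}" -o table

# 2. Frontends: client-facing entry points, each with its own FQDN
az network alb frontend list --resource-group "$RG" --alb-name "$ALB_NAME" \
  --query "[].{name:name, fqdn:fqdn}" -o table

# 3. Associations: links from the AGC resource to the delegated subnet
az network alb association list --resource-group "$RG" --alb-name "$ALB_NAME" \
  --query "[].{name:name, subnet:subnet.id}" -o table
```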

🔗 Key Dependencies

To deploy AGC, you need:

🔒 User-Assigned Managed Identity: Grants the ALB Controller secure access to manage Azure resources. It requires roles such as AppGw for Containers Configuration Manager and Network Contributor.

🌐 Subnet Delegation: A subnet delegated to `Microsoft.ServiceNetworking/trafficControllers`, which hosts the AGC proxies. Only one gateway can use each delegated subnet.

📡 Private IP Address: A host address within the association’s subnet. It isn’t a standalone ARM resource, but AGC relies on it to route traffic into the VNet.
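The subnet delegation and identity permissions above can be sketched with the Azure CLI. Every name and the address prefix below are hypothetical placeholders, and the role scopes (resource group for the configuration role, subnet for Network Contributor) reflect my understanding of the BYO requirements; verify them against your own design before use.

```bash
#!/usr/bin/env bash
# Sketch: delegated subnet + ALB Controller identity permissions for AGC.
# All names and the prefix below are hypothetical placeholders.
set -euo pipefail

VNET_RG="rg-terranetes-aks-prod"          # hypothetical: RG of the AKS VNet
VNET_NAME="vnet-terranetes-aks"           # hypothetical VNet name
SUBNET_NAME="snet-agc"                    # hypothetical subnet for AGC
SUBNET_PREFIX="10.225.0.0/24"             # hypothetical /24 range
ALB_IDENTITY_NAME="azure-alb-identity"    # hypothetical ALB Controller identity
AGC_RG="rg-terranetes-aks-prod"           # RG that holds the AGC resource

# Create a /24 subnet delegated to Application Gateway for Containers
az network vnet subnet create \
  --resource-group "$VNET_RG" \
  --vnet-name "$VNET_NAME" \
  --name "$SUBNET_NAME" \
  --address-prefixes "$SUBNET_PREFIX" \
  --delegations "Microsoft.ServiceNetworking/trafficControllers"

SUBNET_ID=$(az network vnet subnet show \
  --resource-group "$VNET_RG" --vnet-name "$VNET_NAME" \
  --name "$SUBNET_NAME" --query id -o tsv)

PRINCIPAL_ID=$(az identity show \
  --resource-group "$AGC_RG" --name "$ALB_IDENTITY_NAME" \
  --query principalId -o tsv)

AGC_RG_ID=$(az group show --name "$AGC_RG" --query id -o tsv)

# Let the ALB Controller manage AGC configuration in the resource group
az role assignment create \
  --assignee-object-id "$PRINCIPAL_ID" \
  --assignee-principal-type ServicePrincipal \
  --scope "$AGC_RG_ID" \
  --role "AppGw for Containers Configuration Manager"

# Let the ALB Controller join the AGC association to the delegated subnet
az role assignment create \
  --assignee-object-id "$PRINCIPAL_ID" \
  --assignee-principal-type ServicePrincipal \
  --scope "$SUBNET_ID" \
  --role "Network Contributor"
```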

This modern ingress solution empowers teams to expose apps from private AKS clusters confidently — with TLS/mTLS, autoscaling, traffic splitting, and seamless GitOps or IaC integration via Azure CLI, Terraform, or native K8s YAML.

Prerequisites

Before setting up Azure Application Gateway for Containers to securely expose applications from a private AKS cluster, ensure you have the following:

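✅ Azure Subscription: An active Azure subscription with Contributor permissions to create and manage AKS clusters, Application Gateway resources, and networking components, plus permission to create role assignments (for example, Owner or User Access Administrator) for the managed identities. Sign up for Azure.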

🏗️ Private AKS Cluster: A private AKS cluster deployed using Terraform in Terraform Cloud. Ensure the cluster is running and accessible via kubectl. The cluster should have a user-assigned managed identity and a delegated subnet for Application Gateway integration. Learn about private AKS clusters.

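🛠️ Azure CLI: Installed (version 2.30 or later) and configured with `az login`, plus the `alb` extension for Application Gateway for Containers commands (`az extension add --name alb`). Use it to manage Azure resources and AKS. Install Azure CLI.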

🖥️ kubectl: Installed and configured to interact with your AKS cluster. Verify connectivity with kubectl get nodes. Install kubectl.

📦 Helm: Installed (version 3.x) for deploying cert-manager and other Helm charts. Install Helm.

🔐 Workload Identity Federation: The OIDC issuer and workload identity enabled on the cluster, so cert-manager can authenticate to Azure resources (here, Azure DNS) without secrets.

🔒 Cert-Manager: Ready to install via Helm or already installed in your AKS cluster. Cert-manager will automate TLS certificate issuance (e.g., from Let’s Encrypt). Basic familiarity with cert-manager’s ClusterIssuer or Issuer resources is recommended. Cert-Manager Documentation.

🌐 Domain for TLS: A registered domain (this guide uses `dripwithstyle.com`) hosted in an Azure DNS zone, for configuring HTTPS with TLS certificates. You must be able to manage its DNS records, because the application hostname is published as a CNAME pointing to the Application Gateway for Containers frontend FQDN. A subdomain (e.g., `echotls.dripwithstyle.com`) is typically used for the application.

🔑 User-Assigned Managed Identity: A managed identity used by the ALB Controller for Application Gateway integration. This enables secure communication between AKS and Azure resources. Learn about managed identities.

🌐 Subnet Delegation: A dedicated subnet in your AKS virtual network, delegated for Application Gateway for Containers. This ensures proper networking configuration. Configure subnet delegation.

📚 Knowledge Requirements:

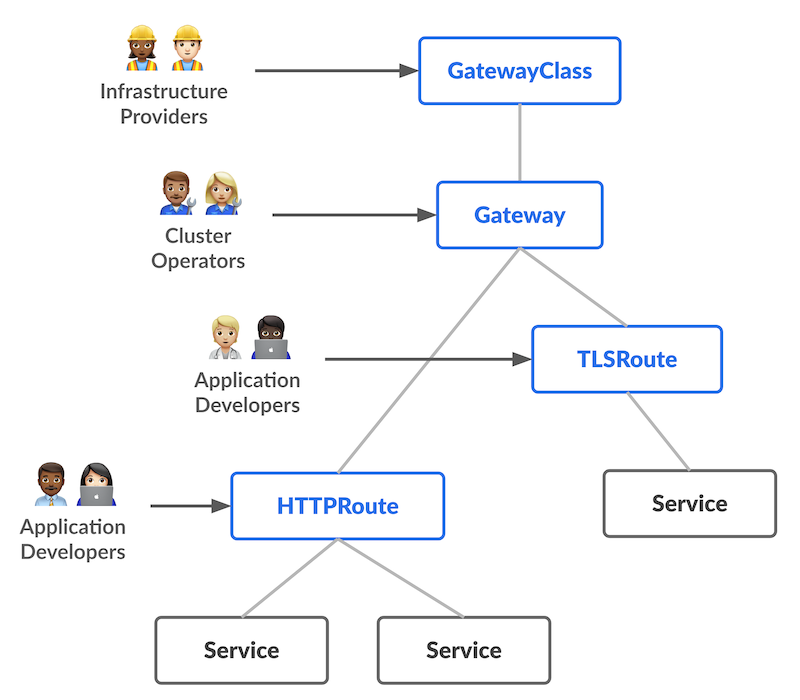

- Familiarity with Kubernetes Ingress and Gateway API concepts to configure routing rules.
- Basic understanding of Azure networking (e.g., VNets, private IPs, DNS).
- Awareness of TLS certificate management (e.g., Let’s Encrypt or custom CAs).

Ensure all tools are installed and configured on your local machine or CI/CD environment. Verify Terraform state in Terraform Cloud to confirm the AKS cluster’s configuration, including networking and identity settings.
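For the workload identity prerequisite, cert-manager’s pods must carry the `azure.workload.identity/use: "true"` label so the workload identity webhook injects federated tokens. The sketch below installs cert-manager with that label, based on my reading of cert-manager’s Azure DNS documentation. `crds.enabled` applies to recent chart versions; older charts use `installCRDs` instead.

```bash
#!/usr/bin/env bash
# Sketch: install cert-manager with Azure Workload Identity labels so the
# controller can use a federated user-assigned identity for Azure DNS.
# Assumes the OIDC issuer and workload identity are enabled on the cluster.
set -euo pipefail

helm repo add jetstack https://charts.jetstack.io
helm repo update

helm upgrade --install cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --values - <<'EOF'
crds:
  enabled: true
# Opt the cert-manager pods into workload identity token injection
podLabels:
  azure.workload.identity/use: "true"
# Label the service account as shown in cert-manager's Azure DNS guide
serviceAccount:
  labels:
    azure.workload.identity/use: "true"
EOF
```

If cert-manager is already installed, adding `--reuse-values` keeps the existing settings while applying the labels.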

🚀 Deployment Strategies

When deploying Application Gateway for Containers, Azure offers two distinct strategies for managing the lifecycle of the gateway and its associated resources. The choice depends on how much control you want over the infrastructure and how tightly you want it integrated with Kubernetes.

🧩 1. Bring Your Own (BYO) Deployment (I will use this strategy)

In the BYO model, you manually provision and manage all AGC resources — including the Gateway, Frontend, and Association — using tools like the Azure Portal, CLI, PowerShell, or Terraform.

- You create the Frontend resource in Azure before referencing it in your Kubernetes `Gateway` definition.
- The lifecycle of these resources is decoupled from Kubernetes: deleting a `Gateway` in Kubernetes does not delete the Azure Frontend.
- This model gives you full control over infrastructure but requires more manual management.

✅ Best for: Teams that prefer explicit control over Azure resources or already manage infrastructure via Terraform or Bicep.
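The BYO steps can be sketched with the Azure CLI `alb` extension as follows. The resource group, AGC name, and frontend name match those used later in this guide; the association name is hypothetical, and `SUBNET_ID` must hold the resource ID of your delegated subnet.

```bash
#!/usr/bin/env bash
# Sketch: BYO provisioning of the AGC resource, Frontend, and Association.
set -euo pipefail

RG="rg-terranetes-aks-prod"
AGC_NAME="terranetes-aks-alb"
FRONTEND_NAME="terranetes-aks-alb-frontend"
ASSOCIATION_NAME="terranetes-aks-alb-association"   # hypothetical name
SUBNET_ID="${SUBNET_ID:?set SUBNET_ID to the delegated subnet resource ID}"

# Gateway: the parent Application Gateway for Containers resource
az network alb create --resource-group "$RG" --name "$AGC_NAME"

# Frontend: created in Azure first, referenced later by the Kubernetes Gateway
az network alb frontend create \
  --resource-group "$RG" --alb-name "$AGC_NAME" --name "$FRONTEND_NAME"

# Association: attaches the AGC resource to the delegated /24 subnet
az network alb association create \
  --resource-group "$RG" --alb-name "$AGC_NAME" --name "$ASSOCIATION_NAME" \
  --subnet "$SUBNET_ID"
```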

⚙️ 2. Managed by ALB Controller

In this fully managed model, the ALB Controller running inside your AKS cluster handles the lifecycle of AGC resources automatically:

- You define an `ApplicationLoadBalancer` custom resource in Kubernetes.
- The ALB Controller provisions the AGC resource and its Association in Azure.
- When you create a `Gateway` resource that references the `ApplicationLoadBalancer`, the controller automatically creates and manages the corresponding Frontend.
- Deleting the Kubernetes `Gateway` also cleans up its Frontend in Azure.

✅ Best for: Teams that want a Kubernetes-native experience with minimal Azure-side configuration.
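For comparison, a minimal `ApplicationLoadBalancer` resource for the managed model might look like this. The `alb-infra` namespace and `terranetes-alb` name are hypothetical, and `SUBNET_ID` must hold the delegated subnet’s resource ID.

```bash
#!/usr/bin/env bash
# Sketch: managed deployment, where the ALB Controller creates the Azure resources.
set -euo pipefail

SUBNET_ID="${SUBNET_ID:?set SUBNET_ID to the delegated subnet resource ID}"

# Hypothetical namespace for the ApplicationLoadBalancer resource (idempotent)
kubectl create namespace alb-infra --dry-run=client -o yaml | kubectl apply -f -

cat <<EOF | kubectl apply -f -
apiVersion: alb.networking.azure.io/v1
kind: ApplicationLoadBalancer
metadata:
  name: terranetes-alb      # hypothetical name
  namespace: alb-infra      # hypothetical namespace
spec:
  associations:
  - ${SUBNET_ID}
EOF
```

A Gateway then selects this resource with the `alb.networking.azure.io/alb-namespace` and `alb.networking.azure.io/alb-name` annotations rather than the `alb.networking.azure.io/alb-id` annotation used in BYO mode.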

🧠 Summary

| Strategy | Resource Management | Automation Level | Ideal For |
| --- | --- | --- | --- |
| Bring Your Own | Manual (Azure CLI, Terraform) | Low | Infra-heavy teams, Terraform users |
| Managed by ALB Controller | Kubernetes-native (via CRDs) | High | DevOps teams, GitOps workflows |

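I connect to my jumpbox using Microsoft Entra ID (formerly Azure Active Directory), because the private cluster’s API server isn’t exposed to the internet. From the jumpbox, I first verify the prerequisite tools and confirm that the ALB Controller, cert-manager, and workload identity webhook pods are running:

```bash
kubectl get pod -A | grep -E 'alb|cert|wi-webhook'
```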
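If no ALB Controller pods show up, the controller can be installed with Helm from the jumpbox. The sketch below follows the pattern of Microsoft’s Application Gateway for Containers quickstart as I understand it. The identity name `azure-alb-identity` and its resource group are hypothetical, and `ALB_CONTROLLER_VERSION` must be set to a chart version you have verified yourself. The cluster and resource group names come from the node resource group used in this guide.

```bash
#!/usr/bin/env bash
# Sketch: install the ALB Controller with a workload identity.
# Hypothetical: IDENTITY_RG / ALB_IDENTITY_NAME; set ALB_CONTROLLER_VERSION yourself.
set -euo pipefail

AKS_RG="rg-terranetes-aks-prod"
AKS_NAME="aks-terranetes-cluster-prod"
IDENTITY_RG="rg-terranetes-aks-prod"      # hypothetical
ALB_IDENTITY_NAME="azure-alb-identity"    # hypothetical
ALB_CONTROLLER_VERSION="${ALB_CONTROLLER_VERSION:?set the alb-controller chart version}"

# OIDC issuer of the cluster, used to federate the controller's service account
AKS_OIDC_ISSUER=$(az aks show --resource-group "$AKS_RG" --name "$AKS_NAME" \
  --query "oidcIssuerProfile.issuerUrl" -o tsv)

# Trust tokens issued to the alb-controller-sa service account
az identity federated-credential create \
  --name azure-alb-identity \
  --identity-name "$ALB_IDENTITY_NAME" \
  --resource-group "$IDENTITY_RG" \
  --issuer "$AKS_OIDC_ISSUER" \
  --subject "system:serviceaccount:azure-alb-system:alb-controller-sa"

ALB_CLIENT_ID=$(az identity show --resource-group "$IDENTITY_RG" \
  --name "$ALB_IDENTITY_NAME" --query clientId -o tsv)

# Helm release in 'default'; the controller itself runs in azure-alb-system
helm install alb-controller oci://mcr.microsoft.com/application-lb/charts/alb-controller \
  --namespace default \
  --version "$ALB_CONTROLLER_VERSION" \
  --set albController.namespace=azure-alb-system \
  --set albController.podIdentity.clientID="$ALB_CLIENT_ID"

# The controller should register the GatewayClass used later in this guide
kubectl get pods -n azure-alb-system
kubectl get gatewayclass azure-alb-external
```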

Verify the client ID of the cert-manager user-assigned identity (it must match `managedIdentity.clientID` in the ClusterIssuer):

```bash
az identity show \
  --name certmanager-uami-terranetes \
  --resource-group MC_rg-terranetes-aks-prod_aks-terranetes-cluster-prod_westeurope \
  --query clientId -o tsv
```

Verify the federated identity credential on the user-assigned identity:

```bash
az identity federated-credential list \
  --identity-name certmanager-uami-terranetes \
  --resource-group MC_rg-terranetes-aks-prod_aks-terranetes-cluster-prod_westeurope \
  --query "[?name=='cert-manager'].{Name:name,Subject:subject,Audiences:audiences[0],Issuer:issuer}" -o table
```
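If that list comes back empty, the identity can be wired up for cert-manager roughly as below. This is a sketch that assumes cert-manager runs under its default `cert-manager` service account in the `cert-manager` namespace, and that the identity needs the DNS Zone Contributor role on the `dripwithstyle.com` zone in `rg-terranetes-poc` to answer DNS-01 challenges.

```bash
#!/usr/bin/env bash
# Sketch: federate cert-manager with the user-assigned identity and grant DNS access.
set -euo pipefail

IDENTITY_NAME="certmanager-uami-terranetes"
IDENTITY_RG="MC_rg-terranetes-aks-prod_aks-terranetes-cluster-prod_westeurope"
DNS_ZONE="dripwithstyle.com"
DNS_ZONE_RG="rg-terranetes-poc"

# OIDC issuer URL of the AKS cluster
AKS_OIDC_ISSUER=$(az aks show \
  --resource-group rg-terranetes-aks-prod \
  --name aks-terranetes-cluster-prod \
  --query "oidcIssuerProfile.issuerUrl" -o tsv)

# Federate the cert-manager service account with the user-assigned identity
az identity federated-credential create \
  --name cert-manager \
  --identity-name "$IDENTITY_NAME" \
  --resource-group "$IDENTITY_RG" \
  --issuer "$AKS_OIDC_ISSUER" \
  --subject "system:serviceaccount:cert-manager:cert-manager" \
  --audiences "api://AzureADTokenExchange"

# Allow the identity to create _acme-challenge TXT records in the zone
PRINCIPAL_ID=$(az identity show --name "$IDENTITY_NAME" \
  --resource-group "$IDENTITY_RG" --query principalId -o tsv)
DNS_ZONE_ID=$(az network dns zone show --name "$DNS_ZONE" \
  --resource-group "$DNS_ZONE_RG" --query id -o tsv)

az role assignment create \
  --assignee-object-id "$PRINCIPAL_ID" \
  --assignee-principal-type ServicePrincipal \
  --role "DNS Zone Contributor" \
  --scope "$DNS_ZONE_ID"
```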

Export the AGC values

```bash
## Export values for Application Gateway for Containers
export AGC_RESOURCE_ID=$(az network alb show \
  --resource-group rg-terranetes-aks-prod \
  --name terranetes-aks-alb \
  --query id -o tsv)

export AGC_FRONTEND_NAME="terranetes-aks-alb-frontend"

## Verify the exported values
echo "AGC_RESOURCE_ID=${AGC_RESOURCE_ID}"
echo "AGC_FRONTEND_NAME=${AGC_FRONTEND_NAME}"
```
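With the frontend name exported, the application hostname can be pointed at the frontend. AGC frontends are addressed by an FQDN, so a CNAME record is the natural fit. A sketch, assuming the `dripwithstyle.com` zone lives in `rg-terranetes-poc` (the resource group referenced by the ClusterIssuer) and that `AGC_FRONTEND_NAME` is still exported:

```bash
#!/usr/bin/env bash
# Sketch: publish echotls.dripwithstyle.com as a CNAME to the AGC frontend.
set -euo pipefail

# Look up the FQDN Azure generated for the frontend
FRONTEND_FQDN=$(az network alb frontend show \
  --resource-group rg-terranetes-aks-prod \
  --alb-name terranetes-aks-alb \
  --name "${AGC_FRONTEND_NAME:?export AGC_FRONTEND_NAME first}" \
  --query fqdn -o tsv)
echo "FRONTEND_FQDN=${FRONTEND_FQDN}"

# Create or update the 'echotls' CNAME record in the Azure DNS zone
az network dns record-set cname set-record \
  --resource-group rg-terranetes-poc \
  --zone-name dripwithstyle.com \
  --record-set-name echotls \
  --cname "${FRONTEND_FQDN}"
```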

Deploy echo pod application and services

```bash
cat << EOF | kubectl apply -f -
# 1. BLUE Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue-echo-pod
  labels:
    version: blue
spec:
  replicas: 2
  selector:
    matchLabels:
      version: blue
  template:
    metadata:
      labels:
        version: blue
    spec:
      containers:
      - name: echo-pod
        image: georgeezejiofor/echo-pod:blue-v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_COLOR
          value: "BLUE"
---
# 2. BLUE Service
apiVersion: v1
kind: Service
metadata:
  name: blue-echo-service
spec:
  selector:
    version: blue
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
# 3. GREEN Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: green-echo-pod
  labels:
    version: green
spec:
  replicas: 2
  selector:
    matchLabels:
      version: green
  template:
    metadata:
      labels:
        version: green
    spec:
      containers:
      - name: echo-pod
        image: georgeezejiofor/echo-pod:green-v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_COLOR
          value: "GREEN"
---
# 4. GREEN Service
apiVersion: v1
kind: Service
metadata:
  name: green-echo-service
spec:
  selector:
    version: green
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF
```
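Before wiring up routing, it can help to confirm that both Deployments rolled out and that each Service has endpoints. A minimal check using the names from the manifest above; the 120-second timeout is arbitrary.

```bash
#!/usr/bin/env bash
# Sketch: confirm the blue and green workloads are ready behind their Services.
set -euo pipefail

# Wait until both colors have their replicas available (timeout is arbitrary)
kubectl rollout status deployment/blue-echo-pod -n default --timeout=120s
kubectl rollout status deployment/green-echo-pod -n default --timeout=120s

# Each Service should list two pod IPs on port 80 (replicas: 2)
kubectl get endpoints blue-echo-service green-echo-service -n default
```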


Deploy a ClusterIssuer for Azure DNS using the DNS-01 challenge

```bash
cat <<EOF | kubectl apply -f -
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns01-istio
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: george@gmail.com
    privateKeySecretRef:
      name: letsencrypt-dns01-istio-key
    solvers:
    - dns01:
        azureDNS:
          subscriptionID: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx" # Subscription ID
          resourceGroupName: "rg-terranetes-poc" # DNS zone resource group
          hostedZoneName: "dripwithstyle.com" # Azure DNS zone name
          environment: AzurePublicCloud
          managedIdentity:
            clientID: "yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy" # Client ID of the user-assigned managed identity
EOF
```
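Before requesting certificates, it is worth confirming that cert-manager accepted the issuer and registered the ACME account. A short check; the timeout is arbitrary.

```bash
#!/usr/bin/env bash
# Sketch: wait for the ClusterIssuer and show details if it is not ready.

# Ready=True means the ACME account was registered with Let's Encrypt;
# on failure, the Status/Events sections of describe usually explain why
kubectl wait --for=condition=Ready clusterissuer/letsencrypt-dns01-istio --timeout=120s \
  || kubectl describe clusterissuer letsencrypt-dns01-istio
```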

Create the echo-pod certificate

The Certificate must live in the same namespace as the Gateway (`default`), and its `secretName` must match the `certificateRefs` entry on the Gateway’s HTTPS listener (`terranetes-echopod-tls`):

```bash
cat <<EOF | kubectl apply -f -
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: terranetes-echopod-cert
  namespace: default # Must match the namespace of the Gateway
spec:
  secretName: terranetes-echopod-tls # Must match certificateRefs in the Gateway's HTTPS listener
  duration: 2160h # 90 days
  renewBefore: 360h # 15 days
  isCA: false
  privateKey:
    algorithm: RSA
    encoding: PKCS1
    size: 4096
  issuerRef:
    name: letsencrypt-dns01-istio
    kind: ClusterIssuer
    group: cert-manager.io
  dnsNames:
  - "echotls.dripwithstyle.com"
EOF
```
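DNS-01 issuance passes through several intermediate cert-manager objects, so when a Certificate does not become ready it helps to look at each stage. A troubleshooting sketch that lists them across all namespaces, so it works regardless of where the Certificate lives:

```bash
#!/usr/bin/env bash
# Sketch: inspect each stage of ACME DNS-01 issuance.

# Certificates: READY should eventually become True
kubectl get certificates.cert-manager.io -A

# CertificateRequest -> Order -> Challenge; a Challenge stuck in "pending" often
# points to DNS permissions or propagation issues
kubectl get certificaterequests.cert-manager.io,orders.acme.cert-manager.io,challenges.acme.cert-manager.io -A

# Recent controller logs, e.g. errors talking to Azure DNS or Let's Encrypt
kubectl logs -n cert-manager deploy/cert-manager --tail=50
```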

Deploy the echo-pod Gateway (Bring Your Own deployment strategy)

With BYO, the Gateway references the existing AGC resource through the `alb.networking.azure.io/alb-id` annotation and the pre-created Frontend through `spec.addresses`. The heredoc delimiter is unquoted, so the shell substitutes the `AGC_RESOURCE_ID` and `AGC_FRONTEND_NAME` values exported earlier. TLS comes from the `terranetes-echopod-tls` secret issued by the cert-manager Certificate, so the Gateway itself needs no cert-manager annotation.

```bash
cat << EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: echopod-gateway-tls
  namespace: default
  annotations:
    # BYO: reference the existing Application Gateway for Containers resource
    alb.networking.azure.io/alb-id: "${AGC_RESOURCE_ID}"
spec:
  gatewayClassName: azure-alb-external
  # BYO: bind this Gateway to the Frontend created in Azure beforehand
  addresses:
  - type: alb.networking.azure.io/alb-frontend
    value: "${AGC_FRONTEND_NAME}"
  listeners:
  - name: http
    port: 80
    protocol: HTTP
    hostname: "echotls.dripwithstyle.com"
    allowedRoutes:
      namespaces:
        from: All
  - name: https
    port: 443
    protocol: HTTPS
    hostname: "echotls.dripwithstyle.com"
    tls:
      mode: Terminate
      certificateRefs:
      - name: terranetes-echopod-tls # Secret issued by the cert-manager Certificate
        kind: Secret
        group: ""
    allowedRoutes:
      namespaces:
        from: All
EOF
```
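Once applied, the ALB Controller reconciles the Gateway against the AGC resource. A sketch for checking its status, assuming the controller reports the standard Gateway API `Programmed` condition and publishes the frontend FQDN in `status.addresses`:

```bash
#!/usr/bin/env bash
# Sketch: check that the Gateway is programmed and its listeners resolved.
set -euo pipefail

GW="echopod-gateway-tls"

# Wait for the controller to program the Gateway on the AGC frontend
kubectl wait --for=condition=Programmed "gateway/${GW}" -n default --timeout=300s

# Address published for the Gateway (expected to be the frontend FQDN)
kubectl get gateway "${GW}" -n default -o jsonpath='{.status.addresses[*].value}{"\n"}'

# Per-listener ResolvedRefs: "False" on https usually means the TLS secret is missing
kubectl get gateway "${GW}" -n default \
  -o jsonpath='{range .status.listeners[*]}{.name}{": ResolvedRefs="}{.conditions[?(@.type=="ResolvedRefs")].status}{"\n"}{end}'
```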

Deploy the HTTPRoute (50/50 blue/green traffic split)

```bash
cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: terranetes-echopod-route-tls
  namespace: default
spec:
  parentRefs:
  - name: echopod-gateway-tls
  hostnames:
  - echotls.dripwithstyle.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: blue-echo-service
      port: 80
      weight: 50
    - name: green-echo-service
      port: 80
      weight: 50
EOF
```
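To confirm that the route attached to the Gateway and that both backend Services resolved, its parent status can be printed. A quick sketch; for a healthy route I would expect `Accepted=True` and `ResolvedRefs=True`.

```bash
#!/usr/bin/env bash
# Sketch: print the HTTPRoute's status conditions for each parent Gateway.
set -euo pipefail

# One line per parent: "<gateway>: <Type>=<Status> ..."
kubectl get httproute terranetes-echopod-route-tls -n default \
  -o jsonpath='{range .status.parents[*]}{.parentRef.name}{": "}{range .conditions[*]}{.type}{"="}{.status}{" "}{end}{"\n"}{end}'
```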

Verify the deployed objects

```bash
kubectl get pod,svc,gateway,httproute,certificate -n default | grep echo
```
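Beyond listing the objects, an end-to-end check from outside the cluster confirms that DNS, the frontend, and the Let’s Encrypt certificate line up. A sketch using standard `nslookup`, `curl`, and `openssl` from any machine with internet access:

```bash
#!/usr/bin/env bash
# Sketch: end-to-end DNS + HTTPS + certificate check for the public hostname.
set -euo pipefail

HOST="echotls.dripwithstyle.com"

# If published as a CNAME, this shows the AGC frontend FQDN as canonical name
nslookup "${HOST}"

# HTTPS request; curl fails here if the certificate is not trusted
curl -sSI "https://${HOST}/"

# Issuer, subject and validity window of the certificate served on 443
echo | openssl s_client -connect "${HOST}:443" -servername "${HOST}" 2>/dev/null \
  | openssl x509 -noout -issuer -subject -dates
```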

Traffic routing for blue and green echo pod services
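The 50/50 split can be observed by sampling the hostname repeatedly and counting which color answered. This sketch assumes each echo-pod response body contains its `POD_COLOR` value (`BLUE` or `GREEN`); responses without either token are counted as `UNKNOWN`.

```bash
#!/usr/bin/env bash
# Sketch: sample the HTTPRoute and count which color served each request.
# Assumption: every echo-pod response body contains BLUE or GREEN.

HOST="echotls.dripwithstyle.com"
REQUESTS=20

# Prints the first BLUE/GREEN token in one response body, or UNKNOWN if none.
first_color() {
  local color
  color=$(grep -oE 'BLUE|GREEN' | head -n 1)
  echo "${color:-UNKNOWN}"
}

# Turns one color per line into "COLOR: count" lines, sorted by color.
tally() {
  sort | uniq -c | awk '{print $2 ": " $1}'
}

for _ in $(seq 1 "$REQUESTS"); do
  curl -s --max-time 3 "https://${HOST}/" | first_color
done | tally
```

With equal weights, the counts should be roughly even rather than exactly 10/10, since each request is balanced independently.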

🎯 Conclusion

You’ve successfully deployed a secure, production-grade ingress for your private Azure Kubernetes Service (AKS) cluster using Azure Application Gateway for Containers, integrated with cert-manager for TLS and built on the Kubernetes Gateway API with Infrastructure-as-Code principles.

✅ What’s Been Achieved

🔒 Exposed private AKS services securely to external users without compromising network security.

⚙️ Automated TLS certificate management with cert-manager for seamless HTTPS.

🚀 Built a scalable, low-latency ingress using modern Gateway API standards.

🔁 Compared the Bring Your Own and ALB Controller-managed deployment strategies, and implemented the BYO approach.

🧭 What’s Next?

My next project explores advanced cloud-native networking:

🔧 Managing and exposing frontend services for 10 microservices with the Azure-managed AKS Istio add-on, using service mesh patterns and zero-trust security. Stay tuned for updates!

🤝 Stay Connected

Found this guide helpful? Follow my journey into Istio and microservices on LinkedIn: George Ezejiofor on LinkedIn. Let’s keep building scalable, secure cloud-native systems, one project at a time! 🌐🔧


Written by

George Ezejiofor


As a Senior DevSecOps Engineer, I’m dedicated to building secure, resilient, and scalable cloud-native infrastructures tailored for modern applications. With a strong focus on microservices architecture, I design solutions that empower development teams to deliver and scale applications swiftly and securely. I’m skilled in breaking down monolithic systems into agile, containerised microservices that are easy to deploy, manage, and monitor. Leveraging a suite of DevOps and DevSecOps tools—including Kubernetes, Docker, Helm, Terraform, and Jenkins—I implement CI/CD pipelines that support seamless deployments and automated testing. My expertise extends to security tools and practices that integrate vulnerability scanning, automated policy enforcement, and compliance checks directly into the SDLC, ensuring that security is built into every stage of the development process. Proficient in multi-cloud environments like AWS, Azure, and GCP, I work with tools such as Prometheus, Grafana, and ELK Stack to provide robust monitoring and logging for observability. I prioritise automation, using Ansible, GitOps workflows with ArgoCD, and IaC to streamline operations, enhance collaboration, and reduce human error. Beyond my technical work, I’m passionate about sharing knowledge through blogging, community engagement, and mentoring. I aim to help organisations realize the full potential of DevSecOps—delivering faster, more secure applications while cultivating a culture of continuous improvement and security awareness.