🧠 How Generative AI Works (And Why It Feels So Smart)

Abhradeep Barman

Artificial Intelligence (AI) is no longer just a sci-fi dream. It’s everywhere. It’s helping you finish your sentences, write essays, generate art, compose music, and even write code. From Siri to ChatGPT, from DALL·E to your Netflix recommendations, AI seems like it “understands” us. But does it really? And how does it actually work?

Let’s peel back the curtain and explore the secret sauce behind Generative AI. Grab your virtual lab coat—we’re diving into the tech magic that lets machines speak, write, paint, and imagine like us.

🤖 What is Generative AI?

Let’s break it down:

"Generative" = It can create new things. Text, images, poems, code, music—you name it.

"AI" = Software that learns from data to solve tasks that usually need human smarts.

So, Generative AI is an AI model that learns from tons of data and uses that knowledge to generate new, never-before-seen content that feels surprisingly human.

This is what the GPT (Generative Pre-trained Transformer) model looks like:

🧩 Step-by-Step: How It Actually Works

Let’s follow the journey of how AI turns raw text into something smart, creative, and useful.

Step 1: Tokenization - Breaking Down Language

AI doesn’t understand words like we do. “Hello” doesn’t mean anything to a computer. So, first, we break down text into tokens.

Tokens are chunks of text—could be whole words (“hello”), parts of words (“run”, “##ning”), or even single letters (“a”, “b”).

For example:

"AI is amazing" → ["AI", "is", "amazing"]

Each token is then mapped to a number (because computers loooove numbers). Now we’ve got a sequence of IDs like [1051, 312, 9876]. Still gibberish, but we’re getting there.
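To make that concrete, here is a minimal sketch of a tokenizer. It splits on spaces only, which is a simplifying assumption: real models use subword schemes, and the IDs below come from a tiny made-up vocabulary rather than any real model. The helper names (`build_vocab`, `encode`, `decode`) are hypothetical.

```python
# Toy whitespace tokenizer. Real tokenizers split text into subword pieces;
# this sketch only illustrates the text -> tokens -> IDs mapping.

def build_vocab(texts):
    """Assign an integer ID to each distinct token, in order of first appearance."""
    vocab = {}
    for text in texts:
        for token in text.split():
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    """Turn a string into a list of token IDs."""
    return [vocab[token] for token in text.split()]

def decode(ids, vocab):
    """Turn a list of token IDs back into a string."""
    id_to_token = {i: token for token, i in vocab.items()}
    return " ".join(id_to_token[i] for i in ids)

vocab = build_vocab(["AI is amazing"])
ids = encode("AI is amazing", vocab)
print(ids)                 # [0, 1, 2]
print(decode(ids, vocab))  # AI is amazing
```

The IDs are just positions in a lookup table. They carry no meaning yet.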

Step 2: Vector Embeddings — Giving Numbers Meaning

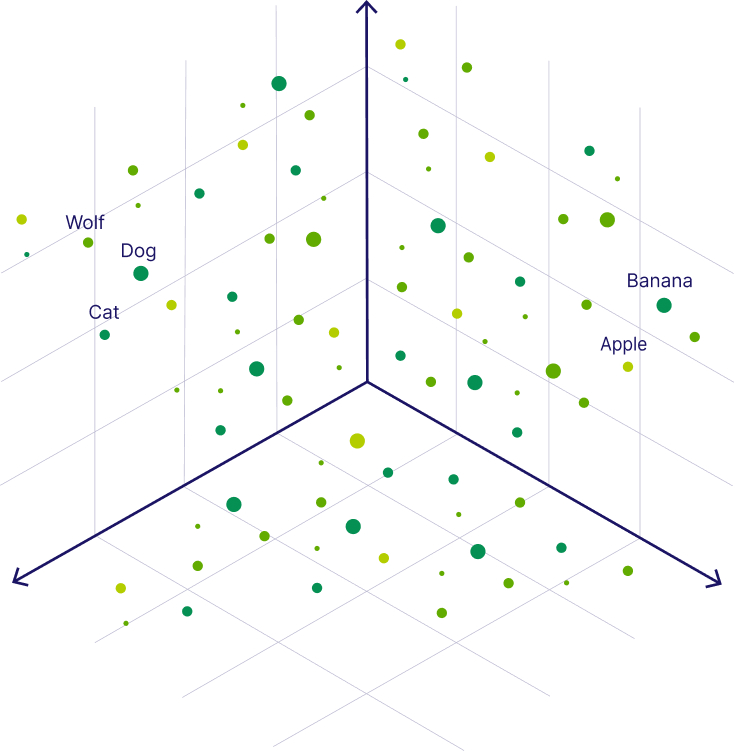

Okay, we’ve got numbers. But how does the model know “cat” is similar to “dog” and not “sandwich”?

This is where embeddings step in. Think of embeddings as semantic fingerprints. Each token is mapped to a multi-dimensional vector (a list of, say, 768 numbers) that represents its meaning.

“King” and “Queen” will have similar embeddings.

“Apple” (fruit) and “Apple” (company)? The token starts from the same embedding, but the later layers use the surrounding words to turn it into different, context-aware vectors.

These vectors live in something called vector space, where words with related meanings are literally closer together.
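Here is a small sketch of what "closer together" can mean. The three-number vectors are hand-picked for illustration (real embeddings are learned and much longer), and cosine similarity is one common way to measure closeness, assumed here.

```python
import math

# Hand-picked 3-dimensional vectors, purely illustrative.
# Real embeddings are learned during training and have hundreds of dimensions.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "sandwich": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_sandwich = cosine_similarity(embeddings["cat"], embeddings["sandwich"])
print(cat_dog > cat_sandwich)  # True
```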

Step 3: Positional Encoding — Order Matters

“Cats chase mice” ≠ “Mice chase cats.” The words are the same, but the order flips the meaning.

Self-attention on its own doesn’t see word order: it treats the input like a bag of tokens. So we add positional encodings. These are little clues we attach to each token to indicate where it is in the sentence (1st, 2nd, 3rd…).

So now the model knows:

Token 0: “Cats”

Token 1: “chase”

Token 2: “mice”

And it can respect the sequence, not just the content.
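One way to build those clues is the sinusoidal scheme from the original Transformer paper, sketched below. This is an assumption for illustration: GPT-style models typically learn their position vectors instead, and the token vectors here are made up.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position. Assumes d_model is even."""
    encoding = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))  # even dimension
        encoding.append(math.cos(angle))  # odd dimension
    return encoding

def add_position(token_vectors):
    """Add each position's encoding to the token vector at that position."""
    d_model = len(token_vectors[0])
    return [
        [x + p for x, p in zip(vector, positional_encoding(position, d_model))]
        for position, vector in enumerate(token_vectors)
    ]

# Made-up 4-dimensional embeddings for the three words.
cats = [0.5, 0.1, 0.3, 0.7]
chase = [0.2, 0.9, 0.4, 0.1]
mice = [0.6, 0.2, 0.8, 0.3]

sentence_a = add_position([cats, chase, mice])  # "Cats chase mice"
sentence_b = add_position([mice, chase, cats])  # "Mice chase cats"

# "Cats" gets a different vector depending on where it sits.
print(sentence_a[0] != sentence_b[2])  # True
```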

Step 4: Self-Attention — “What Words Should I Care About?”

Here’s where the magic happens. It’s called self-attention.

Imagine every word in a sentence looking around and asking:

“Who else in this sentence should I be paying attention to right now?”

Let’s take the sentence:

“The dog didn’t bark because it was tired.”

What does “it” refer to? The dog? The bark? Self-attention helps the model figure that out by letting every word peek at the others, weigh them, and decide who’s relevant.

Each word becomes a little detective, investigating context clues.

This lets AI models handle ambiguity, context, and long-range dependencies like a champ.
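Under the hood, that "looking around" is a weighted average. Below is a minimal sketch of scaled dot-product self-attention, with two loud assumptions: the token vectors are contrived so that “it” points roughly the same way as “dog”, and the learned query/key/value projections that real models apply first are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = vectors.

    Simplification: real models multiply the input by learned projection
    matrices first, and GPT-style models also mask out future tokens.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token (itself included).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: weighted average of all token vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["dog", "bark", "it"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]  # contrived 2-d vectors
outputs, weights = self_attention(vectors)

# How strongly "it" attends to each token.
for token, weight in zip(tokens, weights[2]):
    print(token, round(weight, 2))
```

With these vectors, “it” puts more weight on “dog” than on “bark”. In a trained model, that preference comes from learned weights rather than hand-picked numbers.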

Step 5: Layers of Reasoning

Self-attention isn’t a one-time step. The model stacks many layers, each combining self-attention with a small feed-forward neural network, and passes the information through them one after another. Each layer refines the model’s understanding a little more: first picking up basic associations, then more complex structures, and eventually deep meaning.

Each layer takes in the information from the previous one and makes adjustments, learning which words influence others, which phrases carry weight, and what ideas are being expressed.

With each layer, the representation gets richer. The model isn’t memorizing; it’s recognizing patterns and building a more useful picture of what the input means.
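Here is a rough sketch of the stacking idea. It keeps only the feed-forward half of a layer and the residual connection (adding a layer's output back onto its input); attention and normalization are left out to keep it short. The weights are placeholders, not trained values, and the function names are hypothetical.

```python
def feed_forward(vector, weight, bias):
    """One linear map followed by ReLU. Real layers use two linear maps."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, vector)) + b)
        for row, b in zip(weight, bias)
    ]

def layer(vectors, weight, bias):
    """Apply the feed-forward step to every token, then add the input back."""
    return [
        [x + y for x, y in zip(vector, feed_forward(vector, weight, bias))]
        for vector in vectors
    ]

def run_stack(vectors, params):
    """Pass the token vectors through each layer in turn."""
    for weight, bias in params:
        vectors = layer(vectors, weight, bias)
    return vectors

# Placeholder parameters: identity weights and zero bias for both layers.
identity = [[1.0, 0.0], [0.0, 1.0]]
params = [(identity, [0.0, 0.0]), (identity, [0.0, 0.0])]

print(run_stack([[1.0, -2.0]], params))  # [[4.0, -2.0]]
```

Each layer receives exactly what the previous one produced, which is the "refine a little more each time" idea in code form.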

📚 Training Time: How AI Gets “Smart”

Before AI can start generating poems or writing code, it goes through an intense training phase.

Think of it like raising a super-smart baby with the internet as its teacher.

Here’s how it works:

Feed it tons of data: books, websites, Wikipedia, Reddit threads, forums—you name it.

Give it a challenge: predict the next token in a sentence.

Calculate the loss (a math score for “how wrong was that guess?”).

Use backpropagation to work out how much each internal weight contributed to the error, then nudge the weights so it makes slightly better guesses next time.

Repeat millions (or billions) of times.

For example:

Input: “The sky is __”

Target: “blue”

Guess: “green” → wrong → adjust → try again

Over time, it learns language structures, facts, grammar, tone, humor—even sarcasm (kind of).
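The loop above can be sketched in a few lines. This is a deliberately tiny stand-in, not how real models are built: the "model" is a single table of scores that only looks at the previous token, the corpus is one sentence, and the gradient is written out by hand (backpropagation automates that step across many layers).

```python
import math

corpus = "the sky is blue".split()
vocab = sorted(set(corpus))  # ['blue', 'is', 'sky', 'the']
token_id = {token: i for i, token in enumerate(vocab)}

# Training pairs: (previous token, token that actually came next).
pairs = [(token_id[a], token_id[b]) for a, b in zip(corpus, corpus[1:])]

# One row of scores (logits) per previous token. All zeros = no preference yet.
logits = [[0.0] * len(vocab) for _ in vocab]

def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def train_step(learning_rate=0.5):
    """One pass over the data: guess, measure the loss, adjust the weights."""
    total_loss = 0.0
    for prev, target in pairs:
        probs = softmax(logits[prev])
        total_loss += -math.log(probs[target])  # cross-entropy loss
        # Gradient of the loss w.r.t. each logit is probs - one_hot(target).
        for j in range(len(vocab)):
            grad = probs[j] - (1.0 if j == target else 0.0)
            logits[prev][j] -= learning_rate * grad
    return total_loss / len(pairs)

losses = [train_step() for _ in range(50)]

row = logits[token_id["is"]]
prediction = vocab[max(range(len(vocab)), key=lambda j: row[j])]
print(prediction)               # blue
print(losses[-1] < losses[0])   # True
```

The loss starts at the "pure guess" level and shrinks as the scores for the correct next tokens grow.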

🎯 Inference Time: How AI Generates on the Fly

Training’s done. Now it’s time for the model to shine—this is called inference.

You give it a prompt, like:

“Write a bedtime story about a robot and a dragon.”

The model:

Tokenizes your prompt.

Embeds the tokens with meaning + position.

Runs them through self-attention and all the layers.

Predicts the next token, one at a time.

Adds the new token to the sequence and repeats until done.

And voilà! Out comes a story that didn’t exist a second ago.
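The generate-one-token-then-repeat loop looks roughly like this. The lookup table `NEXT_TOKEN_PROBS` is a hypothetical stand-in for a trained model, and the code always picks the most likely token (greedy decoding). Real systems usually sample from the probabilities instead, which is why the same prompt can produce different stories.

```python
# Hypothetical stand-in for a trained model: given the last token,
# return probabilities for the next one. A real model looks at the
# whole sequence, not just the last token.
NEXT_TOKEN_PROBS = {
    "the": {"robot": 0.6, "dragon": 0.4},
    "robot": {"met": 0.9, "<end>": 0.1},
    "met": {"a": 1.0},
    "a": {"dragon": 0.8, "robot": 0.2},
    "dragon": {"<end>": 1.0},
}

def predict_next(tokens):
    """Pick the most likely next token (greedy decoding)."""
    probs = NEXT_TOKEN_PROBS[tokens[-1]]
    return max(probs, key=probs.get)

def generate(prompt_tokens, max_new_tokens=10):
    """Append one predicted token at a time until <end> or the length limit."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens

story = generate(["the"])
print(" ".join(story))  # the robot met a dragon
```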

🔮 But Wait—Is AI Thinking?

Not really. AI doesn’t think or understand like humans—it predicts. When you type a prompt, it uses patterns learned from massive amounts of data to guess the next word, one token at a time. That’s it.

It doesn’t know what things mean—it has no emotions, goals, or awareness. It’s just extremely good at matching patterns and generating content that feels smart.
