cOS (Container OS): Why cOS Is the Minimalist OS Every Container-First Data Center Needs

Anuja Sawant

Table of contents

- What is cOS?

- Technical Features of cOS

- Why Traditional OSes Don’t Work for Containers

- Installing cOS: Developer Guide

- DevOps Integration with Kubernetes

- Infrastructure as Code with Terraform

- cOS in Edge Data Centers

- Security Benefits of cOS

- Performance & Resource Optimization

- Real-World Use Cases

- Atomic Updates & Rollback

- How cOS Compares to Other OSes

- Tools That Work Seamlessly with cOS

- Conclusion

In the age of Kubernetes and DevOps, data centers are rapidly moving away from traditional monolithic operating systems. Today, the goal is speed, efficiency, and immutability, not bloated packages and legacy compatibility. That’s where cOS (Container OS) enters the picture.

cOS is a minimalist Linux-based operating system purpose-built for container-native infrastructure. Designed to be immutable, atomic, and cloud-agnostic, cOS strips away unnecessary components and presents a secure, efficient runtime for Kubernetes, Docker, and OCI-compliant containers.

This post takes a deep dive into how cOS works, what makes it unique, and why it’s becoming an essential OS for container-first data centers, especially when paired with modern orchestration tools like Kubernetes, Nomad, and K3s.

What is cOS?

cOS (Container Operating System) is:

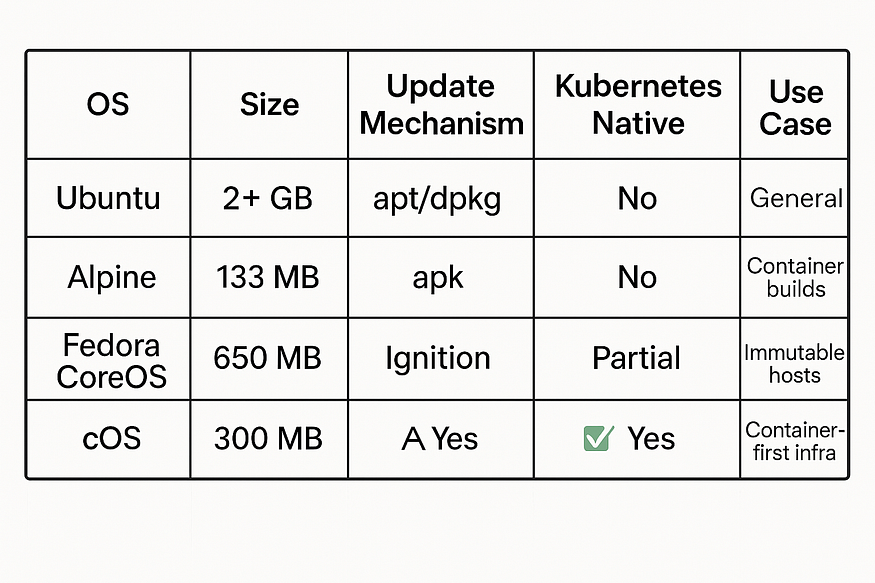

- A minimal Linux OS, typically under 300 MB

- Built with a read-only root filesystem

- Capable of OTA (Over-the-Air) atomic upgrades

- Based on openSUSE, Alpine, or Ubuntu Core, depending on the distribution

- Designed to boot fast, update fast, and recover fast

It’s not a general-purpose OS. There’s no package manager like apt or yum: you deploy containers, and that’s it.

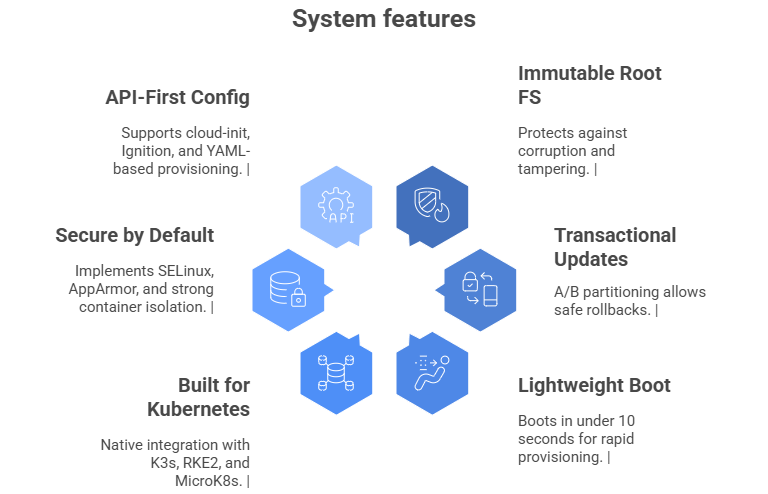

Technical Features of cOS

Let’s break down the technical characteristics that make cOS stand out:

- Immutable Root FS: Protects against corruption and tampering

- Transactional Updates: A/B partitioning allows safe rollbacks

- Lightweight Boot: Boots in under 10 seconds for rapid provisioning

- Built for Kubernetes: Native integration with K3s, RKE2, and MicroK8s

- Secure by Default: Enforces SELinux or AppArmor (depending on the base distribution) and strong container isolation

- API-First Config: Supports cloud-init, Ignition, and YAML-based provisioning

Why Traditional OSes Don’t Work for Containers

Most legacy OSes are built with:

- Kernel modules and packages you’ll never use

- System services designed for monolithic apps

- Slow and complex update mechanisms

- Mutable states that are prone to corruption

In contrast, cOS is built solely to run containers, with none of the extra baggage.

Installing cOS: Developer Guide

A typical cOS install takes under 5 minutes. Here’s a simplified flow for bare metal or VM deployment:

Step 1: Flash cOS ISO

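Replace /dev/sdX with the target device (check it with lsblk first), because dd overwrites the device without asking for confirmation.

sudo dd if=cos.iso of=/dev/sdX bs=4M status=progress conv=fsync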

Step 2: Boot into cOS Live

On boot, it presents a cloud-init-like screen for configuring network and cluster settings.

Step 3: Use system installer

cos-installer --device /dev/sda --config ./cloud-config.yaml

Sample cloud-config.yaml

hostname: node-1
users:
  - name: cosadmin
    passwd: $6$rounds=4096$RANDOM  # placeholder; use a real SHA-512 crypt hash
    sudo: ['ALL=(ALL) NOPASSWD:ALL']
install:
  bundles:
    - k3s
  reboot: true
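When you provision more than a handful of nodes, you will probably want to generate one config per node instead of editing the file by hand. The following is a minimal Python sketch of that idea, not part of cOS itself. The function name `render_cloud_config` is hypothetical, and the nesting of `bundles` and `reboot` under `install` is an assumption about how the sample above is laid out.

```python
# Template mirroring the sample cloud-config above.
# Assumption: `bundles` and `reboot` are nested under `install`.
CLOUD_CONFIG_TEMPLATE = """\
hostname: {hostname}
users:
  - name: cosadmin
    passwd: {passwd_hash}
    sudo: ['ALL=(ALL) NOPASSWD:ALL']
install:
  bundles:
    - k3s
  reboot: true
"""


def render_cloud_config(hostname: str, passwd_hash: str) -> str:
    """Return the cloud-config text for one node (hypothetical helper)."""
    # Reject empty hostnames and hostnames containing whitespace early,
    # so a typo does not end up baked into an installed node.
    if not hostname or any(ch.isspace() for ch in hostname):
        raise ValueError(f"invalid hostname: {hostname!r}")
    return CLOUD_CONFIG_TEMPLATE.format(hostname=hostname, passwd_hash=passwd_hash)


# Render configs for node-1 .. node-3 with the placeholder hash from the sample.
configs = {
    f"node-{i}": render_cloud_config(f"node-{i}", "$6$rounds=4096$RANDOM")
    for i in range(1, 4)
}

print(configs["node-2"])
```

Each rendered string can be written to its own file and passed to the installer with the same `--config` option shown in Step 3.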

DevOps Integration with Kubernetes

cOS integrates deeply with lightweight Kubernetes distros such as:

K3s from Rancher (ideal for edge and IoT)

RKE2 for production-grade K8s clusters

MicroK8s from Canonical

Example: Bootstrapping K3s

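curl -sfL https://get.k3s.io | sh -
systemctl enable k3s

On systemd hosts the install script normally enables and starts the k3s service itself, so the second command is only a safeguard.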

This simplicity makes it easy to deploy container clusters on bare metal, cloud VMs, or edge devices.
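After bootstrapping, it is worth confirming that every node actually reports Ready before scheduling workloads. The sketch below is one way to do that in Python, assuming you feed it the output of `kubectl get nodes -o json`. The helper name `ready_nodes` and the sample data are made up for illustration.

```python
import json


def ready_nodes(nodes_json: str) -> list[str]:
    """Return the names of nodes whose Ready condition has status "True"."""
    ready = []
    for node in json.loads(nodes_json).get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        # A node is schedulable only when its "Ready" condition is "True".
        if any(c.get("type") == "Ready" and c.get("status") == "True"
               for c in conditions):
            ready.append(node["metadata"]["name"])
    return ready


# Trimmed, hand-written sample in the shape of a Kubernetes NodeList.
sample = json.dumps({
    "items": [
        {"metadata": {"name": "node-1"},
         "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
        {"metadata": {"name": "node-2"},
         "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
    ]
})

print(ready_nodes(sample))  # ['node-1']
```

In practice you would replace `sample` with the real command output, for example captured through `subprocess.run`.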

Infrastructure as Code with Terraform

You can automate cOS provisioning using Terraform and cloud-init compatible providers.

Example: Terraform snippet for cOS on AWS

resource "aws_instance" "cos_node" {
  ami           = "ami-xxxxxxxx" # placeholder: substitute your cOS image ID
  instance_type = "t3.micro"
  user_data     = file("cloud-config.yaml")

  tags = {
    Name = "cOS_Node"
  }
}

Combine this with tools like Packer, Pulumi, or Crossplane for complete GitOps-driven deployments.
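If you need many near-identical nodes, one option is to generate the Terraform configuration instead of copying the block. Terraform also accepts JSON files (named `*.tf.json`), so a short script can emit them. The sketch below assumes the same placeholder AMI and `cloud-config.yaml` as the snippet above. The function `cos_instances` and the `cos_node_N` naming are hypothetical.

```python
import json


def cos_instances(count: int, ami: str, instance_type: str = "t3.micro") -> dict:
    """Build a Terraform JSON document with `count` aws_instance resources."""
    instances = {}
    for i in range(1, count + 1):
        instances[f"cos_node_{i}"] = {
            "ami": ami,  # placeholder; substitute a real image ID
            "instance_type": instance_type,
            # In Terraform's JSON syntax, strings are parsed as templates,
            # so the ${...} expression is evaluated by Terraform at plan time.
            "user_data": '${file("cloud-config.yaml")}',
            "tags": {"Name": f"cOS_Node_{i}"},
        }
    return {"resource": {"aws_instance": instances}}


doc = cos_instances(count=3, ami="ami-xxxxxxxx")

# Write this output to a file such as main.tf.json in the Terraform directory.
print(json.dumps(doc, indent=2))
```

For a fixed number of identical instances, Terraform's own `count` or `for_each` meta-arguments are usually simpler. Generating JSON is mainly useful when the node list comes from another system.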

cOS in Edge Data Centers

One of cOS’s biggest strengths is in edge computing. Why?

- Fast boot time = less downtime at remote locations

- Immutable OS = no drift, no surprises

- Remote management = OTA updates and YAML-driven configs

You can deploy a 3-node Kubernetes cluster on Raspberry Pi or Jetson Nano using cOS + K3s in under 10 minutes.

Security Benefits of cOS

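Security is a key concern in container-native environments. cOS helps through the properties already described: an immutable root filesystem, no package manager, and mandatory access control with container isolation.

Because there’s no general-purpose access, even accidental misconfigurations are less likely.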

Performance & Resource Optimization

cOS uses a custom-compiled kernel with only container-required modules, which results in:

- Lower boot times

- Better memory utilization

- Less overhead on low-end hardware

This makes it suitable for:

- AI inference nodes (Jetson + cOS)

- CI/CD runners (GitLab on cOS)

- CDN or DNS edge servers

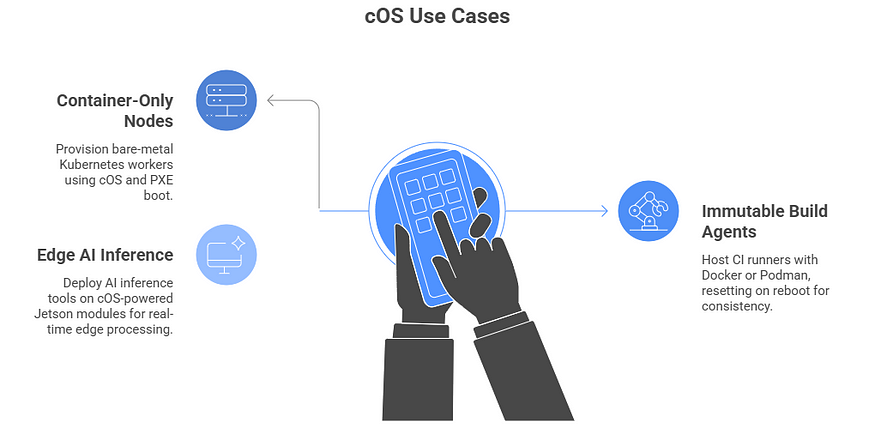

Real-World Use Cases

1. Container-Only Kubernetes Nodes

Provision hundreds of bare-metal Kubernetes workers using cOS and PXE boot.

2. Immutable Build Agents

Use cOS to host CI runners with Docker or Podman. They reset on reboot, ensuring consistency.

3. Edge AI Inference

Deploy TensorRT, Triton Server, or YOLOv8 in containers on cOS-powered Jetson modules for real-time inference at the edge.

Atomic Updates & Rollback

Inspired by CoreOS, cOS supports A/B partition-based updates.

sudo cos-upgrade --source https://example.com/cos-latest.img

If the new image fails to boot, the system automatically reverts to the previous one. There is no need to roll back manually.
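To make the A/B idea concrete, here is a toy Python model of a two-slot layout. It is a sketch of the general technique, not the actual cOS implementation. The class names and the `boots_ok` health-check callback are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Slot:
    name: str
    version: str


@dataclass
class ABSystem:
    """Toy model of a two-slot (A/B) layout; not real cOS code."""
    active: Slot
    passive: Slot

    def upgrade(self, new_version: str, boots_ok: Callable[[str], bool]) -> bool:
        """Write new_version to the passive slot and try to boot it.

        Returns True if the system ends up running new_version,
        False if it stayed on the previous slot.
        """
        # The running (active) slot is never modified, so it stays bootable.
        self.passive.version = new_version

        if boots_ok(new_version):
            # Health check passed: swap roles so the new image becomes active.
            self.active, self.passive = self.passive, self.active
            return True

        # Health check failed: keep booting the untouched active slot.
        return False


def healthy(version: str) -> bool:
    """Stand-in health check: any version tagged "broken" fails to boot."""
    return "broken" not in version


system = ABSystem(active=Slot("A", "1.0"), passive=Slot("B", "0.9"))

# A broken image fails its health check, so the system stays on 1.0.
print(system.upgrade("2.0-broken", healthy), system.active)

# A good image passes, and slot B becomes the active slot.
print(system.upgrade("2.0", healthy), system.active)
```

The key design point is that an update only ever writes to the slot that is not running, which is why a failed upgrade leaves a known-good system to fall back to.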

How cOS Compares to Other OSes

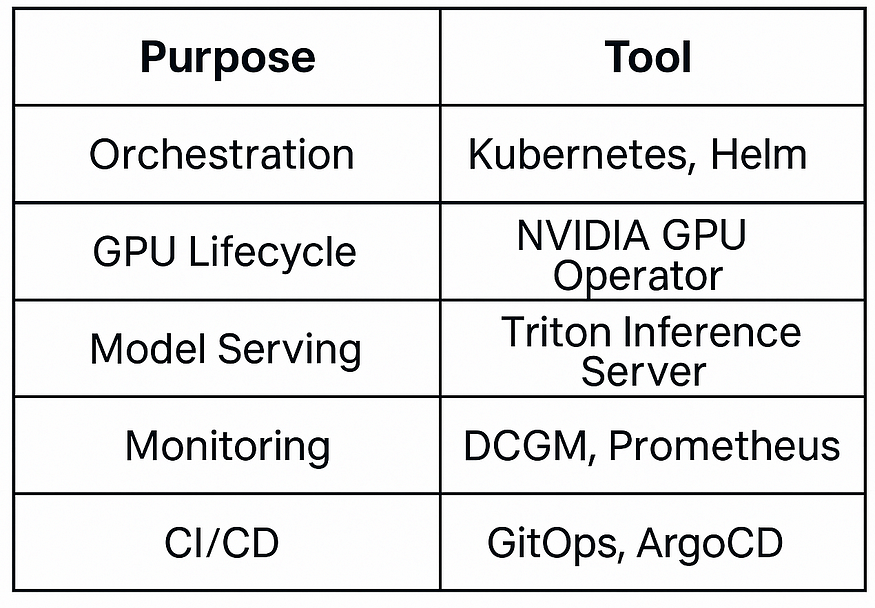

Tools That Work Seamlessly with cOS

- K3s, RKE2, MicroK8s: lightweight Kubernetes

- Podman, Docker, containerd: container runtimes

- Ignition, cloud-init, Terraform: provisioning

- Flux, ArgoCD: GitOps agents

- Grafana, Prometheus: lightweight monitoring

Conclusion

In a world where containers dominate application delivery, traditional operating systems fall short. cOS is the future of OS design: lean, secure, and built purely to support Kubernetes-native workloads.

With immutable architecture, container-centric design, and DevOps-friendly deployment, cOS is ideal for data centers, CI/CD systems, and edge infrastructure.
