Traditional IO vs mmap vs Direct IO: How Disk Access Really Works

Sachin Tolay

In our earlier deep dive into Direct Memory Access (DMA), we explored how data can move efficiently between storage and memory without the CPU copying it. In this article, we break down and compare three major approaches to disk access:

Traditional (Buffered) I/O

Memory-Mapped Files

Direct I/O

Traditional IO (Buffered IO)

When you run something like:

```c
#include <fcntl.h>
#include <unistd.h>
char buf[4096];
int fd = open("data.txt", O_RDONLY);
ssize_t n = read(fd, buf, sizeof buf); // read up to 4096 bytes from fd into buf
```

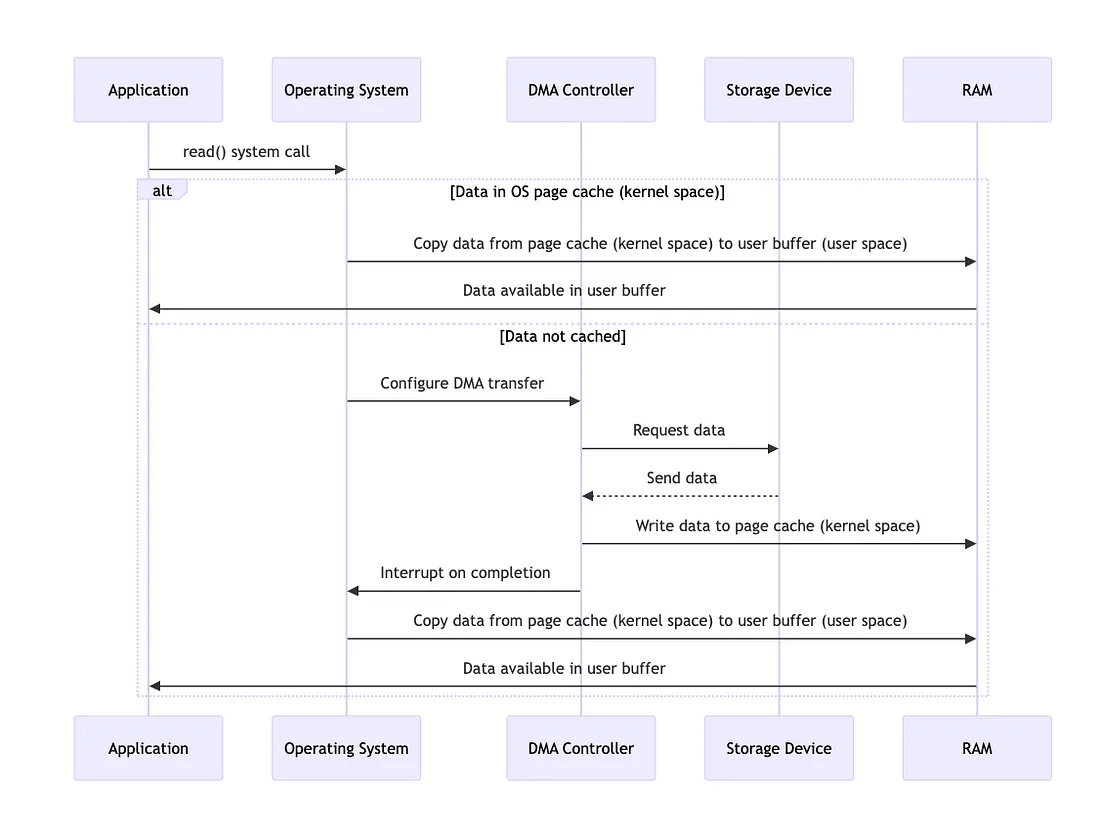

Page Cache Lookup → The OS first checks its page cache, a large shared memory pool that holds recently accessed file data for all processes. On a hit, the data is copied straight into your buffer with no disk access at all.

Read-Ahead → If the OS has to go to disk, it doesn't fetch just the 4 KB you asked for. It reads ahead, often 32 KB or more, anticipating a sequential access pattern. We will come back to this later as a drawback of traditional I/O (and of mmap too).

Double Copy → Data is first loaded into the OS-managed page cache via DMA, then copied again, this time by the CPU, into your application buffer.

System Call Overhead → Every read() is a system call, and each one pays for a user/kernel mode switch plus the copy. This adds up quickly during sequential reads of data that is already cached, because there is no disk wait to hide the cost behind.
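To make the per-call cost concrete, here is a minimal sketch that reads a whole file through the page cache in fixed-size chunks and counts how many read() calls that takes. The function `buffered_read_all` and its parameters are hypothetical. The `posix_fadvise()` call is a standard POSIX hint. On Linux, `POSIX_FADV_SEQUENTIAL` enlarges the read-ahead window and `POSIX_FADV_RANDOM` disables it, but the exact behavior is kernel-dependent.

```c
#define _POSIX_C_SOURCE 200809L  // for posix_fadvise()
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

/* Hypothetical helper: reads `path` to EOF through the page cache, `chunk`
 * bytes per read() call. `advice` is passed to posix_fadvise() (use
 * POSIX_FADV_NORMAL for no hint). Returns total bytes read, or -1 on error.
 * *syscalls receives the number of read() calls, including the final one
 * that returns 0 at EOF. */
ssize_t buffered_read_all(const char *path, size_t chunk, int advice,
                          size_t *syscalls)
{
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;

    /* Hint the kernel's read-ahead policy; failure here is not fatal. */
    (void)posix_fadvise(fd, 0, 0, advice);

    char *buf = malloc(chunk);   // ordinary, unaligned user buffer
    if (buf == NULL) {
        close(fd);
        return -1;
    }

    ssize_t total = 0;
    *syscalls = 0;
    for (;;) {
        ssize_t n = read(fd, buf, chunk);  // one system call per chunk
        (*syscalls)++;
        if (n < 0) {
            if (errno == EINTR)
                continue;                  // interrupted by a signal: retry
            total = -1;
            break;
        }
        if (n == 0)
            break;                         // EOF
        total += n;  // the kernel copied these bytes page cache -> buf
    }

    free(buf);
    close(fd);
    return total;
}
```

For a 10,000-byte file and 4096-byte chunks this takes four calls (4096, 4096, 1808, then 0 at EOF). Shrinking the chunk multiplies the number of system calls for the same data, which is exactly the overhead described above.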

Memory-Mapped Files (mmap)

mmap offers a powerful way to access files: instead of copying file data into a user buffer via read(), the OS maps the file directly into the process's virtual address space.

When you do this:

```c
#include <fcntl.h>
#include <sys/mman.h>
int fd = open("data.txt", O_RDONLY);
char *mapped = mmap(NULL, 4096, PROT_READ, MAP_PRIVATE, fd, 0); // sets up the mapping only
char c = mapped[0]; // Triggers a page fault on first access
```

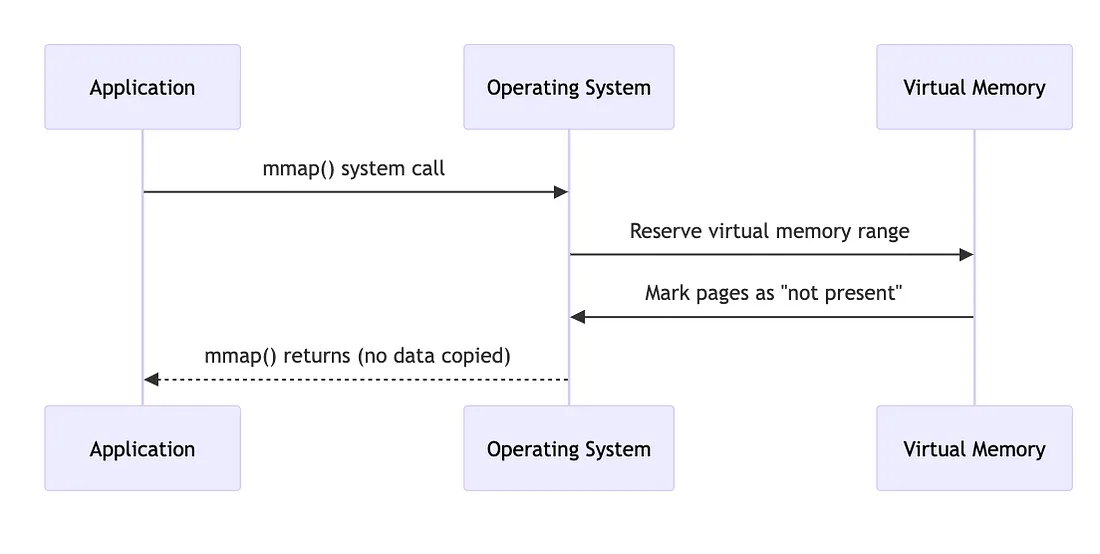

Step 1: Setting up the Mapping

You're telling the OS → map this file into my address space; I'll access it like memory, not like a file.

No data is read from disk yet. The pages are simply marked as not present, so the first access to each page triggers a page fault.

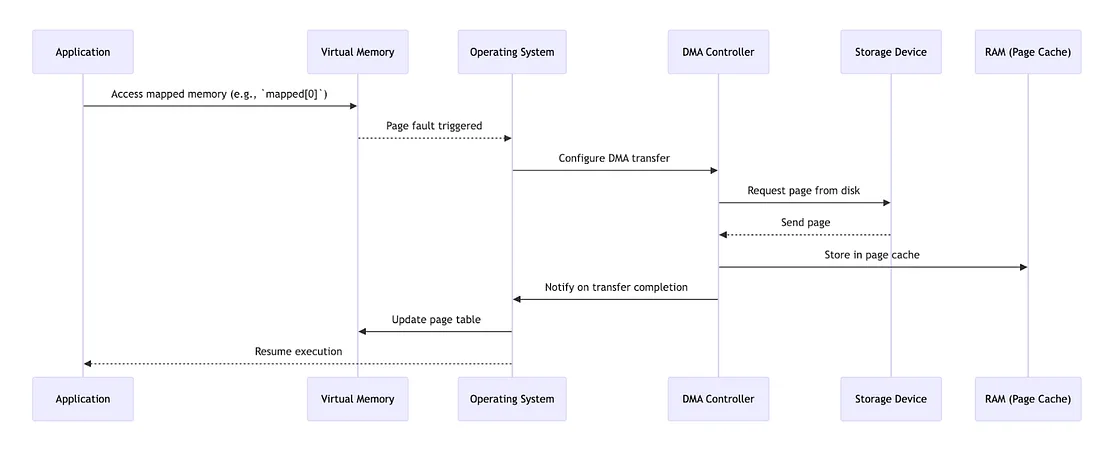
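The setup step can be written out in full, with the error handling the short snippet omits. This is a minimal sketch under the assumption that we want to map an entire read-only file and sum its bytes; the name `mmap_byte_sum` is hypothetical. The file size comes from `fstat()`, and an empty file is special-cased because `mmap()` rejects a zero length.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical helper: maps the whole file read-only and sums its bytes.
 * Returns 0 on success, -1 on error. */
int mmap_byte_sum(const char *path, unsigned long *sum)
{
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;

    struct stat st;
    if (fstat(fd, &st) < 0) {
        close(fd);
        return -1;
    }

    *sum = 0;
    if (st.st_size == 0) {          // mmap() rejects a zero length
        close(fd);
        return 0;
    }

    size_t len = (size_t)st.st_size;
    /* Step 1: only the mapping is set up here; no file data is read yet. */
    void *m = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    if (m == MAP_FAILED) {
        close(fd);
        return -1;
    }
    close(fd);                      // the mapping stays valid after close()

    /* The first touch of each page may fault; later touches are plain loads. */
    const unsigned char *p = m;
    for (size_t i = 0; i < len; i++)
        *sum += p[i];

    munmap(m, len);
    return 0;
}
```

No read() appears anywhere in the loop. Every byte is reached through an ordinary pointer dereference, and the kernel only gets involved when a dereference hits a page that is not yet mapped.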

Step 2: First Access Per Page → Page Not in Cache → Major Page Fault

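When the app accesses a file-backed page for the first time and that page is not in the page cache, a major page fault occurs: the kernel allocates a page in the page cache, reads the file data from disk into it (via DMA), maps it into the process's page table, and restarts the faulting instruction.

This happens only on the first access to each page (or again if the page is later evicted from memory).

The page fault is handled transparently → the app just sees a memory access, albeit a slow one.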

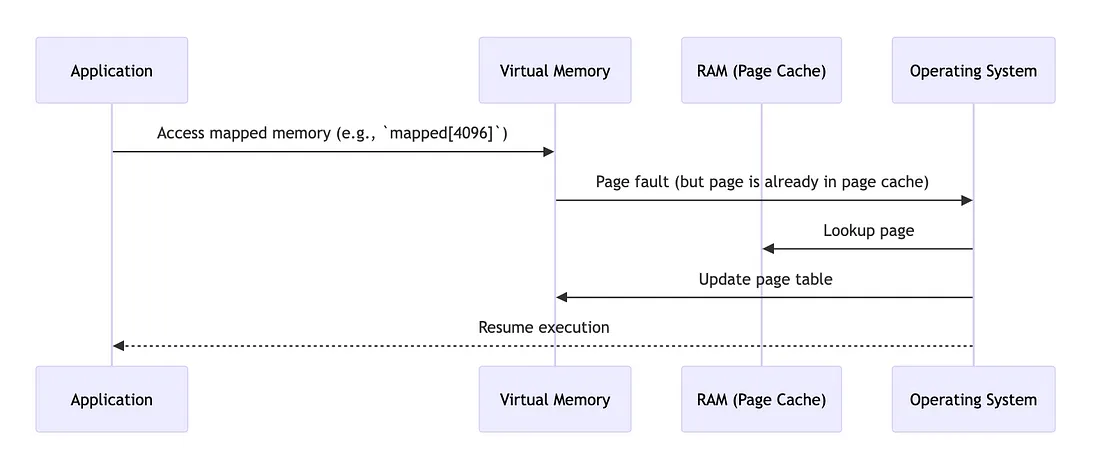

Step 3: First Access → Page Cached But Not Mapped → Minor Page Fault

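If another process or an earlier access already loaded the page into the page cache, but this process hasn't mapped it yet, a minor page fault occurs: the kernel only has to point the process's page table entry at the existing cached page, with no disk I/O.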

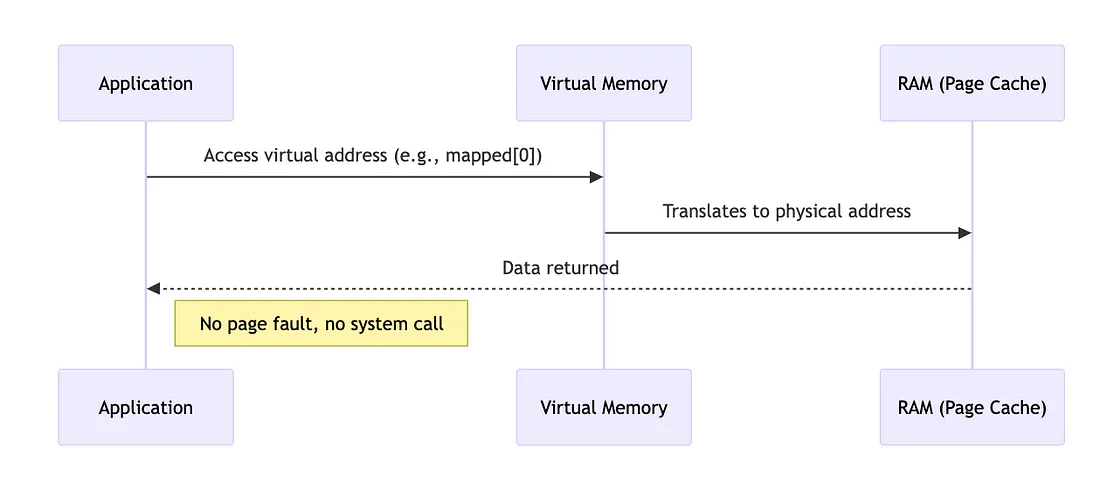
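Whether a first access turns into a major or a minor fault depends on whether the page is already in the page cache. On Linux (and BSDs), `mincore()` can report this for a mapped range. The sketch below is a hypothetical helper, `count_resident_pages`, that assumes a non-empty regular file. Note that `mincore()` is not POSIX, and its result is only a snapshot that can change at any moment.

```c
#define _DEFAULT_SOURCE  // mincore() is a Linux/BSD extension, not POSIX
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical helper: maps `path` and asks the kernel how many of its pages
 * are currently resident in memory (the page cache). A first access to a
 * resident page costs a minor fault; a non-resident page costs a major one.
 * Returns 0 on success, -1 on error. */
int count_resident_pages(const char *path, size_t *resident, size_t *total)
{
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) < 0 || st.st_size == 0) {
        close(fd);
        return -1;
    }
    size_t len = (size_t)st.st_size;
    void *m = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    if (m == MAP_FAILED) {
        close(fd);
        return -1;
    }
    close(fd);

    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    size_t npages = (len + page - 1) / page;
    unsigned char *vec = malloc(npages);  // one status byte per page
    int rc = -1;
    if (vec != NULL && mincore(m, len, vec) == 0) {
        *resident = 0;
        for (size_t i = 0; i < npages; i++)
            *resident += vec[i] & 1;      // low bit set: page is resident
        *total = npages;
        rc = 0;
    }
    free(vec);
    munmap(m, len);
    return rc;
}
```

Calling this right after the file was written or read will typically report most or all pages as resident, so the first access in a new process would take minor faults rather than major ones.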

Step 4: Subsequent Access → Page Already Mapped and Cached → No Fault

If the page is already mapped in the page table and its data is cached in RAM, the CPU reads it directly through virtual memory, with no system call, no kernel involvement, and no extra copy.

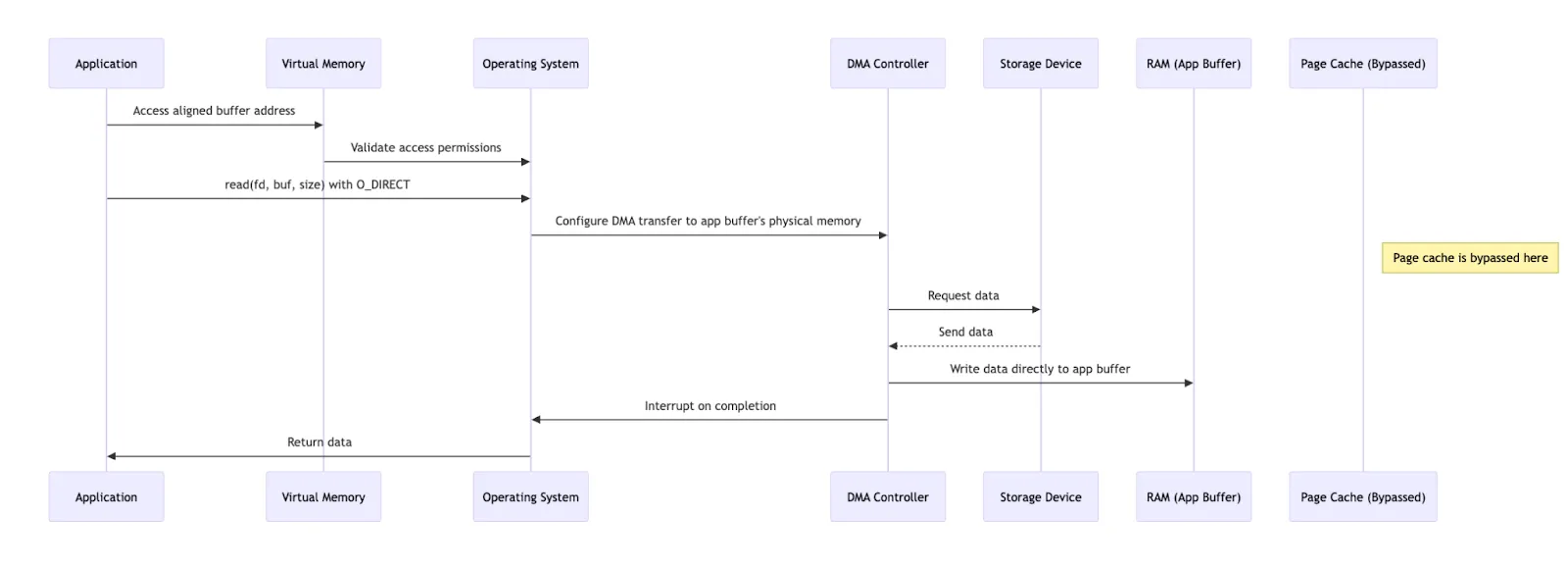
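Steps 2 to 4 can be observed from inside a program. This minimal sketch assumes Linux, where `getrusage()` reports a process's minor (`ru_minflt`) and major (`ru_majflt`) fault counts. The helper `touch_pages_twice` is hypothetical: it maps a file, reads one byte from every page twice, and reports how many faults each pass caused.

```c
#define _DEFAULT_SOURCE  // glibc: expose getrusage() and related declarations
#include <fcntl.h>
#include <sys/mman.h>
#include <sys/resource.h>
#include <sys/stat.h>
#include <unistd.h>

/* Page faults (minor + major) this process has taken so far. */
static long faults_so_far(void)
{
    struct rusage ru;
    if (getrusage(RUSAGE_SELF, &ru) != 0)
        return -1;
    return ru.ru_minflt + ru.ru_majflt;
}

/* Hypothetical helper: maps `path`, reads one byte from every page twice,
 * and reports how many page faults each pass caused. Returns 0 on success. */
int touch_pages_twice(const char *path, long *first_pass, long *second_pass)
{
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) < 0 || st.st_size == 0) {
        close(fd);
        return -1;
    }
    size_t len = (size_t)st.st_size;
    void *m = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    if (m == MAP_FAILED) {
        close(fd);
        return -1;
    }
    close(fd);

    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    volatile const unsigned char *p = m;  // volatile: keep every load
    unsigned long sink = 0;

    long t0 = faults_so_far();
    for (size_t off = 0; off < len; off += page)
        sink += p[off];   // first touch may fault (Steps 2 and 3)
    long t1 = faults_so_far();
    for (size_t off = 0; off < len; off += page)
        sink += p[off];   // pages already mapped: no fault expected (Step 4)
    long t2 = faults_so_far();
    (void)sink;

    *first_pass = t1 - t0;
    *second_pass = t2 - t1;
    munmap(m, len);
    return 0;
}
```

On Linux the first pass often reports fewer faults than there are pages, because a single fault can map several neighboring cached pages at once ("fault-around"). The second pass should report zero or close to it, since every page is already in the page table.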

Direct I/O

Direct I/O transfers data directly between the storage device and the application buffer, bypassing the OS page cache entirely. This avoids the double copy of data, reducing CPU overhead and preventing page cache pollution due to read-aheads, but requires the application to carefully manage aligned buffers.

When you do this:

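```c
#define _GNU_SOURCE // O_DIRECT is Linux-specific
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>
int fd = open("data.txt", O_RDONLY | O_DIRECT);
void *buf;
posix_memalign(&buf, 4096, 4096); // Allocate a 4 KB buffer aligned to 4 KB
ssize_t n = read(fd, buf, 4096);  // offset 0 and length 4096 are aligned too
```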

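The buffer needs to start at a memory address that's a multiple of 4 KB, or of whatever block size the device and filesystem require (often 512 bytes or 4 KB). This is called alignment. On Linux, the file offset and the transfer length usually have to be aligned as well.

If any of these is misaligned, the read typically fails with EINVAL. Some filesystems may instead fall back to buffered (traditional) I/O.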
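Because a misaligned request usually surfaces only as an EINVAL from read(), an application can check the three conditions up front. This is a minimal sketch. The helper `direct_io_aligned` is hypothetical, and it assumes that the required alignment `align` is a power of two that you already know (for example, the device's logical block size).

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/types.h>

/* Hypothetical helper: returns true when the buffer address, the transfer
 * length, and the file offset are all multiples of `align`. `align` is
 * assumed to be a power of two (e.g. 512 or 4096) and `offset` non-negative. */
bool direct_io_aligned(const void *buf, size_t len, off_t offset, size_t align)
{
    uint64_t mask = (uint64_t)align - 1;
    return ((uint64_t)(uintptr_t)buf & mask) == 0   // buffer address
        && ((uint64_t)len & mask) == 0              // transfer length
        && ((uint64_t)offset & mask) == 0;          // file offset
}
```

A buffer from `posix_memalign(&buf, 4096, ...)` passes the address check for any alignment up to 4096, but a request at offset 100 or with length 1000 would still be rejected. The real requirement depends on the filesystem and device; recent Linux kernels can report it through `statx()` with `STATX_DIOALIGN`.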

The application buffer is mapped in virtual memory as usual.

The OS validates the request, pins the buffer's pages in physical memory, and instructs the DMA controller to transfer data directly into them.

Page cache is bypassed completely.

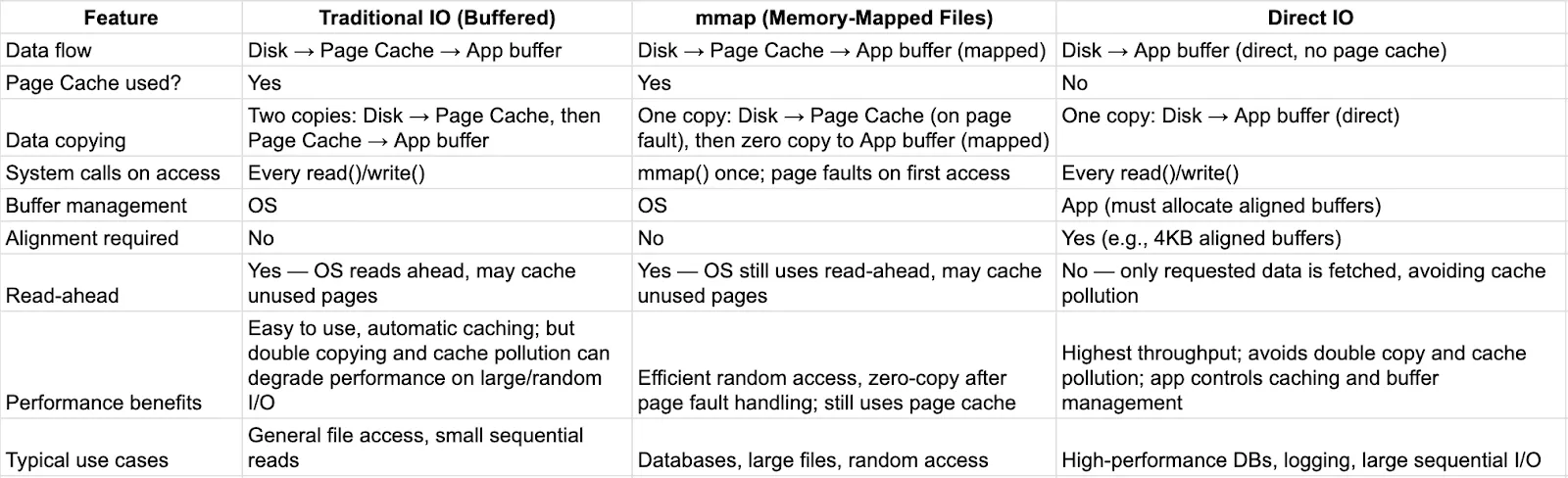
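Putting the steps together, here is a minimal sketch that reads an entire file with `O_DIRECT` through a single aligned buffer and sums its bytes. The helper `direct_byte_sum` and the constants `DIO_ALIGN` and `DIO_CHUNK` are hypothetical. It assumes that 4096-byte alignment satisfies the device, and that a short read means end of file, which holds for regular files. If the filesystem does not support `O_DIRECT` (for example, tmpfs on many kernels), `open()` fails with EINVAL.

```c
#define _GNU_SOURCE            // O_DIRECT is a Linux-specific flag
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

#define DIO_ALIGN 4096         // assumption: satisfies the device's block size
#define DIO_CHUNK (64 * 1024)  // a multiple of DIO_ALIGN

/* Hypothetical helper: reads `path` with O_DIRECT and sums its bytes.
 * Returns 0 on success, or -1 with errno set on failure (EINVAL usually
 * means O_DIRECT is unsupported or the alignment assumption is wrong). */
int direct_byte_sum(const char *path, unsigned long *sum, size_t *nbytes)
{
    int fd = open(path, O_RDONLY | O_DIRECT);
    if (fd < 0)
        return -1;

    void *buf = NULL;
    int err = posix_memalign(&buf, DIO_ALIGN, DIO_CHUNK);
    if (err != 0) {
        close(fd);
        errno = err;           // posix_memalign returns the error code
        return -1;
    }

    *sum = 0;
    *nbytes = 0;
    int rc = 0;
    for (;;) {
        /* Address, length, and offset stay aligned: the offset only advances
         * in full DIO_CHUNK steps until the final short read. */
        ssize_t n = read(fd, buf, DIO_CHUNK);  // device -> buf via DMA
        if (n < 0) {
            if (errno == EINTR)
                continue;
            rc = -1;
            break;
        }
        const unsigned char *p = buf;
        for (ssize_t i = 0; i < n; i++)
            *sum += p[i];
        *nbytes += (size_t)n;
        if (n < DIO_CHUNK)
            break;             // short read (or 0): assumed end of file
    }

    int saved = errno;
    free(buf);
    close(fd);
    errno = saved;
    return rc;
}
```

Stopping at the first short read is deliberate. After it, the file position is no longer a multiple of `DIO_ALIGN`, so another direct read from that position could itself be rejected as misaligned.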

Comparison
