Under the Hood of Large Language Models

Aditi Saxena

Aditi Saxena

How to build an LLM?

It’s been almost eight years since OpenAI introduced its first large language model — what would later evolve into ChatGPT, and now, more casually, just GPT. The impact has been so profound that the act of searching for information through these models has earned its own verb: GPTing.

Today, the world can hardly imagine life without AI — and that, in itself, is a powerful statement. But I’m not here to discuss AI’s impact or the sweeping societal changes it’s driving. Instead, I want to take you behind the scenes, into the heart of what it takes to build a large language model — and what it might take for you to create one of your own.

The first bit

The architecture of an LLM is basically based on a transformer. The Transformer architecture is based on the concept of self-attention, which allows the model to weigh the importance of different parts of the input sequence when processing it. There are a lot of resources on Transformers and their relation with LLMs . Thus, we will focus on the less talked about parts — Training algorithms, Losses, Data and Evaluation. I will be simplifying each of these for you and make sure that you are able to gauge the power of such nuances when you create something as discrete as a LLM model like GPT.

The Not so Classical Paradigm — Pre-Training

Imagine teaching someone to write poetry. You wouldn’t start by asking them to write a sonnet on day one. You’d first expose them to thousands of poems, books, and stories. They’d pick up patterns, vocabulary, and rhythm ; before you ever asked them to craft their own verses. That first phase — soaking in information without any specific task — is kind of what pre-training is for AI models.

Until around 2018, most natural language models were trained for specific tasks — like sentiment analysis or translation — without much general knowledge of language as a whole. That changed with OpenAI’s GPT (Generative Pre-trained Transformer), the very first model in the GPT series. It was the first time pre-training was used at this scale in a language model, setting the stage for everything that followed.

In simple terms: Pre-training gave AI the chance to read the entire internet (or a big chunk of it) before being asked to answer questions, write essays, or chat like a human. It’s like giving your AI a childhood full of reading before sending it out into the world.

What is Language Modelling ?

Language Modelling is basically a probability distribution over sequences of words / sentences . The objective is to generate the probability of a sequence in that particular order to be found on the internet or in resources it has been trained on.

For example :

P1 (remarkable, you, are , student) = 0.01

P2 (remarkable, you, you, are, student)=0.001 — Syntactic knowledge

P3 (student, are, you, remarkable)=0.12 — Semantic Knowledge

This covers the NLP (Natural Language Processing) Angle and condenses it to a probabilistic distribution giving the LMs the generative power.

The word ‘Generative’ in generative power is sometimes misleading — A model can only generate only on what it is trained on — not something that can be creatively or artistically distinct. This is where it differentiates from human intelligence.

Each of these probabilities must be expressed as the Chain Rule of Probability — typically called as Autoregressive Model.

Predicting the Next Word

Predicting the next word — applies two concepts : Tokenisation and Autoregressive Model.

Tokenisation: Each word , character, syllable and maybe even a sentence cld treated as a token — This what builds on predictability later.

However, a tokeniser considers a sequence of characters (~3 letters) or a common subsequence as a valid Token.

For Example : ‘I am Aditi. I like reading about llms. I also like chocolates’

Here each character, repeating words , and other words could be tokens.

Note : Each Token has it’s own unique ID.

Autoregressive models — are used to predict a plausible next word — using the probability of a token coming in place after a certain selected word. A plausible combination of words are given weights accordingly for them to be able to generate a semantically and syntactically correct sentence.

How are LLMs evaluated :

Building a large language model (LLM) is only half the story. The real question is: How do you know if it’s any good?

That’s where evaluation comes in.

1. Perplexity: The “How Surprised Are You?” Test

One of the classic ways to evaluate language models — especially during pretraining — is by using something called perplexity.

Think of perplexity as a measure of how surprised your model is when it sees the next word in a sentence. A lower perplexity means the model is less surprised — it predicted the word well. A higher perplexity means the model had no clue.

For example:

If your model sees:

“The cat sat on the ___”

and confidently predicts “mat,” that’s low perplexity.But if it predicts something odd like “government,” perplexity shoots up.

Mathematically:

Perplexity = e^{cross-entropy loss}

It’s the exponentiation of the average negative log-likelihood of the correct word.

Key takeaway: Lower perplexity = better model at predicting language.

However: Perplexity only measures how good the model is at predicting next words, not necessarily how helpful or factual its answers are in real-world usage (this is where human evals & task-specific metrics come in).

2. Cross-Validation: The “Don’t Fool Yourself” Check

In classic machine learning, we often use cross-validation to make sure our models generalize well — not just memorize the training data.

The idea is simple: Split your data into K chunks (folds). Train on K-1 folds and test on the remaining one. Repeat this for each fold.

This ensures your model is tested on every part of the data at least once.

In LLMs, though, cross-validation isn’t used in the same way because:

The datasets are massive (terabytes), so splitting and retraining multiple times is computationally infeasible.

Instead, a train/validation/test split is more common.

Sometimes, cross-validation ideas are borrowed in smaller setups like fine-tuning small models or domain-specific models.

You can apply cross-validation when:

You’re working with smaller fine-tuning datasets (e.g., customer service chats, legal documents, healthcare data).

You need robust estimates of model performance before deploying.

Data

The most important, sensitive and tedious part of building an LLM is the Data. Yes, a lot of research has to be done. There are a lot of constraints. You need to be careful what you choose as this could be the primary factor to drive analysis, biasness and decision taking abilities of your model.

Conventionally , More data should lead to larger models converting to Overfitting — too many clusters or points close to eachother on a graph — when one is unable to detect a model’s decision boundary (outliers getting undetected or so) . However, while training LLMs — it is noted that more the data , better the performance.

This was not conceived naturally, it was a result of observation. As we think about it — it probably makes sense — To generate data — one needs data , data which covers more aspects than one.

HyperParameter Tuning

The Art of Finding the “Just Right” Settings for Your Model

When you build a machine learning model (or even a deep learning model like GPT), there are certain settings you have to choose before the training even begins. These settings are called hyperparameters — they control how the model learns, not what the model learns.

When you’re training small machine learning models, hyperparameter tuning is all about finding the right knobs to twist: learning rate, batch size, model size, regularization, etc.But when you move into LLMs — we’re talking billions or trillions of parameters — the game changes. This is where Scaling Laws come in.

When you scale models massively, your hyperparameters don’t stay fixed. The values that worked for a 100M parameter model won’t work for a 10B or 100B parameter model. This is where hyperparameter scaling — or hyperparameter tuning guided by scaling laws — becomes crucial.

So we need to learn patterns — that are Scaling Laws.

Scaling laws are emerging patterns that arise as we train smaller models. For example: Learning Rate shows the following trend :

Needs to be adjusted as model size grows.

Too high → Divergence. Too low → Wasted compute.

Weight Decay / Regularization:

- Needs careful balancing to avoid overfitting in smaller models or underfitting in gigantic ones.

Post-Training

This is that segment of building an LLM that makes it capable to assist a user. Post-training refers to everything you do after the base language model has been pretrained on massive amounts of text (like books, articles, web pages). Pretraining teaches the model how language works but not necessarily how to be useful, safe, or aligned to human needs.

There are three main algorithms that are used for PostTraining :

Supervised Fine-Tuning

Reinforcement Learning

Instruction Tuning

Supervised Fine-Tuning (SFT’s)

The idea behind SFTs is that one can do Language Modelling (predicting the next word) of the desired answers (supervised).

Interestingly, one doesn’t need a lot of data (only few thousands) to train a model on SFT. It is really about making intuition, tone and safety a part of the prediction that an LLM is expected to do. It is trained to not generate violating content — through SFT.

Should the response be bulleted , or in paragraphs ? SFT trains the model.

Reinforcement Learning from Human Feedback (RHLF)

The problem with SFT is that it is about cloning human behaviour. It learns what is expected from it , basically teaching mannerisms to a model. But, it comes with it’s own problems.

Humans will generally prefer a response that they could not think of. They will like a new response and would appreciate it — but that shouldn’t suggest the model that the quality of the response was good every time. Secondly, LLMs tend to hallucinate. Hallucination by LLMs is also misunderstood several times.

If I ask it give me a review on a book it was never trained on- it will infer from the title, the meta data (if provided) and will generate an answer that fits the requirement , but that doesn’t mean it would be factually correct. It will be totally believable until you do a fact check — and that is Hallucination.

So, how does this work ?

Human — Rank Responses

The model generates multiple responses to a prompt. Humans rank these responses: Which one is best? Which is worse?

This creates pairwise comparisons of answers.

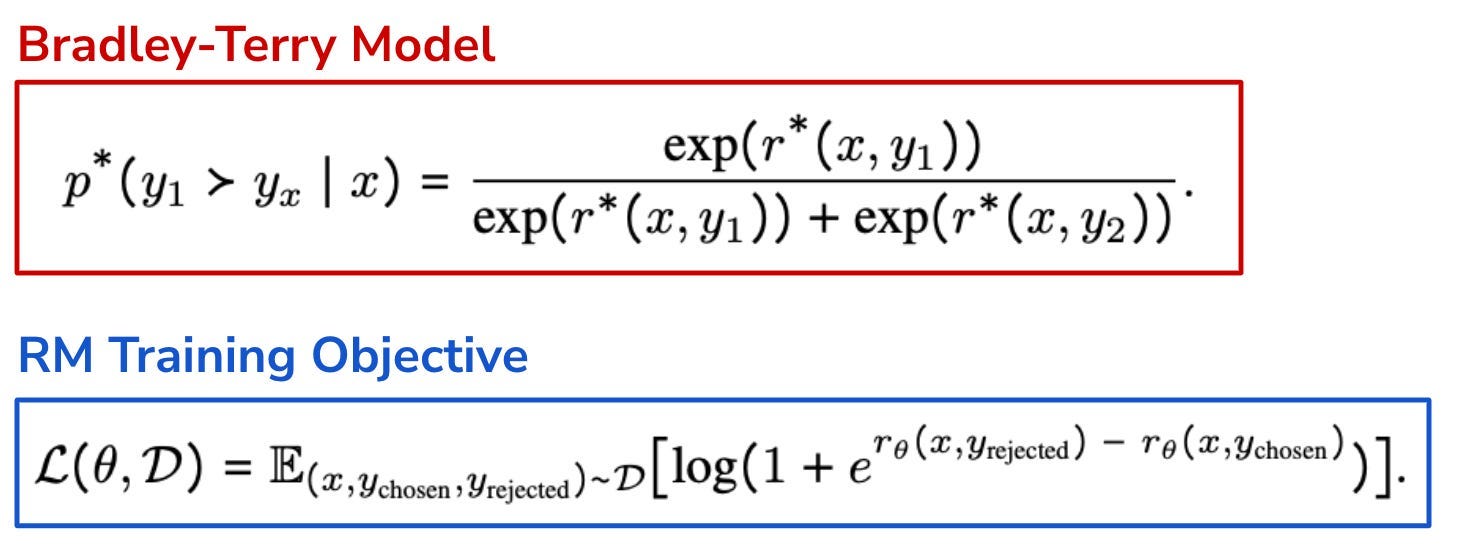

Bradley-Terry Equation

Imagine you’re rating two football teams: Team A and Team B. Let’s say Team A has a skill score of 1.2, while Team B has a skill score of 0.8. According to the Bradley-Terry model, the probability that Team A wins is determined by the ratio of the exponential of its score to the sum of the exponentials of both teams’ scores. Mathematically, this is expressed as:

P(A beats B)= e^1.2 / (e^1.2+e^ 0.8)

This is effectively a softmax over the two scores, where the higher the score, the greater the probability of winning. The larger the difference between the scores, the more confident the model becomes in its prediction.

In the context of RLHF (Reinforcement Learning from Human Feedback), the exact same principle is used, but instead of football teams, we have two responses to a prompt generated by the language model. Instead of predicting who wins a match, we’re modeling which response humans prefer. The reward model assigns each response a numerical score, and the probability that one response is preferred over another is computed using the same softmax formula. This allows the model to mathematically align its predictions with human preferences, providing a foundation for learning from feedback.

Lastly, Proximal Policy Optimisation

Now that we have a reward signal — thanks to the human preference data and the trained reward model — the next step is to actually fine-tune the large language model (LLM) so that it consistently generates outputs that score higher according to this reward model. This is where Reinforcement Learning (RL) comes in, and specifically, where Proximal Policy Optimization (PPO) becomes crucial to the process.

PPO is the algorithm most commonly used in RLHF (Reinforcement Learning from Human Feedback) for language models because it strikes a careful balance between learning and stability. In traditional reinforcement learning, models are updated by maximizing rewards. However, when dealing with LLMs — which can have billions or even trillions of parameters — even small changes to the model’s weights can lead to catastrophic shifts in behavior. These shifts might cause the model to lose its language fluency, generate nonsensical responses, or exhibit erratic behavior. This is why safe exploration and controlled updates are essential in this setting.

PPO addresses this by introducing a clipped objective function that prevents the new model (the updated policy) from straying too far from the old model (the original policy). It does this by calculating the probability ratio between the new and old policies and ensuring that updates are only made if this ratio stays within a small, acceptable range. If the model’s behavior changes too much in one step, the update is clipped — effectively acting like a safety belt that protects against drastic deviations.

This mechanism allows PPO to gradually guide the model towards better behaviors, such as producing responses that are more helpful, polite, truthful, or safe (as determined by the reward model), while at the same time preserving the underlying language generation capabilities that the base model already has. Without this careful balancing act, the model might “over-optimize” for the reward and start generating repetitive, robotic, or unnatural text just to maximize scores — this is called reward hacking.

In short, PPO helps fine-tune the model in a way that improves its alignment with human values and preferences without destabilizing its core language abilities. It allows the model to learn how to behave better while making sure that these improvements are incremental, reversible, and controlled — a critical requirement when working with extremely large models that are costly to retrain or repair. This combination of alignment and stability is why PPO is the algorithm of choice in RLHF pipelines for assistant LLMs like ChatGPT.

And that brings us to the end of this blog.

I hope you now have a clearer understanding of what goes on behind the scenes when building a Large Language Model. I’ve done my best to break down the concepts and simplify them so that even someone new to the field — whether a fresher or a curious beginner — can grasp the core ideas and, hopefully, feel inspired to explore further. After all, every expert once started with curiosity.

Subscribe to my newsletter

Read articles from Aditi Saxena directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by