🛡️Building a Secure ML API for Text Emotion Detection Using FastAPI

Farhan Ali Khan

In this article, I’ll walk you through a simple yet security-focused machine learning API I built for text emotion detection. The goal was to expose a locally hosted ML model for inference via a REST API without compromising on security or bloating the deployment.

Note that this project focuses on applying security measures such as JWT authentication, logging, and rate limiting. Due to storage constraints, I haven’t dockerized it yet, but a Dockerfile is included in the repo and can be used to build the image and run the API in a container.

Overview

I built an API in FastAPI that accepts a text string and returns the predicted emotion (e.g., joy, sadness, anger). The backend uses a fine-tuned DistilBERT model from Hugging Face.

Request (to the protected endpoint):

    curl -X 'POST' \
      'http://127.0.0.1:8000/predict' \
      -H 'accept: application/json' \
      -H 'Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiJ1c3IiLCJleHAiOjE3NTMxODk4NzJ9.oDvX6khWjW1-IJgNv-_8AaCT9UcDQTIz_boqfET5YSk' \
      -H 'Content-Type: application/json' \
      -d '{
      "text": "I really loved that movie. It had a great plot"
    }'

Response:

    {
      "response": {
        "top_prediction": {
          "label": "joy",
          "score": 0.991
        },
        "all_predictions": [
          {"label": "sadness", "score": 0.0005},
          {"label": "joy", "score": 0.991},
          {"label": "love", "score": 0.0077},
          {"label": "anger", "score": 0.0003},
          {"label": "fear", "score": 0.0001},
          {"label": "surprise", "score": 0.0004}
        ]
      }
    }

Security First: Principles Applied

I believe an API is only as secure as the person who builds it, so I focused on making this one as secure as I reasonably could. Here’s what I did:

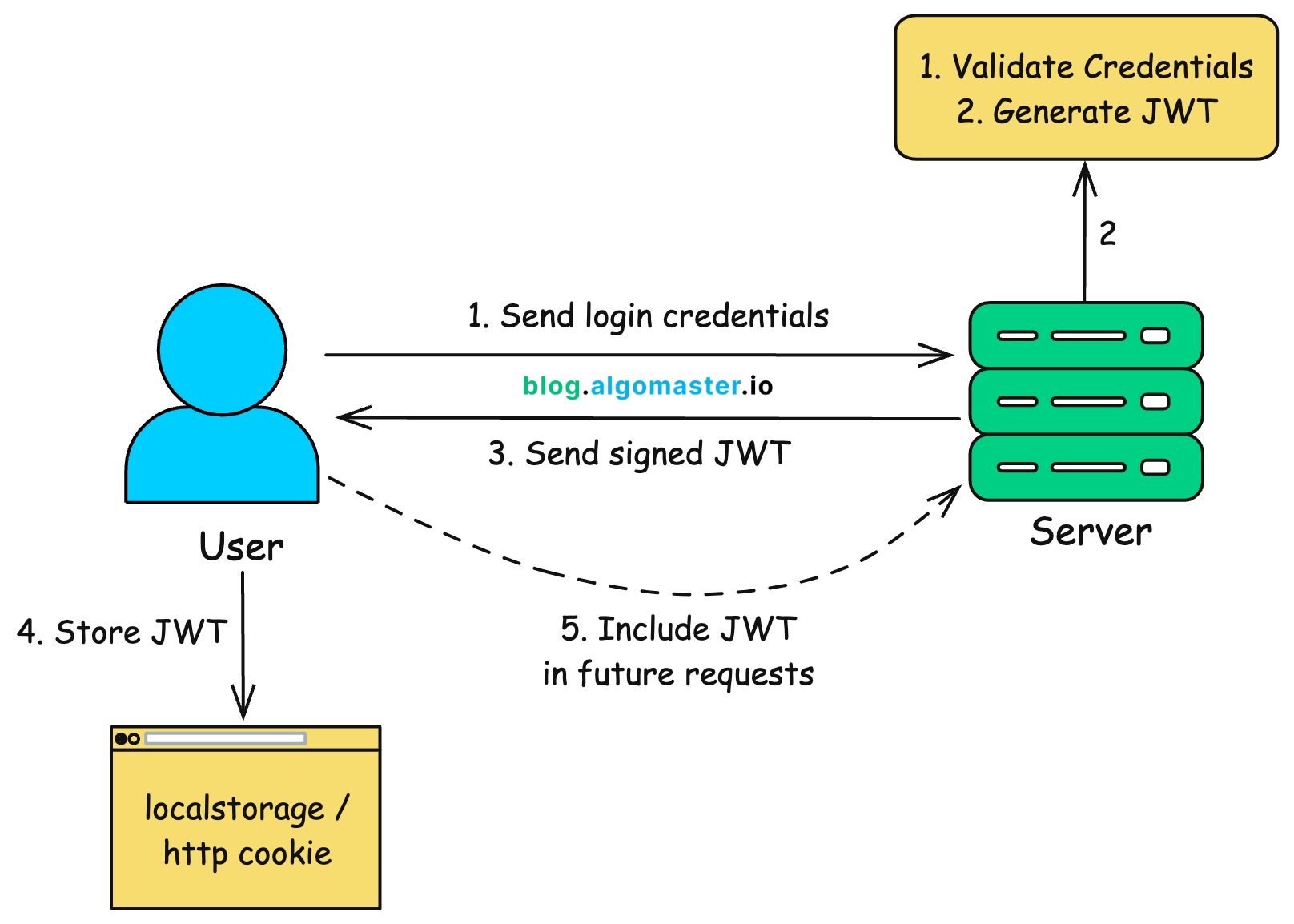

1. JWT Authentication

I used JSON Web Tokens (JWT) for user authentication.

A /login route issues the token.

Every call to /predict requires a Bearer token in the header.

Source: https://blog.algomaster.io/p/json-web-tokens

2. Rate Limiting (Abuse Prevention)

To avoid abuse, brute-force attempts, and potential Denial-of-Service (DoS)-style attacks, I added a simple in-memory rate limiter to the FastAPI app.

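    import time
    from collections import defaultdict
    from typing import Dict

    from fastapi import HTTPException, Request, status

    # Time of the last accepted request per client IP (in memory, per process)
    rate_limiting_records: Dict[str, float] = defaultdict(float)

    def rate_limiter(request: Request):
        # request.client can be None behind some servers and test clients
        client_ip = request.client.host if request.client else "unknown"
        curr_time = time.time()
        # Allow at most one request per second from each IP
        if curr_time - rate_limiting_records[client_ip] < 1:
            raise HTTPException(status_code=status.HTTP_429_TOO_MANY_REQUESTS, detail="Rate limit exceeded. Try again later.")
        rate_limiting_records[client_ip] = curr_time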

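I then attached this function as a dependency to each endpoint I wanted to rate-limit. Strictly speaking, this is a FastAPI dependency rather than middleware, so it only runs for the routes that declare it.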

    @app.post("/predict", response_model=Output)
    def inference(RateLimiter = Depends(rate_limiter)):
        # ... (rest of the handler omitted)
        return JSONResponse(content={"response": prediction}, status_code=200)
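The limiter above allows one request per second per IP. If you want a configurable budget instead, one common alternative is a sliding-window limiter. The sketch below is not what the project uses: `SlidingWindowLimiter` and its parameters are hypothetical names, and the clock is injectable purely so the behavior can be tested without sleeping.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(
        self,
        max_requests: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        # Timestamps of accepted requests, oldest first, per client
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


# Usage with a fake clock: two requests per second per client
fake_time = [0.0]
limiter = SlidingWindowLimiter(2, 1.0, clock=lambda: fake_time[0])
first, second, third = (limiter.allow("10.0.0.1") for _ in range(3))
```

Inside a dependency like `rate_limiter`, a `False` result from `allow` would translate into the same 429 response. Like the original, this state lives in one process's memory, so it resets on restart and is not shared across multiple workers.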

Why Rate Limiting Matters

Protects against abuse: Prevents bots or users from hammering the endpoint.

Reduces DoS risk: Slows down attackers trying to flood your service.

Guards ML resources: Prevents overuse of the model prediction logic which may be computationally expensive.

3. Input & Output Data Validation Using Pydantic

At the heart of FastAPI's request and response handling lies Pydantic, a powerful data validation and settings management library based on Python type annotations.

When we create APIs, ensuring that users send correct, well-formed data is essential — not just to prevent bugs, but also to secure our backend from malformed or malicious input.

Let’s take a look at the registration, input, and output (response) schemas of our Secure ML API:

    from typing import Dict, List, Union

    from pydantic import BaseModel, Field

    class UserCreate(BaseModel):
        username: str = Field(..., description="Username for the new user", example="john_doe")
        password: str = Field(..., description="Password for the new user", example="securepassword123")

    class Input(BaseModel):
        text: str = Field(description="Input text for emotion prediction")

    class Output(BaseModel):
        # Each prediction maps "label" to a string and "score" to a float,
        # so the value type must allow both.
        top_prediction: Dict[str, Union[float, str]] = Field(
            description="Top predicted emotion with its score",
            example={"label": "joy", "score": 0.9876},
        )
        all_predictions: List[Dict[str, Union[float, str]]] = Field(
            description="All predicted emotions with their scores",
            example=[
                {"label": "joy", "score": 0.9876},
                {"label": "sadness", "score": 0.0123},
                {"label": "anger", "score": 0.0001},
                {"label": "fear", "score": 0.0001},
                {"label": "love", "score": 0.0001},
            ],
        )

How does it add security?

Reduces attack surface: Invalid input never reaches your ML model or internal logic.

Prevents injection and type coercion bugs: You’re working with strictly typed, clean data.

Easier to maintain and audit: All your input structure is clearly defined and enforced.
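As a small illustration of the first point, the sketch below shows invalid payloads being rejected before any model code would run. `BoundedInput` is a hypothetical variant of the `Input` schema, and the length bounds (1 to 1000 characters) are arbitrary values chosen for the example, not limits taken from the project.

```python
from typing import Optional, Tuple

from pydantic import BaseModel, Field, ValidationError


class BoundedInput(BaseModel):
    # Hypothetical schema: same field as Input, plus assumed length bounds
    text: str = Field(
        ...,
        min_length=1,
        max_length=1000,
        description="Input text for emotion prediction",
    )


def try_parse(payload: dict) -> Tuple[Optional[BoundedInput], int]:
    """Return (model, 0) on success or (None, number_of_errors) on failure."""
    try:
        return BoundedInput(**payload), 0
    except ValidationError as exc:
        return None, len(exc.errors())


ok, _ = try_parse({"text": "I really loved that movie."})
empty, empty_errors = try_parse({"text": ""})
missing, missing_errors = try_parse({})
too_long, _ = try_parse({"text": "x" * 1001})
```

When a schema like this is used as the request body type of an endpoint, FastAPI performs the same validation automatically and answers with a 422 response, so the handler only ever sees a well-formed object.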

Final Thoughts

Building a secure ML API taught me that, as a backend engineer, I should never treat security as an afterthought. From request validation with Pydantic to token-based authentication and simple rate limiting, each layer adds more protection and reliability to the system.

Security isn’t just about using tools; it’s about thinking like an attacker, anticipating abuse, and designing proactively. FastAPI made implementing these features intuitive, and now I feel more confident deploying APIs, even those involving sensitive AI workloads.
