Reinforcement Learning

Kunal Kumar Sahoo

Kunal Kumar Sahoo

Introduction

Reinforcement Learning (RL) has emerged as one of the most fascinating and influential paradigms in modern artificial intelligence (AI). Its charm lies in its resemblance to how animals and humans learn through interaction with the world, trial and error, and feedback from outcomes. From controlling game-playing agents that rival human champions to powering robotics, recommendation engines, and autonomous vehicles, RL has proven its versatility and depth. As a PhD student embarking on a journey into RL, one often finds that its appeal comes not just from its applications, but from the elegance of its mathematics, the complexity of its challenges, and the interdisciplinary nature of its development.

Prerequisites

To understand RL in its full scope, however, one must first be equipped with certain foundational knowledge. The prerequisites for diving into RL span several domains. At its core, RL is a sequential decision-making problem grounded in probability and optimization. A working knowledge of linear algebra and multivariable calculus is essential to understand how gradients propagate through networks and how policies are optimized. Equally important is a solid grasp of probability theory and statistics, RL inherently deals with uncertainty, whether modeling state transitions, estimating returns, or evaluating policies.

A basic understanding of data structures and algorithms is crucial, not just for implementing RL algorithms efficiently, but also for understanding the logic planned behind experience replay buffers, exploration strategies, and tree-based planning methods. Furthermore, familiarity with machine learning fundamentals, especially supervised learning is preferred to appreciate the contrast RL presents. Unlike supervised learning where labeled data is available, RL operates in environments where feedback is sparse and often delayed. And finally, those with exposure to control theory or dynamic programming will find it easier to grasp concepts like value iteration, policy iteration, and Bellman equations, which form the theoretical bedrock of reinforcement learning.

History

The roots of RL stretch back to 20th century developments in control theory and dynamic programming. The pioneering work of Richard Bellman in the 1950s introduced the principle of optimality and the recursive structure of decision problems, now enshrined in Bellman equation. Around the same time, the concept of Markov Decision Processes (MDPs) emerged, providing a formal framework for modeling decision-making in environments with stochastic dynamics. While early work in control theory focused on model-based strategies with known dynamics, RL began to distinguish itself through its learning from interaction, often in the absence of complete environment models.

In 1980s and 1990s saw significant strides in model-free RL. Temporal Difference (TD) learning, introduced by Richard Sutton in 1988, provided a means to learn value functions from raw experience by bootstrapping from future estimates. This marked a departure from traditional Monte Carlo methods and enabled faster, online learning. Not long after, Christopher Watkins introduced Q-learning, a now famous algorithm that learns the expected value of action-state pairs and converges to optimal policy under certain conditions. These early methods laid the groundwork for more sophisticated algorithms demonstrated RL’s potential in small-scale problems.

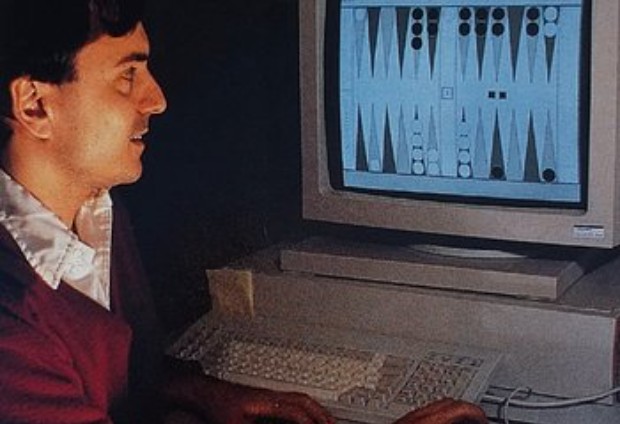

A major breakthrough came in 1990s with Gerald Tesauro’s TD Gammon, an RL-based backgammon-playing program that rivaled expert human players. This system used a neural network to approximate value functions and demonstrated that combining RL with function approximation could lead to powerful generalization capabilities. This approach was ahead of its time, foreshadowing the eventual fusion of deep learning and RL that would redefine the field in the next century.

The real explosion of interest in RL began in the 2010s with the advent of deep reinforcement learning (Deep RL). In 2013, DeepMind introduced the Deep Q-Network (DQN), which successfully combined Q-learning with deep convolutional networks and achieved superhuman performance in a wide range of Atari games. What made DQN remarkable was its ability to learn directly from pixels, using only the game score as a reward signal. This marked a turning point: RL was no longer confined to toy domains and could now tackle large scale, high-dimensional problems.

Following DQN, the field rapidly diversified. New techniques like Double DQN, Dueling DQN, and Prioritized Experience Replay addressed the stability and sample efficiency issues in the original problem formulation. Around the same time, researchers began exploring policy gradient methods, which directly optimize policies rather than estimating value functions. Trust Region Policy Optimization(TRPO) and its successor Proximal Policy Optimization (PPO), became popular due to their stability and performance in continuous action spaces. Deep Deterministic Policy Gradient (DDPG) and Soft Actor Critic (SAC) further advanced the frontier, especially in robotics and control tasks requiring smooth and real-valued actions.

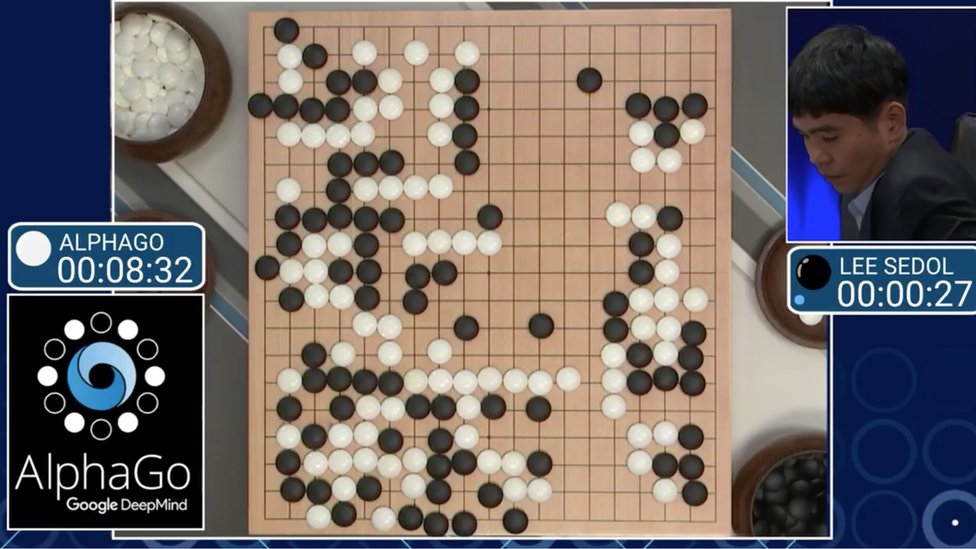

One of the most high-profile demonstrations of RL came in 2016 with the advent of AlphaGo, an RL-powered system that defeated top human players in the ancient game of Go. AlphaGo employed a combination of supervised learning from expert games, reinforcement learning through self-play, and Monte Carlo Tree Search for planning. Its successors, AlphaZero and MuZero, removed the need for human data and even explicit environment models, relying solely on learning from interaction and internal prediction. MuZero, in particular, illustrated how powerful model-based RL could be when coupled with representation learning.

Current times

With these successes, the field of RL expanded into multiple subdomains and began to influence other areas of AI. Model-based RL, where agents learn a predictive model of the environment to simulate and plan future trajectories, gained renewed attention due to its sample efficiency. Offline or batch RL emerged as a critical area for domains like healthcare and autonomous driving, where online exploration can be unsafe or impractical. Algorithms such as Conservative Q-Learning (CQL), Implicit Q-Learning (IQL), and EDAC were developed to learn safely and effectively from fixed datasets.

The boundaries of RL also began to blur as it merged with fields like meta-learning and natural language processing. In meta-RL, the goal is to build agents that can rapidly adapt to new tasks based on past experience, is kind of “learning to learn”. In the realm of language models, reinforcement learning from human feedback (RLHF) became central to aligning to large generative models with human preferences, exemplified by the fine-tuning of GPT-style models.

As of 2025, RL is at the cusp of yet another evolution. The current state-of-the-art methods emphasize not only performance but also safety, interpretability, and generalization. Algorithms like Mamba, a sequence-modeling framework using selective state-space mechanisms, aim to improve long-horizon credit assignment and learning stability. In parallel, explainable reinforcement learning (XRL) has become an active research area, tackling the challenge of interpreting complex agent behavior, which is a necessity for trust in high-stakes applications.

Industry interest in RL has never been higher. Market analyses suggest that the global RL market is growing at an annual rate exceeding 60%, driven by applications in robotics, logistics, finance, and automation. In robotics, RL is being used to teach dexterous manipulation, locomotion, and adaptive control without human intervention. In finance, RL algorithms are empowering portfolio optimization, dynamic hedging, and high-frequency trading. In smart infrastructure, RL controls traffic lights and optimizes energy consumption in data centers. In healthcare, RL is used to personalize treatment plans, optimize drug dosing strategies, and assist in robotic surgeries.

What makes RL truly interdisciplinary is its deep connection with other fields. From control theory and operations research, RL inherits techniques for optimal planning and regulation. From psychology and neuroscience, it draws parallels with how brain processes rewards and learns from feedback. Game theory informs its approaches to multi-agent systems, where agents must cooperate or compete. Optimization theory underpins the training of deep policies and critics, and simulation science provides testbeds where RL agents are born and evaluated.

As you venture into RL, your path will traverse elegant theory and messy real-world challenges. You will grapple with the exploration-exploitation dilemma, confront the instability of training deep networks with sparse rewards, and strive to generalize policies beyond training environments. You will explore new frontiers like learning from demonstrations, adapting across tasks, reasoning under uncertainty, and interacting ethically and transparently with humans.

Getting started

To get started on this journey, there are a few guiding resources that stand out. The textbook "Reinforcement Learning: An Introduction" by Sutton and Barto remains a foundational reference. OpenAI's "Spinning Up in Deep RL" is an excellent practical guide with annotated implementations and key papers. Reading and reproducing classic papers like Mnih et al. (2015) on DQN, Schulman et al. (2017) on PPO, and Silver et al. (2016) on AlphaGo will offer both conceptual clarity and engineering insights. From there, exploring recent conference proceedings from NeurIPS, ICML, and ICLR will keep you abreast of the latest innovations.

Conclusion

In conclusion, reinforcement learning is not just a toolkit, it is a paradigm for understanding and building intelligent behavior. It brings together learning and acting, representation and planning, theory and application. Its evolution over the past decades is a testament to the power of interdisciplinary thinking and iterative innovation. As a researcher stepping into this field, you are not just learning algorithms, you are participating in the ongoing story of artificial intelligence itself.

And in this story, the next chapter could very well be yours to write!

P.S.: The baby walking signifies how we humans reinforce our learnings from our mistakes!

Subscribe to my newsletter

Read articles from Kunal Kumar Sahoo directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Kunal Kumar Sahoo

Kunal Kumar Sahoo

I am a CS undergraduate with passion for applied mathematics. I like to explore the avenues of Artificial Intelligence and Robotics, and try to solve real-world problems with these tools.