Handling Errors When Importing CSV Data into a Database in Spring Boot

Tuanhdotnet


1. Why CSV Data Import Is Tricky

Importing data from CSV files is deceptively simple. At first glance, it might seem like just reading lines and inserting them into a database. But real-world scenarios involve:

- Invalid Data: Missing fields, incorrect formats, or unsupported characters.

- Duplicate Entries: Repeated records that violate unique constraints.

- Large File Sizes: Overloading memory when dealing with millions of records.

- Concurrency Issues: Multiple users uploading files simultaneously.

- Partial Failures: Some records succeed while others fail, leaving the database in an inconsistent state.

Each of these challenges demands careful planning and robust error-handling mechanisms.

1.1 Reading the CSV File

Spring Boot does not ship a CSV parser of its own, but third-party libraries such as OpenCSV or Apache Commons CSV are easy to add as dependencies. To see the basic mechanics first, let’s start with a plain-Java example that reads a CSV file line by line.

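```java
import org.springframework.stereotype.Component;

import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

@Component
public class CSVReader {

    public List<String[]> readCSV(String filePath) throws IOException {
        List<String[]> data = new ArrayList<>();
        // Read explicitly as UTF-8 instead of relying on the platform default charset
        try (BufferedReader br = Files.newBufferedReader(Paths.get(filePath), StandardCharsets.UTF_8)) {
            String line;
            while ((line = br.readLine()) != null) {
                // Naive split; the -1 limit keeps trailing empty fields such as "a,b,"
                String[] values = line.split(",", -1);
                data.add(values);
            }
        } catch (IOException e) {
            // Include the path so the failing file is easy to identify in logs
            throw new IOException("Error reading CSV file " + filePath + ": " + e.getMessage(), e);
        }
        return data;
    }
}
```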

In this code:

- BufferedReader is used to read the file line by line.

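- Each line is split on commas. This is a simplistic approach: it breaks on quoted fields that contain commas, escaped quotes, or line breaks, and it returns the header row (if there is one) as ordinary data.

Improvement: A dedicated parser such as OpenCSV or Apache Commons CSV handles quoted fields (as described in RFC 4180) and avoids these edge cases.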
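To show what handling quoted fields involves, here is a minimal quote-aware splitter in plain Java. `QuoteAwareSplitter` is a hypothetical helper written for illustration, not part of OpenCSV or Commons CSV. It treats commas inside double quotes as data and a doubled quote (`""`) as an escaped quote, following RFC 4180, but it works on one line at a time and therefore does not support line breaks inside quoted fields.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration only; prefer a maintained CSV library in production
final class QuoteAwareSplitter {

    /** Splits a single CSV line, honouring "quoted, fields" and "" as an escaped quote. */
    static List<String> split(String line) {
        List<String> fields = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        for (int i = 0; i < line.length(); i++) {
            char c = line.charAt(i);
            if (inQuotes) {
                if (c == '"') {
                    if (i + 1 < line.length() && line.charAt(i + 1) == '"') {
                        current.append('"'); // doubled quote inside a quoted field
                        i++;                 // skip the second quote
                    } else {
                        inQuotes = false;    // closing quote
                    }
                } else {
                    current.append(c);       // commas here are part of the value
                }
            } else if (c == '"') {
                inQuotes = true;             // opening quote
            } else if (c == ',') {
                fields.add(current.toString()); // field boundary
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        if (inQuotes) {
            throw new IllegalArgumentException("Unterminated quoted field: " + line);
        }
        fields.add(current.toString()); // last field (possibly empty)
        return fields;
    }
}
```

For example, `split("a,\"b,c\",d")` yields three fields, `a`, `b,c`, and `d`, where `line.split(",")` would yield four. A hand-rolled splitter like this is mainly useful for understanding the failure modes; a library also covers line breaks inside quotes, other delimiters, and header mapping.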

1.2 Validating Data

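Data validation is crucial to avoid importing corrupted or incomplete data. Combine Jakarta Bean Validation annotations (added to a Spring Boot project with spring-boot-starter-validation) with custom checks, such as verifying that each row has the expected number of columns.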

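```java
// Spring Boot 3 uses jakarta.validation; on Spring Boot 2 the same annotations live in javax.validation
import jakarta.validation.constraints.Email;
import jakarta.validation.constraints.NotBlank;
import jakarta.validation.constraints.Pattern;

public class CSVRecord {

    @NotBlank(message = "Name cannot be blank")
    private String name;

    @Email(message = "Invalid email format")
    private String email;

    // The backslash must be escaped in a Java string literal: "\\d{10}", not "\d{10}"
    @Pattern(regexp = "\\d{10}", message = "Phone number must be 10 digits")
    private String phone;

    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
    public String getEmail() { return email; }
    public void setEmail(String email) { this.email = email; }
    public String getPhone() { return phone; }
    public void setPhone(String phone) { this.phone = phone; }
}
```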

Add validation during processing:

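```java
import jakarta.validation.ConstraintViolation;
import jakarta.validation.ConstraintViolationException;
import jakarta.validation.Validator;
import org.springframework.stereotype.Service;

import java.util.Arrays;
import java.util.List;
import java.util.Set;

@Service
public class CSVProcessor {

    private final Validator validator;

    // Spring Boot auto-configures a Validator bean when spring-boot-starter-validation is
    // on the classpath, so there is no need to build a ValidatorFactory for every record
    public CSVProcessor(Validator validator) {
        this.validator = validator;
    }

    public void validateAndSave(List<String[]> records) {
        for (String[] record : records) {
            try {
                CSVRecord csvRecord = mapToRecord(record);
                validate(csvRecord);
                saveToDatabase(csvRecord);
            } catch (ConstraintViolationException | IllegalArgumentException e) {
                // Log and skip the bad row; the remaining rows are still processed
                logError(record, e);
            }
        }
    }

    private CSVRecord mapToRecord(String[] record) {
        // Reject short rows explicitly instead of failing with ArrayIndexOutOfBoundsException
        if (record.length < 3) {
            throw new IllegalArgumentException("Expected 3 columns but found " + record.length);
        }
        CSVRecord csvRecord = new CSVRecord();
        csvRecord.setName(record[0].trim());
        csvRecord.setEmail(record[1].trim());
        csvRecord.setPhone(record[2].trim());
        return csvRecord;
    }

    private void validate(CSVRecord csvRecord) {
        Set<ConstraintViolation<CSVRecord>> violations = validator.validate(csvRecord);
        if (!violations.isEmpty()) {
            throw new ConstraintViolationException(violations);
        }
    }

    private void saveToDatabase(CSVRecord csvRecord) {
        // Save to the database logic
    }

    private void logError(String[] record, Exception e) {
        System.err.println("Error processing record: " + Arrays.toString(record) + " - " + e.getMessage());
    }
}
```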
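The same three rules can also be written without a Bean Validation provider, for example to unit-test them in isolation or to see exactly what each annotation checks. `RowValidator` below is a hypothetical approximation, not part of any library: its email pattern is far looser than the real `@Email` implementation, although it mirrors `@Email` in accepting an empty value and `@Pattern` in requiring the whole value to match.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical, dependency-free approximation of the CSVRecord constraints
final class RowValidator {

    // Much looser than @Email: only "something@something" without whitespace
    private static final Pattern EMAIL = Pattern.compile("[^@\\s]+@[^@\\s]+");
    // Same expression as the @Pattern annotation; matches() requires the whole value to match
    private static final Pattern PHONE = Pattern.compile("\\d{10}");

    /** Returns every problem found in the row; an empty list means the row passed. */
    static List<String> validate(String[] row) {
        List<String> errors = new ArrayList<>();
        if (row.length < 3) {
            errors.add("Expected 3 columns but found " + row.length);
            return errors; // the remaining checks need all three columns
        }
        if (row[0].isBlank()) {
            errors.add("Name cannot be blank"); // like @NotBlank: empty or whitespace-only fails
        }
        if (!row[1].isEmpty() && !EMAIL.matcher(row[1]).matches()) {
            errors.add("Invalid email format"); // like @Email, an empty value is accepted
        }
        if (!PHONE.matcher(row[2]).matches()) {
            errors.add("Phone number must be 10 digits"); // an empty string fails, as with @Pattern
        }
        return errors;
    }
}
```

Returning every message for a row, rather than stopping at the first problem, mirrors the set of violations that `validator.validate` returns and gives whoever uploaded the file a complete list of what to fix.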

1.3 Handling Duplicate Entries

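When the file contains rows that already exist, a unique constraint in the database rejects the insert with an exception. Either let the database resolve the conflict with an upsert (MySQL’s INSERT ... ON DUPLICATE KEY UPDATE or PostgreSQL’s INSERT ... ON CONFLICT ... DO UPDATE), or detect the duplicate in code before saving: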

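```java
import java.util.Optional;
import org.springframework.transaction.annotation.Transactional;

// @Transactional only applies when this method is called through the Spring proxy,
// i.e. from another bean, not from a method in the same class
@Transactional
public void saveToDatabase(CSVRecord csvRecord) {
    // findByEmail is a Spring Data derived query declared on UserRepository:
    // Optional<User> findByEmail(String email);
    Optional<User> existingUser = userRepository.findByEmail(csvRecord.getEmail());
    if (existingUser.isPresent()) {
        System.out.println("Duplicate record found: " + csvRecord.getEmail());
        return; // Skip or update existing record
    }
    // Check-then-insert is not atomic: two concurrent uploads can both pass the check.
    // Keep a unique constraint on email so the database rejects the second insert
    // (surfacing as DataIntegrityViolationException), and handle that in the caller.
    userRepository.save(csvRecord.toEntity()); // toEntity() maps the record to the User entity (not shown)
}
```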
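The repository lookup above costs one query per row. A cheap complement is to drop repeats inside the file itself before any database call. The sketch below is hypothetical: it assumes the email is in column 1, that rows were already checked for column count, and it compares emails case-insensitively, which is common practice but an assumption (the local part of an address is technically case-sensitive).

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical helper: removes duplicate emails within a single file
final class InFileDeduplicator {

    /**
     * Keeps the first row for each email (column 1) and reports later repeats in skipped.
     * Emails are compared after trimming and lower-casing.
     */
    static List<String[]> dropDuplicateEmails(List<String[]> rows, List<String> skipped) {
        Set<String> seen = new HashSet<>();
        List<String[]> unique = new ArrayList<>();
        for (String[] row : rows) {
            String key = row[1].trim().toLowerCase(Locale.ROOT);
            if (seen.add(key)) {        // add() returns false if the key was already present
                unique.add(row);        // first occurrence: keep
            } else {
                skipped.add(row[1]);    // repeat within this file: skip and report
            }
        }
        return unique;
    }
}
```

The `seen` set grows with the number of distinct emails, so this trades memory for fewer queries. The database’s unique constraint remains the final guard against duplicates across files and concurrent uploads.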

1.4 Managing Large Files

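To handle large files, stream the CSV record by record instead of loading it entirely into memory; the readCSV method in section 1.1 keeps every row in a List, which does not scale to millions of records. Writing to the database in batches, rather than one row at a time, keeps the import fast.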

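```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.stream.Stream;

public void processLargeCSV(String filePath) throws IOException {
    // Files.lines reads lazily, so only the current line is held in memory;
    // try-with-resources closes the file when the stream is done
    try (Stream<String> lines = Files.lines(Paths.get(filePath), StandardCharsets.UTF_8)) {
        lines.skip(1) // Skip header
             .map(this::parseLine)
             .forEach(this::processRecord);
    }
}

private String[] parseLine(String line) {
    // Line-based and naive (see section 1.1): quoted fields containing commas
    // or line breaks need a real CSV parser
    return line.split(",", -1);
}

private void processRecord(String[] record) {
    try {
        // Process each record: map, validate, save
    } catch (Exception e) {
        // Handle the error for this record only, so one bad row does not stop the stream
        logError(record, e);
    }
}
```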
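Streaming keeps memory flat, but saving one row per call is slow. A common pattern is to buffer rows and hand them to the database in fixed-size batches. The sketch below assumes a hypothetical `batchWriter` callback; in a Spring application it could call `JdbcTemplate.batchUpdate` or a repository’s `saveAll` (with JPA, insert batching also requires setting `spring.jpa.properties.hibernate.jdbc.batch_size`).

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical sketch: stream rows and flush them in groups of batchSize
final class BatchingCsvReader {

    /** Reads data rows (after the header) and passes them to batchWriter in batches. Returns the row count. */
    static long readInBatches(Reader source, int batchSize, Consumer<List<String[]>> batchWriter)
            throws IOException {
        if (batchSize <= 0) throw new IllegalArgumentException("batchSize must be positive");
        long rows = 0;
        List<String[]> batch = new ArrayList<>(batchSize);
        try (BufferedReader br = new BufferedReader(source)) {
            br.readLine(); // skip header
            String line;
            while ((line = br.readLine()) != null) {
                if (line.isBlank()) continue;        // ignore empty lines
                batch.add(line.split(",", -1));      // naive split, same caveats as section 1.1
                rows++;
                if (batch.size() == batchSize) {
                    batchWriter.accept(batch);       // e.g. one batched INSERT per call
                    batch = new ArrayList<>(batchSize); // new list, in case the writer keeps the old one
                }
            }
        }
        if (!batch.isEmpty()) {
            batchWriter.accept(batch); // flush the final, partial batch
        }
        return rows;
    }
}
```

At most one batch is held in memory at a time, so the batch size sets the trade-off between memory use and the number of database round trips.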

2. Advanced Error-Handling Techniques

2.1 Logging and Monitoring

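Set up centralized logging using tools such as the ELK Stack (Elasticsearch, Logstash, Kibana) or Graylog. Log each failure with enough context to reproduce it: the file, the row, the offending values, and the exception with its stack trace.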

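```java
import java.util.Arrays;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

private static final Logger logger = LoggerFactory.getLogger(CSVProcessor.class);

private void logError(String[] record, Exception e) {
    // The trailing exception has no {} placeholder, so SLF4J logs its stack trace
    logger.error("Error processing record: {} - {}", Arrays.toString(record), e.getMessage(), e);
}
```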
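Logging serves operators, but the person who uploaded the file usually needs a summary too. One option is to collect each rejected row with its line number, raw text, and reason, and return the result when the import finishes. `ImportError` and `ImportReport` below are hypothetical names for illustration; records need Java 16 or later, which Spring Boot 3’s Java 17 baseline satisfies.

```java
import java.util.ArrayList;
import java.util.List;

/** One rejected row, with enough context to find and fix it in the source file. */
record ImportError(String fileName, long lineNumber, String rawLine, String reason) { }

// Hypothetical accumulator for the outcome of one import run
final class ImportReport {
    private final List<ImportError> errors = new ArrayList<>();
    private long succeeded;

    void recordSuccess() {
        succeeded++;
    }

    void recordFailure(String fileName, long lineNumber, String rawLine, Exception e) {
        // Keep the exception type as well as the message, e.g. "IllegalArgumentException: ..."
        errors.add(new ImportError(fileName, lineNumber, rawLine,
                e.getClass().getSimpleName() + ": " + e.getMessage()));
    }

    List<ImportError> errors() {
        return List.copyOf(errors); // read-only snapshot for callers
    }

    String summary() {
        return "imported=" + succeeded + ", failed=" + errors.size();
    }
}
```

Line numbers are more useful to an uploader than array dumps, since they point straight at the row to fix in a spreadsheet or editor.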

2.2 Retry Mechanism

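Use a retry mechanism for transient errors, such as database deadlocks, lock acquisition failures, or query timeouts. Spring Retry’s @Retryable needs the spring-retry dependency, AOP support (spring-aspects), and @EnableRetry on a configuration class. Retry around the transaction, not inside it: an attempt that loses a deadlock has its transaction rolled back, so each attempt needs a fresh one. Do not retry validation or constraint errors; they fail the same way every time.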

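```java
import org.springframework.dao.TransientDataAccessException;
import org.springframework.retry.annotation.Backoff;
import org.springframework.retry.annotation.Retryable;

// Spring Data translates SQLException into Spring's DataAccessException hierarchy, so
// retrying on SQLException would never trigger. TransientDataAccessException covers
// failures that may succeed on a later attempt (e.g. deadlocks, lock and query timeouts).
@Retryable(
    value = {TransientDataAccessException.class},
    maxAttempts = 3,
    backoff = @Backoff(delay = 2000) // wait 2 seconds between attempts
)
public void saveToDatabaseWithRetry(CSVRecord csvRecord) {
    userRepository.save(csvRecord.toEntity());
}
```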
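`@Retryable` is declarative; the sketch below spells out the same kind of policy in plain Java (a bounded number of attempts, a wait between them, and retries only for exceptions classified as transient), which can help when Spring Retry is not on the classpath or when you want to see what the annotation does. `Retry.withBackoff` is a hypothetical helper, and unlike the fixed 2-second delay above it doubles the delay after each failure (exponential backoff).

```java
import java.util.function.Predicate;
import java.util.function.Supplier;

// Hypothetical retry helper with exponential backoff
final class Retry {

    /**
     * Runs action up to maxAttempts times. Transient failures are retried after a delay
     * that doubles each time; any other exception, or the last failure, is rethrown.
     */
    static <T> T withBackoff(Supplier<T> action, int maxAttempts, long initialDelayMs,
                             Predicate<RuntimeException> isTransient) {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        long delay = initialDelayMs;
        for (int attempt = 1; ; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                if (attempt >= maxAttempts || !isTransient.test(e)) {
                    throw e; // out of attempts, or not worth retrying
                }
                try {
                    Thread.sleep(delay); // wait before the next attempt
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt(); // preserve the interrupt and stop retrying
                    throw e;
                }
                delay *= 2; // exponential backoff
            }
        }
    }
}
```

In a Spring application the `isTransient` predicate could be `e -> e instanceof TransientDataAccessException`, keeping validation errors and unique-constraint violations out of the retry loop.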

2.3 Transaction Management

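Use Spring’s @Transactional annotation when the import must be atomic: either every row is saved or none is. This is the opposite trade-off to the log-and-skip approach in section 1.2: a single bad row rolls back the entire file, and one transaction spanning a large file holds a connection and locks for its whole duration. Note that by default @Transactional rolls back only on unchecked exceptions, so checked exceptions need rollbackFor.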

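```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;
import org.springframework.transaction.annotation.Transactional;

// rollbackFor = Exception.class also rolls back on checked exceptions such as IOException
@Transactional(rollbackFor = Exception.class)
public void importCSV(String filePath) throws IOException { // readCSV throws a checked IOException
    List<String[]> records = csvReader.readCSV(filePath);
    for (String[] record : records) {
        try {
            saveToDatabase(mapToRecord(record));
        } catch (RuntimeException e) {
            // Rethrowing aborts the import: every row saved so far in this transaction is rolled back
            throw new RuntimeException("Failed to process record: " + Arrays.toString(record), e);
        }
    }
}
```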
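A middle ground between one transaction per file and one per row is one transaction per chunk: a bad row rolls back only its chunk, and no single transaction spans the whole file. The sketch below hides the transaction behind a hypothetical `TransactionRunner` interface so it runs without Spring; in a Spring application one way to implement it is `work -> transactionTemplate.executeWithoutResult(status -> work.run())`, since `TransactionTemplate` rolls back when the callback throws a `RuntimeException`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical hook: runs work in one transaction, rolling back if it throws. */
@FunctionalInterface
interface TransactionRunner {
    void inTransaction(Runnable work);
}

// Hypothetical sketch of chunk-level transactions
final class ChunkedImporter {

    /**
     * Saves rows in chunks of chunkSize, each inside its own transaction.
     * A failing row rolls back only its chunk; later chunks still run.
     * Returns the start index of every chunk that was rolled back.
     */
    static List<Integer> importInChunks(List<String[]> rows, int chunkSize,
                                        TransactionRunner tx, Consumer<String[]> saveRow) {
        if (chunkSize <= 0) throw new IllegalArgumentException("chunkSize must be positive");
        List<Integer> failedChunkStarts = new ArrayList<>();
        for (int start = 0; start < rows.size(); start += chunkSize) {
            List<String[]> chunk = rows.subList(start, Math.min(start + chunkSize, rows.size()));
            try {
                tx.inTransaction(() -> chunk.forEach(saveRow));
            } catch (RuntimeException e) {
                failedChunkStarts.add(start); // this chunk was rolled back; continue with the next
            }
        }
        return failedChunkStarts;
    }
}
```

The returned indices identify which chunks were rolled back, so those rows can be re-run one at a time to isolate the offending record. For large files the rows would come from a streaming reader rather than an in-memory list.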

3. Summary and Best Practices

Validation First: Validate data before processing.

Error Isolation: Log errors for problematic records without failing the entire process.

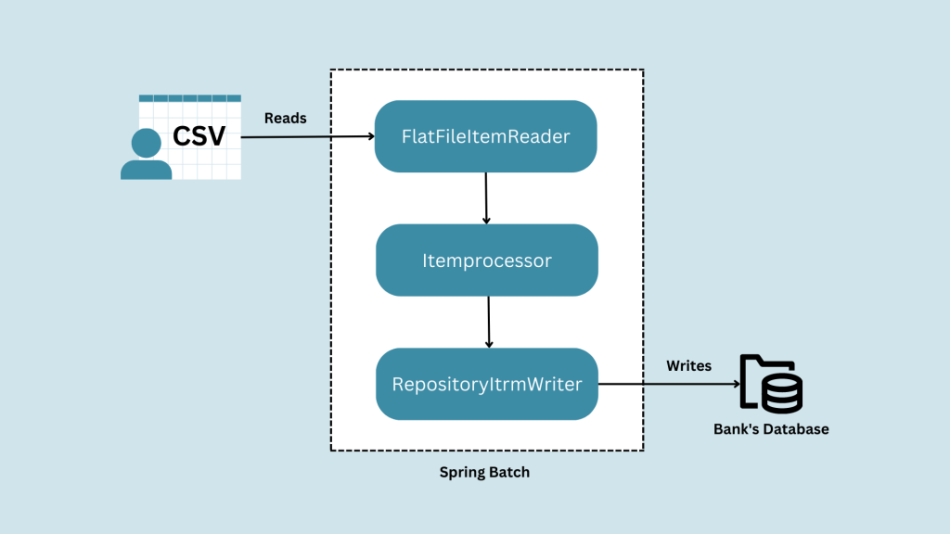

Chunk Processing: Use batch imports for large files.

Centralized Monitoring: Log errors and monitor them for patterns.

Retry for Transient Errors: Implement retries for recoverable errors.

Do you have questions or want to share your challenges? Feel free to comment below!



I am Tuanh.net. As of 2024, I have accumulated 8 years of experience in backend programming. I am delighted to connect and share my knowledge with everyone.