Unlocking the Potential of Amazon Nova: Capabilities, Performance, Use Cases, FM, Model Insights and Deployment

Fady Nabil

Amazon Web Services (AWS) has launched its most powerful foundation model to date: Amazon Nova.

This cutting-edge multimodal model promises to revolutionize how developers and businesses leverage AI for complex tasks and agentic workflows.

Amazon Nova models

Amazon Nova is a new generation of foundation models (FMs) offering frontier intelligence and industry-leading price-performance. The models offer fast inference, support agentic workflows with Amazon Bedrock Knowledge Bases and RAG, and allow fine-tuning on text and multimodal data. Optimized for cost-effective performance, they are trained on data in over 200 languages.

Amazon Nova’s Model Family

Before diving into Nova Premier’s capabilities, it’s important to understand how it fits within AWS’s broader Nova model family:

Amazon Nova Micro: A text-only model delivering the lowest latency responses at very low cost

Amazon Nova Lite: A low-cost multimodal model optimized for quickly processing image, video, and text inputs

Amazon Nova Pro: A balanced multimodal model offering the best combination of accuracy, speed, and cost for general use cases

Amazon Nova Premier: The most capable model designed specifically for complex tasks and teacher model distillation

To explore the models and learn more, visit:

https://nova.amazon.com/

The Power of Model Distillation

Perhaps one of the most exciting aspects of Nova Premier is its role as a teacher model for distillation. This process allows organizations to:

Leverage Nova Premier’s broad intelligence to create specialized models

Use Nova Premier invocation logs as training data for smaller models like Nova Micro

Create student models that match Nova Premier’s accuracy for specific use cases while maintaining lower costs and latency

The result? Complex tasks that might take Nova Premier almost a minute can be completed twice as fast after distillation, making sophisticated AI capabilities accessible to everyday users at scale.
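As a rough sketch of how such a distillation job might be submitted with boto3, the snippet below builds the request for `create_model_customization_job` with the `DISTILLATION` customization type, using invocation logs as the training source. The job name, role ARN, S3 URIs, and both model identifiers are placeholders that you pass in yourself; check the Amazon Bedrock console for the identifiers actually available to your account.

```python
def build_distillation_request(job_name, custom_model_name, role_arn,
                               teacher_model_id, student_model_id,
                               invocation_logs_s3_uri, output_s3_uri,
                               max_response_length=1000):
    """Build keyword arguments for bedrock.create_model_customization_job."""
    return {
        "jobName": job_name,
        "customModelName": custom_model_name,
        "roleArn": role_arn,
        # The student is the smaller model that gets customized.
        "baseModelIdentifier": student_model_id,
        "customizationType": "DISTILLATION",
        "trainingDataConfig": {
            "invocationLogsConfig": {
                # Reuse the teacher responses already stored in the logs.
                "usePromptResponse": True,
                "invocationLogSource": {"s3Uri": invocation_logs_s3_uri},
            }
        },
        "outputDataConfig": {"s3Uri": output_s3_uri},
        "customizationConfig": {
            "distillationConfig": {
                "teacherModelConfig": {
                    "teacherModelIdentifier": teacher_model_id,
                    "maxResponseLengthForInference": max_response_length,
                }
            }
        },
    }


def submit_distillation_job(request, region="us-east-1"):
    """Submit the job; needs AWS credentials and Bedrock permissions."""
    import boto3  # imported lazily so the builder above works offline

    bedrock = boto3.client("bedrock", region_name=region)
    return bedrock.create_model_customization_job(**request)["jobArn"]
```

Keeping the request builder separate from the API call makes it easy to review the payload before spending anything on a training job.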

Technical Capabilities and Benchmarks

Amazon Nova understanding models, including Premier, offer impressive technical capabilities:

Support for over 200 languages

Text and vision fine-tuning

State-of-the-art performance on benchmarks like the Berkeley Function Calling Leaderboard (BFCL), VisualWebBench, and Mind2Web

Excellent in-context learning (ICL) and retrieval augmented generation (RAG) performance

Seamless integration with Amazon Bedrock features like Knowledge Bases and Agents

Generated using Amazon Nova Canvas “shapes flowing in and out of a funnel”.

Amazon Nova understanding models

Amazon Nova Micro, Amazon Nova Lite, Amazon Nova Pro, and Amazon Nova Premier are understanding models that accept text, image, and video inputs (Nova Micro is text-only) and generate text output. They provide a broad selection of capability, accuracy, speed, and cost operating points.

Fast and cost-effective inference across intelligence classes

State-of-the-art text, image, and video understanding

Fine-tuning on text, image, and video input

Leading agentic and multimodal Retrieval Augmented Generation (RAG) capabilities

Excels in coding and software development use cases
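To make the "text and image in, text out" idea concrete, here is a minimal sketch that calls an understanding model through the Amazon Bedrock Converse API with boto3. The model ID `amazon.nova-lite-v1:0`, the Region, and the inference settings are assumptions; some accounts need an inference profile ID instead, so check the console for the ID that applies to you.

```python
def build_user_message(prompt, image_bytes=None, image_format="png"):
    """Build one Converse API user message with an optional image."""
    content = []
    if image_bytes is not None:
        # The Converse API takes raw bytes; boto3 handles the encoding.
        content.append({"image": {"format": image_format,
                                  "source": {"bytes": image_bytes}}})
    content.append({"text": prompt})
    return {"role": "user", "content": content}


def ask_nova(prompt, image_bytes=None, model_id="amazon.nova-lite-v1:0",
             region="us-east-1"):
    """Send the prompt to the model and return the generated text."""
    import boto3  # imported lazily so the builder above works offline

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(
        modelId=model_id,
        messages=[build_user_message(prompt, image_bytes)],
        inferenceConfig={"maxTokens": 512, "temperature": 0.3},
    )
    return response["output"]["message"]["content"][0]["text"]
```

For example, `ask_nova("Describe this chart", open("chart.png", "rb").read())` would send both the image and the question, assuming a local `chart.png` exists.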

Generated using Amazon Nova Canvas “a hummingbird in a garden”.

Amazon Nova creative models

Amazon Nova Canvas and Amazon Nova Reel are creative content generation models that accept text and image inputs and produce image or video outputs. They are designed to deliver customizable high-quality images and videos for visual content generation.

Cost-effective image and video generation

Control over your visual content generation

Multiple approaches to customize and edit visual content

Support for safe and responsible use of AI with watermarking and content moderation
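A text-to-image request to Nova Canvas could look roughly like the sketch below. It assumes the model ID `amazon.nova-canvas-v1:0`, the `TEXT_IMAGE` task type, and a 1024x1024 output; the output file name is a placeholder. The prompt length check uses the 1,024-character input limit listed for Nova Canvas in the model versions section.

```python
import base64
import json

MAX_PROMPT_CHARS = 1024  # Nova Canvas input limit from the model versions list


def build_canvas_request(prompt, width=1024, height=1024, seed=0):
    """Build the JSON body for a text-to-image request."""
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    return {
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": 1,
            "width": width,
            "height": height,
            "seed": seed,
        },
    }


def generate_image(prompt, out_path="nova_canvas.png", region="us-east-1"):
    """Generate one image and write it to out_path (needs AWS credentials)."""
    import boto3  # imported lazily so the builder above works offline

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId="amazon.nova-canvas-v1:0",
        body=json.dumps(build_canvas_request(prompt)),
    )
    payload = json.loads(response["body"].read())
    # Images come back as base64-encoded strings.
    with open(out_path, "wb") as f:
        f.write(base64.b64decode(payload["images"][0]))
    return out_path
```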

Generated using Amazon Nova Canvas “white background with a dark purple neural network in the center, voice signal as input on left and output on the right”.

Amazon Nova speech-to-speech model

Amazon Nova Sonic is a speech-to-speech model that accepts speech as input and generates speech and text as output.

The model is designed to deliver real-time, human-like voice conversations with contextual richness.

State-of-the-art speech understanding and generation

Available through a bidirectional streaming API, enabling real-time, interactive communication

Supports function calling and knowledge grounding with enterprise data using RAG

Robust handling of user’s pauses, hesitations, and audio interruptions

Built-in controls for the safe and responsible use of AI

Model versions

Amazon Nova Micro

- A text-only model that delivers the lowest latency responses at very low cost. It is highly performant at language understanding, translation, reasoning, code completion, brainstorming, and mathematical problem-solving. With its generation speed of over 200 tokens per second, Amazon Nova Micro is ideal for applications that require fast responses.

- Max tokens: 128k

- Languages: 200+ languages

- Fine-tuning supported: Yes, with text input

Amazon Nova Lite

- Very low-cost multimodal model that is lightning fast for processing image, video, and text inputs. The accuracy of Amazon Nova Lite across a breadth of tasks, coupled with its lightning-fast speed, makes it suitable for a wide range of interactive and high-volume applications where cost is a key consideration.

- Max tokens: 300k

- Languages: 200+ languages

- Fine-tuning supported: Yes, with text, image, and video input

Amazon Nova Pro

- A highly capable multimodal model with the best combination of accuracy, speed, and cost for a wide range of tasks. The capabilities of Amazon Nova Pro, coupled with its industry-leading speed and cost efficiency, make it a compelling model for almost any task, including video summarization, Q&A, mathematical reasoning, software development, and AI agents that can execute multistep workflows.

- Max tokens: 300k

- Languages: 200+ languages

- Fine-tuning supported: Yes, with text, image, and video input

Amazon Nova Premier

- Most capable model for complex tasks and teacher for model distillation. Customers can use Amazon Nova Premier with Amazon Bedrock Model Distillation to create highly-capable, cost-effective, and low-latency versions of Amazon Nova Pro, Lite, and Micro, for specific needs.

- Max tokens: 1M

- Languages: 200+ languages

- Fine-tuning supported: No. Amazon Nova Premier can be a teacher for model distillation.

Amazon Nova Canvas

- A cost-effective image generation model that creates professional-grade images from text or images provided in prompts. Amazon Nova Canvas also provides features that make it easy to edit images using text inputs, controls for adjusting color scheme and layout, and built-in controls to support safe and responsible use of AI.

- Max input characters: 1,024

- Languages: English

- Fine-tuning supported: Yes

Amazon Nova Reel

- A cost-effective video generation model that allows customers to easily create high quality video from text and images. Amazon Nova Reel supports use of natural language prompts to control visual style and pacing, including camera motion control, and built-in controls to support safe and responsible use of AI.

- Max input characters: 512

- Languages: English

- Fine-tuning supported: Coming soon

Amazon Nova Sonic

- A state-of-the-art speech understanding and generation model that delivers real-time, human-like voice-conversations with industry-leading price-performance. The model supports fluid dialogue and turn-taking, low latency multi-turn conversations, function calling, and knowledge grounding with enterprise data using RAG. Amazon Nova Sonic supports expressive voices, including both masculine-sounding and feminine-sounding voices.

- Max tokens: 300k

- Languages: English (including American and British accents). Additional languages coming soon.
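The context limits above can be turned into a small selection helper. The sketch below is only an illustration: it encodes the "Max tokens" values of the four understanding models as listed in this article (reading "128k" as 128,000), orders them from the lowest-cost tier to the most capable, and picks the first one that fits. The class and function names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NovaModel:
    name: str
    max_tokens: int
    multimodal: bool


# Ordered from the lowest-cost tier to the most capable, as listed above.
MODELS = [
    NovaModel("Amazon Nova Micro", 128_000, False),
    NovaModel("Amazon Nova Lite", 300_000, True),
    NovaModel("Amazon Nova Pro", 300_000, True),
    NovaModel("Amazon Nova Premier", 1_000_000, True),
]


def smallest_model_for(input_tokens, needs_vision=False):
    """Return the first listed model whose limits cover the request."""
    for model in MODELS:
        fits_context = input_tokens <= model.max_tokens
        fits_modality = model.multimodal or not needs_vision
        if fits_context and fits_modality:
            return model
    raise ValueError("no listed model fits this request")


print(smallest_model_for(50_000).name)
print(smallest_model_for(50_000, needs_vision=True).name)
print(smallest_model_for(500_000).name)
```

This ignores accuracy requirements entirely, so treat the result as a starting point for testing rather than a final choice.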

Why Migrate from OpenAI to Amazon Nova?

OpenAI’s models remain powerful, but their operational costs can become prohibitive at scale. See the comparison published by Artificial Analysis.

For high-volume applications, such as customer support or large document analysis, these cost differences are significant.

Nova Pro is not only more than three times as cost-efficient; its longer context window also lets it handle more extensive and complex inputs.
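To see how per-token pricing adds up at volume, here is a small arithmetic sketch. The prices are placeholders in arbitrary units, not real list prices for any model; substitute the current figures from each provider's pricing page before drawing conclusions.

```python
def monthly_cost(requests_per_month, input_tokens, output_tokens,
                 price_in_per_1k, price_out_per_1k):
    """Cost of a workload given per-1,000-token input and output prices."""
    per_request = ((input_tokens / 1000) * price_in_per_1k
                   + (output_tokens / 1000) * price_out_per_1k)
    return requests_per_month * per_request


# Placeholder prices in arbitrary units, for illustration only.
model_a = monthly_cost(1_000_000, 2_000, 500,
                       price_in_per_1k=3.0, price_out_per_1k=12.0)
model_b = monthly_cost(1_000_000, 2_000, 500,
                       price_in_per_1k=1.0, price_out_per_1k=4.0)

print(f"cost ratio: {model_a / model_b:.1f}x")
```

Because the workload is identical for both calls, the ratio depends only on the price difference, which is why a per-token gap carries straight through to the monthly bill.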

Amazon Nova Use Cases and Real Testing:

You can also get started with this Nova Workshop codebase: Nova Sample code

Output for Nova Pro vs Nova Micro in Amazon Bedrock Playground

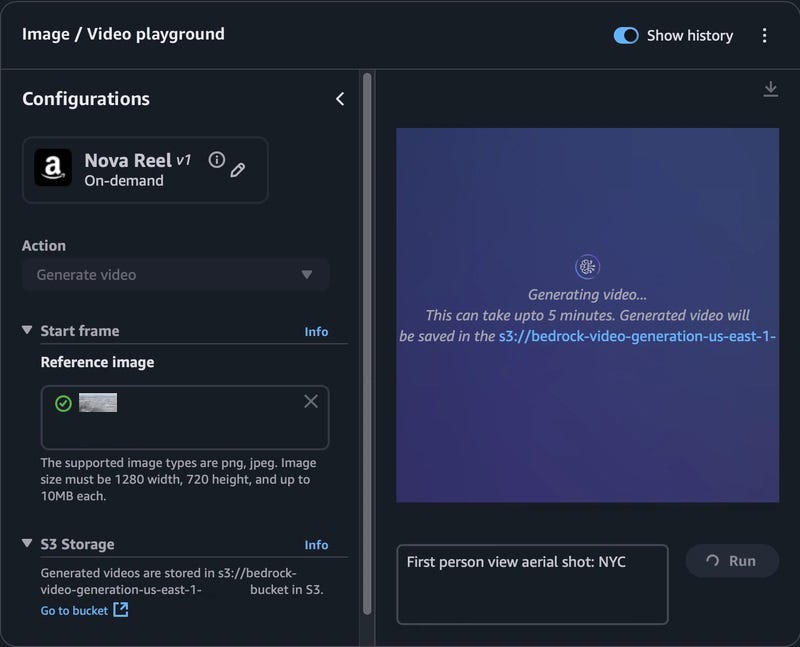

Using the Amazon Bedrock playground to experiment with the Nova Reel foundation model:

Upload your image. This image will be used by the model to generate the video clip.

The Amazon Nova Reel playground provides real-time progress updates as it generates the requested video clip.

Once the video clip is successfully generated, you’ll see an option to download it.

The video clip is also stored automatically in your S3 bucket. You can delete it from there so that you don’t incur ongoing storage costs for the bucket.
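The same playground flow can be approximated in code with the asynchronous invoke API. The sketch below assumes the model ID `amazon.nova-reel-v1:0`, a six-second 1280x720 clip, and an S3 output URI that you supply; the 512-character check mirrors the Nova Reel input limit listed earlier. Treat the request fields as a starting point and confirm them against the current Nova documentation.

```python
import base64
import time

MAX_PROMPT_CHARS = 512  # Nova Reel input limit from the model versions list


def build_reel_input(prompt, image_bytes=None, image_format="png", seed=0):
    """Build the modelInput for a text (and optional image) to video job."""
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    params = {"text": prompt}
    if image_bytes is not None:
        encoded = base64.b64encode(image_bytes).decode("utf-8")
        params["images"] = [{"format": image_format,
                             "source": {"bytes": encoded}}]
    return {
        "taskType": "TEXT_VIDEO",
        "textToVideoParams": params,
        "videoGenerationConfig": {
            "durationSeconds": 6,
            "fps": 24,
            "dimension": "1280x720",
            "seed": seed,
        },
    }


def generate_video(prompt, output_s3_uri, image_bytes=None,
                   region="us-east-1", poll_seconds=15):
    """Start the job and poll until it leaves the InProgress state."""
    import boto3  # imported lazily so the builder above works offline

    client = boto3.client("bedrock-runtime", region_name=region)
    job = client.start_async_invoke(
        modelId="amazon.nova-reel-v1:0",
        modelInput=build_reel_input(prompt, image_bytes),
        outputDataConfig={"s3OutputDataConfig": {"s3Uri": output_s3_uri}},
    )
    while True:
        state = client.get_async_invoke(invocationArn=job["invocationArn"])
        if state["status"] != "InProgress":
            return state["status"]
        time.sleep(poll_seconds)
```

Once the job reports completion, the clip is in the S3 location you passed in, and deleting it there stops the storage charges.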

Fine-tune an Amazon Nova model:

In this section, we fine-tune and host a customized Amazon Nova model using Amazon Bedrock.

The following diagram illustrates the solution architecture.

Create a fine-tuning job

Complete the following steps to create a fine-tuning job:

Open the Amazon Bedrock console.

Choose us-east-1 as the AWS Region.

Under Foundation models in the navigation pane, choose Custom models.

Choose Create Fine-tuning job under Customization methods.

At the time of writing, Amazon Nova model fine-tuning is exclusively available in the us-east-1 Region.

For Source model, choose Select model.

Choose Amazon as the provider, then choose the Amazon Nova model you want to fine-tune (Lite or Micro).

Choose Apply.

For Fine-tuned model name, enter a unique name for the fine-tuned model.

For Job name, enter a name for the fine-tuning job.

Under Input data, enter the location of the source S3 bucket (training data) and target S3 bucket (model outputs and training metrics), and optionally the location of your validation dataset.

- In the Hyperparameters section, you can customize the following hyperparameters:

For Epochs, enter a value between 1–5.

For Batch size, the value is fixed at 1.

For Learning rate multiplier, enter a value between 0.000001–0.0001.

For Learning rate warmup steps, enter a value between 0–100.

We recommend starting with the default parameter values and then changing the settings iteratively. It’s good practice to change only one or two parameters at a time, so that you can isolate the effect of each. Remember that hyperparameter tuning is specific to the model and the use case.

In the Output data section, enter the target S3 bucket for model outputs and training metrics.

Choose Create fine-tuning job.
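The console steps above can also be scripted. The following sketch validates hyperparameters against the ranges listed in this section and then calls `create_model_customization_job` with boto3. The hyperparameter key names (`epochCount`, `batchSize`, `learningRate`, `learningRateWarmupSteps`) and the default values are assumptions for illustration, and the job name, role ARN, base model identifier, and S3 URIs are placeholders you supply.

```python
def build_hyperparameters(epochs=2, learning_rate=1e-5, warmup_steps=10):
    """Validate values against the console ranges and return string values."""
    if not 1 <= epochs <= 5:
        raise ValueError("epochs must be between 1 and 5")
    if not 1e-6 <= learning_rate <= 1e-4:
        raise ValueError("learning rate must be between 0.000001 and 0.0001")
    if not 0 <= warmup_steps <= 100:
        raise ValueError("warmup steps must be between 0 and 100")
    return {
        "epochCount": str(epochs),
        "batchSize": "1",  # fixed at 1, as noted above
        "learningRate": format(learning_rate, ".6f"),
        "learningRateWarmupSteps": str(warmup_steps),
    }


def create_fine_tuning_job(job_name, model_name, role_arn, base_model_id,
                           training_s3_uri, output_s3_uri,
                           validation_s3_uri=None, hyperparameters=None,
                           region="us-east-1"):
    """Submit a fine-tuning job (needs AWS credentials and permissions)."""
    import boto3  # imported lazily so the validator above works offline

    request = {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        "hyperParameters": hyperparameters or build_hyperparameters(),
    }
    if validation_s3_uri:
        request["validationDataConfig"] = {
            "validators": [{"s3Uri": validation_s3_uri}]
        }
    bedrock = boto3.client("bedrock", region_name=region)
    return bedrock.create_model_customization_job(**request)["jobArn"]
```

Validating the ranges locally gives a faster error than waiting for the service to reject the request.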

Run the fine-tuning job

After you start the fine-tuning job, it appears under Jobs & status with the status Training.

When it finishes, the status changes to Complete.
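If you prefer to watch the job from a script rather than the console, a polling loop along these lines would work. It relies on `get_model_customization_job`, whose API status values (`InProgress`, `Completed`, `Failed`, `Stopping`, `Stopped`) differ slightly from the labels shown in the console. The function name and the polling interval are illustrative choices.

```python
import time

TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_job(bedrock_client, job_arn, poll_seconds=60, sleep=time.sleep):
    """Poll a customization job until it reaches a terminal status."""
    while True:
        job = bedrock_client.get_model_customization_job(jobIdentifier=job_arn)
        status = job["status"]
        if status in TERMINAL_STATUSES:
            return status
        # Still InProgress or Stopping: wait before asking again.
        sleep(poll_seconds)
```

A typical call would be `wait_for_job(boto3.client("bedrock", region_name="us-east-1"), job_arn)`; passing the client in keeps the loop easy to test with a stand-in object.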

You can now open the training job and, optionally, access the training-related artifacts that are saved in the output folder.

You can find both the training and validation artifacts here (using a validation set is highly recommended).

You can use the training and validation artifacts to assess your fine-tuning job through loss curves (as shown in the following figure), which track training loss (orange) and validation loss (blue) over time.
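If you want to inspect the metrics without plotting, a few lines of Python are enough to average the loss per epoch and find where validation loss bottoms out. This sketch assumes the artifacts are CSV files with an `epoch_number` column and a loss column such as `validation_loss`; the actual file and column names in your output folder may differ, so adjust them to match.

```python
import csv
import io
from collections import defaultdict


def mean_loss_per_epoch(csv_text, loss_column, epoch_column="epoch_number"):
    """Average a loss column per epoch from CSV text."""
    totals = defaultdict(lambda: [0.0, 0])  # epoch -> [sum, count]
    for row in csv.DictReader(io.StringIO(csv_text)):
        bucket = totals[int(row[epoch_column])]
        bucket[0] += float(row[loss_column])
        bucket[1] += 1
    return {epoch: total / count
            for epoch, (total, count) in sorted(totals.items())}


def best_epoch(losses_by_epoch):
    """Return the epoch with the lowest average loss."""
    return min(losses_by_epoch, key=losses_by_epoch.get)


# Made-up sample rows, only to show the expected shape of the input.
sample = (
    "step_number,epoch_number,validation_loss\n"
    "1,0,1.0\n"
    "2,0,0.8\n"
    "3,1,0.5\n"
    "4,1,0.7\n"
)
losses = mean_loss_per_epoch(sample, "validation_loss")
print(losses, "best epoch:", best_epoch(losses))
```

If validation loss starts rising while training loss keeps falling, that is the usual sign of overfitting and a reason to reduce the number of epochs.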

Host the fine-tuned model & run inference

Now that you have completed the fine-tuning, you can host the model and use it for inference. Follow these steps:

Open the Amazon Bedrock console.

Under Foundation models in the navigation pane, choose Custom models.

On the Models tab, choose the model you fine-tuned.

- Choose Purchase provisioned throughput.

- Specify a commitment term and review the associated cost for hosting the fine-tuned models.

After the customized model is hosted through provisioned throughput, a model ID will be assigned, which will be used for inference.

For inference with models hosted with provisioned throughput, use the Invoke API in the same way as for a base model, replacing the model ID with the customized model ID.
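As a minimal sketch of that call, the snippet below sends a text prompt to a provisioned model with `invoke_model`. The `messages-v1` request schema and the response path are assumptions based on the Nova message format, and `provisioned_model_arn` is a placeholder for the ID assigned to your hosted model.

```python
import json


def build_invoke_body(prompt):
    """Build a minimal Nova-style request body for the Invoke API."""
    return {
        "schemaVersion": "messages-v1",
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
    }


def extract_text(response_payload):
    """Pull the generated text out of a parsed response payload."""
    return response_payload["output"]["message"]["content"][0]["text"]


def invoke_custom_model(prompt, provisioned_model_arn, region="us-east-1"):
    """Call the hosted fine-tuned model (needs AWS credentials)."""
    import boto3  # imported lazily so the helpers above work offline

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=provisioned_model_arn,
        body=json.dumps(build_invoke_body(prompt)),
    )
    return extract_text(json.loads(response["body"].read()))
```

The only difference from calling a base model is the value passed as `modelId`.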

Results

Comparing the base Amazon Nova models with their fine-tuned versions, Pro performs better than Lite, and Lite better than Micro, in both accuracy and performance.

Multi-agent collaboration use case:

This use case comes from the AWS Blog: Nova Premier powers a multi-agent collaboration architecture for investment research.

We can build an application using multi-agent collaboration in Amazon Bedrock, with Nova Premier powering the supervisor agent that orchestrates the entire workflow.

The supervisor agent analyzes the initial query (for example, “What are the emerging trends in renewable energy investments?”), breaks it down into logical steps, determines which specialized subagents to engage, and synthesizes the final response.

Components:

A supervisor agent powered by Nova Premier

Multiple specialized subagents powered by Nova Pro, each focusing on different financial data sources

Tools that connect to financial databases, market analysis tools, and other relevant information sources

Architecture of the components:

The supervisor agent powered by Nova Premier does the following:

Analyzes the query and determines the underlying topics and sources

Selects the appropriate subagents specific to those topics and sources

Each subagent retrieves their relevant data

The supervisor agent synthesizes this information into a comprehensive report.
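The control flow described above can be sketched in plain Python. This is only an illustration of the supervisor pattern, not the Amazon Bedrock multi-agent collaboration API: every function below is a hypothetical stub, and in a real build the planner and synthesizer would call Nova Premier while each subagent would call Nova Pro with its own tools.

```python
def run_supervisor(query, plan, subagents, synthesize):
    """Plan topics, delegate to matching subagents, then synthesize a report."""
    topics = plan(query)
    findings = {}
    for topic in topics:
        agent = subagents.get(topic)
        if agent is not None:
            findings[topic] = agent(query)
    return synthesize(query, findings)


# Stubs standing in for model-backed agents (hypothetical names and outputs).
def plan_topics(query):
    return ["market_data", "news"]


SUBAGENTS = {
    "market_data": lambda query: "stub market data summary",
    "news": lambda query: "stub news summary",
}


def synthesize_report(query, findings):
    lines = [f"Report for: {query}"]
    lines += [f"- {topic}: {text}" for topic, text in findings.items()]
    return "\n".join(lines)


report = run_supervisor(
    "What are the emerging trends in renewable energy investments?",
    plan_topics, SUBAGENTS, synthesize_report,
)
print(report)
```

Passing the planner, subagents, and synthesizer in as arguments keeps the orchestration logic separate from whichever model sits behind each role.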

Nova Premier in a multi-agent architecture such as this streamlines the financial professional’s work.

Some of the resources here are from the AWS documentation and the AWS Blog.
