Making Sense of Machine Minds: The Magic of Explainable AI

Manasa V

Have you ever asked a friend why they suddenly booked a last-minute weekend trip to a tiny mountain town, and they replied, “It just felt right”? That’s the kind of answer Explainable AI (XAI) is trying to improve on: it helps us understand the why behind AI’s decisions.

Let’s dive in.

Meet Your Mysterious Friend: AI

Imagine AI as that one friend who seems to know everything - the fastest routes, which series to binge next, even what job you might like five years from now.

But they never quite explain how they know it.

They just say, “Trust me.” Now, that sometimes works. But what if they:

Suggested a playlist that had nothing to do with your music vibe

Took you on a “shortcut” that added 30 minutes to your trip

Suddenly, you really want to know why they made that choice.

Enter XAI: The Translator for Mysterious AI Minds

Explainable AI (XAI) is like asking that friend to walk you through their logic.

“Why did you suggest that obscure mountain town?”

“Well, I saw it has great hiking trails, peaceful scenery, it’s only two hours away, and you’ve been stressed lately - seemed like a good mental reset.”

Now you understand the decision. That’s what XAI does for AI.

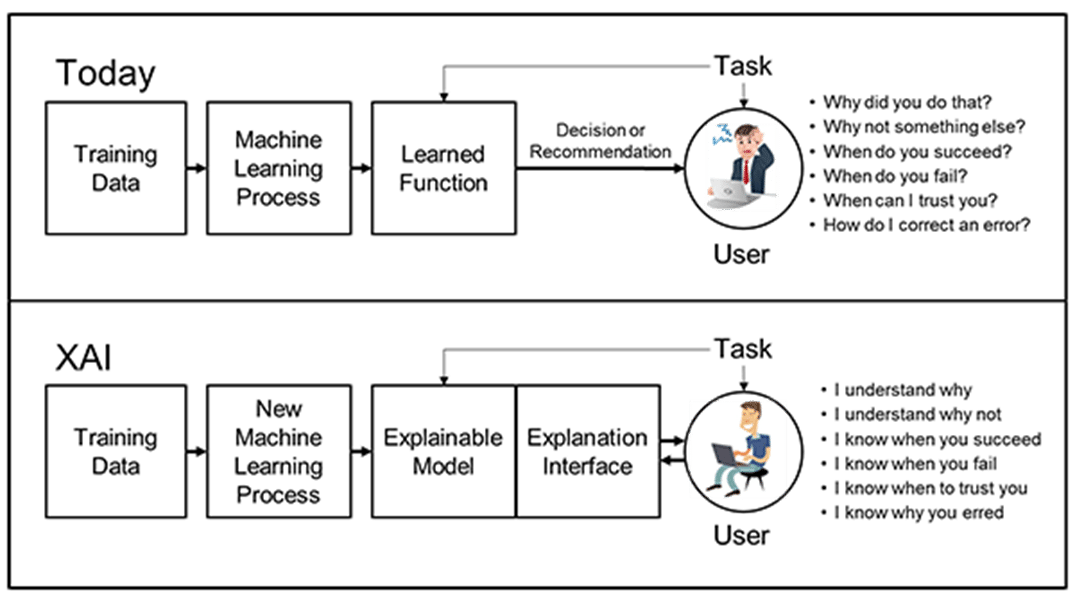

Figure: Traditional AI vs Explainable AI: Understanding the Black Box

Why AI Needs to Explain Itself

Modern AI models, especially deep learning ones, are like black boxes.

They take in massive amounts of data, run it through countless hidden layers and calculations, and out pops a decision.

To us, though? It feels like magic.

But this lack of clarity can be a serious problem:

Fairness: Was the decision biased?

Safety: Why did the AI diagnose that illness?

Debugging: Did the model learn from the right patterns?

Learning: Can we learn from the AI’s choices?

If we don’t understand why, we can’t trust, improve, or challenge what the AI is doing.

💡 Did You Know? DARPA (the U.S. defense research agency) kicked off a dedicated XAI program in 2017, because black-box AI is hard to trust when the stakes are high.

How Do We Make AI Spill the Beans?

Here are a few cool ways we get AI to explain itself:

1. Feature Importance

Feature Importance is just:

"Which things were most important when deciding?"

Example:

“I bought it because the camera is great and the battery lasts long, not just because it’s a popular brand.”

It’s about knowing what mattered most in the choice.

Curious how it works? Here’s the code. Try it yourself!

We will use Random Forest - a type of machine learning model that makes decisions by combining the results of many decision trees.

```python
# feature_importance_example.py
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
import pandas as pd

# Create tiny synthetic dataset
X, y = make_classification(n_samples=300, n_features=5, n_informative=3, random_state=42)
cols = [f"f{i}" for i in range(X.shape[1])]
df = pd.DataFrame(X, columns=cols)

# Train a Random Forest
X_train, X_test, y_train, y_test = train_test_split(df, y, test_size=0.3, random_state=42)
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Show feature importances
importances = model.feature_importances_
fi = pd.Series(importances, index=cols).sort_values(ascending=False)
print("Feature importances (higher = more important):\n", fi)
```
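A Random Forest's `feature_importances_` are impurity-based and come from the training data. As a cross-check, here is a small sketch of permutation importance on the held-out split: shuffle one column at a time and see how much accuracy drops. It rebuilds the same synthetic dataset so it runs on its own. The `n_repeats=10` setting is just an assumption for this demo.

```python
# permutation_importance_example.py (illustrative sketch)
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split
import pandas as pd

# Same tiny synthetic dataset as in the example above
X, y = make_classification(n_samples=300, n_features=5, n_informative=3, random_state=42)
cols = [f"f{i}" for i in range(X.shape[1])]
df = pd.DataFrame(X, columns=cols)

X_train, X_test, y_train, y_test = train_test_split(df, y, test_size=0.3, random_state=42)
model = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)

# Shuffle each feature on the test set and measure the drop in accuracy
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=42)
pi = pd.Series(result.importances_mean, index=cols).sort_values(ascending=False)
print("Permutation importances (mean drop in accuracy):\n", pi)
```

If a feature ranks high in both lists, that is a decent sign the model really leans on it.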

2. SHAP / LIME

These are powerful tools that break down AI decisions, case by case.

SHAP (SHapley Additive exPlanations)

SHAP uses game theory to figure out how much each feature (like skills, experience, location) contributed to a decision.

Example:

An AI recommends a job. SHAP might show that programming skills accounted for roughly 50% of the push toward that recommendation, location 30%, and past applications 20%.

It gives clear, consistent explanations backed by math, not guesswork.
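To make the game-theory idea concrete, here is a minimal sketch that computes exact Shapley values by hand, using only the standard library. Everything in it is made up for illustration: the `job_match_score` function, the feature values, and the "average candidate" baseline are hypothetical. The real `shap` library uses faster approximations rather than this brute-force loop.

```python
from itertools import combinations
from math import factorial

# Hypothetical candidate and "average candidate" baseline (made-up numbers)
candidate = {"skills": 0.9, "location": 0.8, "past_applications": 0.6}
baseline = {"skills": 0.5, "location": 0.5, "past_applications": 0.5}

def job_match_score(x):
    """Toy stand-in for a model: a weighted sum plus one interaction term."""
    return (50 * x["skills"] + 30 * x["location"] + 20 * x["past_applications"]
            + 10 * x["skills"] * x["location"])

def value(coalition):
    """Score when only the features in `coalition` take the candidate's values."""
    x = {f: (candidate[f] if f in coalition else baseline[f]) for f in candidate}
    return job_match_score(x)

def shapley_values():
    features = list(candidate)
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        # Average f's marginal contribution over every subset of the other features
        for k in range(n):
            for subset in combinations(others, k):
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                total += weight * (value(set(subset) | {f}) - value(set(subset)))
        phi[f] = total
    return phi

phi = shapley_values()
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.2f}")

# The contributions add up to the gap between this candidate and the baseline
print("sum of contributions:", round(sum(phi.values()), 2))
print("score gap:", round(job_match_score(candidate) - job_match_score(baseline), 2))
```

The last two printed numbers match. That "everything adds up" property is what makes SHAP explanations consistent.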

LIME (Local Interpretable Model-Agnostic Explanations)

LIME explains a single decision by slightly changing the input and seeing what affects the result. It builds a simple model just around that one prediction.

Example:

An AI says you’ll love a new sci-fi show. LIME reveals it’s because you’ve been binging space adventures and rating similar shows highly.

It’s like a quick “behind-the-scenes” peek into one specific AI decision.
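Here is a rough from-scratch sketch of that idea using only NumPy. It is not the `lime` library's API. The `black_box` function, the two feature names, the sample count, and the kernel width are all assumptions picked for the demo. The steps are: nudge the input many times, ask the model for each nudged version, weight nearby samples more heavily, and fit a simple linear model.

```python
import numpy as np

rng = np.random.default_rng(0)

def black_box(X):
    """Hypothetical opaque model: probability a viewer likes a sci-fi show.
    Columns: [hours of space shows watched, average rating given to sci-fi]."""
    z = 0.8 * X[:, 0] + 1.5 * X[:, 1] - 0.3 * X[:, 0] * X[:, 1] - 4.0
    return 1.0 / (1.0 + np.exp(-z))

def explain_locally(predict, x, n_samples=2000, scale=0.5, kernel_width=1.0):
    # 1. Perturb: sample points in a small neighbourhood around x
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the black box on the perturbed points
    y = predict(Z)
    # 3. Weight each sample by its proximity to x (closer = more influence)
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 4. Fit a weighted linear model: y ~ intercept + coefs . (z - x)
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return beta[0], beta[1:]

x = np.array([3.0, 2.0])  # one specific viewer
intercept, coefs = explain_locally(black_box, x)
for name, c in zip(["space_show_hours", "avg_scifi_rating"], coefs):
    print(f"{name}: {c:+.3f}")
```

The coefficients only describe the model's behaviour near this one viewer. A different viewer can get a different explanation, which is the "local" in LIME.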

🎉 Fun Fact Alert! SHAP’s roots go back to the Shapley value, an idea Lloyd Shapley introduced in the 1950s. Shapley went on to share the 2012 Nobel Memorial Prize in Economic Sciences for his work in game theory!

But Isn’t Some AI Too Complex to Explain?

Yes… and no.

Some advanced models (like deep neural networks) are wildly complex, like asking a wizard how they cast a spell.

But researchers are constantly building tools to peek inside the black box:

Visualizations

Local explanations

Simplified versions of models

Hybrid systems that trade a bit of power for a lot of clarity
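One way to picture a "simplified version of a model" is a surrogate: a small decision tree trained to imitate the black box. The sketch below assumes the same kind of synthetic data as the earlier Random Forest example. The `max_depth=3` limit is an arbitrary choice, and fidelity is measured on the training data to keep things short.

```python
# surrogate_tree_example.py (illustrative sketch)
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import DecisionTreeClassifier, export_text

X, y = make_classification(n_samples=300, n_features=5, n_informative=3, random_state=42)
cols = [f"f{i}" for i in range(X.shape[1])]

# The "black box": a forest of 100 trees
black_box = RandomForestClassifier(n_estimators=100, random_state=42).fit(X, y)

# The surrogate learns to imitate the black box's predictions, not the true labels
black_box_preds = black_box.predict(X)
surrogate = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X, black_box_preds)

# Fidelity: how often the simple tree agrees with the black box
fidelity = surrogate.score(X, black_box_preds)
print(f"Surrogate agrees with the black box on {fidelity:.0%} of samples")

# Print the tree as readable if/else rules
rules = export_text(surrogate, feature_names=cols)
print(rules)
```

A shallow tree will not match the forest perfectly. That gap is the trade-off between power and clarity.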

The point? Even the most advanced AI shouldn’t be a total mystery.

Why This Matters for the Future

AI isn’t just recommending TV shows anymore.

It’s making decisions that impact real lives:

Whether someone qualifies for a loan

Who gets hired or flagged in recruitment

How a patient is diagnosed

Which legal case gets flagged for review

In a world like this, “Trust me” isn’t enough.

We need AI to be:

Transparent

Accountable

Understandable

We need AI that acts more like a helpful guide, not a mysterious oracle whispering decisions from the shadows.

Final Thoughts: XAI = AI That Plays Fair

Imagine a future where every smart system came with a little voice saying:

“Hey, here’s why I did that. Hope it makes sense!”

That’s the goal of Explainable AI.

It turns confusing, black-box decisions into clear, thoughtful reasoning, the kind that builds trust, empowers users, and makes AI better for everyone.

So next time your AI-powered app recommends a new city to explore, a course to take, or a job to apply for, go ahead and ask:

“Can you explain why?”

Because in the world of AI, curiosity isn’t just cool, it’s crucial.

Embracing Curiosity: The Path to Human-Friendly AI

So the next time AI nudges you toward a job opening, a road less traveled, or a productivity hack you didn’t ask for, don’t just follow blindly. Ask questions. Get curious. Challenge the black box.

Because when AI explains itself, it stops being just “smart,” and starts becoming human-friendly.

In the end, the best kind of AI isn’t the one that just knows things; it’s the one that tells you why.

Let’s make machines not just intelligent, but explainable.

Thanks for tagging along! If this sparked your curiosity, stick around: more tech made simple is coming your way!

Written by

Manasa V

👩💻 Tech Consultant @ Microsoft 💡 Curious mind diving into AI, code & future trends 🚀 Exploring the why and how of everything tech 📚 Forever learning. Always vibing with innovation.