The Toothpaste Principle: How GPT Learns Like We Do

Mohit Kumar


GPT stands for Generative Pre-trained Transformer.

When you were 5–6 months old:

You didn’t know what “brush teeth” meant.

But your parents kept doing it with toothpaste, water, and the same steps every day.

Over time, your brain connected “brush teeth” → toothpaste, water, cleaning, fresh smell.

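So now, if someone says “brush your teeth,” you automatically think of all those steps, even without being told. GPT picks up associations between words in much the same way: by seeing them together over and over.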

Let’s break the name down:

Generative → It can create new content (sentences, stories, code, etc.).

Pre-trained → It learns patterns from massive amounts of data before you ever use it.

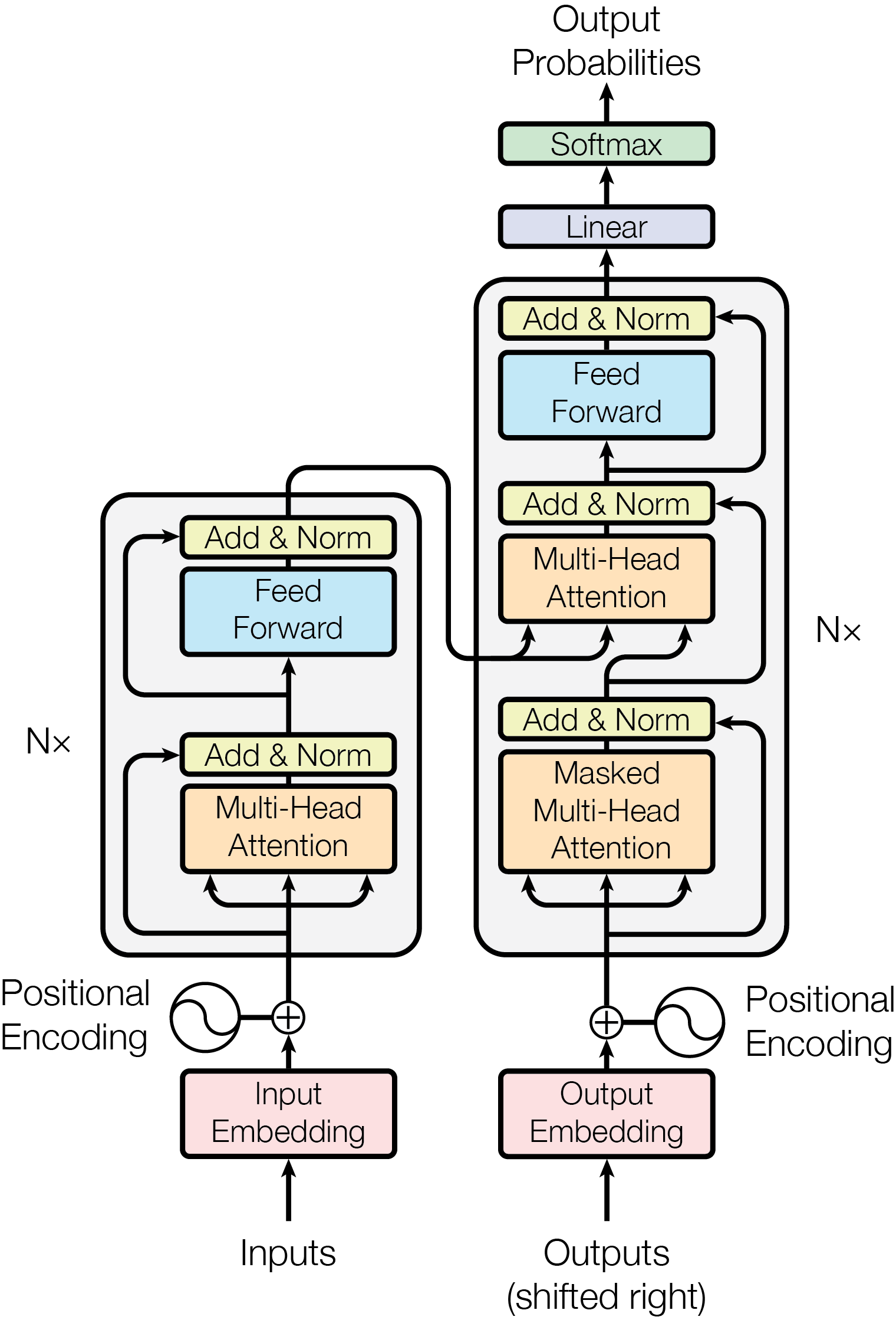

Transformer → This is the neural network architecture that allows it to understand context and relationships between words.

Think of GPT like a super-advanced predictive text system — but instead of just guessing the next word in your text message, it can write a research paper, debug code, or summarize an entire novel.

How it works in steps

Reading and Learning

GPT reads a lot of text (billions of sentences).

It learns what words usually come after other words.

Example: If it sees “Once upon a…”, it learns the next word is often “time”.
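To make the "what comes next" idea concrete, here is a minimal sketch that counts which word follows which in a tiny made-up corpus. The corpus, the `next_word_counts` table, and the `most_likely_next` helper are all illustrative assumptions. A real GPT does not keep a count table like this; it trains a neural network on far more text, but the intuition is similar.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration);
# a real model trains on billions of sentences.
corpus = [
    "once upon a time there was a king",
    "once upon a time there lived a fox",
    "once upon a hill stood a house",
]

# For every word, count which words appear right after it.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1


def most_likely_next(word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = next_word_counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


print(most_likely_next("a"))  # time
```

In this toy corpus, "time" follows "a" twice and every other word follows it only once, so "time" wins.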

Making a Guess

When you ask it something, it doesn’t “look up” an answer — it “guesses” the next word, then the next, then the next… really fast.

Example: You say, “Tell me a joke,” and it guesses a joke word by word until the whole thing is done.
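The word-by-word guessing can be sketched as a simple loop. The `NEXT_WORD` table and the `generate` function below are hypothetical stand-ins: a real GPT looks at the whole text so far, works with pieces of words (tokens), and usually picks from a range of likely candidates rather than always taking one fixed answer.

```python
# Hypothetical lookup table: for each word, the word we guess comes next.
# A real GPT computes these guesses with a neural network instead.
NEXT_WORD = {
    "once": "upon",
    "upon": "a",
    "a": "time",
    "time": "<end>",
}


def generate(prompt, max_words=10):
    """Extend the prompt one guessed word at a time until we hit <end>."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        guess = NEXT_WORD.get(words[-1], "<end>")
        if guess == "<end>":
            break
        words.append(guess)  # the new word becomes part of the context
    return " ".join(words)


print(generate("Once"))  # once upon a time
```

Each guess is appended to the text and then used to make the next guess, which is why the answer appears one word after another.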

Why it Feels Smart

Because it’s read so much, its guesses are very good — often sounding like a human talking.

But it’s not thinking or knowing like us; it’s just super-skilled at word puzzles.

Diagram credit: Google

Resources:

Attention Is All You Need by Vaswani et al. (Google) → https://arxiv.org/pdf/1706.03762
