Explain Vector Embeddings to Your Mom

MANOJ KUMAR

What Are Vector Embeddings? (Explained to My Mom)

If you’ve ever tried to explain AI to your mom and got that confused-but-smiling look, this one’s for you.

Imagine you have a huge library filled with books, music, and photos. You want to find similar items—like all books about travel, songs with the same vibe, or pictures of sunsets. But computers don’t understand “meaning” the way we do. They need a way to turn meaning into numbers.

That’s where vector embeddings come in.

The Analogy: The Grocery Store Map

Think of every item in a grocery store having an exact location on a map. Similar items (like all fruits) are close together, and very different ones (like milk and soap) are far apart.

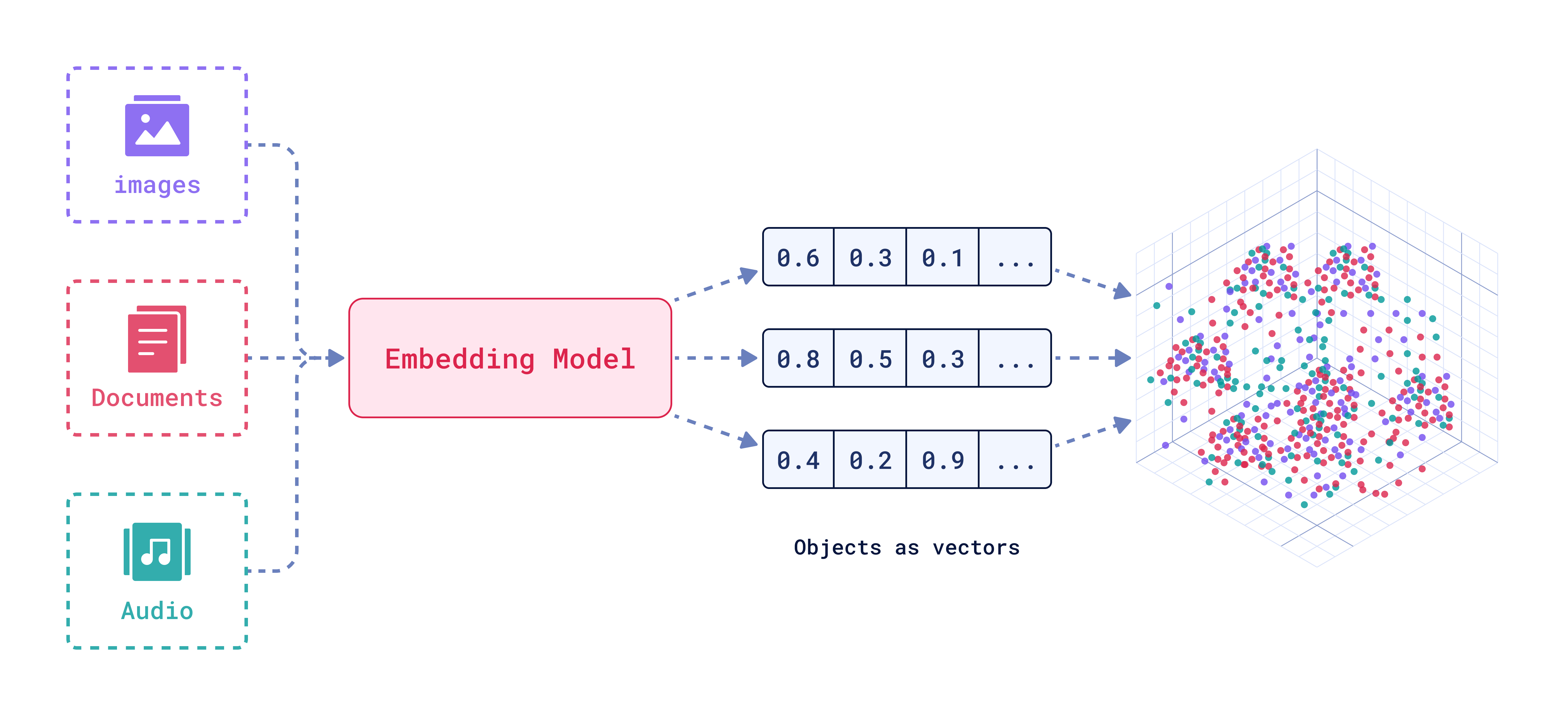

Vector embeddings work the same way: they turn text, images, or audio into lists of numbers (vectors) so AI can measure how close or far apart they are in meaning.
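To make the grocery store map concrete, here is a minimal sketch. The item names and coordinates are made up for illustration (they do not come from any real model), and the `distance` helper is hypothetical.

```python
import math

# Hypothetical 2-D "store map" coordinates, invented for this example.
store_map = {
    "apple":  (1.0, 1.0),
    "banana": (1.5, 1.2),
    "milk":   (6.0, 2.0),
    "soap":   (9.0, 8.0),
}

def distance(a, b):
    """Straight-line (Euclidean) distance between two items on the map."""
    return math.dist(store_map[a], store_map[b])

print(distance("apple", "banana"))  # small: both are fruits
print(distance("milk", "soap"))     # large: unrelated items
```

Real embeddings follow the same idea, only with far more than two coordinates per item.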

How Vector Embeddings Capture Meaning

The beauty of vector embeddings is that they don’t just store data; they capture relationships between concepts.

Look at the left side of the image:

If you take the vector for "King", subtract "man", then add "woman", you land very close to the vector for "Queen".

This means the embedding space has learned that gender relationships (man ↔ woman) are consistent in meaning and direction.
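The King and Queen arithmetic can be sketched with toy numbers. Everything here is an assumption for illustration: the two coordinates stand for "royalty" and "gender", the values are hand-picked, and `analogy` is a hypothetical helper. In a trained model the result only lands near "Queen", not exactly on it.

```python
import math

# Hand-picked toy vectors: (royalty, gender). Not from a real model.
words = {
    "king":  (0.9, 0.9),
    "queen": (0.9, -0.9),
    "man":   (0.1, 0.9),
    "woman": (0.1, -0.9),
    "apple": (-0.8, 0.0),
}

def analogy(a, b, c):
    """Compute a - b + c, then return the closest remaining word."""
    target = tuple(x - y + z for x, y, z in zip(words[a], words[b], words[c]))
    # Skip the three input words so the answer is a new word.
    candidates = [w for w in words if w not in (a, b, c)]
    return min(candidates, key=lambda w: math.dist(words[w], target))

print(analogy("king", "man", "woman"))  # prints "queen"
```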

On the right side:

Words like "Good" and "Awesome" are very close in the vector space because they share a similar positive sentiment.

Similarly, "Bad" and "Worst" sit close together, representing negative sentiment.
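One common way to measure "closeness" between two vectors is cosine similarity, which compares the direction they point in. The sketch below uses invented 2-D sentiment vectors; the numbers are assumptions chosen so that positive and negative words point in opposite directions.

```python
import math

def cosine_similarity(a, b):
    """Return a value near 1.0 for similar directions, negative for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented toy vectors, not output from a real embedding model.
sentiment = {
    "good":    (0.8, 0.3),
    "awesome": (0.9, 0.4),
    "bad":     (-0.7, 0.2),
    "worst":   (-0.9, 0.3),
}

print(cosine_similarity(sentiment["good"], sentiment["awesome"]))  # close to 1
print(cosine_similarity(sentiment["bad"], sentiment["worst"]))     # close to 1
print(cosine_similarity(sentiment["good"], sentiment["bad"]))      # negative
```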

Why This Matters

This ability to capture relationships makes vector embeddings powerful for:

Semantic search (finding related meanings, not just matching words)

Recommendation engines (grouping similar items together)

Natural language understanding (recognizing context and tone)

In short, vector embeddings give AI a map of meaning, so instead of just memorizing words, it can work with the relationships between them.

How Vector Embeddings Work (Step-by-Step)

1. Input Anything

Images 🖼️

Documents 📄

Audio 🎵

2. Embedding Model

The model converts each input into a vector (a list of numbers like [0.6, 0.3, 0.1, ...]). Real vectors usually hold hundreds or thousands of numbers, and together they capture meaning in mathematical form.

(Figure: how embeddings convert data into numeric vectors.)
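To show the shape of Step 2 (text in, fixed-length list of numbers out), here is a deliberately simple stand-in. It is not a real embedding model: `toy_embed` and `VOCAB` are hypothetical names, and the function only counts words from a tiny vocabulary, so it does not capture meaning the way a trained model does.

```python
# Tiny made-up vocabulary; each word owns one position in the vector.
VOCAB = ["sunset", "beach", "mountain", "song", "travel"]

def toy_embed(text):
    """Turn text into a fixed-length vector of word counts (a stand-in only)."""
    tokens = text.lower().split()
    return [float(tokens.count(word)) for word in VOCAB]

print(toy_embed("Sunset at the beach"))  # [1.0, 1.0, 0.0, 0.0, 0.0]
```

A trained model replaces the counting with a learned function, but the contract is the same: every input comes out as a vector of the same length.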

3. Store in Vector Database

Once converted, these vectors are stored in a special database built for fast similarity search.

(Figure: how these vectors are stored and used for searching.)
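Step 3 can be pictured as a dictionary that maps each item to its vector. The `TinyVectorStore` class below is a hypothetical in-memory sketch, not the API of any real vector database, and the vectors are invented. Real vector databases typically add indexes on top so that similarity search stays fast across millions of items.

```python
class TinyVectorStore:
    """A minimal in-memory stand-in for a vector database."""

    def __init__(self, dimensions):
        self.dimensions = dimensions
        self.items = {}  # item id -> vector

    def add(self, item_id, vector):
        # Every stored vector must have the same length to be comparable.
        if len(vector) != self.dimensions:
            raise ValueError(f"expected {self.dimensions} numbers, got {len(vector)}")
        self.items[item_id] = list(vector)

store = TinyVectorStore(dimensions=3)
store.add("sunset.jpg", [0.9, 0.1, 0.0])
store.add("report.pdf", [0.1, 0.8, 0.2])
store.add("song.mp3", [0.0, 0.2, 0.9])
print(len(store.items))  # 3
```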

4. Search by Meaning, Not Exact Words

When you search for something (“sunset at beach”), the system finds items with similar vectors, even if the words don’t exactly match.
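Here is a rough sketch of Step 4: rank stored items by how similar their vectors are to the query vector. The file names, the vectors, and the `search` helper are all assumptions for illustration. In a real system the query vector would come from the same embedding model that produced the stored vectors.

```python
import math

def cosine_similarity(a, b):
    """Compare the direction of two vectors; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented vectors for three stored items.
stored = {
    "beach_sunset.jpg":  [0.9, 0.8, 0.1],
    "ocean_at_dusk.jpg": [0.8, 0.9, 0.2],
    "tax_form.pdf":      [0.0, 0.1, 0.9],
}

def search(query_vector, k=2):
    """Return the names of the k items most similar to the query."""
    ranked = sorted(
        stored,
        key=lambda name: cosine_similarity(query_vector, stored[name]),
        reverse=True,
    )
    return ranked[:k]

query = [0.9, 0.9, 0.0]  # hypothetical vector for "sunset at beach"
print(search(query))  # ['beach_sunset.jpg', 'ocean_at_dusk.jpg']
```

Note that "ocean_at_dusk.jpg" is returned even though its name shares no words with the query; only the vectors are compared.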

Real-Life Examples

Chatbots: Understand similar questions, even if worded differently.

Search Engines: Show results related to meaning, not just exact keywords.

Recommendation Systems: Suggest songs, articles, or videos based on similarity.

Final Takeaway

Vector embeddings are like giving AI a map of meaning: every word, image, or sound gets its own spot, and closeness means similarity.

Just like a grocery store map groups similar items together (fruits with fruits, soaps with soaps), embeddings cluster related concepts in “meaning space.”

This means AI can:

Recognize relationships (King - man + woman ≈ Queen)

Understand sentiment (Awesome ≈ Good, Bad ≈ Worst)

Search by meaning instead of just keywords

Make smarter recommendations

In short, vector embeddings let machines understand relationships instead of just memorizing data, enabling smarter search, better recommendations, and deeper context awareness.

💬 Follow more of my work on LinkedIn: https://www.linkedin.com/in/manojofficialmj/

📌 Hashtags: #chaicode #chaiaurcode

Written by

MANOJ KUMAR

Haan Ji, I am Manoj Kumar, a product-focused Full Stack Developer passionate about crafting and deploying modern web apps, SaaS solutions, and Generative AI applications using Node.js, Next.js, databases, and cloud technologies. I have delivered 10+ real-world projects, including AI-powered tools and business applications.