Want to Be AI Familiar? Here’s What You Should Know in 2025

Yash Shirsath

Table of contents

- 1. LLM, Prompt, Some Popular AI Models

- 2. RAG, CAG, Agentic RAG, VectorDB, Chunking, Token

- 3. Context, Memory, Function Calling

- 4. Model Parameters, Quantization, Integration through Model API

- 5. Hugging Face, Ollama, LangChain, LangGraph

- 6. Bolt, Cursor, Continue, Windsurf, RooCode, and Cline

- 7. Model Context Protocol

Artificial Intelligence isn’t just about ChatGPT anymore. The ecosystem surrounding AI is vast and continually evolving, encompassing tools, frameworks, concepts, and workflows that make AI more practical in real world applications.

If you’re starting your AI journey and often feel lost, here’s a beginner friendly guide to the things you must know.

1. LLM, Prompt, Some Popular AI Models

Think of an LLM (Large Language Model) as a super smart text engine. It’s trained on huge amounts of text (books, articles, websites) and learns how to understand and generate human-like language.

💡 Example:- When you ask it a question, it predicts the next best word to reply with, like autocomplete, but way smarter.

A prompt is simply the input you give to a model such as ChatGPT or Gemini: your question, instruction, or request.

The better your prompt, the better the answer you get.

💡 Example:-

Vague Prompt - “Tell me about AI.”

Better Prompt - “Explain AI in 3 sentences for a 10-year-old, with an example.”
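One way to make "better prompts" a habit is to fill in a small template instead of typing freehand. The sketch below is only an illustration: `build_prompt` and its parameters are made-up names, not part of any library.

```python
def build_prompt(topic, sentences, audience):
    """Turn a vague request into a specific one by stating length, audience, and format."""
    return (
        f"Explain {topic} in {sentences} sentences "
        f"for {audience}, with one example."
    )

vague_prompt = "Tell me about AI."
better_prompt = build_prompt("AI", 3, "a 10-year-old")
print(better_prompt)
```

The point is not the function itself but the habit: say how long, for whom, and in what form you want the answer.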

GPT-4 (OpenAI) → Great at reasoning.

Claude (Anthropic) → Very safe and good with long context.

Gemini (Google) → Strong at multimodal (text + image).

Llama (Meta) → Openly available weights you can download and run yourself.

DeepSeek → China’s rising star in AI models.

2. RAG, CAG, Agentic RAG, VectorDB, Chunking, Token

RAG (Retrieval Augmented Generation):- Your AI doesn’t just “guess” from memory, it searches relevant documents and gives smarter answers. (Like GPT with Google built in)

Imagine answering a history exam:

Without RAG → You rely only on your memory.

With RAG → You’re allowed to quickly search your history textbook before answering.
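The "open the textbook first" idea can be sketched in a few lines. This is a toy version, assuming a tiny in-memory list of documents and plain word overlap for retrieval; real RAG systems use embeddings and a vector database, and every name here (`DOCUMENTS`, `retrieve`, `build_rag_prompt`) is made up for illustration.

```python
import re

# A tiny "textbook" the model is allowed to look things up in.
DOCUMENTS = [
    "The French Revolution began in 1789.",
    "The Battle of Plassey was fought in 1757.",
    "The printing press was introduced in Europe around 1440.",
]

def words(text):
    """Lowercase the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, k=1):
    """Rank documents by how many words they share with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]

def build_rag_prompt(question, docs):
    """Put the retrieved text in front of the question before sending it to a model."""
    context = "\n".join(retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_rag_prompt("When did the French Revolution begin?", DOCUMENTS)
print(prompt)
```

The model never sees the whole textbook, only the passage that looks most relevant to the question.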

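CAG (Context Augmented Generation):- A method where the AI uses extra context (documents, chat history, knowledge base, etc.) to generate better, more relevant answers. (You will also see CAG expanded as Cache Augmented Generation, where the documents are preloaded into the model’s context instead of being retrieved per question.)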

In simple terms:- Retrieve docs + maintain conversation reasoning/memory → Generate better multi-turn dialogue.

Agentic RAG:- Next level RAG. The AI doesn’t only retrieve info; it can also take actions (like booking a ticket, running code, or querying APIs) while using RAG.

Eg. It doesn’t stop at just answering. It can plan multiple steps, query different tools, verify its answer, and iterate until it produces a high quality, context-aware response.

VectorDB (Vector Database):- Special database for AI memory. Instead of storing data in rows/columns, it stores meaning (embeddings), so the AI can understand similarity (e.g., “car” ≈ “automobile”).

Eg. Whenever you use AI (like ChatGPT or image recognition), your input (text/image) is converted into a vector: a list of numbers that captures the meaning or features of that data.
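To see how "similar meaning" becomes "similar numbers", here is a minimal sketch using cosine similarity. The three-number embeddings are invented for illustration (real embeddings have hundreds or thousands of dimensions and come from a model), and `nearest` is a hypothetical helper, not a vector database API.

```python
import math

# Hypothetical 3-dimensional embeddings, made up for illustration.
EMBEDDINGS = {
    "car": [0.9, 0.1, 0.0],
    "automobile": [0.85, 0.15, 0.05],
    "banana": [0.0, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Return 1.0 for vectors pointing the same way, near 0 for unrelated ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word, store):
    """Find the stored word whose vector is most similar to the given word's vector."""
    query = store[word]
    others = {w: v for w, v in store.items() if w != word}
    return max(others, key=lambda w: cosine_similarity(query, others[w]))

print(nearest("car", EMBEDDINGS))  # automobile
```

A vector database does the same kind of lookup, just over millions of vectors and with indexes that make the search fast.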

Chunking:- Breaking big text into smaller, digestible pieces so AI can search & answer better. Like cutting a big pizza into slices.
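Slicing the pizza can look like this in code. It is a rough sketch that splits by words and lets neighbouring chunks overlap a little so a sentence cut at a boundary is not lost; the function name and the default sizes are assumptions, and real pipelines often chunk by tokens, sentences, or headings instead.

```python
def chunk_words(text, chunk_size=5, overlap=2):
    """Split text into chunks of `chunk_size` words; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    tokens = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(tokens):
            break
    return chunks

chunks = chunk_words("one two three four five six seven eight", chunk_size=5, overlap=2)
print(chunks)  # ['one two three four five', 'four five six seven eight']
```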

Tokens:- AI’s “word currency.” Models don’t read text as whole words; they read tokens, which can be whole words or smaller word pieces. Eg - “What is your name” → “What” + “is” + “your” + “name”. More tokens = more cost & slower response.
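Here is a very rough way to get a feel for token counts and cost. It is not a real tokenizer: it simply splits on words and punctuation, whereas actual models use subword tokenizers that may split a long word into several tokens. The price passed to `estimate_cost` is a made-up number.

```python
import re

def rough_tokens(text):
    """Very rough stand-in for a tokenizer: splits into words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def estimate_cost(text, price_per_1k_tokens):
    """Cost grows linearly with token count. The price is a hypothetical parameter."""
    return len(rough_tokens(text)) / 1000 * price_per_1k_tokens

tokens = rough_tokens("What is your name?")
print(tokens)       # ['What', 'is', 'your', 'name', '?']
print(len(tokens))  # 5
```

Longer prompts and longer answers both add tokens, which is why trimming unnecessary text saves money and time.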

3. Context, Memory, Function Calling

When we chat with an AI like ChatGPT, sometimes it feels like magic, right? But behind the scenes, some key concepts make these interactions powerful and useful. Let’s explore.

Context - Context is the information the AI keeps in mind during a conversation.

Eg. If you ask “What’s the weather in Kalyan today?” and then follow up with “And tomorrow?”, the AI understands that “tomorrow” still refers to Kalyan because of the conversation’s context.
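Under the hood, context is usually just the list of earlier messages that gets sent along with your new one. The sketch below assumes the common role/content message shape used by many chat APIs; `add_turn` is a made-up helper and the assistant reply is a placeholder.

```python
def add_turn(history, role, content):
    """Return a new history with one more message appended."""
    return history + [{"role": role, "content": content}]

history = []
history = add_turn(history, "user", "What's the weather in Kalyan today?")
history = add_turn(history, "assistant", "(model reply about Kalyan's weather)")
history = add_turn(history, "user", "And tomorrow?")

# The whole list, not just the last message, is what gets sent to the model.
# That is how "And tomorrow?" can still be understood as a question about Kalyan.
for message in history:
    print(message["role"], "->", message["content"])
```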

Memory - It allows the AI to remember things from past conversations with you, not just within a single chat.

Eg. If last week you told the AI, “My name is Boeing 777-300 ER”, and this week you ask, “Who am I?”, the AI with memory could reply “You’re Boeing 777-300 ER!”.
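Memory differs from context because it has to survive after the chat ends. A minimal sketch, assuming facts are saved to a JSON file between sessions (the `SimpleMemory` class and the file location are invented for this example; real products use databases and decide for themselves what is worth remembering):

```python
import json
import os
import tempfile

class SimpleMemory:
    """Stores facts in a JSON file so they survive across separate chats."""

    def __init__(self, path):
        self.path = path

    def _load(self):
        if not os.path.exists(self.path):
            return {}
        with open(self.path, encoding="utf-8") as f:
            return json.load(f)

    def remember(self, key, value):
        facts = self._load()
        facts[key] = value
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(facts, f)

    def recall(self, key, default=None):
        return self._load().get(key, default)

memory_file = os.path.join(tempfile.mkdtemp(), "memory.json")

# Last week's chat: a fresh object writes the fact to disk.
SimpleMemory(memory_file).remember("user_name", "Boeing 777-300 ER")

# This week's chat: a different object reads it back.
recalled = SimpleMemory(memory_file).recall("user_name")
print(f"You're {recalled}!")
```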

Function Calling - AI can be connected to external functions (mini programs) to get real time answers.

Eg. You ask the AI, “Book me a flight to Bangkok tomorrow night.” The AI doesn’t just guess; it calls a flight booking function, checks available flights, and gives you actual options.
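In practice the model does not run anything itself. It outputs a structured request naming a function and its arguments, and your program executes it. The sketch below assumes the model returns that request as JSON; `search_flights`, the flight data, and the JSON shape are all hypothetical.

```python
import json

def search_flights(destination, date):
    """Hypothetical stand-in for a real flight search API."""
    return [{"flight": "XX123", "to": destination, "date": date, "departs": "21:30"}]

# The functions the model is allowed to ask for, looked up by name.
TOOLS = {"search_flights": search_flights}

def handle_tool_call(tool_call_json):
    """Parse the model's request and run the matching Python function."""
    call = json.loads(tool_call_json)
    func = TOOLS[call["name"]]
    return func(**call["arguments"])

# What a model might emit instead of a plain-text answer (made up for this example).
model_output = '{"name": "search_flights", "arguments": {"destination": "Bangkok", "date": "tomorrow"}}'
options = handle_tool_call(model_output)
print(options)
```

The result is then passed back to the model, which turns it into a normal sentence for the user.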

4. Model Parameters, Quantization, Integration through Model API

Model Parameters - Think of parameters as the “knowledge knobs” of an AI model. A small model may have 7B parameters (7 billion knobs). The largest models, such as GPT-4, are believed to have far more, though OpenAI has not published an official count.

More parameters = more capacity to understand language, but also more computing power required.

Eg. A toy car has a few parts → easy to run but limited. A Tesla has thousands of parts → more complex but powerful.

Quantization - AI models are huge and often too heavy to run on normal laptops or phones. Quantization is like compressing a large video into a smaller file without losing too much quality.

It stores the model’s numbers at lower precision, which reduces the model’s memory size and makes it faster and cheaper to run. Accuracy sometimes drops a little, but the trade-off is accessibility.
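The memory saving is simple arithmetic: number of parameters times bits per parameter. The sketch below counts the weights only, so treat the results as a lower bound; actual usage is higher because of activations and runtime overhead. The function name is made up for this example.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

for bits in (32, 16, 8, 4):
    print(f"7B model at {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

For a 7B model this prints 28.0 GB at 32-bit, 14.0 GB at 16-bit, 7.0 GB at 8-bit, and 3.5 GB at 4-bit.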

Eg. Running a 7B model at 16-bit precision → needs a GPU with 16GB+ of memory.

Running it quantized to 4-bit → can run on your 8GB laptop.

Integration through Model API - You don’t always need to host the AI model yourself. Companies like OpenAI, Anthropic, and Google let you access their models through APIs. It’s simple: you send text and get the AI’s response. This saves you from managing servers, GPUs, or scaling issues.

Eg. You don’t need to bake a cake from scratch every time.

Instead, you order from Swiggy/Zomato (API) → cake delivered in minutes.
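Placing the "order" is just an HTTP request. The sketch below builds (but does not send) a request in the shape of OpenAI's chat completions endpoint, using only the standard library. The model name and the `YOUR_API_KEY` placeholder are assumptions; other providers use different URLs and payloads, so check their docs.

```python
import json
import urllib.request

def build_chat_request(api_key, user_text, model="gpt-4o-mini"):
    """Build a POST request carrying the user's text; nothing is sent yet."""
    payload = {"model": model, "messages": [{"role": "user", "content": user_text}]}
    return urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

request = build_chat_request("YOUR_API_KEY", "Explain AI in 3 sentences.")
print(request.full_url)

# To actually send it, you would call urllib.request.urlopen(request) with a real key.
```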

5. Hugging Face, Ollama, LangChain, LangGraph

Hugging Face - It’s like the GitHub for AI models. It’s a platform where developers, researchers, and companies share and use pretrained AI/ML models.

Eg. 1. You want to use a sentiment analysis model. Instead of training one from scratch, you can simply download it from Hugging Face’s Model Hub.

2. It also provides the Transformers library (a Python package) to easily use models like BERT, GPT, T5, etc.

LLaMA (by Meta AI) - Meta’s family of open-weight LLMs (similar to GPT, and often described as open source). Researchers love it because they can finetune it for custom use cases without needing the massive compute of an OpenAI or Google.

Eg. LLaMA 2 and LLaMA 3 are available on Hugging Face.

If you want to build a chatbot for your website, you can finetune LLaMA with your company’s documents and integrate it with APIs.

LangChain - Framework to build apps with LLMs (connect models, tools, memory).

Eg. Use GPT + SQL DBs → “Show me top 10 customers by revenue.”

LangGraph - A newer framework for building agentic AI systems (AI agents that can plan, act, and loop until they achieve a goal).

Eg. An AI travel agent that can Plan → Search flights → Compare → Book → Confirm.
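The Plan → Search → Compare → Book → Confirm flow is really a small graph where each step decides which step runs next. The sketch below shows that idea in plain Python; it does not use the LangGraph API, and the node functions and flight data are invented for illustration.

```python
def plan(state):
    state["steps_done"].append("plan")
    return "search"

def search(state):
    # Hypothetical flight data; a real agent would call a search tool here.
    state["flights"] = [{"id": "A1", "price": 320}, {"id": "B2", "price": 280}]
    state["steps_done"].append("search")
    return "compare"

def compare(state):
    state["choice"] = min(state["flights"], key=lambda f: f["price"])
    state["steps_done"].append("compare")
    return "book"

def book(state):
    state["booked"] = state["choice"]["id"]
    state["steps_done"].append("book")
    return "confirm"

def confirm(state):
    state["steps_done"].append("confirm")
    return None  # Returning None ends the run.

NODES = {"plan": plan, "search": search, "compare": compare, "book": book, "confirm": confirm}

def run(start, state, max_steps=10):
    """Follow the graph node by node, with a step limit so a loop cannot run forever."""
    node = start
    for _ in range(max_steps):
        if node is None:
            break
        node = NODES[node](state)
    return state

result = run("plan", {"steps_done": []})
print(result["steps_done"], "booked:", result["booked"])
```

Because every node returns the name of the next node, adding a retry loop is as simple as having a node return an earlier node's name.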

LangFlow - A no-code / low-code drag-and-drop interface built on top of LangChain. It helps you design, visualize, and run LLM workflows without writing much code.

Eg. Build a chatbot by dropping nodes: Input → LLM → Output (no coding required).

6. Bolt, Cursor, Continue, Windsurf, RooCode, and Cline

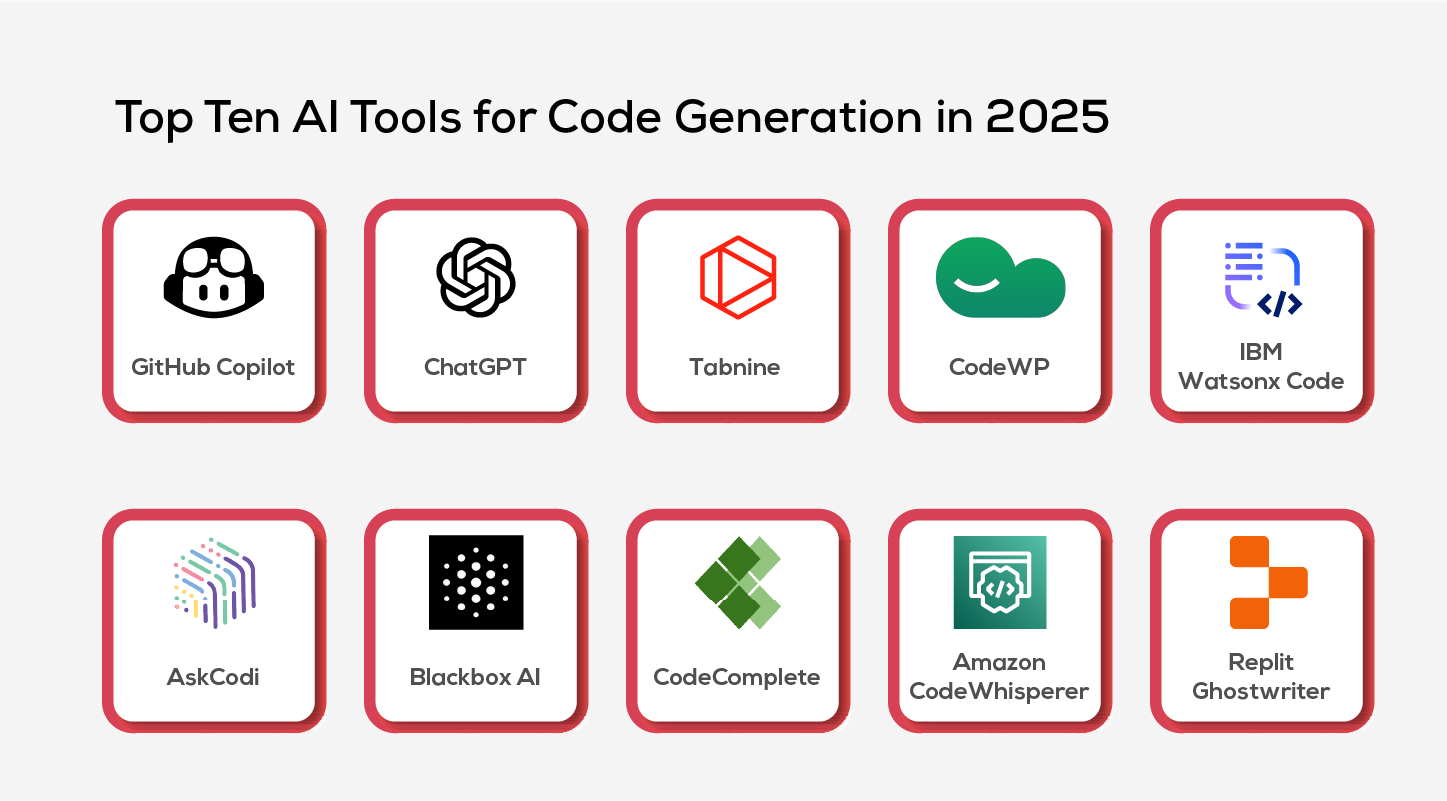

These are AI development tools for coding, debugging, and automating programming tasks. Think of them as supercharged copilots inside your coding environment.

Bolt.new - A lightweight, web-based AI code editor. You can open it in the browser, paste a repo, and chat with AI to fix or generate code.

Eg. Upload your Python project → ask “add a login page with Flask” → AI writes code directly.

Cursor - A VS Code like editor powered by LLMs such as GPT and Claude. It helps autocomplete, debug, and explain code inside the IDE.

Eg. While writing SQL queries, it auto-suggests joins or fixes syntax.

Continue - An open source alternative to GitHub Copilot. It works with LLMs like GPT, Claude, or Llama to help in your own VS Code/JetBrains.

Eg. Typing def factorial(n): in Python → Continue suggests the full recursive function.

Windsurf - Focuses on collaborative coding with AI. Designed for pair programming with AI agents.

Eg. AI acts like a teammate → you assign tasks like “refactor the data pipeline” and it updates code.

RooCode - An AI coding agent for VS Code that can handle large codebases, good for startups building apps quickly.

Eg. Import a whole project → ask “migrate backend from SQLite to PostgreSQL” → RooCode suggests structured changes.

Cline - An AI agent inside your editor (supports multistep tasks), great for running autonomous coding workflows.

7. Model Context Protocol

In simple terms, a common language/protocol that lets all AI models use tools, APIs, and data in the same way.

The Model Context Protocol (MCP) is an open standard designed to act as a universal language for AI models and applications. Think of it as a universal translator that lets different AI tools talk to each other seamlessly, regardless of their original company or platform.

Its main purpose is to solve the problem of fragmentation in the AI ecosystem. Currently, every large language model (LLM) has its own way of receiving data and context. This means if a developer builds an application for OpenAI’s GPT-4, they often have to rewrite the integration code to make it work with Anthropic’s Claude or Google’s Gemini.

Think of MCP like a “universal charger” but for AI.

Every phone used to have a different charger. Then USB-C came, and now one charger works everywhere. Similarly, every AI model (ChatGPT, Claude, LangChain apps, etc.) used to have different ways to connect to tools, databases, or APIs.

MCP is the USB-C for AI tools. It gives AI models one standard way to get real-time data, call APIs, use databases or memory, and work with plugins.

Use Cases -

Finance bot → Model uses MCP to fetch live stock prices from Yahoo Finance API.

Company chatbot → Model uses MCP to query SQL database for employee info.

Coding assistant → Model uses MCP to access GitHub repos and run code.
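To make the finance bot case concrete: MCP messages are JSON-RPC 2.0, and a tool is invoked with a `tools/call` request. The sketch below builds such a request and answers it with a toy handler. The tool name `get_stock_price`, the ACME price, and the handler are all made up, and a real MCP server also handles initialization, tool listing, and errors, which are skipped here.

```python
import json

def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

def get_stock_price(symbol):
    """Hypothetical tool with made-up data; a real one would query a finance API."""
    prices = {"ACME": 123.45}
    return prices[symbol]

MCP_TOOLS = {"get_stock_price": get_stock_price}

def handle(request):
    """Toy server side: run the named tool and wrap the result as text content."""
    params = request["params"]
    value = MCP_TOOLS[params["name"]](**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": str(value)}]},
    }

mcp_request = make_tool_call(1, "get_stock_price", {"symbol": "ACME"})
mcp_response = handle(mcp_request)
print(json.dumps(mcp_response, indent=2))
```

Because the request shape is the same for every tool, the same client code can talk to a stock price server, a SQL server, or a GitHub server.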

If you understand these 7 pillars of AI familiarity, from LLMs and RAG to Vector DBs, agentic workflows, memory & tools, model parameters & APIs, and orchestration frameworks like LangChain, you already hold the key to building smart AI systems.

In Short - These 7 things make you Thala for the Reason, sorry I mean AI ready.

Written by

Yash Shirsath

💼 Sr. Executive IT@USFBL 📊 Microsoft Certified (AI-900, DP-900) | Oracle APEX Cloud Dev (1Z0-771) ⚡ Core Competencies: Business & Data Analytics, SQL & Python, Tableau, Power BI, Qlik, Excel, Machine Learning, Agentic AI, Cloud & Security With hands-on experience in business analytics, AI applications, and cloud computing, I specialize in transforming raw data into actionable insights that drive growth and innovation across various industries. 🔍 Key Areas of Focus:- Business Intelligence & Analytics (Power BI, Tableau, Excel) Cloud (Azure, AWS, Oracle APEX) & Security Best Practices Machine Learning & Agentic AI SQL & Python for Automation & Analytics Passionate about leveraging technology for strategic impact, exploring AI driven innovations for business growth, and sharing knowledge through blogs and open source contributions.