A Developer’s Journey to the Cloud 1: From Localhost to Dockerized Deployment

Arun SD

About This Series

Over the past 8 years, I’ve built and deployed a variety of applications, each with its own unique set of challenges, lessons, and occasionally, hard-earned scars. Instead of presenting those experiences as isolated technical write-ups, I’ve woven them into a single, continuous narrative: A Developer’s Journey to the Cloud.

While the “developer” in this story is fictional, the struggles, breakthroughs, and aha-moments are all drawn from real projects I’ve worked on, spanning multiple tech stacks, deployment models, and problem domains. Each post captures the why and what behind key decisions and technologies, without drowning in step-by-step tutorials.

Think of it as a cross between a memoir and a guide: part storytelling, part practical insight, narrating the messy, funny, and sometimes painful path of learning to build in the cloud.

That Time I Thought localhost Was Lying to Me

It all starts with that beautiful, electric feeling. The final line of code clicks into place, and your application, your glorious, bug-free masterpiece, purrs to life on localhost. You've tested every button, every form, every feature. It's perfect. All that's left is to share it with the world.

"How hard can that be?" I thought, basking in the glow of my monitor. And so began my glorious, agonizing, and unintentionally hilarious journey into the wild.

The First Launch

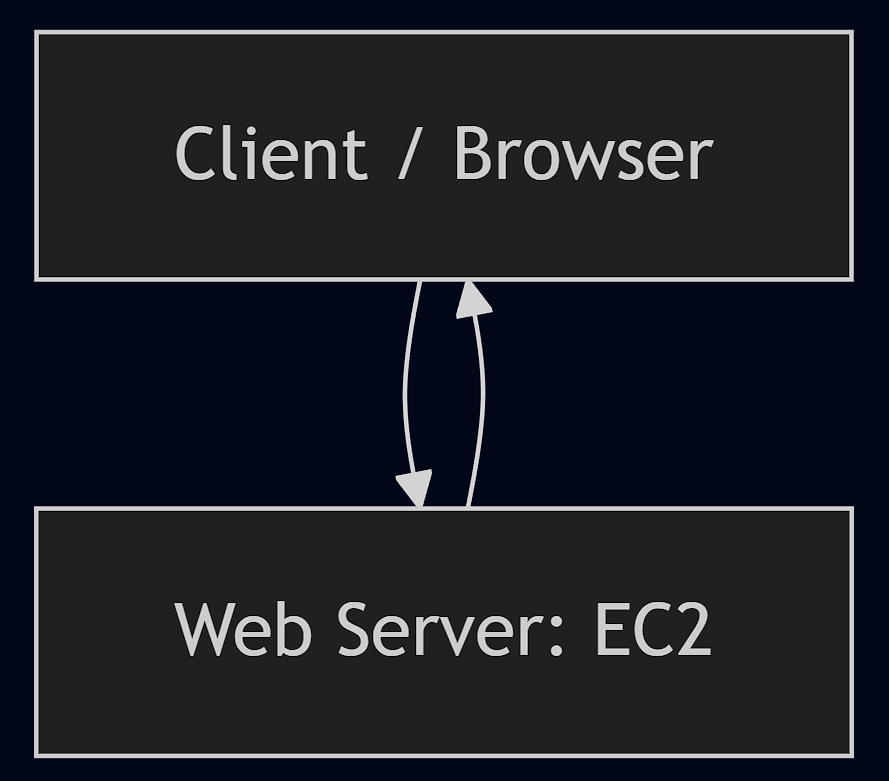

My plan was simple: rent the cheapest virtual server I could find. For a few hundred rupees, I was the proud owner of a blank command line with a public IP address. It felt like being handed the keys to the internet.

With the confidence of someone who had successfully used npm install more than once, I manually installed Node.js and PostgreSQL on the server. Then came the big moment: getting my code onto the server. The process was painfully manual.

First, I prepared my project files locally:

# On my laptop

zip -r my-awesome-app.zip .

Then, I used scp (Secure Copy) to upload the zipped file to the server:

# On my laptop

scp my-awesome-app.zip user@your_server_ip:/home/user/

Finally, I logged into the server to unpack and run everything:

# From my laptop, log in to the server

ssh user@your_server_ip

# On the server

unzip my-awesome-app.zip -d app

cd app

npm install

npm start

I typed the IP address into my browser. It worked. My app was alive. I was, for all intents and purposes, a genius.

The first crack appeared a day later. I found a tiny typo. "Easy fix," I thought. I corrected the text, zipped everything up again, and repeated the entire upload-and-unzip dance. Then I restarted the app.

And the entire thing crashed.

Somehow, in that simple process, something had gone terribly wrong. I had no history, no undo button. It took me an hour of frantic re-uploading to get it back online. I decided the "F" in FTP stood for "fragile."

Getting Smarter, a Little

Okay, no more zip files. I was a professional, and professionals use Git. I SSH'd into my server and set up a "bare" repository, a special repo just for receiving pushes.

# On the server

git init --bare /var/repos/app.git

I configured a hook that would automatically check out the code into my live directory whenever I pushed to it. My deployment process was now a sleek and sophisticated git push production main. I had leveled up.
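The hook itself is not shown in this post. As a rough sketch of what such a setup can look like, the function below writes a post-receive hook into the bare repository. The function name install_deploy_hook and the live directory used in the usage note (/var/www/app) are assumptions for illustration; only the repository path and the main branch come from the text above.

```shell
#!/bin/sh
# Sketch: write a post-receive hook into a bare repository.
# install_deploy_hook is a hypothetical helper, not part of Git.

install_deploy_hook() {
    repo_dir=$1          # absolute path to the bare repo, e.g. /var/repos/app.git
    live_dir=$2          # directory the app is served from (assumed path)
    branch=${3:-main}    # branch that triggers a deployment

    mkdir -p "$repo_dir/hooks" "$live_dir"

    # Unquoted heredoc: $repo_dir, $live_dir and $branch are expanded now,
    # while \$ref is written literally and expanded when the hook runs.
    cat > "$repo_dir/hooks/post-receive" <<EOF
#!/bin/sh
# Git passes one "<old-sha> <new-sha> <ref>" line per updated ref on stdin.
while read -r oldrev newrev ref; do
    if [ "\$ref" = "refs/heads/$branch" ]; then
        git --git-dir="$repo_dir" --work-tree="$live_dir" checkout -f "$branch"
    fi
done
EOF

    # Git only runs hooks that are executable.
    chmod +x "$repo_dir/hooks/post-receive"
}
```

On the server, this could be invoked as install_deploy_hook /var/repos/app.git /var/www/app. After that, a git push production main from the laptop updates the files in the live directory. The hook only checks out code; restarting the app remains a separate step.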

This new system worked beautifully for weeks. I built a major new feature, a complex image upload and processing tool. To get access to some new performance improvements, I developed it locally using the latest version of Node.js. As always, it ran like a dream on my laptop. I pushed the code, and the deployment hook ran. I restarted the app with a confident smirk.

It crashed. Instantly.

The error message was a nightmare. A function I was using simply didn't exist. But... I had just used it. It was right there in my code. I spent the next six hours in a state of pure disbelief.

Then, it hit me. My server, being a server, had been prudently set up with the stable, Long-Term Support (LTS) version of Node.js. My feature, built with the shiny new tools of the "latest" version, was trying to call a function that hadn't been introduced in the "stable" release yet. It was then I understood that the most soul-crushing phrase in our industry, "but it works on my machine," isn't a joke. It's a curse. My code wasn't the problem; the entire universe it was running in was fundamentally, fatally different.
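A version mismatch like this can be caught before the app is restarted. The following is a minimal sketch, assuming the project knows the lowest Node.js major version it needs; the function names and the version number in the usage note are hypothetical.

```shell
#!/bin/sh
# Sketch: compare the Node.js major version on a machine with a required minimum.

# Print the major version from strings such as "v18.17.1" or "20.5.0".
node_major() {
    version=${1#v}                    # drop a leading "v" if present
    printf '%s\n' "${version%%.*}"    # keep everything before the first dot
}

# Succeed only if the given version's major number is at least the required one.
node_is_new_enough() {
    required_major=$1
    actual_major=$(node_major "$2")
    [ "$actual_major" -ge "$required_major" ]
}
```

A deploy step could then run something like node_is_new_enough 20 "$(node --version)" || exit 1 and refuse to continue on an older runtime. This only detects the difference; it does nothing to make the two environments the same.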

Shipping the Entire Universe

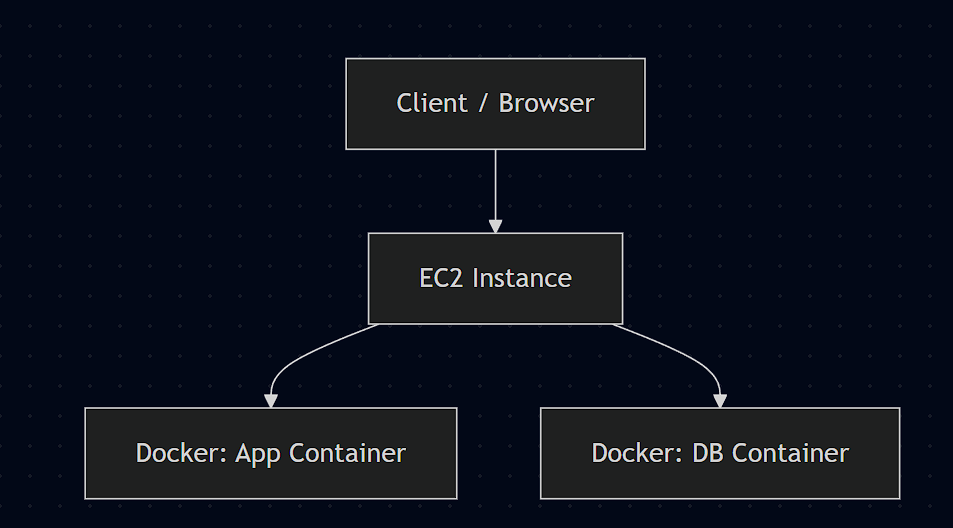

Defeated, I started searching for answers, and I kept stumbling upon the same word: Docker. The promise was simple: what if, instead of just shipping your code, you could ship your code's entire world along with it?

The idea was to define your exact environment in a text file, a Dockerfile. This file acts as a blueprint to create a "container," a lightweight, standardized box holding your app and its perfect environment.

I spent a weekend tinkering. My Dockerfile looked something like this:

# Dockerfile

# Use the 'latest' Node.js version we need

FROM node:latest

# Set the working directory in the container

WORKDIR /app

# Copy package files and install dependencies

COPY package*.json ./

RUN npm install --omit=dev

# Copy the rest of our application code

COPY . .

# Expose the port and start the server

EXPOSE 3001

CMD [ "node", "index.js" ]

To run my database alongside my app and manage secrets properly, I created a docker-compose.yml file and a separate .env file for my credentials.

This is the .env file, which should never be committed to Git:

# .env

# Database credentials

POSTGRES_USER=myuser

POSTGRES_PASSWORD=mysecretpassword

POSTGRES_DB=myapp_db
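One way to keep the .env file out of Git is to list it in .gitignore. The helper below is a small sketch of that idea; the function name ignore_env_file is made up for illustration, and it assumes the .gitignore lives at the project root.

```shell
#!/bin/sh
# Sketch: make sure ".env" is listed in a .gitignore file, without adding it twice.

ignore_env_file() {
    gitignore=${1:-.gitignore}
    touch "$gitignore"

    # If the file does not end with a newline, add one so the new entry
    # is not glued onto the last existing line.
    if [ -s "$gitignore" ] && [ -n "$(tail -c 1 "$gitignore")" ]; then
        printf '\n' >> "$gitignore"
    fi

    # -x matches whole lines only, -F treats ".env" as a fixed string.
    if ! grep -qxF '.env' "$gitignore"; then
        printf '%s\n' '.env' >> "$gitignore"
    fi
}
```

After running ignore_env_file in the project root, git check-ignore -q .env exits with status 0 if Git treats the file as ignored. If .env was already committed earlier, ignoring it is not enough; it also has to be removed from the index with git rm --cached .env.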

And this is the `docker-compose.yml` that uses the .env file:

# docker-compose.yml
version: '3.8'

services:
  app:
    build: .
    ports:
      - "3001:3001"
    depends_on:
      - db
    # Load environment variables from the .env file
    env_file:
      - .env
    # Pass necessary variables to the app container
    environment:
      PGHOST: db
      PGUSER: ${POSTGRES_USER}
      PGPASSWORD: ${POSTGRES_PASSWORD}
      PGDATABASE: ${POSTGRES_DB}
      PGPORT: 5432

  db:
    image: postgres:13
    # Use the same .env file to configure the database
    env_file:
      - .env
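Both services read the same .env file, so a key missing from it affects the app and the database alike. As a hedged sketch, a small preflight check can list which expected keys are absent before the stack is started. The function name missing_env_keys is hypothetical; the key names are the ones from the .env file above.

```shell
#!/bin/sh
# Sketch: print every expected key that has no KEY=value line in an env file.

missing_env_keys() {
    env_file=$1
    shift
    for key in "$@"; do
        # A key counts as present if some line starts with "KEY=".
        # Errors (such as a missing file) are silenced, so every key is reported.
        grep -q "^${key}=" "$env_file" 2>/dev/null || printf '%s\n' "$key"
    done
}
```

Running missing_env_keys .env POSTGRES_USER POSTGRES_PASSWORD POSTGRES_DB prints nothing when all three keys are defined, and prints the name of each missing key otherwise. It checks only that a key exists, not that its value is sensible.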

I ran docker-compose up on my laptop. It worked. But this was the real test. I installed Docker on my server, copied my project over (including the .env file), and ran the exact same command.

And it just... worked. No errors. No version conflicts. No drama.

It wasn't magic. It was simply that for the first time, the environment on my server was not just similar to my laptop's; it was identical. I hadn't just deployed my code. I had shipped its entire universe in a box, and it didn't care where it was opened.

That's when it clicked. The goal was never just to get the code onto a server. It was to get a predictable, repeatable result, every single time. And my journey, I realized, was just getting started.

Our code is now safe, but our data is living dangerously. Let's fix that.

Stay tuned for the next post: A Developer’s Journey to the Cloud 2: Managed Databases & Cloud Storage.
