From Virtual Machines to Containers

Omkar Hase

Introduction

In the modern world of software development, one of the biggest challenges is making sure applications run the same way everywhere, whether on a developer’s laptop, a testing server, or in the cloud. Chances are you have heard (or said) this at least once: “It works on my machine, but not on yours.” Traditionally, differences in operating systems, libraries, and configurations often caused apps to behave differently or even break.

This is where containers and the concept of containerization come in. Containers are lightweight, portable packages that bundle an application along with everything it needs to run—libraries, dependencies, and settings—so it behaves consistently in any environment.

Docker is the platform that made containers popular. It provides simple tools to build, ship, and run containers efficiently, helping developers and companies deploy applications faster, more reliably, and at scale.

🔹 What is a Container?

Containerization is a lightweight virtualization technology that lets you package an application and everything it needs (code, libraries, dependencies, runtime, configuration files) into a single unit called a container.

Think of it like this:

👉 Instead of worrying about “will this app run on my friend’s laptop or on the company’s server?”, containerization ensures the app runs the same way everywhere, because the environment is bundled along with the app.

👉 In simple terms:

Think of a container like a lunchbox (tiffin box) 🥡. You pack your food (application) along with everything it needs (spoons, napkins, side dishes = dependencies).

No matter where you carry the lunchbox (home, office, or park), it’s always ready to eat. Similarly, containers help your application run the same way everywhere.

Unlike virtual machines, containers don’t need their own full operating system. They share the host OS kernel, which makes them much lighter, faster to start, and more efficient.
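Want to see the shared kernel for yourself? Here is a small Python sketch (my own illustration, not part of any Docker tooling) that prints what the kernel reports next to what the filesystem provides. Assuming a Linux host, run it once directly on the host and once inside a container: the `kernel_release` line should match, because the container has no kernel of its own, while `distro` and `python_version` can differ, because they come from the container image. The file name `env_check.py` used below is just an example.

```python
import platform


def describe_environment() -> dict:
    """Collect what the kernel reports vs. what the userland files provide."""
    info = {
        # Reported by the kernel: identical on a Linux host and its containers.
        "kernel_release": platform.release(),
        "machine": platform.machine(),  # CPU architecture, e.g. "x86_64"
        # Provided by the filesystem: can differ from one container image to another.
        "python_version": platform.python_version(),
        "distro": "unknown",
    }
    try:
        # Python 3.10+: parses /etc/os-release on Linux distributions.
        info["distro"] = platform.freedesktop_os_release().get("PRETTY_NAME", "unknown")
    except (AttributeError, OSError):
        pass  # older Python, or not a Linux system
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key:15} {value}")
```

For example, with Docker installed, `docker run --rm -v "$PWD":/app python:3.12-slim python /app/env_check.py` runs the same script inside a container. On Docker Desktop for macOS or Windows, containers run inside a lightweight Linux VM, so the kernel you see there is that VM's kernel, not your laptop's.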

🔹 What Was the Problem Before Containers?

Now, let’s rewind a little. Before containers, deploying applications was messy and complicated. Developers used to say the dreaded phrase: “But it works on my machine!” 😅

Here’s why:

A developer writes code on their laptop with certain versions of libraries installed.

When the same code is moved to a testing server or production machine, suddenly it breaks. Why? Because the environment there is slightly different – maybe the OS version doesn’t match, or some libraries are missing.

Let me simplify this for you.

Suppose I have developed an application on macOS. Later, when I try to test it on Windows, Ubuntu, or any other operating system, it may not work properly or might not even run at all. This is because different operating systems ship different system libraries, dependency versions, and configuration files.
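To make “the environment is slightly different” concrete, here is a minimal Python sketch of a check you could run on each machine: it compares the library versions the developer had on their laptop against what is actually installed where the code now runs. The package names and version numbers in `EXPECTED` are hypothetical placeholders. With containers, the image carries these exact versions along with the app, so this kind of drift largely disappears.

```python
from importlib import metadata

# Hypothetical pins: the versions the developer had on their own laptop.
EXPECTED = {
    "requests": "2.31.0",
    "numpy": "1.26.4",
}


def find_drift(expected: dict[str, str]) -> list[str]:
    """Return one message per package that is missing or has a different version."""
    problems = []
    for name, wanted in expected.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: missing (expected {wanted})")
            continue
        if found != wanted:
            problems.append(f"{name}: found {found}, expected {wanted}")
    return problems


if __name__ == "__main__":
    drift = find_drift(EXPECTED)
    if drift:
        print("This machine differs from the developer's machine:")
        for line in drift:
            print("  -", line)
    else:
        print("Environment matches.")
```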

To fix this, teams started using virtual machines (VMs), which let you create a separate environment for each application. That worked better, but there was a catch: each VM needed its own full operating system, which made VMs heavy, slow to start, and resource-hungry. Using VMs was like carrying around an entire kitchen just to serve a single meal to a single guest.

Now, you might be thinking – “Wait, isn’t this the same as virtualization?” 🤔

That’s a common confusion, and it’s worth clearing up. While both virtualization and containerization aim to run applications in isolated environments, they do it in very different ways. Virtualization uses Virtual Machines (VMs), where each VM runs a full operating system on top of a hypervisor. This makes them heavy, slower to start, and resource-hungry. Containerization, on the other hand, doesn’t need a separate OS for every application. Instead, containers share the host operating system’s kernel, making them lightweight, faster to start, and much more efficient. Think of VMs as entire houses built on one big plot of land, each with its own foundation and utilities. Containers are like apartments in a high-rise building—sharing the same structure but still giving each resident their own private space.

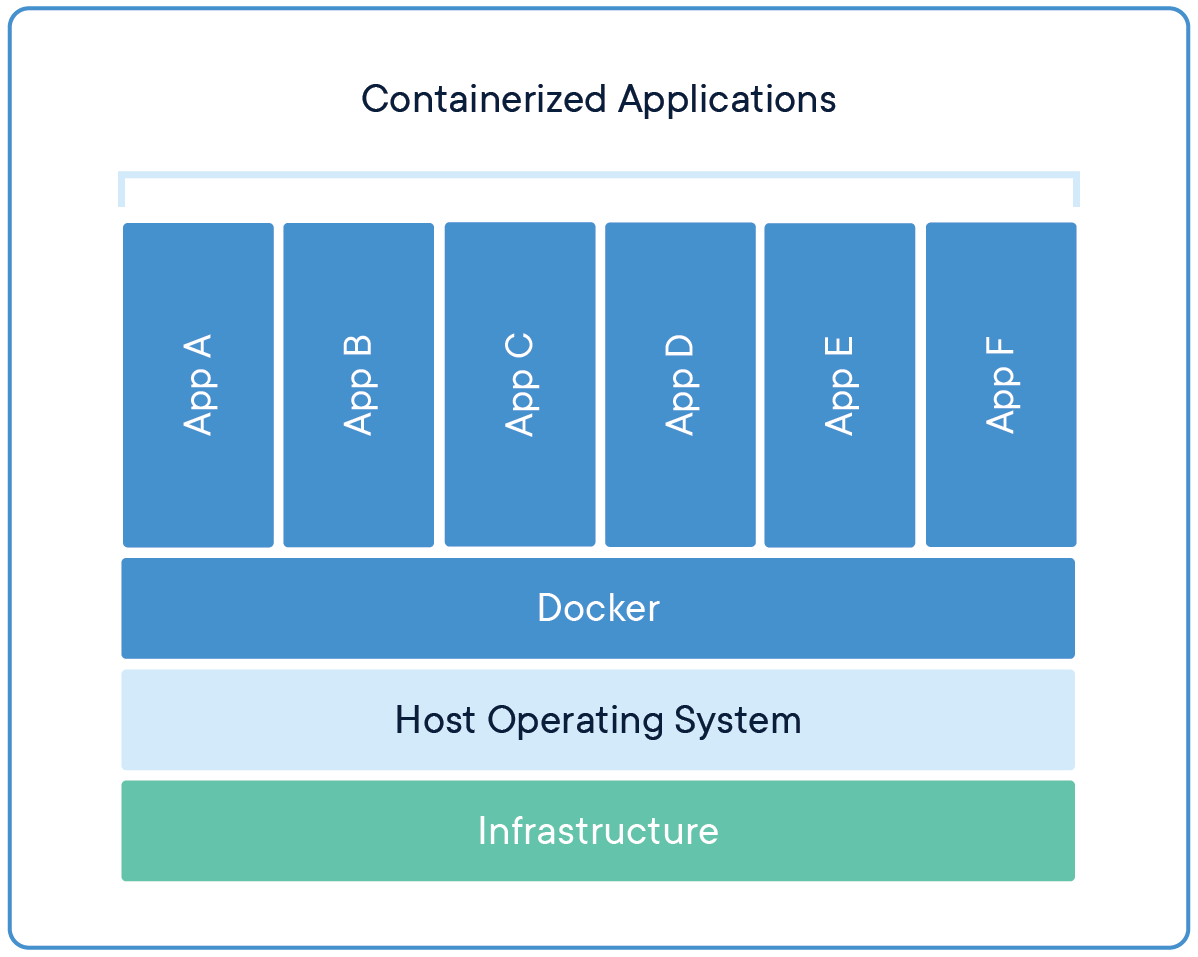

The figure above illustrates this difference between virtual machines and containers.

🔹 So Here’s How Containers Solved These Problems

🚀 When Containers Entered the Scene

Containers changed the game. They fixed the everyday struggles of bare-metal deployments and bulky Virtual Machines. Here’s how they made life easier:

🟢 Consistency Everywhere

Containers bundle your app with everything it needs. So whether you run it on your laptop, in testing, or in the cloud—it behaves the same.

👉 No more “but it worked on my machine!” excuses.

⚡ Lightweight by Design

Unlike VMs, which carry a full operating system inside, containers share the host OS kernel. This makes them smaller, faster, and much less resource-hungry.

🚀 Instant Start

A VM can take minutes to boot, while a container is typically ready in seconds. That makes containers a great fit for modern DevOps pipelines, where speed is everything.

📈 Effortless Scaling

More users? Just spin up more containers. Fewer users? Scale them down. With an orchestrator such as Kubernetes, you can manage thousands of containers without breaking a sweat.

🔒 Safe and Isolated

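Containers may share the same host, but each runs in its own bubble, with its own filesystem, processes, and network stack. That means apps don’t clash, no matter how different their dependencies are. The isolation is strong but not total: because all containers share the host kernel, a VM still provides a harder security boundary.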
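Under the hood on Linux, that bubble is built mainly from kernel namespaces (which give each container its own view of processes, mounts, network, and so on) and cgroups (which limit resources such as CPU and memory). As a small illustration, this Python sketch lists the namespaces of the current process by reading `/proc/self/ns`. It assumes Linux with `/proc` mounted; anywhere else it simply returns an empty result. Run it on the host and then inside a container, and the IDs for entries such as `pid`, `mnt`, and `net` should differ, which is the isolation at work.

```python
import os

NS_DIR = "/proc/self/ns"


def namespace_ids(ns_dir: str = NS_DIR) -> dict[str, str]:
    """Map each namespace type (pid, net, mnt, ...) to its kernel identifier."""
    ids: dict[str, str] = {}
    if not os.path.isdir(ns_dir):
        return ids  # not Linux, or /proc is not available
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like 'net:[4026531840]'.
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some entries can be unreadable without extra privileges
    return ids


if __name__ == "__main__":
    for name, ident in namespace_ids().items():
        print(f"{name:18} {ident}")
```

Two processes inside the same container share these IDs, while processes in different containers do not, which is why their apps cannot see or interfere with each other's processes by default.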

🔹 Some Tools Used for Containerization

🐳 Docker

🫙 Podman

📦 containerd

🔧 CRI-O

🐧 LXC (Linux Containers)

🚀 rkt (Rocket – deprecated but notable)

Among all the tools that made containerization possible, one name truly transformed the industry: Docker 🐳.

In my next blog, “Docker: The Leading Containerization Platform,” we’ll explore what Docker is, how it works under the hood, and why it became the go-to choice for developers and enterprises worldwide. 🚀

If you’ve ever wondered “Why Docker and not the others?” or “How exactly does it make developers’ lives easier?”—you won’t want to miss this one. 🔥

*Stay tuned!*

Written by

Omkar Hase

Hi, I’m Omkar, a passionate DevOps enthusiast on a journey to master modern cloud, CI/CD, and automation tools. I started exploring Linux and version control with Git, and quickly got fascinated by Docker, Kubernetes, AWS, and CI/CD pipelines. Building projects that combine these tools gives me a real sense of problem-solving and learning in a hands-on way. When I’m not coding or deploying pipelines, you’ll find me trekking through nature, hiking new trails. I love combining rhythm and teamwork on the Dhol and Tasha, just like I enjoy orchestrating complex DevOps workflows—both need practice, precision, and passion. Through this blog, I share my experiences, tutorials, and insights so others can learn DevOps step by step, while I continue documenting my own growth along the way.